Overview


For k8s, it is best to prepare 3 machines:

- One master node

- Two worker nodes

The three host names and ip addresses in my environment are as follows:

Note: do not use localhost.localdomain as a host name.

- master: 192.168.59.142

- node1: 192.168.59.143

- node2: 192.168.59.144


These hosts do not need Internet access. My three hosts are offline. I used a separate Internet-connected host to download all the packages to be installed, then uploaded them to the three servers. See the following blog post for the method:

[yum] linux offline install rpm package and its dependencies and uninstall package and its dependencies

Virtual machine environment configuration [same on all 3 hosts]

Note: perform the same steps on all three hosts [master and worker nodes].

Configure SELinux

SELinux must be disabled.

Set SELINUX=disabled in /etc/selinux/config, then reboot for the change to take effect.

[root@master ~]# cat /etc/selinux/config | grep SELINUX=disa

SELINUX=disabled

[root@master ~]#

[root@master ~]# getenforce

Disabled

[root@master ~]#
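You can also make this change with sed instead of editing the file by hand. Below is a minimal sketch. disable_selinux is a hypothetical helper name. The config path is a parameter (defaulting to /etc/selinux/config), so you can try it on a copy first.

```shell
#!/usr/bin/env bash
# disable_selinux (hypothetical helper): set SELINUX=disabled in an SELinux
# config file. The path defaults to /etc/selinux/config.
disable_selinux() {
    local conf="${1:-/etc/selinux/config}"
    # Rewrite the SELINUX= line whatever its current mode is (enforcing or
    # permissive); the SELINUXTYPE= line does not match this pattern.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage on a real host (as root):
#   disable_selinux
#   setenforce 0   # permissive right away; "disabled" applies after the reboot
```

If SELinux is already disabled, setenforce 0 only prints an error, which is harmless here.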

Configure firewall

- If you have no special firewall requirements, simply stop it: systemctl stop firewalld.service (also run systemctl disable firewalld.service so it stays off after a reboot)

- If the firewall cannot be turned off, set its default zone to trusted: firewall-cmd --set-default-zone=trusted

In all the examples below, I keep the firewall running and set its default zone to trusted.

[root@master ~]# systemctl is-active firewalld

active

[root@master ~]# firewall-cmd --set-default-zone=trusted

success

[root@master ~]#

Configure host name resolution

Add the three IP addresses and host names to /etc/hosts, then copy the file to the other two hosts with scp.

[root@master ~]# tail -n 4 /etc/hosts

192.168.59.142 master

192.168.59.143 node1

192.168.59.144 node2

[root@master ~]#

[root@master ~]# scp /etc/hosts node1:/etc/hosts

The authenticity of host 'node1 (192.168.59.143)' can't be established.

ECDSA key fingerprint is SHA256:+JrT4G9aMhaod/a9gBjUOzX5aONqQ7a4OX0Oj3Z978c.

ECDSA key fingerprint is MD5:7f:4c:cc:5c:10:d2:54:d8:3c:dd:da:39:48:30:12:59.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1,192.168.59.143' (ECDSA) to the list of known hosts.

root@node1's password:

Permission denied, please try again.

root@node1's password:

hosts 100% 224 145.7KB/s 00:00

[root@master ~]# scp /etc/hosts node2:/etc/hosts

The authenticity of host 'node2 (192.168.59.144)' can't be established.

ECDSA key fingerprint is SHA256:+JrT4G9aMhaod/a9gBjUOzX5aONqQ7a4OX0Oj3Z978c.

ECDSA key fingerprint is MD5:7f:4c:cc:5c:10:d2:54:d8:3c:dd:da:39:48:30:12:59.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,192.168.59.144' (ECDSA) to the list of known hosts.

root@node2's password:

hosts 100% 224 169.6KB/s 00:00

[root@master ~]#
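You can also script adding the entries and copying the file. Below is a rough sketch that assumes the three host names and addresses used in this article. add_hosts_entries and push_hosts are hypothetical helper names. The grep check makes the helper idempotent, so running it twice does not duplicate lines.

```shell
#!/usr/bin/env bash
# Host entries from this article; adjust them to your own environment.
HOSTS_ENTRIES='192.168.59.142 master
192.168.59.143 node1
192.168.59.144 node2'

# add_hosts_entries (hypothetical): append each entry to a hosts file unless
# an identical line is already present, so re-running it is harmless.
add_hosts_entries() {
    local file="${1:-/etc/hosts}"
    printf '%s\n' "$HOSTS_ENTRIES" | while IFS= read -r entry; do
        grep -qxF "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
    done
}

# push_hosts (hypothetical): copy /etc/hosts to each worker node with scp;
# it prompts for each node's root password, as in the transcript above.
push_hosts() {
    local node
    for node in node1 node2; do
        scp /etc/hosts "${node}:/etc/hosts"
    done
}

# Usage on the master (as root): add_hosts_entries && push_hosts
```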

Disable swap

- Command: swapoff -a; sed -i '/swap/d' /etc/fstab

The first command turns swap off immediately, but only until the next reboot. The second removes the swap entry from /etc/fstab, so swap stays off after a reboot.

[root@master ~]# swapoff -a ; sed -i '/swap/d' /etc/fstab

[root@master ~]#

You must modify the configuration file. Otherwise swap is re-enabled after a reboot and k8s will not work, because by default the kubelet refuses to start while swap is on.
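Note that sed -i '/swap/d' deletes every line that merely contains the word swap, including comments. A more conservative alternative is sketched below. It assumes a standard whitespace-separated fstab and comments out only active entries whose filesystem type (third field) is swap. disable_swap_in_fstab is a hypothetical name.

```shell
#!/usr/bin/env bash
# disable_swap_in_fstab (hypothetical): comment out active swap entries in an
# fstab file instead of deleting every line that mentions "swap".
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    local tmp
    tmp=$(mktemp)
    # Prefix "#" to uncommented lines whose 3rd field (fs type) is "swap";
    # print every other line unchanged.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
    rm -f "$tmp"
}

# Usage (as root):
#   swapoff -a && disable_swap_in_fstab
#   free -m    # the Swap row should now show 0 total
```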

Configure yum repositories

- First go to the /etc/yum.repos.d/ directory, create a new folder, and move all the existing repo files into it [deleting them also works]

- Then download the online repo files (CentOS base, docker-ce, EPEL and Kubernetes) into that directory: wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

[The repo files at ftp://ftp.rhce.cc/k8s/ point to the Alibaba Cloud mirrors]

[root@master ~]#

[root@master ~]# cd /etc/yum.repos.d/

[root@master yum.repos.d]# mkdir bak

[root@master yum.repos.d]# mv * bak

[root@master yum.repos.d]# wget ftp://ftp.rhce.cc/k8s/* -P /etc/yum.repos.d/

--2021-06-16 16:27:53-- ftp://ftp.rhce.cc/k8s/*

=> '/etc/yum.repos.d/.listing'

Resolving ftp.rhce.cc (ftp.rhce.cc)... 101.37.152.41

Connecting to ftp.rhce.cc (ftp.rhce.cc)|101.37.152.41|:21... connected.

Logging in as anonymous ... Logged in!

==> SYST ... done. ==> PWD ... done.

==> TYPE I ... done. ==> CWD (1) /k8s ... done.

==> PASV ... done. ==> LIST ... done.

[ <=> ] 398 --.-K/s in 0s

2021-06-16 16:27:55 (45.7 MB/s) - '/etc/yum.repos.d/.listing' saved [398]

Removed '/etc/yum.repos.d/.listing'.

--2021-06-16 16:27:55-- ftp://ftp.rhce.cc/k8s/CentOS-Base.repo

=> '/etc/yum.repos.d/CentOS-Base.repo'

==> CWD not required.

==> PASV ... done. ==> RETR CentOS-Base.repo ... done.

Length: 2206 (2.2K)

100%[=====================================================================>] 2,206 --.-K/s in 0s

2021-06-16 16:27:56 (162 MB/s) - '/etc/yum.repos.d/CentOS-Base.repo' saved [2206]

--2021-06-16 16:27:56-- ftp://ftp.rhce.cc/k8s/docker-ce.repo

=> '/etc/yum.repos.d/docker-ce.repo'

==> CWD not required.

==> PASV ... done. ==> RETR docker-ce.repo ... done.

Length: 2640 (2.6K)

100%[=====================================================================>] 2,640 --.-K/s in 0s

2021-06-16 16:27:56 (127 MB/s) - '/etc/yum.repos.d/docker-ce.repo' saved [2640]

--2021-06-16 16:27:56-- ftp://ftp.rhce.cc/k8s/epel.repo

=> '/etc/yum.repos.d/epel.repo'

==> CWD not required.

==> PASV ... done. ==> RETR epel.repo ... done.

Length: 923

100%[=====================================================================>] 923 --.-K/s in 0.006s

2021-06-16 16:27:56 (163 KB/s) - '/etc/yum.repos.d/epel.repo' saved [923]

--2021-06-16 16:27:56-- ftp://ftp.rhce.cc/k8s/k8s.repo

=> '/etc/yum.repos.d/k8s.repo'

==> CWD not required.

==> PASV ... done. ==> RETR k8s.repo ... done.

Length: 276

100%[=====================================================================>] 276 --.-K/s in 0s

2021-06-16 16:27:57 (24.3 MB/s) - '/etc/yum.repos.d/k8s.repo' saved [276]

[root@master yum.repos.d]#
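Because bak itself matches *, mv * bak also tries to move the bak directory into itself, which mv refuses to do. The sketch below moves only the *.repo files. backup_repo_files is a hypothetical helper, and the directory is a parameter so it can be tried elsewhere first.

```shell
#!/usr/bin/env bash
# backup_repo_files (hypothetical): move existing *.repo files into a bak/
# subdirectory, leaving bak/ itself alone.
backup_repo_files() {
    local dir="${1:-/etc/yum.repos.d}"
    mkdir -p "$dir/bak"
    # -maxdepth 1 keeps find out of bak/, so nothing is moved twice.
    find "$dir" -maxdepth 1 -type f -name '*.repo' -exec mv {} "$dir/bak/" \;
}

# Usage (as root):
#   backup_repo_files
#   wget 'ftp://ftp.rhce.cc/k8s/*' -P /etc/yum.repos.d/   # quoted so the shell leaves * to wget
#   yum clean all && yum makecache                      # rebuild metadata for the new repos
```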

Install docker and start the service

k8s needs a container runtime; here we use docker.

- yum -y install docker-ce: install docker [9 packages in total]

- systemctl enable docker --now: enable docker at boot and start it now

- docker info: view details

[root@master yum.repos.d]# yum -y install docker-ce

Loaded plugins: fastestmirror, langpacks

Determining fastest mirrors

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

epel | 4.7 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes/signature | 844 B 00:00:00

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Importing GPG key 0x307EA071:

Userid : "Rapture Automatic Signing Key (cloud-rapture-signing-key-2021-03-01-08_01_09.pub)"

Fingerprint: 7f92 e05b 3109 3bef 5a3c 2d38 feea 9169 307e a071

From : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

kubernetes/signature | 1.4 kB 00:00:00 !!!

updates | 2.9 kB 00:00:00

(1/10): epel/x86_64/group_gz | 96 kB 00:00:00

(2/10): docker-ce-stable/x86_64/updateinfo | 55 B 00:00:00

(3/10): docker-ce-stable/x86_64/primary_db | 62 kB 00:00:00

(4/10): base/7/x86_64/group_gz | 153 kB 00:00:00

(5/10): epel/x86_64/updateinfo | 1.0 MB 00:00:00

(6/10): kubernetes/primary | 92 kB 00:00:00

(7/10): extras/7/x86_64/primary_db | 242 kB 00:00:00

(8/10): base/7/x86_64/primary_db | 6.1 MB 00:00:01

(9/10): epel/x86_64/primary_db | 6.9 MB 00:00:02

(10/10): updates/7/x86_64/primary_db | 8.8 MB 00:00:02

kubernetes 678/678

Resolving Dependencies

--> Running transaction check

---> Package docker-ce.x86_64 3:20.10.7-3.el7 will be installed

--> Processing Dependency: container-selinux >= 2:2.74 for package: 3:docker-ce-20.10.7-3.el7.x86_64

--> Processing Dependency: containerd.io >= 1.4.1 for package: 3:docker-ce-20.10.7-3.el7.x86_64

--> Processing Dependency: docker-ce-cli for package: 3:docker-ce-20.10.7-3.el7.x86_64

--> Processing Dependency: docker-ce-rootless-extras for package: 3:docker-ce-20.10.7-3.el7.x86_64

--> Running transaction check

---> Package container-selinux.noarch 2:2.119.2-1.911c772.el7_8 will be installed

---> Package containerd.io.x86_64 0:1.4.6-3.1.el7 will be installed

---> Package docker-ce-cli.x86_64 1:20.10.7-3.el7 will be installed

--> Processing Dependency: docker-scan-plugin(x86-64) for package: 1:docker-ce-cli-20.10.7-3.el7.x86_64

---> Package docker-ce-rootless-extras.x86_64 0:20.10.7-3.el7 will be installed

--> Processing Dependency: fuse-overlayfs >= 0.7 for package: docker-ce-rootless-extras-20.10.7-3.el7.x86_64

--> Processing Dependency: slirp4netns >= 0.4 for package: docker-ce-rootless-extras-20.10.7-3.el7.x86_64

--> Running transaction check

---> Package docker-scan-plugin.x86_64 0:0.8.0-3.el7 will be installed

---> Package fuse-overlayfs.x86_64 0:0.7.2-6.el7_8 will be installed

--> Processing Dependency: libfuse3.so.3(FUSE_3.2)(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64

--> Processing Dependency: libfuse3.so.3(FUSE_3.0)(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64

--> Processing Dependency: libfuse3.so.3()(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64

---> Package slirp4netns.x86_64 0:0.4.3-4.el7_8 will be installed

--> Running transaction check

---> Package fuse3-libs.x86_64 0:3.6.1-4.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

======================================================================================================

Package Arch Version Repository Size

======================================================================================================

Installing:

docker-ce x86_64 3:20.10.7-3.el7 docker-ce-stable 27 M

Installing for dependencies:

container-selinux noarch 2:2.119.2-1.911c772.el7_8 extras 40 k

containerd.io x86_64 1.4.6-3.1.el7 docker-ce-stable 34 M

docker-ce-cli x86_64 1:20.10.7-3.el7 docker-ce-stable 33 M

docker-ce-rootless-extras x86_64 20.10.7-3.el7 docker-ce-stable 9.2 M

docker-scan-plugin x86_64 0.8.0-3.el7 docker-ce-stable 4.2 M

fuse-overlayfs x86_64 0.7.2-6.el7_8 extras 54 k

fuse3-libs x86_64 3.6.1-4.el7 extras 82 k

slirp4netns x86_64 0.4.3-4.el7_8 extras 81 k

Transaction Summary

======================================================================================================

Install 1 Package (+8 Dependent packages)

Total download size: 107 M

Installed size: 438 M

Downloading packages:

warning: /var/cache/yum/x86_64/7/extras/packages/container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm: Header V3 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Public key for container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm is not installed

(1/9): container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm | 40 kB 00:00:00

warning: /var/cache/yum/x86_64/7/docker-ce-stable/packages/docker-ce-20.10.7-3.el7.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID 621e9f35: NOKEY

Public key for docker-ce-20.10.7-3.el7.x86_64.rpm is not installed

(2/9): docker-ce-20.10.7-3.el7.x86_64.rpm | 27 MB 00:00:06

(3/9): containerd.io-1.4.6-3.1.el7.x86_64.rpm | 34 MB 00:00:08

(4/9): docker-ce-rootless-extras-20.10.7-3.el7.x86_64.rpm | 9.2 MB 00:00:02

(5/9): fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm | 54 kB 00:00:00

(6/9): slirp4netns-0.4.3-4.el7_8.x86_64.rpm | 81 kB 00:00:00

(7/9): fuse3-libs-3.6.1-4.el7.x86_64.rpm | 82 kB 00:00:00

(8/9): docker-scan-plugin-0.8.0-3.el7.x86_64.rpm | 4.2 MB 00:00:01

(9/9): docker-ce-cli-20.10.7-3.el7.x86_64.rpm | 33 MB 00:00:08

------------------------------------------------------------------------------------------------------

Total 7.1 MB/s | 107 MB 00:00:15

Retrieving key from https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

Importing GPG key 0x621E9F35:

Userid : "Docker Release (CE rpm) <docker@docker.com>"

Fingerprint: 060a 61c5 1b55 8a7f 742b 77aa c52f eb6b 621e 9f35

From : https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

Retrieving key from http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Importing GPG key 0xF4A80EB5:

Userid : "CentOS-7 Key (CentOS 7 Official Signing Key) <security@centos.org>"

Fingerprint: 6341 ab27 53d7 8a78 a7c2 7bb1 24c6 a8a7 f4a8 0eb5

From : http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 2:container-selinux-2.119.2-1.911c772.el7_8.noarch 1/9

Installing : containerd.io-1.4.6-3.1.el7.x86_64 2/9

Installing : 1:docker-ce-cli-20.10.7-3.el7.x86_64 3/9

Installing : docker-scan-plugin-0.8.0-3.el7.x86_64 4/9

Installing : slirp4netns-0.4.3-4.el7_8.x86_64 5/9

Installing : fuse3-libs-3.6.1-4.el7.x86_64 6/9

Installing : fuse-overlayfs-0.7.2-6.el7_8.x86_64 7/9

Installing : docker-ce-rootless-extras-20.10.7-3.el7.x86_64 8/9

Installing : 3:docker-ce-20.10.7-3.el7.x86_64 9/9

Verifying : containerd.io-1.4.6-3.1.el7.x86_64 1/9

Verifying : fuse3-libs-3.6.1-4.el7.x86_64 2/9

Verifying : docker-scan-plugin-0.8.0-3.el7.x86_64 3/9

Verifying : slirp4netns-0.4.3-4.el7_8.x86_64 4/9

Verifying : 2:container-selinux-2.119.2-1.911c772.el7_8.noarch 5/9

Verifying : 3:docker-ce-20.10.7-3.el7.x86_64 6/9

Verifying : 1:docker-ce-cli-20.10.7-3.el7.x86_64 7/9

Verifying : docker-ce-rootless-extras-20.10.7-3.el7.x86_64 8/9

Verifying : fuse-overlayfs-0.7.2-6.el7_8.x86_64 9/9

Installed:

docker-ce.x86_64 3:20.10.7-3.el7

Dependency Installed:

container-selinux.noarch 2:2.119.2-1.911c772.el7_8

containerd.io.x86_64 0:1.4.6-3.1.el7

docker-ce-cli.x86_64 1:20.10.7-3.el7

docker-ce-rootless-extras.x86_64 0:20.10.7-3.el7

docker-scan-plugin.x86_64 0:0.8.0-3.el7

fuse-overlayfs.x86_64 0:0.7.2-6.el7_8

fuse3-libs.x86_64 0:3.6.1-4.el7

slirp4netns.x86_64 0:0.4.3-4.el7_8

Complete!

[root@master yum.repos.d]#

[root@master ~]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.
[root@master ~]#

[root@master ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Build with BuildKit (Docker Inc., v0.5.1-docker)

scan: Docker Scan (Docker Inc., v0.8.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 4

Server Version: 20.10.7

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 1

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: io.containerd.runtime.v1.linux runc io.containerd.runc.v2

Default Runtime: runc

Init Binary: docker-init

containerd version: d71fcd7d8303cbf684402823e425e9dd2e99285d

runc version: b9ee9c6314599f1b4a7f497e1f1f856fe433d3b7

init version: de40ad0

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-957.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.701GiB

Name: master

ID: 5FW3:5O7N:PZTJ:YUAT:GFXD:QEGA:GOA6:C2IE:I2FJ:FUQE:D2QT:QI6A

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

[root@master ~]#

Configure a registry mirror (accelerator)

- This configuration only makes image pulls faster

- Copy and run the following command:

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]

}

EOF

- Then restart the docker service

[root@master ~]# systemctl restart docker

[root@master ~]#
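A small function can write the same file and make the mirror address a parameter. Below is a sketch. write_daemon_json is a hypothetical name. The default address is the one used in this article. Alibaba Cloud accelerator addresses are usually issued per account, so you may want to substitute the one from your own console. Like the heredoc above, the function overwrites any existing daemon.json.

```shell
#!/usr/bin/env bash
# write_daemon_json (hypothetical): write a docker daemon.json that only sets
# a registry mirror. Both the path and the mirror URL can be overridden.
write_daemon_json() {
    local file="${1:-/etc/docker/daemon.json}"
    local mirror="${2:-https://frz7i079.mirror.aliyuncs.com}"
    mkdir -p "$(dirname "$file")"
    cat > "$file" <<EOF
{
  "registry-mirrors": ["${mirror}"]
}
EOF
}

# Usage (as root):
#   write_daemon_json && systemctl restart docker
#   docker info | grep -A1 'Registry Mirrors'   # confirm the mirror is active
```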

Set kernel parameters

A bridge works at the data link layer. Unless bridge netfilter (bridge-nf) is enabled, traffic crossing a Linux bridge is forwarded directly by the bridge without passing through iptables, so iptables forwarding rules have no effect on it.

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

- After execution, it is as follows:

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> EOF

[root@master ~]#

[root@master ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@master ~]#
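Writing the file does not apply it. The settings take effect only after sysctl --system (or a reboot). The two bridge keys exist only once the br_netfilter kernel module is loaded. Docker may already have loaded it, but loading it explicitly at boot does not depend on that. The sketch below also persists the module through /etc/modules-load.d. write_k8s_kernel_conf is a hypothetical name, and both directories are parameters so it can be tried elsewhere first.

```shell
#!/usr/bin/env bash
# write_k8s_kernel_conf (hypothetical): write the k8s sysctl settings and ask
# systemd to load br_netfilter at every boot.
write_k8s_kernel_conf() {
    local sysctl_dir="${1:-/etc/sysctl.d}"
    local modules_dir="${2:-/etc/modules-load.d}"
    mkdir -p "$sysctl_dir" "$modules_dir"
    # The bridge-nf-call keys only exist while br_netfilter is loaded.
    echo br_netfilter > "$modules_dir/k8s.conf"
    cat > "$sysctl_dir/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage (as root):
#   write_k8s_kernel_conf
#   modprobe br_netfilter   # load the module now, without a reboot
#   sysctl --system         # apply every file under /etc/sysctl.d
```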

Install k8s

View available versions

Command: yum list --showduplicates kubeadm --disableexcludes=kubernetes

[root@ccx k8s]# yum list --showduplicates kubeadm --disableexcludes=kubernetes

Loaded plugins: fastestmirror, langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Loading mirror speeds from cached hostfile

Available Packages

kubeadm.x86_64 1.6.0-0 kubernetes

kubeadm.x86_64 1.6.1-0 kubernetes

kubeadm.x86_64 1.6.2-0 kubernetes

kubeadm.x86_64 1.6.3-0 kubernetes

kubeadm.x86_64 1.6.4-0 kubernetes

kubeadm.x86_64 1.6.5-0 kubernetes

kubeadm.x86_64 1.6.6-0 kubernetes

kubeadm.x86_64 1.6.7-0 kubernetes

kubeadm.x86_64 1.6.8-0 kubernetes

kubeadm.x86_64 1.6.9-0 kubernetes

kubeadm.x86_64 1.6.10-0 kubernetes

kubeadm.x86_64 1.6.11-0 kubernetes

kubeadm.x86_64 1.6.12-0 kubernetes

kubeadm.x86_64 1.6.13-0 kubernetes

kubeadm.x86_64 1.7.0-0 kubernetes

kubeadm.x86_64 1.7.1-0 kubernetes

kubeadm.x86_64 1.7.2-0 kubernetes

kubeadm.x86_64 1.7.3-1 kubernetes

kubeadm.x86_64 1.7.4-0 kubernetes

kubeadm.x86_64 1.7.5-0 kubernetes

kubeadm.x86_64 1.7.6-1 kubernetes

kubeadm.x86_64 1.7.7-1 kubernetes

kubeadm.x86_64 1.7.8-1 kubernetes

kubeadm.x86_64 1.7.9-0 kubernetes

kubeadm.x86_64 1.7.10-0 kubernetes

kubeadm.x86_64 1.7.11-0 kubernetes

kubeadm.x86_64 1.7.14-0 kubernetes

kubeadm.x86_64 1.7.15-0 kubernetes

kubeadm.x86_64 1.7.16-0 kubernetes

kubeadm.x86_64 1.8.0-0 kubernetes

kubeadm.x86_64 1.8.0-1 kubernetes

kubeadm.x86_64 1.8.1-0 kubernetes

kubeadm.x86_64 1.8.2-0 kubernetes

kubeadm.x86_64 1.8.3-0 kubernetes

kubeadm.x86_64 1.8.4-0 kubernetes

kubeadm.x86_64 1.8.5-0 kubernetes

kubeadm.x86_64 1.8.6-0 kubernetes

kubeadm.x86_64 1.8.7-0 kubernetes

kubeadm.x86_64 1.8.8-0 kubernetes

kubeadm.x86_64 1.8.9-0 kubernetes

kubeadm.x86_64 1.8.10-0 kubernetes

kubeadm.x86_64 1.8.11-0 kubernetes

kubeadm.x86_64 1.8.12-0 kubernetes

kubeadm.x86_64 1.8.13-0 kubernetes

kubeadm.x86_64 1.8.14-0 kubernetes

kubeadm.x86_64 1.8.15-0 kubernetes

kubeadm.x86_64 1.9.0-0 kubernetes

kubeadm.x86_64 1.9.1-0 kubernetes

kubeadm.x86_64 1.9.2-0 kubernetes

kubeadm.x86_64 1.9.3-0 kubernetes

kubeadm.x86_64 1.9.4-0 kubernetes

kubeadm.x86_64 1.9.5-0 kubernetes

kubeadm.x86_64 1.9.6-0 kubernetes

kubeadm.x86_64 1.9.7-0 kubernetes

kubeadm.x86_64 1.9.8-0 kubernetes

kubeadm.x86_64 1.9.9-0 kubernetes

kubeadm.x86_64 1.9.10-0 kubernetes

kubeadm.x86_64 1.9.11-0 kubernetes

kubeadm.x86_64 1.10.0-0 kubernetes

kubeadm.x86_64 1.10.1-0 kubernetes

kubeadm.x86_64 1.10.2-0 kubernetes

kubeadm.x86_64 1.10.3-0 kubernetes

kubeadm.x86_64 1.10.4-0 kubernetes

kubeadm.x86_64 1.10.5-0 kubernetes

kubeadm.x86_64 1.10.6-0 kubernetes

kubeadm.x86_64 1.10.7-0 kubernetes

kubeadm.x86_64 1.10.8-0 kubernetes

kubeadm.x86_64 1.10.9-0 kubernetes

kubeadm.x86_64 1.10.10-0 kubernetes

kubeadm.x86_64 1.10.11-0 kubernetes

kubeadm.x86_64 1.10.12-0 kubernetes

kubeadm.x86_64 1.10.13-0 kubernetes

kubeadm.x86_64 1.11.0-0 kubernetes

kubeadm.x86_64 1.11.1-0 kubernetes

kubeadm.x86_64 1.11.2-0 kubernetes

kubeadm.x86_64 1.11.3-0 kubernetes

kubeadm.x86_64 1.11.4-0 kubernetes

kubeadm.x86_64 1.11.5-0 kubernetes

kubeadm.x86_64 1.11.6-0 kubernetes

kubeadm.x86_64 1.11.7-0 kubernetes

kubeadm.x86_64 1.11.8-0 kubernetes

kubeadm.x86_64 1.11.9-0 kubernetes

kubeadm.x86_64 1.11.10-0 kubernetes

kubeadm.x86_64 1.12.0-0 kubernetes

kubeadm.x86_64 1.12.1-0 kubernetes

kubeadm.x86_64 1.12.2-0 kubernetes

kubeadm.x86_64 1.12.3-0 kubernetes

kubeadm.x86_64 1.12.4-0 kubernetes

kubeadm.x86_64 1.12.5-0 kubernetes

kubeadm.x86_64 1.12.6-0 kubernetes

kubeadm.x86_64 1.12.7-0 kubernetes

kubeadm.x86_64 1.12.8-0 kubernetes

kubeadm.x86_64 1.12.9-0 kubernetes

kubeadm.x86_64 1.12.10-0 kubernetes

kubeadm.x86_64 1.13.0-0 kubernetes

kubeadm.x86_64 1.13.1-0 kubernetes

kubeadm.x86_64 1.13.2-0 kubernetes

kubeadm.x86_64 1.13.3-0 kubernetes

kubeadm.x86_64 1.13.4-0 kubernetes

kubeadm.x86_64 1.13.5-0 kubernetes

kubeadm.x86_64 1.13.6-0 kubernetes

kubeadm.x86_64 1.13.7-0 kubernetes

kubeadm.x86_64 1.13.8-0 kubernetes

kubeadm.x86_64 1.13.9-0 kubernetes

kubeadm.x86_64 1.13.10-0 kubernetes

kubeadm.x86_64 1.13.11-0 kubernetes

kubeadm.x86_64 1.13.12-0 kubernetes

kubeadm.x86_64 1.14.0-0 kubernetes

kubeadm.x86_64 1.14.1-0 kubernetes

kubeadm.x86_64 1.14.2-0 kubernetes

kubeadm.x86_64 1.14.3-0 kubernetes

kubeadm.x86_64 1.14.4-0 kubernetes

kubeadm.x86_64 1.14.5-0 kubernetes

kubeadm.x86_64 1.14.6-0 kubernetes

kubeadm.x86_64 1.14.7-0 kubernetes

kubeadm.x86_64 1.14.8-0 kubernetes

kubeadm.x86_64 1.14.9-0 kubernetes

kubeadm.x86_64 1.14.10-0 kubernetes

kubeadm.x86_64 1.15.0-0 kubernetes

kubeadm.x86_64 1.15.1-0 kubernetes

kubeadm.x86_64 1.15.2-0 kubernetes

kubeadm.x86_64 1.15.3-0 kubernetes

kubeadm.x86_64 1.15.4-0 kubernetes

kubeadm.x86_64 1.15.5-0 kubernetes

kubeadm.x86_64 1.15.6-0 kubernetes

kubeadm.x86_64 1.15.7-0 kubernetes

kubeadm.x86_64 1.15.8-0 kubernetes

kubeadm.x86_64 1.15.9-0 kubernetes

kubeadm.x86_64 1.15.10-0 kubernetes

kubeadm.x86_64 1.15.11-0 kubernetes

kubeadm.x86_64 1.15.12-0 kubernetes

kubeadm.x86_64 1.16.0-0 kubernetes

kubeadm.x86_64 1.16.1-0 kubernetes

kubeadm.x86_64 1.16.2-0 kubernetes

kubeadm.x86_64 1.16.3-0 kubernetes

kubeadm.x86_64 1.16.4-0 kubernetes

kubeadm.x86_64 1.16.5-0 kubernetes

kubeadm.x86_64 1.16.6-0 kubernetes

kubeadm.x86_64 1.16.7-0 kubernetes

kubeadm.x86_64 1.16.8-0 kubernetes

kubeadm.x86_64 1.16.9-0 kubernetes

kubeadm.x86_64 1.16.10-0 kubernetes

kubeadm.x86_64 1.16.11-0 kubernetes

kubeadm.x86_64 1.16.11-1 kubernetes

kubeadm.x86_64 1.16.12-0 kubernetes

kubeadm.x86_64 1.16.13-0 kubernetes

kubeadm.x86_64 1.16.14-0 kubernetes

kubeadm.x86_64 1.16.15-0 kubernetes

kubeadm.x86_64 1.17.0-0 kubernetes

kubeadm.x86_64 1.17.1-0 kubernetes

kubeadm.x86_64 1.17.2-0 kubernetes

kubeadm.x86_64 1.17.3-0 kubernetes

kubeadm.x86_64 1.17.4-0 kubernetes

kubeadm.x86_64 1.17.5-0 kubernetes

kubeadm.x86_64 1.17.6-0 kubernetes

kubeadm.x86_64 1.17.7-0 kubernetes

kubeadm.x86_64 1.17.7-1 kubernetes

kubeadm.x86_64 1.17.8-0 kubernetes

kubeadm.x86_64 1.17.9-0 kubernetes

kubeadm.x86_64 1.17.11-0 kubernetes

kubeadm.x86_64 1.17.12-0 kubernetes

kubeadm.x86_64 1.17.13-0 kubernetes

kubeadm.x86_64 1.17.14-0 kubernetes

kubeadm.x86_64 1.17.15-0 kubernetes

kubeadm.x86_64 1.17.16-0 kubernetes

kubeadm.x86_64 1.17.17-0 kubernetes

kubeadm.x86_64 1.18.0-0 kubernetes

kubeadm.x86_64 1.18.1-0 kubernetes

kubeadm.x86_64 1.18.2-0 kubernetes

kubeadm.x86_64 1.18.3-0 kubernetes

kubeadm.x86_64 1.18.4-0 kubernetes

kubeadm.x86_64 1.18.4-1 kubernetes

kubeadm.x86_64 1.18.5-0 kubernetes

kubeadm.x86_64 1.18.6-0 kubernetes

kubeadm.x86_64 1.18.8-0 kubernetes

kubeadm.x86_64 1.18.9-0 kubernetes

kubeadm.x86_64 1.18.10-0 kubernetes

kubeadm.x86_64 1.18.12-0 kubernetes

kubeadm.x86_64 1.18.13-0 kubernetes

kubeadm.x86_64 1.18.14-0 kubernetes

kubeadm.x86_64 1.18.15-0 kubernetes

kubeadm.x86_64 1.18.16-0 kubernetes

kubeadm.x86_64 1.18.17-0 kubernetes

kubeadm.x86_64 1.18.18-0 kubernetes

kubeadm.x86_64 1.18.19-0 kubernetes

kubeadm.x86_64 1.18.20-0 kubernetes

kubeadm.x86_64 1.19.0-0 kubernetes

kubeadm.x86_64 1.19.1-0 kubernetes

kubeadm.x86_64 1.19.2-0 kubernetes

kubeadm.x86_64 1.19.3-0 kubernetes

kubeadm.x86_64 1.19.4-0 kubernetes

kubeadm.x86_64 1.19.5-0 kubernetes

kubeadm.x86_64 1.19.6-0 kubernetes

kubeadm.x86_64 1.19.7-0 kubernetes

kubeadm.x86_64 1.19.8-0 kubernetes

kubeadm.x86_64 1.19.9-0 kubernetes

kubeadm.x86_64 1.19.10-0 kubernetes

kubeadm.x86_64 1.19.11-0 kubernetes

kubeadm.x86_64 1.19.12-0 kubernetes

kubeadm.x86_64 1.20.0-0 kubernetes

kubeadm.x86_64 1.20.1-0 kubernetes

kubeadm.x86_64 1.20.2-0 kubernetes

kubeadm.x86_64 1.20.4-0 kubernetes

kubeadm.x86_64 1.20.5-0 kubernetes

kubeadm.x86_64 1.20.6-0 kubernetes

kubeadm.x86_64 1.20.7-0 kubernetes

kubeadm.x86_64 1.20.8-0 kubernetes

kubeadm.x86_64 1.21.0-0 kubernetes

kubeadm.x86_64 1.21.1-0 kubernetes

kubeadm.x86_64 1.21.2-0 kubernetes

[root@ccx k8s]#
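Instead of scanning the whole list, you can pick out the newest patch release of one minor version with awk and sort -V. Below is a sketch. latest_kubeadm_version is a hypothetical helper that reads the yum list output on standard input.

```shell
#!/usr/bin/env bash
# latest_kubeadm_version (hypothetical): read "yum list --showduplicates
# kubeadm" output on stdin and print the newest version starting with $1.
latest_kubeadm_version() {
    local prefix="$1"   # e.g. "1.21."
    # Keep only kubeadm package lines whose version begins with the prefix,
    # then sort by version number and take the last (newest) one.
    awk -v p="$prefix" '$1 == "kubeadm.x86_64" && index($2, p) == 1 { print $2 }' |
        sort -V | tail -n 1
}

# Usage:
#   yum list --showduplicates kubeadm --disableexcludes=kubernetes |
#       latest_kubeadm_version 1.21.
```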

Install

- Version 1.21.0 is installed here

yum install -y kubelet-1.21.0-0 kubeadm-1.21.0-0 kubectl-1.21.0-0 --disableexcludes=kubernetes

- As I said earlier, my three machines have no Internet access, so I installed the k8s packages offline. The commands are as follows.

[root@master ~]# cd /k8s/

[root@master k8s]# ls

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet-1.21.0-0.x86_64.rpm

conntrack-tools-1.4.4-7.el7.x86_64.rpm

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl-1.21.0-0.x86_64.rpm

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm-1.21.0-0.x86_64.rpm

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm

[root@master k8s]# cp * node1:/k8s

cp: target 'node1:/k8s' is not a directory

[root@master k8s]#

[root@master k8s]# scp * node1:/k8s

root@node1's password:

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet 100% 20MB 22.4MB/s 00:00

conntrack-tools-1.4.4-7.el7.x86_64.rpm 100% 187KB 21.3MB/s 00:00

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl 100% 9774KB 22.3MB/s 00:00

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kuberne 100% 19MB 21.9MB/s 00:00

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm 100% 9285KB 21.9MB/s 00:00

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm 100% 18KB 1.4MB/s 00:00

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm 100% 18KB 6.3MB/s 00:00

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm 100% 23KB 8.6MB/s 00:00

socat-1.7.3.2-2.el7.x86_64.rpm 100% 290KB 23.1MB/s 00:00

[root@master k8s]# scp * node2:/k8s

root@node2's password:

13b4e820d82ad7143d786b9927adc414d3e270d3d26d844e93eff639f7142e50-kubelet 100% 20MB 20.0MB/s 00:01

conntrack-tools-1.4.4-7.el7.x86_64.rpm 100% 187KB 19.9MB/s 00:00

d625f039f4a82eca35f6a86169446afb886ed9e0dfb167b38b706b411c131084-kubectl 100% 9774KB 21.2MB/s 00:00

db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kuberne 100% 19MB 21.4MB/s 00:00

dc4816b13248589b85ee9f950593256d08a3e6d4e419239faf7a83fe686f641c-kubeadm 100% 9285KB 19.6MB/s 00:00

libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm 100% 18KB 5.9MB/s 00:00

libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm 100% 18KB 2.9MB/s 00:00

libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm 100% 23KB 8.3MB/s 00:00

socat-1.7.3.2-2.el7.x86_64.rpm 100% 290KB 21.1MB/s 00:00

[root@master k8s]# rpm -ivhU * --nodeps --force

Preparing... ################################# [100%]

Updating / installing...

1:socat-1.7.3.2-2.el7 ################################# [ 11%]

2:libnetfilter_queue-1.0.2-2.el7_2 ################################# [ 22%]

3:libnetfilter_cttimeout-1.0.0-7.el################################# [ 33%]

4:libnetfilter_cthelper-1.0.0-11.el################################# [ 44%]

5:conntrack-tools-1.4.4-7.el7 ################################# [ 56%]

6:kubernetes-cni-0.8.7-0 ################################# [ 67%]

7:kubelet-1.21.0-0 ################################# [ 78%]

8:kubectl-1.21.0-0 ################################# [ 89%]

9:kubeadm-1.21.0-0 ################################# [100%]

[root@master k8s]#
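The --nodeps --force options make rpm skip dependency checks and overwrite existing packages. A missing dependency would then surface only later, when kubelet fails. Below is a small sketch that keeps the three component versions in step and installs without those options. k8s_packages is a hypothetical helper. The copy loop assumes root ssh access to node1 and node2.

```shell
#!/usr/bin/env bash
# k8s_packages (hypothetical): print pinned kubelet/kubeadm/kubectl package
# names for one release, so the three components always match.
k8s_packages() {
    local v="$1"   # e.g. 1.21.0-0, as shown by yum list
    echo "kubelet-$v kubeadm-$v kubectl-$v"
}

# Online install, equivalent to the yum command above:
#   yum install -y $(k8s_packages 1.21.0-0) --disableexcludes=kubernetes
#
# Offline: copy the RPMs to each worker (creating /k8s first), then install
# them without --nodeps --force so rpm still reports any missing dependency:
#   for n in node1 node2; do ssh "$n" mkdir -p /k8s; scp /k8s/*.rpm "$n":/k8s/; done
#   rpm -Uvh /k8s/*.rpm
```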

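Start the kubelet service

Command: systemctl enable kubelet --now

This enables kubelet at boot and starts it immediately. Until kubeadm init (on the master) or kubeadm join (on the workers) generates its configuration, kubelet keeps restarting. An "activating" status at this point is therefore expected.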

[root@master k8s]# systemctl enable kubelet --now

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@master k8s]# systemctl is-active kubelet

activating

[root@master k8s]#

Create the cluster with kubeadm [run on the master only]

Initialize the control plane

Command description

- Command: kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16 [the 10.244.0.0/16 range is user-defined]

- kubeadm init: initialize the cluster

- --image-repository registry.aliyuncs.com/google_containers: pull the required images from registry.aliyuncs.com/google_containers

- --kubernetes-version=v1.21.0: the Kubernetes version to deploy

- --pod-network-cidr=10.244.0.0/16: the network segment used for pods
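You can also keep the same options in a kubeadm configuration file, which is easier to reuse. Below is a sketch that assumes kubeadm v1.21, which reads the kubeadm.k8s.io/v1beta2 API. write_kubeadm_config and the file name kubeadm-config.yaml are hypothetical. Running kubeadm config images list against the file shows exactly which image names kubeadm will try to pull before you run init.

```shell
#!/usr/bin/env bash
# write_kubeadm_config (hypothetical): write a ClusterConfiguration that
# mirrors the kubeadm init flags above (v1beta2 API, as read by kubeadm v1.21).
write_kubeadm_config() {
    local file="${1:-kubeadm-config.yaml}"
    cat > "$file" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# Usage on the master:
#   write_kubeadm_config
#   kubeadm config images list --config kubeadm-config.yaml   # image names needed
#   kubeadm config images pull --config kubeadm-config.yaml   # pull them before init
#   kubeadm init --config kubeadm-config.yaml
```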

List of images after running the command

- Before initialization, docker has no images

[root@ccx ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@ccx ~]#

- After the images are pulled, docker lists the following

All the required images are present except coredns

[root@ccx ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 months ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 5 months ago 683kB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 10 months ago 253MB

[root@ccx ~]#

[root@ccx ~]#

Error reported by the command, and how to handle it

- Running the command ends with the following error:

[root@ccx k8s]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0: output: Error response from daemon: pull access denied for registry.aliyuncs.com/google_containers/coredns/coredns, repository does not exist or may require 'docker login': denied: requested access to the resource is denied, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@ccx k8s]#

Cause: kubeadm fails when pulling registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0. The coredns image is actually in the Aliyun repository, but under a different name, so the pull fails. The workaround is to obtain the coredns image another way and import it.

If you do not want to track it down yourself, you can download the file I uploaded: https://download.csdn.net/download/cuichongxin/19965486

Import command: docker load -i coredns-1.21.tar

The whole process is as follows

[root@master kubeadm]# rz -E

rz waiting to receive.

Starting zmodem transfer. Press Ctrl+C to cancel.

100% 41594 KB 20797 KB/s 00:00:02 0 Errors

[root@master kubeadm]# ls

coredns-1.21.tar

[root@master kubeadm]# docker load -i coredns-1.21.tar

225df95e717c: Loading layer 336.4kB/336.4kB

69ae2fbf419f: Loading layer 42.24MB/42.24MB

Loaded image: registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master kubeadm]#

[root@master kubeadm]# docker images | grep dns

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 8 months ago 42.5MB

[root@master kubeadm]#

- All the images are now present:

[root@master k8s]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 months ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 months ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 months ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 5 months ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 8 months ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 10 months ago 253MB

[root@master k8s]#

- Note: coredns-1.21.tar needs to be copied to, and loaded on, all three nodes [master and node nodes]
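Copying and loading the tar on each host can be scripted. This is a minimal sketch assuming root SSH access from the master to the host names in /etc/hosts; distribute_images is a hypothetical name.

```shell
# Hypothetical helper: copy an image tarball to each host and load it there.
# ASSUMPTION: root SSH access to every host given on the command line.
distribute_images() {
    local tar=$1; shift
    local host
    for host in "$@"; do
        scp "$tar" "root@$host:~/" || return 1
        # "~" stays quoted here, so the remote shell expands it
        ssh "root@$host" "docker load -i ~/$(basename "$tar")" || return 1
    done
}
```

For example: distribute_images coredns-1.21.tar node1 node2, plus docker load -i coredns-1.21.tar on the master itself.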

Start kubeadm

Once all of the images above are ready, run kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.21.0 --pod-network-cidr=10.244.0.0/16. The end of the output looks like the following. The control plane is up, but kubectl cannot use it yet because the kubeconfig (authentication) file has not been set up:

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.59.142:6443 --token 29ivpt.l5x5zhqzca4n47kp \

--discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[root@master k8s]#

- Now copy and run the commands from the prompt:

[root@master k8s]# mkdir -p $HOME/.kube

[root@master k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master k8s]#

- After these three commands, you can view the current node information

Command: kubectl get nodes

[root@master k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 8m19s v1.21.0

[root@master k8s]#

View the command for joining the cluster

- Command: kubeadm token create --print-join-command

Purpose: prints the command that a node runs to join the cluster

[root@master k8s]# kubeadm token create --print-join-command

kubeadm join 192.168.59.142:6443 --token mguyry.aky5kho66cnjtlsl --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[root@master k8s]#

Join the cluster [operate on node]

Join the cluster [kubeadm]

- Command: the output of kubeadm token create --print-join-command on the master.

For example, I take the join command printed on my master (each run generates a new token, so yours will differ).

- I then run it on both worker nodes:

[root@node1 k8s]# kubeadm join 192.168.59.142:6443 --token sqjhzj.7aloiqau86k8xq54 --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node1 k8s]#
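Instead of copying the join command by hand, the master can generate a fresh one and run it on each node over SSH. A sketch, assuming root SSH access to node1 and node2; join_workers is a hypothetical helper.

```shell
# Hypothetical helper, run on the master: mint a fresh join command and
# execute it on each worker over SSH (assumes root SSH access).
join_workers() {
    local cmd host
    cmd=$(kubeadm token create --print-join-command) || return 1
    for host in "$@"; do
        ssh "root@$host" "$cmd" || return 1
    done
}
```

Usage: join_workers node1 node2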

- After both nodes have joined, run kubectl get nodes on the master to see them:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 29m v1.21.0

node1 NotReady <none> 3m59s v1.21.0

node2 NotReady <none> 79s v1.21.0

[root@master ~]#

Note: every node above is NotReady. This is expected at this stage because no network plug-in is installed yet; the nodes become Ready once Calico is installed below.

Network plug-in: Calico installation

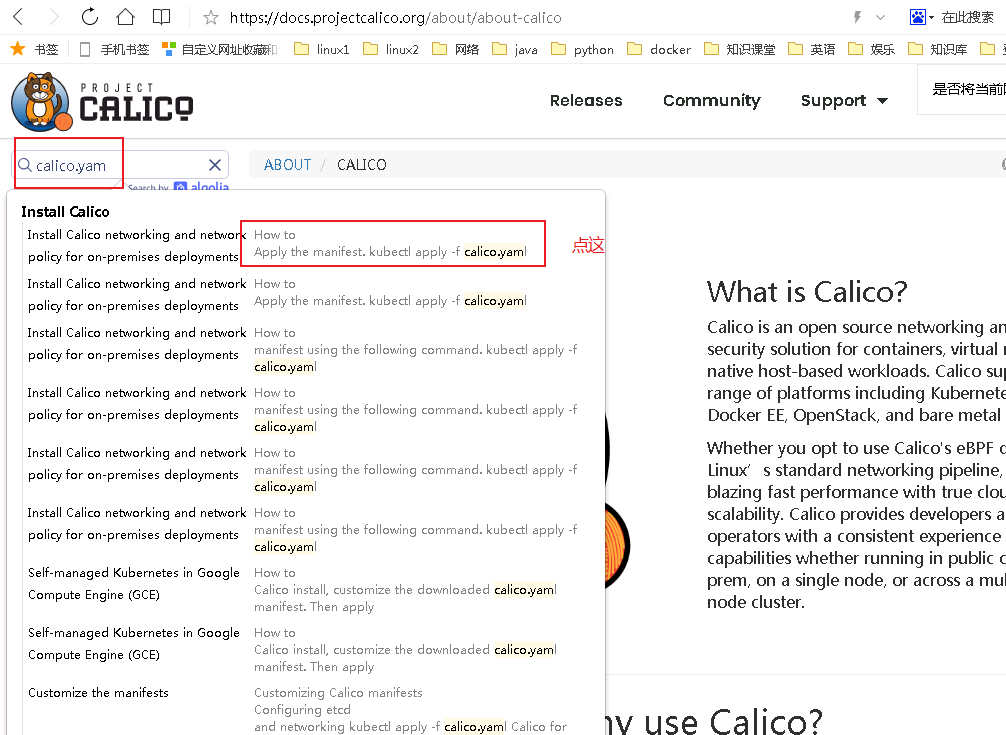

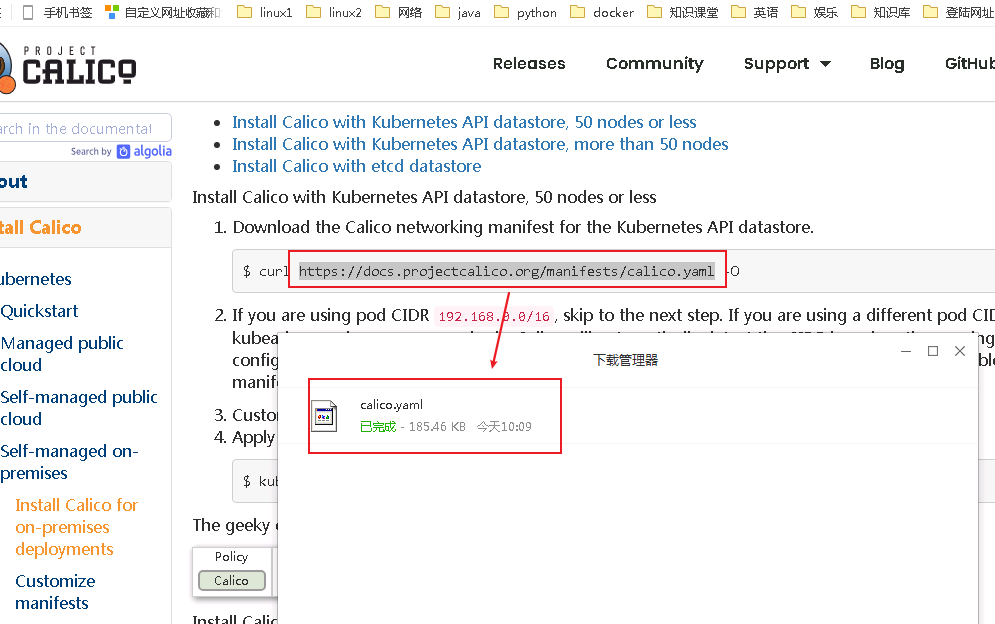

Obtaining the calico.yaml file

- website: https://docs.projectcalico.org/

Once there, search for calico.yaml.

The results include a download command starting with curl. You can run it as-is, or on Linux replace curl with wget and drop the trailing -O.
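The wget variant looks like this. The URL is an assumption based on where the Calico docs used to host versioned manifests; confirm the current location on the Calico website before relying on it. fetch_calico is a hypothetical name.

```shell
# ASSUMPTION: the v3.19 manifest is served at this archive URL; verify it first.
CALICO_URL=https://docs.projectcalico.org/archive/v3.19/manifests/calico.yaml

# Download the manifest on an Internet-connected host.
# -O names the output file explicitly (default: calico.yaml).
fetch_calico() {
    wget -O "${1:-calico.yaml}" "$CALICO_URL"
}
```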

View and download the images Calico needs [same on all 3 hosts]

- Command: grep image calico.yaml

My hosts have no Internet access; rz is the command I use to upload the calico.yaml I downloaded elsewhere.

[root@master ~]# rz -E

rz waiting to receive.

Starting zmodem transfer. Press Ctrl+C to cancel.

100% 185 KB 185 KB/s 00:00:01 0 Errors

[root@master ~]# ls | grep calico.yaml

calico.yaml

[root@master ~]# grep image calico.yaml

image: docker.io/calico/cni:v3.19.1

image: docker.io/calico/cni:v3.19.1

image: docker.io/calico/pod2daemon-flexvol:v3.19.1

image: docker.io/calico/node:v3.19.1

image: docker.io/calico/kube-controllers:v3.19.1

[root@master ~]#
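Because cni appears twice, the raw grep output overstates the number of images. A small helper (hypothetical name manifest_images) prints each image once; it assumes image lines have the form image: NAME, as in this manifest.

```shell
# Print each image referenced by a manifest exactly once.
# ASSUMPTION: lines look like "image: NAME" (optionally quoted), as in calico.yaml.
manifest_images() {
    grep -E '^[[:space:]]*image:' "${1:-calico.yaml}" |
        awk '{print $2}' | tr -d '"' | sort -u
}
```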

- Then download these images on a host with Internet access

Download command: docker pull followed by each image name above, for example docker pull docker.io/calico/cni:v3.19.1

All four unique images are needed (cni is listed twice in the grep output). Downloading is slow, so you can pull them on one server, package them into a tar archive, copy it to the other two hosts, and load it there (I have a separate blog post on docker image management if you need details).

- If the download is too slow (about 400 MB in total), you can use the package I uploaded: https://download.csdn.net/download/cuichongxin/19988222

The usage is as follows

[root@master ~]# scp calico-3.19-img.tar node1:~

root@node1's password:

calico-3.19-img.tar 100% 381MB 20.1MB/s 00:18

[root@master ~]#

[root@master ~]# scp calico-3.19-img.tar node2:~

root@node2's password:

calico-3.19-img.tar 100% 381MB 21.1MB/s 00:18

[root@master ~]#

[root@node1 ~]# docker load -i calico-3.19-img.tar

a4bf22d258d8: Loading layer 88.58kB/88.58kB

d570c523a9c3: Loading layer 13.82kB/13.82kB

b4d08d55eb6e: Loading layer 145.8MB/145.8MB

Loaded image: calico/cni:v3.19.1

01c2272d9083: Loading layer 13.82kB/13.82kB

ad494b1a2a08: Loading layer 2.55MB/2.55MB

d62fbb2a27c3: Loading layer 5.629MB/5.629MB

2ac91a876a81: Loading layer 5.629MB/5.629MB

c923b81cc4f1: Loading layer 2.55MB/2.55MB

aad8b538640b: Loading layer 5.632kB/5.632kB

b3e6b5038755: Loading layer 5.378MB/5.378MB

Loaded image: calico/pod2daemon-flexvol:v3.19.1

4d2bd71609f0: Loading layer 170.8MB/170.8MB

dc8ea6c15e2e: Loading layer 13.82kB/13.82kB

Loaded image: calico/node:v3.19.1

ce740cb4fc7d: Loading layer 13.82kB/13.82kB

fe82f23d6e35: Loading layer 2.56kB/2.56kB

98738437610a: Loading layer 57.53MB/57.53MB

0443f62e8f15: Loading layer 3.082MB/3.082MB

Loaded image: calico/kube-controllers:v3.19.1

[root@node1 ~]#

- After all images are loaded, the result is as follows:

[root@node1 ~]# docker images | grep ca

calico/node v3.19.1 c4d75af7e098 6 weeks ago 168MB

calico/pod2daemon-flexvol v3.19.1 5660150975fb 6 weeks ago 21.7MB

calico/cni v3.19.1 5749e8b276f9 6 weeks ago 146MB

calico/kube-controllers v3.19.1 5d3d5ddc8605 6 weeks ago 60.6MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 months ago 50.6MB

[root@node1 ~]#
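The pull, package and load round trip on the online host can be sketched as follows. bundle_images is a hypothetical helper; docker save accepts several images and writes them into one archive that docker load later restores with their tags.

```shell
# Hypothetical helper for the Internet-connected host: pull every listed image
# and bundle them all into a single tarball for offline transfer.
bundle_images() {
    local out=$1; shift
    local img
    for img in "$@"; do
        docker pull "$img" || return 1
    done
    # One archive for all images; "docker load -i" restores every tag in it.
    docker save -o "$out" "$@"
}
```

For example: bundle_images calico-3.19-img.tar docker.io/calico/cni:v3.19.1 docker.io/calico/pod2daemon-flexvol:v3.19.1 docker.io/calico/node:v3.19.1 docker.io/calico/kube-controllers:v3.19.1, then copy the tar to each host and run docker load -i calico-3.19-img.tar there.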

Install calico [operation on master]

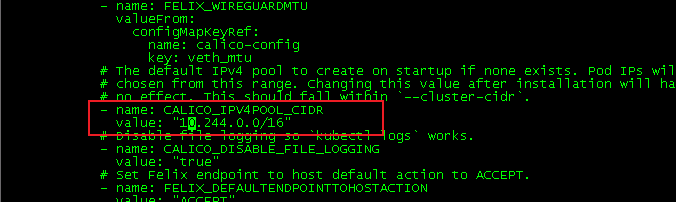

- Set the pod network segment [operation on master]

- Edit calico.yaml and, on the following 2 lines, delete the leading # and the space after it so they line up with the neighboring entries [YAML indentation matters]

- Change the 192.168.0.0/16 after value to the pod network CIDR used when initializing the cluster; I used 10.244.0.0/16.

[root@master ~]# cat calico.yaml | egrep -B 1 10.244

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

[root@master ~]#
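The same edit can be made non-interactively with sed. A sketch, assuming the two lines appear exactly as in the stock v3.19 manifest (commented out, with value "192.168.0.0/16"); set_calico_cidr is a hypothetical helper and uses GNU sed's -i.

```shell
# Uncomment CALICO_IPV4POOL_CIDR and set it to the kubeadm pod CIDR.
# ASSUMPTION: stock layout, i.e.
#             # - name: CALICO_IPV4POOL_CIDR
#             #   value: "192.168.0.0/16"
set_calico_cidr() {
    local file=$1 cidr=${2:-10.244.0.0/16}
    # '|' is the sed delimiter because the CIDR contains '/'.
    # "# - name" -> "- name" aligns with the other env entries;
    # "#   value" -> "  value" keeps value two columns deeper than "-".
    sed -i \
        -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|#   value: \"192.168.0.0/16\"|  value: \"$cidr\"|" \
        "$file"
}
```

Usage: set_calico_cidr calico.yaml 10.244.0.0/16, then check the result with the egrep command above.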

- Then run: kubectl apply -f calico.yaml

[root@master ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

[root@master ~]#

- Now kubectl get nodes shows every node as Ready:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 70m v1.21.0

node1 Ready <none> 45m v1.21.0

node2 Ready <none> 42m v1.21.0

[root@master ~]#

At this point, the basic cluster setup is complete.

To see which nodes the Calico pods (and the other system pods) run on: kubectl get pods -n kube-system -o wide [for reference only]
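Rather than re-running kubectl get nodes until the status changes, you can let kubectl block until Calico has rolled out and every node is Ready. A sketch; wait_for_cluster is a hypothetical name and the 300s default timeout is an arbitrary assumption.

```shell
# Block until the calico-node DaemonSet has rolled out and all nodes are Ready.
# Fails (non-zero exit) if either step exceeds the timeout.
wait_for_cluster() {
    local timeout=${1:-300s}
    kubectl -n kube-system rollout status daemonset/calico-node --timeout="$timeout" &&
        kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}
```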

[root@master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-78d6f96c7b-p4svs 1/1 Running 0 98m 10.244.219.67 master <none> <none>

calico-node-cc4fc 1/1 Running 18 65m 192.168.59.144 node2 <none> <none>

calico-node-stdfj 1/1 Running 20 98m 192.168.59.142 master <none> <none>

calico-node-zhhz7 1/1 Running 1 98m 192.168.59.143 node1 <none> <none>

coredns-545d6fc579-6kb9x 1/1 Running 0 167m 10.244.219.65 master <none> <none>

coredns-545d6fc579-v74hg 1/1 Running 0 167m 10.244.219.66 master <none> <none>

etcd-master 1/1 Running 1 167m 192.168.59.142 master <none> <none>

kube-apiserver-master 1/1 Running 1 167m 192.168.59.142 master <none> <none>

kube-controller-manager-master 1/1 Running 11 167m 192.168.59.142 master <none> <none>

kube-proxy-45qgd 1/1 Running 1 65m 192.168.59.144 node2 <none> <none>

kube-proxy-fdhpw 1/1 Running 1 167m 192.168.59.142 master <none> <none>

kube-proxy-zf6nt 1/1 Running 1 142m 192.168.59.143 node1 <none> <none>

kube-scheduler-master 1/1 Running 12 167m 192.168.59.142 master <none> <none>

[root@master ~]#
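To list only the Calico node agents and where they run, filter by label. This assumes the pods carry the label k8s-app=calico-node, as in the stock manifest; calico_pod_nodes is a hypothetical helper.

```shell
# Show only calico-node pods and the node each one is scheduled on.
# ASSUMPTION: the pods are labelled k8s-app=calico-node.
calico_pod_nodes() {
    kubectl get pods -n kube-system -l k8s-app=calico-node \
        -o custom-columns=POD:.metadata.name,NODE:.spec.nodeName
}
```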

kubectl subcommand Tab completion

- Current state: Tab completes the kubectl command itself but not its subcommands. You can test it first:

[root@master ~]# kubectl ge

- Check kubectl's help for the completion command

[root@master ~]# kubectl --help | grep bash

completion Output shell completion code for the specified shell (bash or zsh)

[root@master ~]#

- Solution

Add source <(kubectl completion bash) to a shell startup file (I added it to /etc/profile) and source the file; after that, Tab completion works normally.

[root@master ~]# head -n 3 /etc/profile

# /etc/profile

source <(kubectl completion bash)

[root@master ~]#

[root@master ~]# source /etc/profile

[root@master ~]#

[root@master ~]# kubectl get

- Note: make sure the bash-completion package is installed locally; if it is not, install it, otherwise the steps above have no effect.

[root@master ~]# rpm -qa | grep bash

bash-completion-2.1-6.el7.noarch

bash-4.2.46-31.el7.x86_64

[root@master ~]#
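If you rerun the setup, appending the line blindly would duplicate it. A small idempotent helper (hypothetical name add_once) appends a line only when it is missing; the kubectl documentation suggests ~/.bashrc as the target, while this post uses /etc/profile.

```shell
# Append a line to a file only if that exact line is not already present.
add_once() {
    local line=$1 file=$2
    # -x whole line, -F literal; a missing file simply gets created
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage: add_once 'source <(kubectl completion bash)' ~/.bashrc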

Remove a node from the cluster [operate on master]

- Two steps are required:

- 1. Drain (kick out) the node: kubectl drain node_name --ignore-daemonsets [to find node_name, run kubectl get nodes]

After this command, kubectl get nodes shows SchedulingDisabled added to the node's STATUS, and the node can then be deleted.

- 2. Delete the node: kubectl delete nodes node_name

- For example, I will now remove node2 from the cluster:

[root@master ~]# kubectl drain node2 --ignore-daemonsets

node/node2 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/calico-node-mw4zf, kube-system/kube-proxy-fqwtc

node/node2 drained

[root@master ~]#

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 87m v1.21.0

node1 Ready <none> 62m v1.21.0

node2 Ready,SchedulingDisabled <none> 59m v1.21.0

[root@master ~]#

[root@master ~]# kubectl delete nodes node2

node "node2" deleted

[root@master ~]#

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 88m v1.21.0

node1 Ready <none> 63m v1.21.0

[root@master ~]#

- After deletion, the node will hit errors if it tries to rejoin the cluster directly; see "Node rejoining the cluster" below.
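The two steps can be wrapped into one helper (hypothetical name remove_node). If drain refuses because some pods use emptyDir volumes, kubectl v1.20+ accepts --delete-emptydir-data, which discards that data.

```shell
# Hypothetical helper, run on the master: evict workloads, then delete the node.
# DaemonSet pods (calico-node, kube-proxy) cannot be evicted, hence --ignore-daemonsets.
remove_node() {
    local node=$1
    kubectl drain "$node" --ignore-daemonsets || return 1
    kubectl delete node "$node"
}
```

Usage: remove_node node2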

Resetting the cluster [operation on master]

Process

- 1. First remove all nodes from the cluster [including the master itself]

- 2. Clear all cluster configuration

Command: kubeadm reset, then enter y at the prompt [all cluster settings are gone after this].


After these two steps, you can recreate the cluster and join the nodes again, starting from the "install kubedmin [create cluster] [operation on master]" section above [Calico must also be reinstalled].

Note: when the nodes rejoin, get a fresh join command from the master (kubeadm token create --print-join-command); the previous one cannot be reused.
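A sketch of the whole teardown, assuming worker node names match their SSH host names (node1, node2) and root SSH access; teardown_cluster is hypothetical. kubeadm reset -f skips the y/N prompt. Calico and the join step still have to be redone afterwards.

```shell
# Hypothetical helper, run on the master: remove and reset every worker,
# then reset the master itself. ASSUMPTION: node name == SSH host name.
teardown_cluster() {
    local host
    for host in "$@"; do
        kubectl drain "$host" --ignore-daemonsets --delete-emptydir-data || return 1
        kubectl delete node "$host" || return 1
        ssh "root@$host" "kubeadm reset -f" || return 1
    done
    kubeadm reset -f
}
```

Usage: teardown_cluster node1 node2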


Node rejoining the cluster [node operation]

Why rejoining fails, and the errors reported

If a node that the master has removed from the cluster tries to rejoin directly, it fails with the following errors:

[root@node2 ~]# kubeadm join 192.168.59.142:6443 --token sqjhzj.7aloiqau86k8xq54 --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@node2 ~]#

Solution

To rejoin, the node must first reset its cluster state with kubeadm reset; press Enter and answer y:

[root@node2 ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0702 11:17:49.527296 60215 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar) to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@node2 ~]#
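kubeadm reset leaves some state behind, as its output says. A sketch of a fuller cleanup before rejoining (reset_worker is a hypothetical name): it removes the CNI config and any stale kubeconfig. iptables and IPVS cleanup are left out on purpose, since flushing iptables would also discard the firewalld rules set up earlier; do that by hand only if you need to.

```shell
# Hypothetical helper, run on a worker before rejoining.
# -f skips the y/N confirmation prompt of kubeadm reset.
reset_worker() {
    kubeadm reset -f || return 1
    rm -rf /etc/cni/net.d            # CNI config left behind (reset does not clean it)
    rm -f "$HOME/.kube/config"       # stale kubeconfig, if one exists
}
```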

- Now the node can rejoin the cluster:

[root@node2 ~]# kubeadm join 192.168.59.142:6443 --token sqjhzj.7aloiqau86k8xq54 --discovery-token-ca-cert-hash sha256:5a9779259cea2b3a23fa6e713ed02f5b0c6eda2d75049b703dbec78587015e0b

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node2 ~]#