You can study the code alongside the paper and the ReID course materials below: the GitHub repository michuanhaohao/ReID_tutorial_slides (courseware for "Deep Learning and Person Re-identification") and the course videos by Dr. Luo Hao of Zhejiang University on bilibili: https://www.bilibili.com/video/BV1Pg4y1q7sN?from=search&seid=12319613973768358764&spm_id_from=333.337.0.0


I won't cover the theory here; the videos explain it very clearly, so learn it from them. This post only covers how to use the code.

The code above trains normally, but it contains no code for detecting people in videos or images. While looking for related material, I found an article that combines YOLOv3 with ReID, but it does not include training code: https://zhuanlan.zhihu.com/p/82398949

So I merged the code of the two projects; thanks to both authors for their contributions. I made small modifications to the code and also trained an se_resnext50 network, which works for detection as well. The program can search for a person in videos or images, and the result is as follows (note that the person is still found after being occluded):

Training

Step 1:

config folder:

defaults.py (some default configurations)

GPU settings:

_C.MODEL.DEVICE = "cuda"  # whether to use the GPU

_C.MODEL.DEVICE_ID = '0'  # GPU ID

Network settings:

_C.MODEL.NAME = 'resnet50_ibn_a'

_C.MODEL.PRETRAIN_PATH = r'./pretrained.pth'  # path to the pretrained weights

Hyperparameter settings:

_C.SOLVER.OPTIMIZER_NAME = "Adam"  # optimizer

_C.SOLVER.MAX_EPOCHS = 120  # maximum number of training epochs

_C.SOLVER.BASE_LR = 3e-4  # initial learning rate

Step 2:

configs folder:

In softmax_triplet_with_center.yml:

MODEL:

PRETRAIN_PATH: # path to the pretrained weights

SOLVER:

OPTIMIZER_NAME: 'Adam' # optimizer

MAX_EPOCHS: 120 # maximum number of epochs

BASE_LR: 0.00035 # initial learning rate

The settings below mainly control how often weights are saved and how often logging and evaluation run. I set all three to 1: the weights are saved and mAP and Rank-k are computed after every epoch, and a log line is written every iteration (as in the training output below).

CHECKPOINT_PERIOD: 1

LOG_PERIOD: 1

EVAL_PERIOD: 1

OUTPUT_DIR: './logs' # output path
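Settings can come from three places: defaults.py, the yml file, and the command line in Step 4, applied in that order so that later sources override earlier ones. The project uses yacs for this (cfg.merge_from_file, then cfg.merge_from_list). The sketch below only mimics that precedence with plain dicts so it runs without yacs; DEFAULTS is a hypothetical subset of defaults.py. Like yacs, it evaluates command-line values with ast.literal_eval, which is why the command passes "('0')": it evaluates to the string '0' rather than the integer 0 (real yacs also checks that the new value has the same type as the default, which this sketch skips).

```python
import ast
import copy

# Hypothetical subset of config/defaults.py, written as plain dicts.
DEFAULTS = {
    "MODEL": {"DEVICE": "cuda", "DEVICE_ID": "0", "NAME": "resnet50_ibn_a"},
    "SOLVER": {"OPTIMIZER_NAME": "Adam", "MAX_EPOCHS": 120, "BASE_LR": 3e-4},
    "OUTPUT_DIR": "",
}


def merge(base, override):
    """Return a copy of base updated with override; unknown keys raise KeyError."""
    out = copy.deepcopy(base)
    for key, value in override.items():
        if key not in out:
            raise KeyError(f"Non-existent config key: {key}")
        if isinstance(out[key], dict):
            out[key] = merge(out[key], value)
        else:
            out[key] = value
    return out


def merge_from_list(cfg, opts):
    """Apply command-line KEY VALUE pairs such as ["SOLVER.BASE_LR", "1e-4"]."""
    cfg = copy.deepcopy(cfg)
    for dotted, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = dotted.split(".")
        node = cfg
        for name in parents:
            node = node[name]
        if leaf not in node:
            raise KeyError(f"Non-existent config key: {dotted}")
        try:
            node[leaf] = ast.literal_eval(raw)  # "('0')" -> '0', "1e-4" -> 0.0001
        except (ValueError, SyntaxError):
            node[leaf] = raw  # a bare path such as ./data stays a string
    return cfg


# Precedence: defaults.py < yml file < command line
yml = {"SOLVER": {"BASE_LR": 0.00035}, "OUTPUT_DIR": "./logs"}
cfg = merge(DEFAULTS, yml)
cfg = merge_from_list(cfg, ["MODEL.DEVICE_ID", "('0')", "OUTPUT_DIR", "('E:/ReID/logs')"])
print(cfg["SOLVER"]["BASE_LR"], cfg["OUTPUT_DIR"])  # 0.00035 E:/ReID/logs
```

This is why BASE_LR ends up as 0.00035 rather than the 3e-4 in defaults.py, and why the weights land in E:/ReID/logs rather than ./logs.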

Step 3:

Put the Market1501 dataset in the data folder.
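Before training, it can help to check the folder layout. This sketch assumes the loader follows reid-strong-baseline, which looks for a market1501 sub-folder under DATASETS.ROOT_DIR containing bounding_box_train, query and bounding_box_test. Those folder names are an assumption; adjust them if your copy of the code differs. check_market1501 is a hypothetical helper, not part of the project.

```python
from pathlib import Path

# Assumed layout: <ROOT_DIR>/market1501/{bounding_box_train, query, bounding_box_test}
EXPECTED_SUBDIRS = ("bounding_box_train", "query", "bounding_box_test")


def check_market1501(root_dir="./data"):
    """Return {sub-folder: number of .jpg files}, raising if a sub-folder is missing."""
    base = Path(root_dir) / "market1501"
    counts = {}
    for name in EXPECTED_SUBDIRS:
        folder = base / name
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder: {folder}")
        counts[name] = sum(1 for _ in folder.glob("*.jpg"))
    return counts


if __name__ == "__main__":
    try:
        print(check_market1501("./data"))
    except FileNotFoundError as err:
        print(err)
```

The train count should match the "train | # images" row that the loader prints at the start of training; the gallery row may be smaller than the raw file count because the loader can filter out junk images.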

Step 4:

Run the following command to start training:

python tools/train.py --config_file='configs/softmax_triplet.yml' MODEL.DEVICE_ID "('0')" DATASETS.NAMES "('market1501')" DATASETS.ROOT_DIR "(r'./data')" OUTPUT_DIR "('E:/ReID/logs')"

Output:

=> Market1501 loaded
Dataset statistics:
  ----------------------------------------
  subset   | # ids | # images | # cameras
  ----------------------------------------
  train    |   751 |    12936 |         6
  query    |   750 |     3368 |         6
  gallery  |   751 |    15913 |         6
  ----------------------------------------
Loading pretrained ImageNet model......
2022-02-18 16:17:54,983 reid_baseline.train INFO: Epoch[1] Iteration[1/1484] Loss: 7.667, Acc: 0.000, Base Lr: 3.82e-05
2022-02-18 16:17:55,225 reid_baseline.train INFO: Epoch[1] Iteration[2/1484] Loss: 7.671, Acc: 0.000, Base Lr: 3.82e-05
2022-02-18 16:17:55,436 reid_baseline.train INFO: Epoch[1] Iteration[3/1484] Loss: 7.669, Acc: 0.003, Base Lr: 3.82e-05
2022-02-18 16:17:55,646 reid_baseline.train INFO: Epoch[1] Iteration[4/1484] Loss: 7.663, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:55,856 reid_baseline.train INFO: Epoch[1] Iteration[5/1484] Loss: 7.663, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:56,069 reid_baseline.train INFO: Epoch[1] Iteration[6/1484] Loss: 7.658, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:56,277 reid_baseline.train INFO: Epoch[1] Iteration[7/1484] Loss: 7.654, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:56,490 reid_baseline.train INFO: Epoch[1] Iteration[8/1484] Loss: 7.660, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:56,699 reid_baseline.train INFO: Epoch[1] Iteration[9/1484] Loss: 7.653, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:56,906 reid_baseline.train INFO: Epoch[1] Iteration[10/1484] Loss: 7.651, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:57,110 reid_baseline.train INFO: Epoch[1] Iteration[11/1484] Loss: 7.645, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:57,316 reid_baseline.train INFO: Epoch[1] Iteration[12/1484] Loss: 7.643, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:57,526 reid_baseline.train INFO: Epoch[1] Iteration[13/1484] Loss: 7.644, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:57,733 reid_baseline.train INFO: Epoch[1] Iteration[14/1484] Loss: 7.638, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:57,942 reid_baseline.train INFO: Epoch[1] Iteration[15/1484] Loss: 7.634, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:58,148 reid_baseline.train INFO: Epoch[1] Iteration[16/1484] Loss: 7.630, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:58,355 reid_baseline.train INFO: Epoch[1] Iteration[17/1484] Loss: 7.634, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:58,564 reid_baseline.train INFO: Epoch[1] Iteration[18/1484] Loss: 7.627, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:58,770 reid_baseline.train INFO: Epoch[1] Iteration[19/1484] Loss: 7.629, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:58,980 reid_baseline.train INFO: Epoch[1] Iteration[20/1484] Loss: 7.624, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:59,186 reid_baseline.train INFO: Epoch[1] Iteration[21/1484] Loss: 7.619, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:59,397 reid_baseline.train INFO: Epoch[1] Iteration[22/1484] Loss: 7.614, Acc: 0.002, Base Lr: 3.82e-05
2022-02-18 16:17:59,605 reid_baseline.train INFO: Epoch[1] Iteration[23/1484] Loss: 7.608, Acc: 0.002, Base Lr: 3.82e-05
...
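The Base Lr in the log (3.82e-05) is much smaller than BASE_LR because training starts with a learning-rate warmup. The sketch below reproduces that number under assumed settings that are not shown in this post: linear warmup with WARMUP_FACTOR 0.01 over WARMUP_ITERS 10, STEPS (40, 70), GAMMA 0.1, and one scheduler step at the start of each epoch. It follows the usual warmup-multistep formula and is not necessarily the project's exact scheduler code.

```python
from bisect import bisect_right


def warmup_multistep_lr(epoch, base_lr=0.00035, milestones=(40, 70), gamma=0.1,
                        warmup_factor=0.01, warmup_iters=10):
    """LR after `epoch` scheduler steps: linear warmup, then x gamma at each milestone."""
    factor = 1.0
    if epoch < warmup_iters:
        alpha = epoch / warmup_iters
        # ramps linearly from warmup_factor (epoch 0) towards 1 (epoch warmup_iters)
        factor = warmup_factor * (1 - alpha) + alpha
    return base_lr * factor * gamma ** bisect_right(milestones, epoch)


for epoch in (1, 5, 10, 40, 70):
    print(epoch, f"{warmup_multistep_lr(epoch):.2e}")
```

With these assumptions, epoch 1 gives 0.00035 × (0.01 × 0.9 + 0.1) = 3.815e-05, consistent with the 3.82e-05 in the log; the rate reaches 0.00035 at epoch 10 and drops tenfold at epochs 40 and 70.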

After training, the weights are saved in the logs folder set by OUTPUT_DIR.

Testing

Go into the person_search folder.

Testing on images:

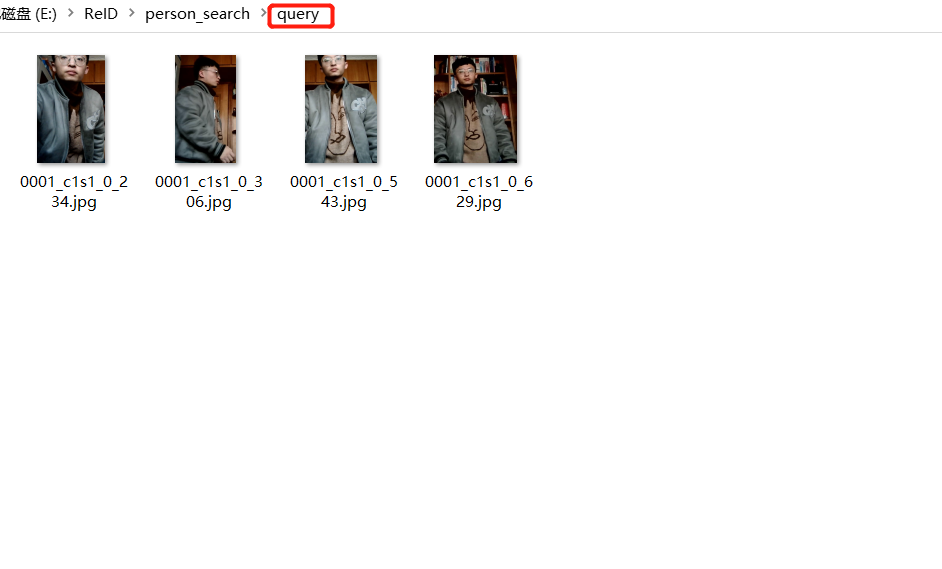

Put the image of the person you are looking for into the query folder, and put the images or videos to be searched under data/samples/. Note that these are not the same as the query image: for example, to find a person in a pile of images or videos, put that person's photo into query, and the program will retrieve the person from the images or videos in the samples folder.

Set the parameters in search.py. images is the folder of images or videos to search, and dist_thres is the threshold on the distance between two samples computed during ReID metric matching: if the distance is below this threshold, the two samples are considered highly similar (the same person). The threshold has to be tuned manually for each video.

def detect(cfg,
           data,
           weights,
           images='data/samples',  # input folder
           output='output',  # output folder
           fourcc='mp4v',  # video codec
           img_size=416,
           conf_thres=0.5,  # detection confidence threshold
           nms_thres=0.5,  # NMS IoU threshold
           dist_thres=1.0,  # ReID distance threshold
           save_txt=False,
           save_images=True):

Then run search.py.
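search.py compares the ReID feature of each detected person with the query features, and dist_thres decides what counts as a match. A minimal NumPy sketch of that idea, assuming L2-normalised features and squared Euclidean distance (the metric actually used in search.py may differ); match_detections is a hypothetical name:

```python
import numpy as np


def match_detections(query_feats, gallery_feats, dist_thres=1.0):
    """Return (detection index, distance) for detections closer than dist_thres to any query."""
    q = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    # squared Euclidean distance |q|^2 + |g|^2 - 2 q.g, clipped against rounding below 0
    dist = (q ** 2).sum(1)[:, None] + (g ** 2).sum(1)[None, :] - 2 * q @ g.T
    dist = np.maximum(dist, 0.0)
    best = dist.min(axis=0)  # distance to the closest query for each detection
    return [(j, float(d)) for j, d in enumerate(best) if d < dist_thres]


query = np.array([[1.0, 0.0]])                            # one query feature
detections = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # three detected people
print(match_detections(query, detections, dist_thres=1.0))
```

With normalised features the squared distance equals 2 − 2·cos θ, so it lies in [0, 4] and dist_thres = 1.0 corresponds to a cosine similarity above 0.5. Lowering the threshold gives fewer but more reliable matches, which is why it needs tuning per video.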

The search results are written to the output folder. [Note: images placed in query must be named in the Market1501 format.]
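Market1501 file names look like 0001_c1s1_001051_00.jpg: person ID 0001, camera 1 (c1), sequence 1 (s1), frame 001051, and bounding box 00 on that frame. Assuming the query images are parsed the same way as the Market1501 loader in reid-strong-baseline (a regular expression that reads the person ID and camera number), a quick check of your query file names could look like this; the pattern and parse_market1501_name are assumptions, not code taken from this project:

```python
import re

# Assumed pattern: person ID (may be -1 for junk images) followed by _c<camera>
MARKET1501_PATTERN = re.compile(r'([-\d]+)_c(\d)')


def parse_market1501_name(filename):
    """Return (person_id, camera_id), or None if the name does not match."""
    match = MARKET1501_PATTERN.search(filename)
    if match is None:
        return None
    pid, camid = map(int, match.groups())
    return pid, camid


for name in ("0001_c1s1_001051_00.jpg", "my_photo.jpg"):
    print(name, parse_market1501_name(name))
```

A name that returns None here, such as my_photo.jpg, would most likely fail when the query folder is loaded.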

Testing on videos:

In query_get.py, first set the video path.

After running it, press the space bar to step through the video frame by frame, and click the left mouse button to take a screenshot (the image is saved to the query folder and named automatically).
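A rough OpenCV sketch of the same interaction, in case you want to adapt it. It is not the project's query_get.py: the window name, Esc to quit, saving the whole frame (rather than a cropped person), and the naming scheme of the hypothetical next_query_name() are all assumptions. The names follow the Market1501 pattern, since files in query are expected to use that format.

```python
import os


def next_query_name(query_dir, pid=1, cam=1):
    """Hypothetical naming: <pid>_c<cam>s1_<n>_00.jpg, n = existing .jpg count + 1."""
    n = len([f for f in os.listdir(query_dir) if f.endswith(".jpg")]) + 1
    return f"{pid:04d}_c{cam}s1_{n:06d}_00.jpg"


def grab_queries(video_path, query_dir="query"):
    """Any key except Esc: next frame. Left click: save the current frame. Esc: quit."""
    import cv2  # imported here so next_query_name() works without OpenCV installed

    os.makedirs(query_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    current = {"frame": None}

    def on_mouse(event, x, y, flags, param):
        if event == cv2.EVENT_LBUTTONDOWN and current["frame"] is not None:
            path = os.path.join(query_dir, next_query_name(query_dir))
            cv2.imwrite(path, current["frame"])
            print("saved", path)

    cv2.namedWindow("video")
    cv2.setMouseCallback("video", on_mouse)
    key = 0
    while key != 27:  # 27 = Esc
        ok, frame = cap.read()
        if not ok:  # end of the video
            break
        current["frame"] = frame
        cv2.imshow("video", frame)
        key = cv2.waitKey(0) & 0xFF  # mouse clicks are handled while waiting here
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    grab_queries("data/samples/test.mp4")  # hypothetical video path
```

Every saved frame gets the same person ID (pid=1) here; if you collect queries for several people, pass a different pid for each.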

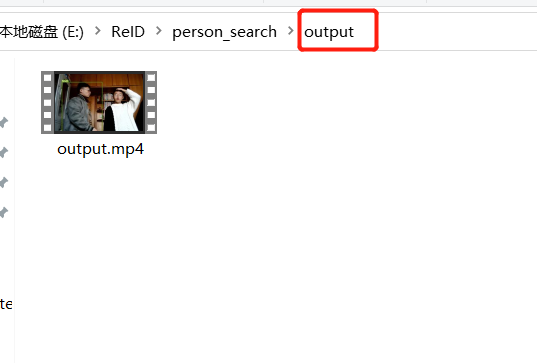

Similarly, put the video to be searched into data/samples, set the parameters, and run search.py; the results are written to the person_search/output folder.

--------------------------------------------------------------------------------------------------------------------------------

[Description of changes to the original code]

engine folder: trainer.py defines the training functions. On top of the original code, I added saving of the network weights on their own. The original code saves the optimizer and other settings together with the weights; these are not needed when loading into the network and cause key errors, so I save the network weights directly, which makes them easy to load.
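Saving only the network weights amounts to torch.save(model.state_dict(), path). For checkpoints already saved the old way, a hypothetical helper like the one below can pull the weights back out. The wrapper keys "model" and "state_dict" are assumptions about how such a checkpoint might be packed; check what your file actually contains.

```python
def extract_state_dict(checkpoint):
    """Hypothetical helper: reduce a saved object to a plain {param_name: tensor} dict."""
    if hasattr(checkpoint, "state_dict"):  # a whole nn.Module was pickled
        return checkpoint.state_dict()
    if isinstance(checkpoint, dict):
        for key in ("model", "state_dict"):  # assumed wrapper keys
            if key in checkpoint:
                return extract_state_dict(checkpoint[key])
    return checkpoint  # already a plain state dict


# Usage with PyTorch (not executed here):
#   state = extract_state_dict(torch.load(path, map_location="cpu"))
#   model.load_state_dict(state)
```

On PyTorch 2.6 and later, torch.load defaults to weights_only=True, so a file that contains a pickled model or optimizer object needs weights_only=False (only do this for files you trust). Files written with torch.save(model.state_dict(), path) load with the default.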

modeling folder, baseline.py: if you modify the backbone network (to make it easier to experiment with the network later), loading the pretrained weights reports mismatched keys in the state dict. To solve this, the following filter is added so that only weights whose names exist in the current model are loaded: pretrained_dict = {k: v for k, v in pretrained_dict.items() if k in model_dict}
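A slightly more defensive version of that filter, written as a standalone function (filter_pretrained is a hypothetical name): it also drops tensors whose shapes differ, for example a classifier layer sized for a different number of IDs, and reports what was skipped. Shapes are compared through .shape, so it works with PyTorch tensors and, as in the test, NumPy arrays.

```python
def filter_pretrained(pretrained_dict, model_dict):
    """Keep pretrained entries whose name exists in the model and whose shape matches."""
    kept = {
        k: v
        for k, v in pretrained_dict.items()
        if k in model_dict
        and getattr(v, "shape", None) == getattr(model_dict[k], "shape", None)
    }
    skipped = sorted(set(pretrained_dict) - set(kept))
    return kept, skipped


# Usage with PyTorch (not executed here):
#   model_dict = model.state_dict()
#   kept, skipped = filter_pretrained(torch.load(path, map_location="cpu"), model_dict)
#   model_dict.update(kept)
#   model.load_state_dict(model_dict)
#   print("not loaded:", skipped)
```

load_state_dict(..., strict=False) would ignore missing and unexpected keys, but it still raises an error on shape mismatches, which is why the shape check is done by hand here.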

Code and weights on Baidu Netdisk: https://pan.baidu.com/s/1p5C5mCVxGK61_eYc7HHpHA

Extraction code: yypn