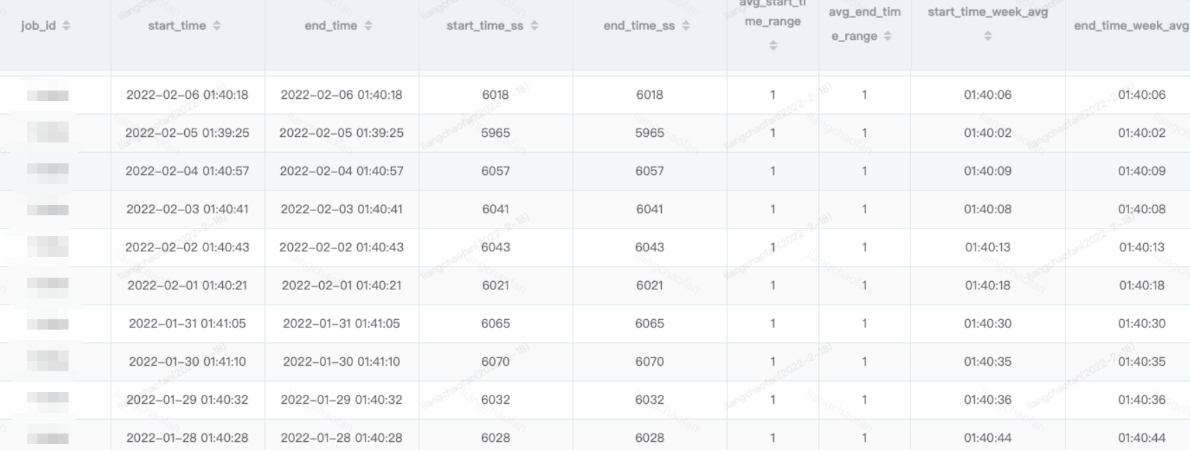

What needs to be done: a full scale to calculate the average start and end time of the task in seven days

1, Introduction data

The data table is a full synchronization table. The partition is based on the date. It contains the start time, end time, total seconds of start time (total seconds to the early morning), and total seconds of end time (total seconds to the early morning).

Partition value example: log_date = '20218', not '2022-02-18'

Now you need to find the average end and start time of each task in seven days

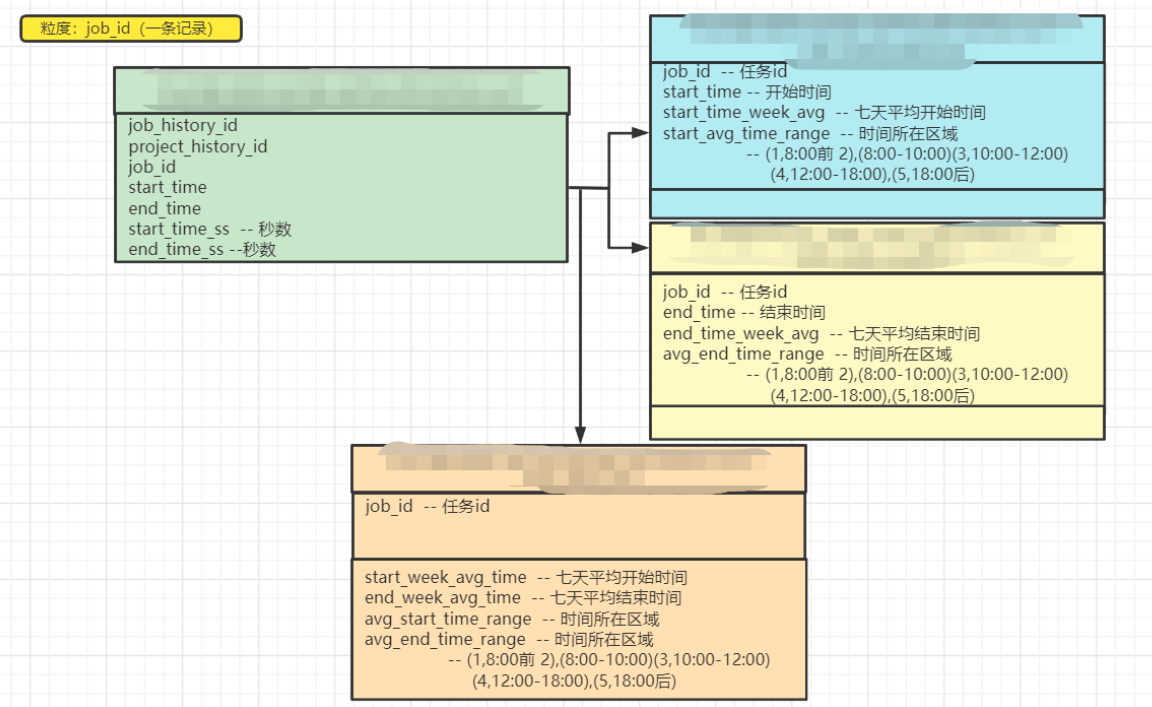

2, ER model

When analyzing a requirement, try to clarify the field relationship and the results we want. After the model is established, the idea will be opened. The ER model of this requirement is actually very clear. The data granularity is naturally a record of a task, and then it is nothing more than finding the average start time and average end time, and then judging the range of time, Then connect it

3, Train of thought combing

- First of all, there is no doubt that you need to use the windowing function

- Secondly, how to set the partition in the windowing function

- Because it is within seven days, you need to sort the data according to which field

- Because the partition format is' 20220218 ', if the date is automatically obtained, the format is' 2022-02-18', so how to convert

- The average time of seven days cannot be calculated directly from the sum of dates. How to average the time

- How to convert the obtained time average into time

We need to consider the above issues. When these issues are considered, we can write normal code

4, Problem solving

Solve the above small problems one by one

-

Windowing function

Recall the use of windowing function,

Usage syntax:

udf() over(partition by ... order by ... rows ... ... ...)

The function we want to implement here is to take the average value, so it is

avg(values) over(...)

-

How to set partition in windowing function

First of all, we need to understand the purpose we need to achieve. Our data granularity is a record of a task and the required seven day average value. Therefore, we need to enter the data of the same job into a window, so it is natural to partition according to the job

avg(values) over(partition by job_id ... ... ...)

-

How to sort the data

Because there are a lot of data entering the window, but we only need to sort the data in the previous seven days in reverse order to get the data in the previous seven days, so we should sort according to our start time. Note here that if it is the start time, we use the start time; if it is the end time, we use the end time

avg(values) over(partition by job_id order by start_time ...)

-

How to convert date format

If the date is obtained automatically, the default date format is' 2022-02-18 ', which is different from the partition requirements, so how to convert it

At the beginning, I didn't find the corresponding method, that is, when I first convert it to time stamp, and then convert it from time stamp to time, I set it to 'yyyyMMdd'

select from_unixtime(unix_timestamp('2022-02-18','yyyy-mm-dd'),'yyyymmdd') -

Average time

We can't calculate it directly, such as' 2022-02-18 12:00:00 'and' 2022-02-17 12:00:00 '. The average value is obviously' 2022-02-18 00:00 ', which obviously doesn't meet the requirements. What we need should be "12:00:00". Therefore, when calculating the average value, we need to convert it to the total seconds to zero in the morning, and then calculate the average value

hour(start_time)*3600 + minute(start_time)*60 + second(start_time)

However, there is a field in the table that has recorded this data, so it can be used directly

-

When we get the average of the total seconds, how can we convert it into time format

For example, how can 3600s be converted to time '01:00:00'

You can do this:

concat(floor(36839/3600),':',floor((36839%3600)/60),':',floor((36839%3600)%60))

This can be converted, but there is a problem. The time "12:00:00" will be parsed into "12:0:0", which is obviously not beautiful. Then you can use the judgment function to make up a 0 if it is one bit

concat_ws(':', if(length(cast(floor(t2.avg_start_time/3600) as string)) = 1,concat('0',cast(floor(t2.avg_start_time/3600) as string)),cast(floor(t2.avg_start_time/3600) as string)) ,if(length(cast(floor(t2.avg_start_time%3600/60) as string)) = 1,concat('0',cast(floor(t2.avg_start_time%3600/60) as string)),cast(floor(t2.avg_start_time%3600/60) as string)) ,if(length(cast(t2.avg_start_time%3600%60 as string)) = 1,concat('0',cast(t2.avg_start_time%3600%60 as string)),cast(t2.avg_start_time%3600%60 as string))) start_time_week_avg, -- Seconds are converted to minutes and secondsHowever, in real production, it is suggested that this function can be encapsulated into udf. One is to simplify the code, and the other is that this function is really commonly used

5, Total sql preparation

When all the small problems are solved, sum them up

SELECT

t2.jb job_id,

t2.st start_time,

t2.et end_time,

t2.sts start_time_ss,

t2.ets end_time_ss,

case

when t2.avg_start_time<28800 then 1

when t2.avg_start_time<36000 then 2

else 3 end avg_start_time_range,

case

when t2.avg_end_time<28800 then 1

when t2.avg_end_time<36000 then 2

else 3 end avg_end_time_range,

concat_ws(':',

if(length(cast(floor(t2.avg_start_time/3600) as string)) = 1,concat('0',cast(floor(t2.avg_start_time/3600) as string)),cast(floor(t2.avg_start_time/3600) as string))

,if(length(cast(floor(t2.avg_start_time%3600/60) as string)) = 1,concat('0',cast(floor(t2.avg_start_time%3600/60) as string)),cast(floor(t2.avg_start_time%3600/60) as string))

,if(length(cast(t2.avg_start_time%3600%60 as string)) = 1,concat('0',cast(t2.avg_start_time%3600%60 as string)),cast(t2.avg_start_time%3600%60 as string))) start_time_week_avg, -- Seconds are converted to minutes and seconds

concat_ws(':',

if(length(cast(floor(t2.avg_end_time/3600) as string)) = 1,concat('0',cast(floor(t2.avg_end_time/3600) as string)),cast(floor(t2.avg_end_time/3600) as string))

,if(length(cast(floor(t2.avg_end_time%3600/60) as string)) = 1,concat('0',cast(floor(t2.avg_end_time%3600/60) as string)),cast(floor(t2.avg_end_time%3600/60) as string))

,if(length(cast(t2.avg_end_time%3600%60 as string)) = 1,concat('0',cast(t2.avg_end_time%3600%60 as string)),cast(t2.avg_end_time%3600%60 as string))) end_time_week_avg -- Seconds are converted to minutes and seconds

from

(SELECT

t1.job_id jb,

t1.start_time st,

t1.end_time et,

t1.start_time_ss sts,

t1.end_time_ss ets,

floor(t1.avg_start) avg_start_time,

floor(t1.avg_end) avg_end_time

from

(select

job_id,

start_time,

end_time,

start_time_ss,

end_time_ss,

AVG(start_time_ss)

over(partition by job_id order by start_time desc rows BETWEEN 7 preceding and current row) avg_start,

AVG(end_time_ss)

over(partition by job_id order by end_time desc rows BETWEEN 7 preceding and current row) avg_end

from table_name

where log_date='20220218') t1) t2;

6, Display of operation results