This article implements simultaneous encoding and muxing of captured preview frames (with a filter applied) and PCM audio to generate an MP4 file, that is, a short-video recording feature modeled on WeChat.

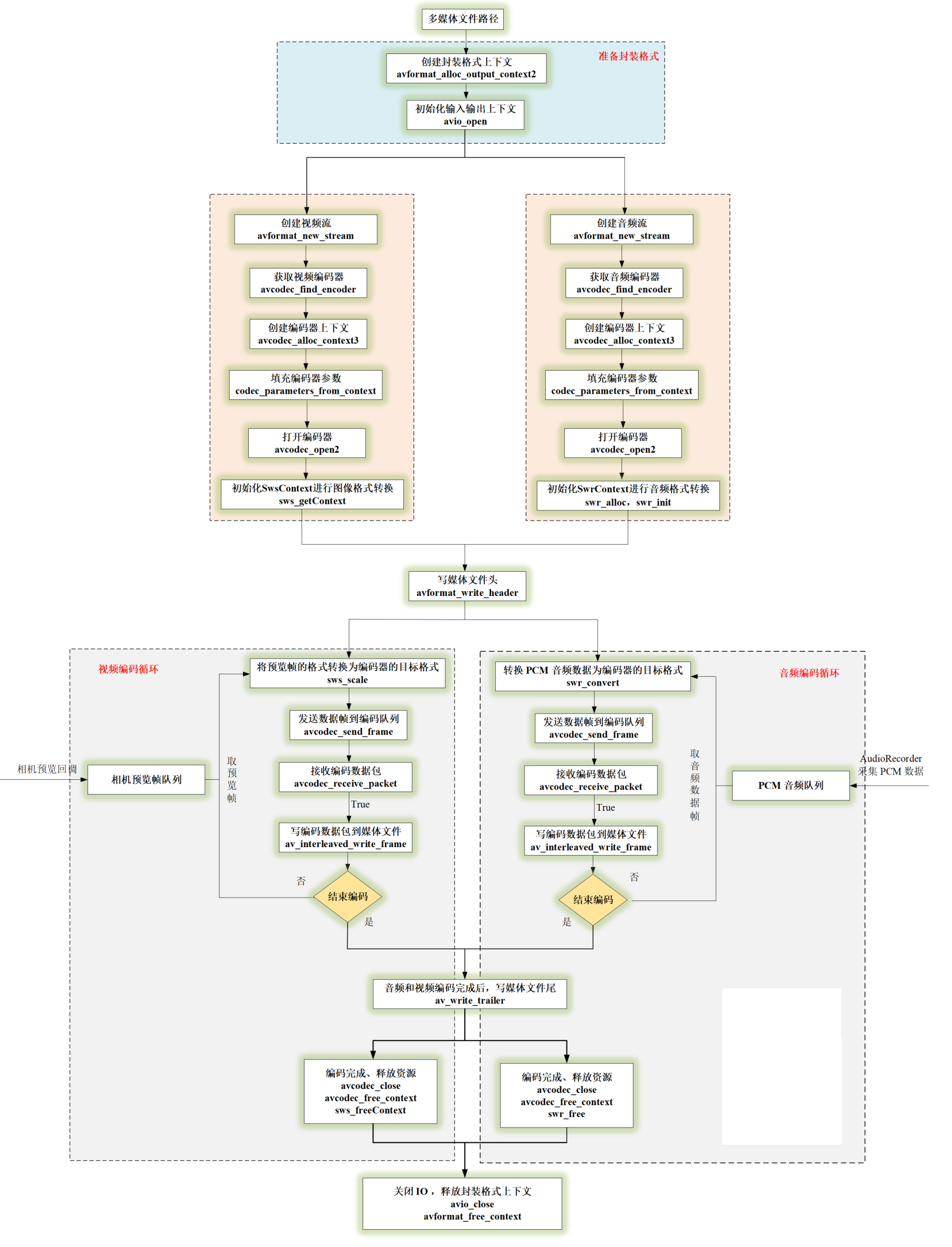

Audio and video recording and encoding flow

This article uses software (CPU) encoding, so with high-resolution preview frames we must consider whether the CPU can keep up. On a Snapdragon 8250, software-encoding images with a resolution higher than 1080P overloads the CPU, and the frame rate can no longer keep up.
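
To get a feel for why software encoding struggles at high resolutions, it helps to estimate how much raw pixel data the encoder has to consume. The sketch below assumes YUV 4:2:0 input (12 bits per pixel); the helper names are illustrative and are not part of the project.

```cpp
#include <cassert>
#include <cstdint>

// Bytes in one YUV 4:2:0 frame: a full-resolution Y plane plus
// quarter-resolution U and V planes, i.e. width * height * 3 / 2.
// Assumes even width and height.
inline uint64_t Yuv420FrameBytes(uint32_t width, uint32_t height) {
    assert(width % 2 == 0 && height % 2 == 0);
    return static_cast<uint64_t>(width) * height * 3 / 2;
}

// Raw bytes per second the encoder has to read at a given frame rate.
inline uint64_t RawBytesPerSecond(uint32_t width, uint32_t height, uint32_t fps) {
    return Yuv420FrameBytes(width, height) * fps;
}
```

For example, `RawBytesPerSecond(1920, 1080, 30)` is 93,312,000 bytes, so a 1080P stream at an assumed 30 fps already feeds the encoder roughly 93 MB of raw data every second, and the figure grows in proportion to the pixel count.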

Audio and video recording code implementation

In the Java layer, video frames come from the Android Camera2 API callback interface.

private ImageReader.OnImageAvailableListener mOnPreviewImageAvailableListener = new ImageReader.OnImageAvailableListener() {

@Override

public void onImageAvailable(ImageReader reader) {

Image image = reader.acquireLatestImage();

if (image != null) {

if (mCamera2FrameCallback != null) {

mCamera2FrameCallback.onPreviewFrame(CameraUtil.YUV_420_888_data(image), image.getWidth(), image.getHeight());

}

image.close();

}

}

};

In the Java layer, audio is recorded with the Android AudioRecord API. The recorder is wrapped in a thread and delivers PCM data through an interface callback. The default sampling rate is 44.1 kHz, with two channels (stereo) and 16-bit PCM samples.
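
As a quick sanity check on these audio parameters, the following sketch computes how many bytes of interleaved PCM are produced per second and how many bytes fill one encoder frame. The struct and function names are hypothetical, and the 1024-samples-per-channel frame size mentioned in the note below is an assumption (it is typical for AAC-LC, but this article does not state the frame size used).

```cpp
#include <cassert>
#include <cstddef>

// PCM parameters; the defaults mirror the values quoted in the text.
struct PcmFormat {
    int sampleRate = 44100;   // Hz
    int channels = 2;         // stereo
    int bytesPerSample = 2;   // 16-bit PCM
};

// Bytes of interleaved PCM produced per second of audio.
inline std::size_t PcmBytesPerSecond(const PcmFormat &fmt) {
    return static_cast<std::size_t>(fmt.sampleRate) * fmt.channels * fmt.bytesPerSample;
}

// Bytes needed to fill one encoder frame of `samplesPerChannel` samples.
inline std::size_t PcmBytesPerFrame(const PcmFormat &fmt, int samplesPerChannel) {
    assert(samplesPerChannel > 0);
    return static_cast<std::size_t>(samplesPerChannel) * fmt.channels * fmt.bytesPerSample;
}
```

With the defaults, `PcmBytesPerSecond` gives 176,400 bytes per second, and `PcmBytesPerFrame(fmt, 1024)` gives 4,096 bytes, which is the amount of PCM that has to accumulate before one such frame can be encoded.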

The JNI layer takes the output file path, video bit rate, frame rate, video width and height, and other parameters when recording starts, and then continuously feeds audio frames and video frames into the Native layer's encoding queues for the encoders to consume.

//Start recording: pass in the output file path, video bit rate, frame rate, video width and height, and other parameters

extern "C"

JNIEXPORT jint JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1StartRecord(JNIEnv *env,

jobject thiz,

jint recorder_type,

jstring out_url,

jint frame_width,

jint frame_height,

jlong video_bit_rate,

jint fps) {

const char* url = env->GetStringUTFChars(out_url, nullptr);

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

jint ret = 0;

//Use the path before releasing it; releasing it first would leave url dangling

if(pContext) ret = pContext->StartRecord(recorder_type, url, frame_width, frame_height, video_bit_rate, fps);

env->ReleaseStringUTFChars(out_url, url);

return ret;

}

//Pass an audio frame to the encoding queue

extern "C"

JNIEXPORT void JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1OnAudioData(JNIEnv *env,

jobject thiz,

jbyteArray data,

jint size) {

int len = env->GetArrayLength (data);

unsigned char* buf = new unsigned char[len];

env->GetByteArrayRegion(data, 0, len, reinterpret_cast<jbyte*>(buf));

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

if(pContext) pContext->OnAudioData(buf, len);

delete[] buf;

}

//Pass a video frame to the encoding queue

extern "C"

JNIEXPORT void JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1OnPreviewFrame(JNIEnv *env,

jobject thiz,

jint format,

jbyteArray data,

jint width,

jint height) {

int len = env->GetArrayLength (data);

unsigned char* buf = new unsigned char[len];

env->GetByteArrayRegion(data, 0, len, reinterpret_cast<jbyte*>(buf));

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

if(pContext) pContext->OnPreviewFrame(format, buf, width, height);

delete[] buf;

}

//Stop recording

extern "C"

JNIEXPORT jint JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1StopRecord(JNIEnv *env,

jobject thiz) {

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

if(pContext) return pContext->StopRecord();

return 0;

}

The audio and video encoding process is encapsulated in a class, and its code closely follows the flow chart above.

//Encapsulation class of audio and video recording

class MediaRecorder {

public:

MediaRecorder(const char *url, RecorderParam *param);

~MediaRecorder();

//start recording

int StartRecord();

//Add audio data to audio queue

int OnFrame2Encode(AudioFrame *inputFrame);

//Add video data to video queue

int OnFrame2Encode(VideoFrame *inputFrame);

//Stop recording

int StopRecord();

private:

//Start audio encoding thread

static void StartAudioEncodeThread(MediaRecorder *recorder);

//Start video encoding thread

static void StartVideoEncodeThread(MediaRecorder *recorder);

static void StartMediaEncodeThread(MediaRecorder *recorder);

//Allocate audio buffer frames

AVFrame *AllocAudioFrame(AVSampleFormat sample_fmt, uint64_t channel_layout, int sample_rate, int nb_samples);

//Allocate video buffer frames

AVFrame *AllocVideoFrame(AVPixelFormat pix_fmt, int width, int height);

//Write an encoded packet to the media file

int WritePacket(AVFormatContext *fmt_ctx, AVRational *time_base, AVStream *st, AVPacket *pkt);

//Add a media stream

void AddStream(AVOutputStream *ost, AVFormatContext *oc, AVCodec **codec, AVCodecID codec_id);

//Print packet information

void PrintfPacket(AVFormatContext *fmt_ctx, AVPacket *pkt);

//Open the audio encoder

int OpenAudio(AVFormatContext *oc, AVCodec *codec, AVOutputStream *ost);

//Open the video encoder

int OpenVideo(AVFormatContext *oc, AVCodec *codec, AVOutputStream *ost);

//Encode one frame of audio

int EncodeAudioFrame(AVOutputStream *ost);

//Encode a frame of video

int EncodeVideoFrame(AVOutputStream *ost);

//Release encoder context

void CloseStream(AVOutputStream *ost);

private:

RecorderParam m_RecorderParam = {0};

AVOutputStream m_VideoStream;

AVOutputStream m_AudioStream;

char m_OutUrl[1024] = {0};

AVOutputFormat *m_OutputFormat = nullptr;

AVFormatContext *m_FormatCtx = nullptr;

AVCodec *m_AudioCodec = nullptr;

AVCodec *m_VideoCodec = nullptr;

//Video frame queue

ThreadSafeQueue<VideoFrame *> m_VideoFrameQueue;

//Audio frame queue

ThreadSafeQueue<AudioFrame *> m_AudioFrameQueue;

int m_EnableVideo = 0;

int m_EnableAudio = 0;

volatile bool m_Exit = false;

//Audio coding thread

thread *m_pAudioThread = nullptr;

//Video coding thread

thread *m_pVideoThread = nullptr;

};
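
The `ThreadSafeQueue` used for `m_VideoFrameQueue` and `m_AudioFrameQueue` is not shown in this article. As a rough idea of what such a container can look like, here is a minimal mutex-guarded queue. The class name `SimpleThreadSafeQueue` and its exact behavior (for instance, returning a default value when empty) are assumptions for illustration, not the project's implementation.

```cpp
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical minimal thread-safe FIFO; every operation takes the same lock.
template <typename T>
class SimpleThreadSafeQueue {
public:
    void Push(T value) {
        std::lock_guard<std::mutex> lock(m_Mutex);
        m_Queue.push(std::move(value));
    }

    // Removes and returns the front element, or a value-initialized T
    // (nullptr for pointer types) when the queue is empty.
    T Pop() {
        std::lock_guard<std::mutex> lock(m_Mutex);
        if (m_Queue.empty()) return T();
        T value = std::move(m_Queue.front());
        m_Queue.pop();
        return value;
    }

    bool Empty() const {
        std::lock_guard<std::mutex> lock(m_Mutex);
        return m_Queue.empty();
    }

    std::size_t Size() const {
        std::lock_guard<std::mutex> lock(m_Mutex);
        return m_Queue.size();
    }

private:
    mutable std::mutex m_Mutex;   // mutable so const accessors can lock
    std::queue<T> m_Queue;
};
```

In this sketch the producer (the JNI callbacks) calls `Push`, while the encoding thread checks `Empty` and calls `Pop`. Returning `nullptr` from an empty queue of pointers lets the consumer treat "no frame" as an ordinary case instead of an error.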

Encoding a video frame and encoding an audio frame work in essentially the same way: first convert the input to the encoder's target format, then call avcodec_send_frame / avcodec_receive_packet, and finally send a null frame to flush the encoder and mark the end of the stream.

int MediaRecorder::EncodeVideoFrame(AVOutputStream *ost) {

LOGCATE("MediaRecorder::EncodeVideoFrame");

int result = 0;

int ret;

AVCodecContext *c;

AVFrame *frame;

AVPacket pkt = { 0 };

c = ost->m_pCodecCtx;

av_init_packet(&pkt);

while (m_VideoFrameQueue.Empty() && !m_Exit) {

usleep(10* 1000);

}

frame = ost->m_pTmpFrame;

AVPixelFormat srcPixFmt = AV_PIX_FMT_YUV420P;

VideoFrame *videoFrame = m_VideoFrameQueue.Pop();

if(videoFrame) {

frame->data[0] = videoFrame->ppPlane[0];

frame->data[1] = videoFrame->ppPlane[1];

frame->data[2] = videoFrame->ppPlane[2];

frame->linesize[0] = videoFrame->pLineSize[0];

frame->linesize[1] = videoFrame->pLineSize[1];

frame->linesize[2] = videoFrame->pLineSize[2];

frame->width = videoFrame->width;

frame->height = videoFrame->height;

switch (videoFrame->format) {

case IMAGE_FORMAT_RGBA:

srcPixFmt = AV_PIX_FMT_RGBA;

break;

case IMAGE_FORMAT_NV21:

srcPixFmt = AV_PIX_FMT_NV21;

break;

case IMAGE_FORMAT_NV12:

srcPixFmt = AV_PIX_FMT_NV12;

break;

case IMAGE_FORMAT_I420:

srcPixFmt = AV_PIX_FMT_YUV420P;

break;

default:

LOGCATE("MediaRecorder::EncodeVideoFrame unSupport format pImage->format=%d", videoFrame->format);

break;

}

}

if((m_VideoFrameQueue.Empty() && m_Exit) || ost->m_EncodeEnd) frame = nullptr;

if(frame != nullptr) {

/* when we pass a frame to the encoder, it may keep a reference to it

* internally; make sure we do not overwrite it here */

if (av_frame_make_writable(ost->m_pFrame) < 0) {

result = 1;

goto EXIT;

}

if (srcPixFmt != AV_PIX_FMT_YUV420P) {

/* as we only generate a YUV420P picture, we must convert it

* to the codec pixel format if needed */

if (!ost->m_pSwsCtx) {

ost->m_pSwsCtx = sws_getContext(c->width, c->height,

srcPixFmt,

c->width, c->height,

c->pix_fmt,

SWS_FAST_BILINEAR, nullptr, nullptr, nullptr);

if (!ost->m_pSwsCtx) {

LOGCATE("MediaRecorder::EncodeVideoFrame Could not initialize the conversion context\n");

result = 1;

goto EXIT;

}

}

sws_scale(ost->m_pSwsCtx, (const uint8_t * const *) frame->data,

frame->linesize, 0, c->height, ost->m_pFrame->data,

ost->m_pFrame->linesize);

}

ost->m_pFrame->pts = ost->m_NextPts++;

frame = ost->m_pFrame;

}

/* encode the image */

ret = avcodec_send_frame(c, frame);

if(ret == AVERROR_EOF) {

result = 1;

goto EXIT;

} else if(ret < 0) {

LOGCATE("MediaRecorder::EncodeVideoFrame video avcodec_send_frame fail. ret=%s", av_err2str(ret));

result = 0;

goto EXIT;

}

while(!ret) {

ret = avcodec_receive_packet(c, &pkt);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

result = 0;

goto EXIT;

} else if (ret < 0) {

LOGCATE("MediaRecorder::EncodeVideoFrame video avcodec_receive_packet fail. ret=%s", av_err2str(ret));

result = 0;

goto EXIT;

}

LOGCATE("MediaRecorder::EncodeVideoFrame video pkt pts=%ld, size=%d", pkt.pts, pkt.size);

int writeRet = WritePacket(m_FormatCtx, &c->time_base, ost->m_pStream, &pkt);

if (writeRet < 0) {

LOGCATE("MediaRecorder::EncodeVideoFrame video Error while writing video frame: %s",

av_err2str(writeRet));

result = 0;

goto EXIT;

}

}

EXIT:

NativeImageUtil::FreeNativeImage(videoFrame);

if(videoFrame) delete videoFrame;

return result;

}
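
The function above leaves the pixel-format conversion to `sws_scale`. To make concrete what converting RGBA to YUV420P involves, here is a small CPU sketch using the common BT.601 limited-range integer approximation. It is only an illustration: the type and function names are hypothetical, the input is assumed to be tightly packed RGBA with even dimensions, and libswscale's actual coefficients, rounding and chroma filtering differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for three separate I420 planes.
struct I420Image {
    int width = 0;
    int height = 0;
    std::vector<uint8_t> y, u, v;
};

// Converts tightly packed RGBA (4 bytes per pixel, no row padding) to I420.
// Chroma is taken from the top-left pixel of each 2x2 block (no averaging).
inline I420Image RgbaToI420(const uint8_t *rgba, int width, int height) {
    assert(rgba != nullptr && width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);
    I420Image out;
    out.width = width;
    out.height = height;
    out.y.resize(static_cast<std::size_t>(width) * height);
    out.u.resize(out.y.size() / 4);
    out.v.resize(out.y.size() / 4);
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const std::size_t pi = static_cast<std::size_t>(row) * width + col;
            const uint8_t *p = rgba + pi * 4;
            const int r = p[0], g = p[1], b = p[2];   // alpha (p[3]) is ignored
            out.y[pi] = static_cast<uint8_t>(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
            if (row % 2 == 0 && col % 2 == 0) {
                const std::size_t ci = static_cast<std::size_t>(row / 2) * (width / 2) + col / 2;
                // 32768 (= 128 << 8) folds the +128 chroma offset into the sum,
                // so the value being shifted is never negative.
                out.u[ci] = static_cast<uint8_t>((-38 * r - 74 * g + 112 * b + 128 + 32768) >> 8);
                out.v[ci] = static_cast<uint8_t>((112 * r - 94 * g - 18 * b + 128 + 32768) >> 8);
            }
        }
    }
    return out;
}
```

Doing this per pixel on the CPU for every frame is part of the cost of software encoding, which is one reason to let a tuned library such as libswscale handle it.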

Finally, keep the audio and video timestamps aligned during encoding; otherwise the sound may finish playing while the picture is still running.

void MediaRecorder::StartVideoEncodeThread(MediaRecorder *recorder) {

AVOutputStream *vOs = &recorder->m_VideoStream;

AVOutputStream *aOs = &recorder->m_AudioStream;

while (!vOs->m_EncodeEnd) {

double videoTimestamp = vOs->m_NextPts * av_q2d(vOs->m_pCodecCtx->time_base);

double audioTimestamp = aOs->m_NextPts * av_q2d(aOs->m_pCodecCtx->time_base);

LOGCATE("MediaRecorder::StartVideoEncodeThread [videoTimestamp, audioTimestamp]=[%lf, %lf]", videoTimestamp, audioTimestamp);

if (av_compare_ts(vOs->m_NextPts, vOs->m_pCodecCtx->time_base,

aOs->m_NextPts, aOs->m_pCodecCtx->time_base) <= 0 || aOs->m_EncodeEnd) {

LOGCATE("MediaRecorder::StartVideoEncodeThread start queueSize=%d", recorder->m_VideoFrameQueue.Size());

//Keep video and audio timestamps aligned. People are more sensitive to sound, so once audio has ended and video has caught up, end video too; this prevents the picture from continuing after the sound is over

if(audioTimestamp <= videoTimestamp && aOs->m_EncodeEnd) vOs->m_EncodeEnd = aOs->m_EncodeEnd;

vOs->m_EncodeEnd = recorder->EncodeVideoFrame(vOs);

} else {

LOGCATE("MediaRecorder::StartVideoEncodeThread start usleep");

//When the video timestamp is greater than the audio timestamp, the video coding will sleep and wait for alignment

usleep(5 * 1000);

}

}

}
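
The loop above relies on `av_compare_ts` to compare two timestamps that are expressed in different time bases. The idea can be sketched without FFmpeg by cross-multiplying the two rationals. This is a simplified stand-in with hypothetical names; the real FFmpeg function also guards against integer overflow, which this sketch does not.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a time base such as 1/25 or 1/44100.
struct Rational {
    int num;
    int den;
};

// Returns -1 if tsA is earlier than tsB, 1 if it is later, 0 if equal.
// Compares tsA * a.num / a.den with tsB * b.num / b.den by cross-multiplying.
// Assumes positive denominators and values small enough not to overflow int64_t.
inline int CompareTimestamps(int64_t tsA, Rational a, int64_t tsB, Rational b) {
    assert(a.den > 0 && b.den > 0);
    const int64_t lhs = tsA * a.num * b.den;
    const int64_t rhs = tsB * b.num * a.den;
    if (lhs < rhs) return -1;
    if (lhs > rhs) return 1;
    return 0;
}

// Mirrors the scheduling rule of the loop: video may encode only while it is
// not ahead of audio, or once audio has finished.
inline bool ShouldEncodeVideo(int64_t videoPts, Rational videoTb,
                              int64_t audioPts, Rational audioTb, bool audioEnded) {
    return CompareTimestamps(videoPts, videoTb, audioPts, audioTb) <= 0 || audioEnded;
}
```

As an example with assumed time bases of 1/25 for video and 1/44100 for audio, a video pts of 30 (1.2 s) is ahead of an audio pts of 44100 (1.0 s), so the video thread would sleep until audio catches up or ends.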

At this point, the short-video recording feature is complete. Because of space limits, not all of the code is shown here. For the complete implementation, refer to the project:

https://github.com/githubhaohao/LearnFFmpeg

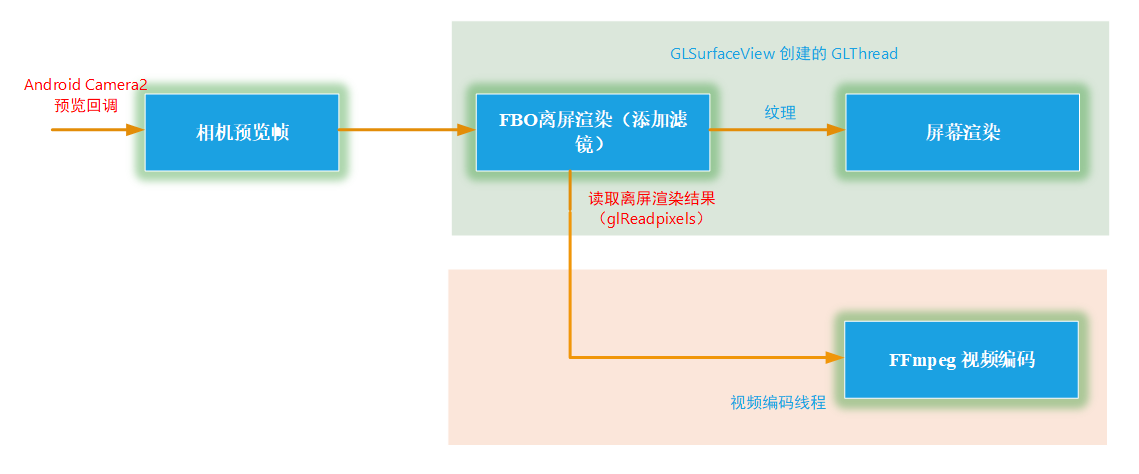

Small video recording with filter

Based on the code in the previous section, we have implemented a short-video recording feature similar to WeChat's. Plain video recording is not the goal of this article, though: there are already plenty of articles on recording video with FFmpeg, so this one goes a step further.

Building on the previous section, we add filters to the short-video recording feature.

Referring to the figure above, we first create an FBO on the GL thread and render the preview frame, with the filter applied, into the texture bound to the FBO. We then use glReadPixels to read back the filtered video frame and hand it to the encoding thread, and finally draw the FBO texture to the screen. This process is described in detail in the article "FFmpeg video player with filter".

Here we define a class, GLCameraRender, which is responsible for off-screen rendering (applying filters) and on-screen rendering of preview frames. For this part of the code, refer to the article on rendering optimization of the FFmpeg video player.

class GLCameraRender: public VideoRender, public BaseGLRender{

public:

//Initializes the width and height of the preview frame

virtual void Init(int videoWidth, int videoHeight, int *dstSize);

//Render a frame of video

virtual void RenderVideoFrame(NativeImage *pImage);

virtual void UnInit();

//Three callbacks of GLSurfaceView

virtual void OnSurfaceCreated();

virtual void OnSurfaceChanged(int w, int h);

virtual void OnDrawFrame();

static GLCameraRender *GetInstance();

static void ReleaseInstance();

//Update the transformation matrix, and the Camera preview frame needs to be rotated

virtual void UpdateMVPMatrix(int angleX, int angleY, float scaleX, float scaleY);

virtual void UpdateMVPMatrix(TransformMatrix * pTransformMatrix);

//After adding the filter, call back the video frame, and then put the video frame with the filter into the encoding queue

void SetRenderCallback(void *ctx, OnRenderFrameCallback callback) {

m_CallbackContext = ctx;

m_RenderFrameCallback = callback;

}

//Load filter material image

void SetLUTImage(int index, NativeImage *pLUTImg);

//Load Java layer shader script

void SetFragShaderStr(int index, char *pShaderStr, int strSize);

private:

GLCameraRender();

virtual ~GLCameraRender();

//Create FBO

bool CreateFrameBufferObj();

void GetRenderFrameFromFBO();

//Create or update filter material texture

void UpdateExtTexture();

static std::mutex m_Mutex;

static GLCameraRender* s_Instance;

GLuint m_ProgramObj = GL_NONE;

GLuint m_FboProgramObj = GL_NONE;

GLuint m_TextureIds[TEXTURE_NUM];

GLuint m_VaoId = GL_NONE;

GLuint m_VboIds[3];

GLuint m_DstFboTextureId = GL_NONE;

GLuint m_DstFboId = GL_NONE;

NativeImage m_RenderImage;

glm::mat4 m_MVPMatrix;

TransformMatrix m_transformMatrix;

int m_FrameIndex;

vec2 m_ScreenSize;

OnRenderFrameCallback m_RenderFrameCallback = nullptr;

void *m_CallbackContext = nullptr;

//Support sliding selection filter function

volatile bool m_IsShaderChanged = false;

volatile bool m_ExtImageChanged = false;

char * m_pFragShaderBuffer = nullptr;

NativeImage m_ExtImage;

GLuint m_ExtTextureId = GL_NONE;

int m_ShaderIndex = 0;

mutex m_ShaderMutex;

};
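
`SetRenderCallback` stores a function pointer together with an opaque `void *` context, which is the usual C-style way to route a callback back into an object. The sketch below shows that pattern in isolation with stand-in types; `Frame`, `FakeRenderer`, `FakeRecorder` and the callback signature are all hypothetical, since the project's `OnRenderFrameCallback` definition is not shown in this article.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a rendered frame.
struct Frame {
    int width;
    int height;
    std::vector<uint8_t> pixels;
};

typedef void (*OnFrameCallback)(void *ctx, const Frame &frame);

class FakeRenderer {
public:
    void SetRenderCallback(void *ctx, OnFrameCallback callback) {
        m_Ctx = ctx;
        m_Callback = callback;
    }

    // Stands in for "draw into the FBO, read the pixels back, hand them off".
    void RenderOneFrame(int width, int height) {
        Frame frame{width, height,
                    std::vector<uint8_t>(static_cast<std::size_t>(width) * height * 4, 0)};
        if (m_Callback) m_Callback(m_Ctx, frame);
    }

private:
    void *m_Ctx = nullptr;
    OnFrameCallback m_Callback = nullptr;
};

class FakeRecorder {
public:
    // Static trampoline: recovers the object from the opaque context pointer.
    static void OnRenderFrame(void *ctx, const Frame &frame) {
        static_cast<FakeRecorder *>(ctx)->Enqueue(frame);
    }

    std::size_t QueuedFrames() const { return m_Frames.size(); }

private:
    void Enqueue(const Frame &frame) { m_Frames.push_back(frame); }

    std::vector<Frame> m_Frames;
};
```

A caller would register the recorder with `renderer.SetRenderCallback(&recorder, &FakeRecorder::OnRenderFrame)`; every frame rendered afterwards lands in the recorder's queue, while frames rendered before registration are simply not forwarded.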

In the JNI layer, we pass in the shader scripts for the different filters and the LUT images that some LUT filters need, so that the user can switch filters by swiping the screen left or right in the Java layer.

extern "C"

JNIEXPORT void JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1SetFilterData(JNIEnv *env,

jobject thiz,

jint index,

jint format,

jint width,

jint height,

jbyteArray bytes) {

int len = env->GetArrayLength (bytes);

uint8_t* buf = new uint8_t[len];

env->GetByteArrayRegion(bytes, 0, len, reinterpret_cast<jbyte*>(buf));

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

if(pContext) pContext->SetLUTImage(index, format, width, height, buf);

delete[] buf;

env->DeleteLocalRef(bytes);

}

extern "C"

JNIEXPORT void JNICALL

Java_com_byteflow_learnffmpeg_media_MediaRecorderContext_native_1SetFragShader(JNIEnv *env,

jobject thiz,

jint index,

jstring str) {

int length = env->GetStringUTFLength(str);

const char* cStr = env->GetStringUTFChars(str, nullptr);

char *buf = static_cast<char *>(malloc(length + 1));

memcpy(buf, cStr, length + 1);

MediaRecorderContext *pContext = MediaRecorderContext::GetContext(env, thiz);

if(pContext) pContext->SetFragShader(index, buf, length + 1);

free(buf);

env->ReleaseStringUTFChars(str, cStr);

}

As before, the complete implementation is available in the project:

https://github.com/githubhaohao/LearnFFmpeg

In addition, if you want more filters, refer to the project OpenGLCamera2, which implements 30 camera filters and special effects:

https://github.com/githubhaohao/OpenGLCamera2
