
1. Introduction to streaming

When I first heard the term "streaming" (pushing a stream), I wondered what this high-end thing could be. Under project pressure, I had to dig into research and development anyway. After a period of study, development, and summarizing, the author finally understands what this "high-end thing" really is.

What is streaming?

Streaming refers to the process of transmitting the content packaged in the capture stage to the server. In practice, it is the process of sending the live video signal onto the network.

In plain terms, streaming means uploading local audio and video data over the network to a cloud/back-end server. As for the "content packaged in the capture stage", the author believes it refers to H.264 NALUs, i.e., encoded data that has not yet been decoded.

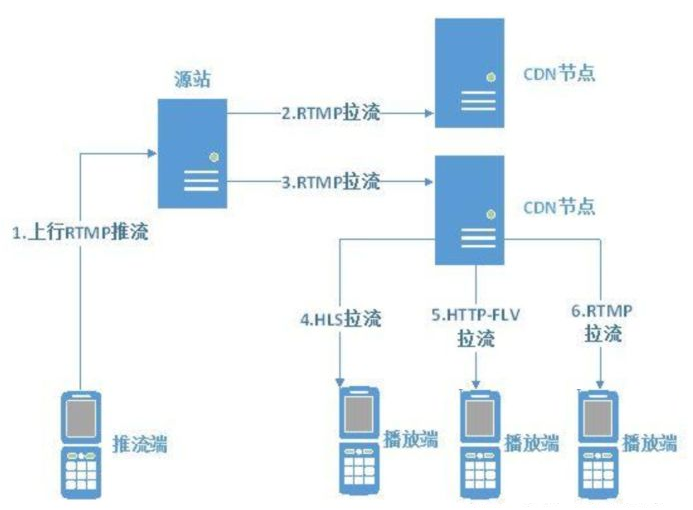

In the figure above, the video and audio data are compressed streams along the whole path: from the "streaming end", to the "source station" (the origin server, i.e., the "server" mentioned above), to the CDN distribution nodes, and finally to the "playback end". For video, that means an H.264 bitstream. Decoding happens only at the playback end.
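For reference, the "H.264 bitstream" mentioned above is, in its common Annex B form, a sequence of NAL units separated by start codes (00 00 01 or 00 00 00 01), where the low 5 bits of each NALU's first byte give its nal_unit_type (for example 1 = non-IDR slice, 5 = IDR slice, 7 = SPS, 8 = PPS). The sketch below splits an in-memory Annex B buffer into NALUs. Nalu and splitAnnexB are hypothetical names, and the sketch does not remove emulation-prevention bytes or handle the length-prefixed layout that FLV and MP4 use to store H.264.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A NAL unit located inside an Annex B buffer (start code excluded).
struct Nalu {
    std::size_t offset;  // index of the first byte after the start code
    std::size_t size;    // payload bytes up to the next start code (or end of buffer)
    int type;            // nal_unit_type: low 5 bits of the NALU header byte
};

// Splits an H.264 Annex B byte stream on 00 00 01 start codes. A 4-byte start
// code (00 00 00 01) is handled too: its leading zero is trimmed below.
std::vector<Nalu> splitAnnexB(const std::vector<std::uint8_t>& buf) {
    std::vector<std::size_t> starts;  // payload offsets right after each start code
    for (std::size_t i = 0; i + 2 < buf.size(); ++i) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            starts.push_back(i + 3);
            i += 2;  // skip the rest of the start code
        }
    }
    std::vector<Nalu> nalus;
    for (std::size_t k = 0; k < starts.size(); ++k) {
        const std::size_t begin = starts[k];
        std::size_t end = (k + 1 < starts.size()) ? starts[k + 1] - 3 : buf.size();
        // A NALU never ends in a zero byte, so trailing zeros belong to the next
        // 4-byte start code or to trailing_zero_8bits padding.
        while (end > begin && buf[end - 1] == 0) --end;
        if (end == begin) continue;  // empty NALU, nothing to report
        nalus.push_back({begin, end - begin, buf[begin] & 0x1F});
    }
    return nalus;
}
```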

It is conceivable that the most popular application of streaming is live broadcasting. For most video portals, the author is unsure whether step 1 (streaming) happens at all: for efficiency, the video data is presumably stored directly on the "source station" and distributed through the CDN on client request. The actual practice still needs to be verified, so the author is just noting it here for now.

Once we understand what streaming is, the next question follows naturally: how do we push a stream? This is actually a complex process, and it also requires understanding the "source station", that is, the streaming server. This article only covers the stage of uploading local audio and video data over the network to the cloud/back-end server. So the first step is to understand how local data is uploaded, and that means understanding the streaming protocol!

Commonly used streaming protocols the author has come across include RTMP, HLS, WebRTC, and HTTP-FLV. This article only covers RTMP, because so far the author has only worked on streaming over RTMP.

RTMP

RTMP stands for Real Time Messaging Protocol. It is a TCP-based application-layer protocol developed by Adobe; in other words, like HTTP/HTTPS, RTMP is an application-layer protocol. RTMP usually carries FLV-format streams over a TCP connection. Note that RTMP is a network transport protocol, while FLV is a video container (packaging) format. FLV was designed for network transmission, so RTMP+FLV can fairly be called a "golden partnership".
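To make the FLV side of this pairing concrete, the sketch below builds the two pieces of FLV framing in memory, following the classic FLV file format specification: a 9-byte file header ("FLV", version 1, a flags byte where 0x04 means audio present and 0x01 means video present, and a 4-byte big-endian header size of 9) followed by a zero PreviousTagSize0, and the 11-byte header that precedes every audio (type 8), video (type 9) or script data (type 18) tag. putBE, flvFileHeader and flvTagHeader are hypothetical names; this illustrates the byte layout and is not FFmpeg's flv muxer.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Appends `value` as a big-endian integer occupying `bytes` bytes.
void putBE(std::vector<std::uint8_t>& out, std::uint32_t value, int bytes) {
    for (int shift = 8 * (bytes - 1); shift >= 0; shift -= 8)
        out.push_back(static_cast<std::uint8_t>((value >> shift) & 0xFF));
}

// 9-byte FLV file header followed by PreviousTagSize0 (always 0): 13 bytes total.
std::vector<std::uint8_t> flvFileHeader(bool hasAudio, bool hasVideo) {
    std::vector<std::uint8_t> out = {'F', 'L', 'V', 0x01};  // signature + version 1
    out.push_back(static_cast<std::uint8_t>((hasAudio ? 0x04 : 0) | (hasVideo ? 0x01 : 0)));
    putBE(out, 9, 4);  // DataOffset: size of this header
    putBE(out, 0, 4);  // PreviousTagSize0
    return out;
}

// 11-byte FLV tag header. tagType: 8 = audio, 9 = video, 18 = script data.
// The millisecond timestamp is split into 24 low bits plus an 8-bit extension.
std::vector<std::uint8_t> flvTagHeader(std::uint8_t tagType, std::uint32_t dataSize,
                                       std::uint32_t timestampMs) {
    assert(dataSize <= 0xFFFFFF);  // DataSize is a 24-bit field
    std::vector<std::uint8_t> out;
    out.push_back(tagType);
    putBE(out, dataSize, 3);
    putBE(out, timestampMs & 0xFFFFFF, 3);                                 // Timestamp
    out.push_back(static_cast<std::uint8_t>((timestampMs >> 24) & 0xFF));  // TimestampExtended
    putBE(out, 0, 3);                                                      // StreamID: always 0
    return out;
}
```

Roughly speaking, when FFmpeg pushes `-f flv` output to an rtmp:// URL, its RTMP layer sends each FLV tag's payload as an RTMP message with the same type (8 audio, 9 video) and timestamp, which is part of why the two fit together so naturally.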

The RTMP family includes the basic protocol and variants such as RTMPT, RTMPS, and RTMPE. It has a few interesting points that readers may want to look at:

- RTMP runs over TCP and uses port 1935 by default. This is the basic form;

- RTMPE adds encryption on top of RTMP;

- RTMPT is encapsulated in HTTP requests, so it can get through firewalls;

- RTMPS is similar to RTMPT but adds TLS/SSL security;

- RTMFP (Real-Time Media Flow Protocol): a UDP-based counterpart of RTMP.
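The default port matters in practice because a push URL such as rtmp://${server}/live/${streamName}, used in the FFmpeg commands in the next section, usually omits it. Below is a hypothetical C++ helper, parseRtmpUrl, that splits such a URL into host, port (falling back to 1935), application and stream name. It is a simplified sketch that assumes the common rtmp://host[:port]/app/stream layout; it handles only the plain rtmp:// scheme, not the RTMPS/RTMPT variants, IPv6 literals or query strings.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>

// Components of an RTMP push URL (hypothetical struct for illustration).
struct RtmpUrl {
    std::string host;
    int port = 1935;     // RTMP default port when the URL omits one
    std::string app;     // first path segment, e.g. "live"
    std::string stream;  // remainder of the path, e.g. the stream name
};

// Parses rtmp://host[:port]/app/stream. Returns std::nullopt for other schemes,
// when a component is missing, or when the port is invalid.
std::optional<RtmpUrl> parseRtmpUrl(const std::string& url) {
    const std::string scheme = "rtmp://";
    if (url.compare(0, scheme.size(), scheme) != 0) return std::nullopt;
    const std::string rest = url.substr(scheme.size());

    const std::size_t slash = rest.find('/');
    if (slash == std::string::npos) return std::nullopt;
    const std::string authority = rest.substr(0, slash);
    const std::string path = rest.substr(slash + 1);

    RtmpUrl out;
    const std::size_t colon = authority.find(':');
    out.host = authority.substr(0, colon);  // whole authority if there is no ':'
    if (colon != std::string::npos) {
        const std::string portStr = authority.substr(colon + 1);
        if (portStr.empty() || portStr.size() > 5 ||
            portStr.find_first_not_of("0123456789") != std::string::npos)
            return std::nullopt;
        out.port = std::stoi(portStr);
        if (out.port == 0 || out.port > 65535) return std::nullopt;
    }

    // The application is the first path segment; the stream name is the rest.
    const std::size_t sep = path.find('/');
    if (sep == std::string::npos) return std::nullopt;
    out.app = path.substr(0, sep);
    out.stream = path.substr(sep + 1);
    if (out.host.empty() || out.app.empty() || out.stream.empty()) return std::nullopt;
    return out;
}
```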

RTMP is a network protocol designed specifically for real-time transmission of multimedia streams. It is mainly used for audio, video, and data communication between the Flash/AIR platforms and streaming media/interactive servers that support RTMP. Adobe no longer supports it, but the protocol is still widely used today.

Readers can learn from other materials how RTMP performs its handshake, establishes the connection, transmits data, and formats its packets; this article will not repeat that. (The main reason is that the author hasn't fully understood it yet. *covers face*)

2. RTMP streaming with FFmpeg

The following describes two ways to push a stream with FFmpeg: the command line (cmd) and code.

Command line (CMD) streaming

FFmpeg's command line has a great many parameters, so here the author only introduces the basic ones they know and shares them with readers.

First, let's take a look at the most basic command for pushing local video files to the server:

```shell
ffmpeg -i ${input_video} -f flv rtmp://${server}/live/${streamName}
```

- -i: specifies the input, followed by the video file path or URL.

- -f: forces FFmpeg to use a given format, followed by the format name.

As mentioned above, RTMP generally carries FLV data, so -f flv is commonly set.

Another basic requirement is pushing the stream without audio, which can also be done:

```shell
ffmpeg -i ${input_video} -vcodec copy -an -f flv rtmp://${server}/live/${streamName}
```

- -vcodec: specifies the video codec. v stands for video and codec for coder/decoder; it is followed by the codec name, and copy means the video stream is copied as-is without re-encoding;

- -acodec: specifies the audio codec in the same way (a stands for audio), followed by the codec name. -an stands for "audio none" and removes the audio.

There are several ways to write these audio/video options; besides the above, there are forms such as -c:v copy -c:a copy.
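When the push is launched from a program instead of typed into a shell, these options are usually assembled into an argument list. The sketch below is a hypothetical helper, buildPushArgs, that produces the arguments for the two commands above using the -c:v/-c:a spelling. The optional -re flag (read the input at its native frame rate, so a file is not pushed faster than real time) is an addition that the commands above do not use, and actually spawning ffmpeg is left out.

```cpp
#include <cassert>
#include <initializer_list>
#include <string>
#include <vector>

// Hypothetical options for launching an FFmpeg RTMP push; names are illustrative.
struct PushOptions {
    std::string input;       // local file path or URL (e.g. an RTSP camera)
    std::string rtmpUrl;     // e.g. rtmp://server/live/streamName
    bool dropAudio = false;  // true -> "-an" (no audio in the pushed stream)
    bool realtime = false;   // true -> "-re" (read input at its native frame rate)
};

// Builds argv for: ffmpeg [-re] -i <input> -c:v copy (-an | -c:a copy) -f flv <url>
std::vector<std::string> buildPushArgs(const PushOptions& opt) {
    std::vector<std::string> args{"ffmpeg"};
    auto add = [&args](std::initializer_list<std::string> items) {
        for (const auto& item : items) args.push_back(item);
    };
    if (opt.realtime) add({"-re"});   // input option, so it must come before -i
    add({"-i", opt.input});
    add({"-c:v", "copy"});            // copy the video stream without re-encoding
    if (opt.dropAudio) {
        add({"-an"});                 // drop audio entirely
    } else {
        add({"-c:a", "copy"});        // copy the audio stream as well
    }
    add({"-f", "flv", opt.rtmpUrl});  // RTMP generally carries FLV
    return args;
}
```

Because each argument stays a separate element, the vector can be passed to an exec-style API (for example POSIX execvp, after converting to a char* array) without any shell quoting.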

For other needs, readers can Google the relevant options on their own.

Code streaming

Examples of pushing a stream with FFmpeg in C/C++ code are easy to find online. The author recommends Lei Xiaohua's (known as "雷神") article "The simplest FFmpeg-based streamer (taking pushing RTMP as an example)", which meets basic streaming needs. However, the author ran into several cases in real application scenarios and finally distilled the following relatively robust, usable code snippet:

```cpp
extern "C" {
#include <libavformat/avformat.h>
#include <libavutil/mathematics.h>
#include <libavutil/time.h>
}

AVFormatContext *mp_ifmt_ctx = nullptr;  // input context (file or RTSP camera)
AVFormatContext *mp_ofmt_ctx = nullptr;  // output context (FLV muxer writing to the RTMP URL)
int m_vs_index = -1;                     // input index of the selected video stream
int m_as_index = -1;                     // input index of the selected audio stream
int64_t start_timestamp;                 // av_gettime() value taken when the first frame is read

// ...

// Returns 0 on success or when the packet is filtered out, 1 when the packet is
// dropped because pts < dts, and a negative AVERROR code when writing fails.
int pushStreaming(AVPacket *pkt, int frm_cnt) {
    // Filtering: the source may contain several audio and video streams, so only one
    // video stream (m_vs_index) and one audio stream (m_as_index) are pushed.
    if (pkt->stream_index == m_vs_index || pkt->stream_index == m_as_index) {
        // Video packets without pts (e.g. a raw H.264 elementary stream) need their pts
        // recalculated from the frame counter and the frame rate.
        if (pkt->pts == AV_NOPTS_VALUE && pkt->stream_index == m_vs_index) {
            AVRational time_base = mp_ifmt_ctx->streams[m_vs_index]->time_base;
            // Duration between two frames (us)
            int64_t calc_duration = (double)AV_TIME_BASE /
                                    av_q2d(mp_ifmt_ctx->streams[m_vs_index]->r_frame_rate);
            // Reset parameters (converted from us to the stream time base)
            pkt->pts = (double)(frm_cnt * calc_duration) /
                       (double)(av_q2d(time_base) * AV_TIME_BASE);
            pkt->dts = pkt->pts;
            pkt->duration = (double)calc_duration /
                            (double)(av_q2d(time_base) * AV_TIME_BASE);
        }

        // The author omits the delay here; readers can enable it as needed. It controls
        // the sending rate, which reduces the pressure on the streaming server.
        // if (pkt->stream_index == m_vs_index) {
        //     AVRational time_base = mp_ifmt_ctx->streams[m_vs_index]->time_base;
        //     AVRational time_base_q = {1, AV_TIME_BASE};
        //     int64_t pts_time = av_rescale_q(pkt->dts, time_base, time_base_q);
        //     int64_t now_time = av_gettime() - start_timestamp;
        //     if (pts_time > now_time) {
        //         av_usleep((unsigned int)(pts_time - now_time));
        //     }
        // }

        // After the (optional) delay, rescale the timestamps from the input to the output
        // stream time base. This assumes the output stream at pkt->stream_index matches the
        // input stream at the same index; if only the selected streams were added to
        // mp_ofmt_ctx, map the index (and update pkt->stream_index) first.
        AVRational istream_base = mp_ifmt_ctx->streams[pkt->stream_index]->time_base;
        AVRational ostream_base = mp_ofmt_ctx->streams[pkt->stream_index]->time_base;
        pkt->pts = av_rescale_q_rnd(pkt->pts, istream_base, ostream_base,
                                    (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt->dts = av_rescale_q_rnd(pkt->dts, istream_base, ostream_base,
                                    (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
        pkt->pts = pkt->pts < 0 ? 0 : pkt->pts;
        pkt->dts = pkt->dts < 0 ? 0 : pkt->dts;
        pkt->duration = av_rescale_q(pkt->duration, istream_base, ostream_base);
        pkt->pos = -1;

        // A packet whose pts precedes its dts is invalid; skip it.
        if (pkt->pts < pkt->dts) {
            return 1;
        }

        // Push the packet to the streaming server (the muxer takes ownership of its data).
        int ret = av_interleaved_write_frame(mp_ofmt_ctx, pkt);
        if (ret < 0) {
            return ret;
        }
    }
    return 0;
}
```
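The timestamp handling in pushStreaming comes down to two pieces of arithmetic: generating a pts for frame n of a stream that has none, and rescaling a timestamp from one time base to another (what av_rescale_q and av_rescale_q_rnd do). The following sketch reimplements both in plain C++ so the numbers can be checked without linking FFmpeg, together with the sleep computation from the commented-out delay block. Rational, rescaleNearest, framePts and pacingDelayUs are hypothetical stand-ins, not FFmpeg APIs. The sketch rounds to nearest with halfway cases away from zero like AV_ROUND_NEAR_INF, but unlike FFmpeg it has no overflow protection and no AV_NOPTS_VALUE handling. It also computes the frame pts in a single integer step rather than from a truncated per-frame duration in microseconds, so rounding does not accumulate at frame rates such as 30000/1001.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for AVRational: one tick lasts num/den seconds.
struct Rational {
    std::int64_t num;
    std::int64_t den;
};

constexpr std::int64_t kTimeBaseUs = 1000000;  // microseconds, like AV_TIME_BASE

// Converts `a` ticks of `from` into ticks of `to`, i.e. a * from / to, rounding to
// nearest with halfway cases away from zero (the AV_ROUND_NEAR_INF behavior).
// No overflow protection: a * from.num * to.den must fit in 64 bits.
std::int64_t rescaleNearest(std::int64_t a, Rational from, Rational to) {
    const std::int64_t num = a * from.num * to.den;
    const std::int64_t den = from.den * to.num;
    assert(den > 0);
    const std::int64_t half = den / 2;
    return num >= 0 ? (num + half) / den : -((-num + half) / den);
}

// pts of frame `frameIndex` at a constant frame rate `fps` (frames per second as
// num/den), expressed in the stream time base `tb`.
std::int64_t framePts(std::int64_t frameIndex, Rational fps, Rational tb) {
    const Rational frameDuration{fps.den, fps.num};  // seconds per frame
    return rescaleNearest(frameIndex, frameDuration, tb);
}

// Pacing: microseconds to wait before sending a packet whose dts (in time base `tb`)
// is `dts`, when `elapsedUs` microseconds have passed since the first frame.
// Returns 0 when the packet is already due.
std::int64_t pacingDelayUs(std::int64_t dts, Rational tb, std::int64_t elapsedUs) {
    const std::int64_t dtsUs = rescaleNearest(dts, tb, Rational{1, kTimeBaseUs});
    return dtsUs > elapsedUs ? dtsUs - elapsedUs : 0;
}
```

For example, framePts(10, {25, 1}, {1, 1200000}) gives 480000, and rescaling that value to a 1/1000 time base (FLV timestamps are in milliseconds) gives 400.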

3. Pitfalls encountered in streaming

The author has encountered two cases of streaming failure:

When pushing the stream from an RTSP camera, as soon as the camera's audio was turned on, the audio track caused av_interleaved_write_frame() to fail. (The author doesn't remember the return code.)

When pushing streams from some Infineon cameras, the push always failed with return code -33.

Both problems were eventually tracked down:

When enabling audio, the operator always turned on two audio tracks at the same time. As soon as one of them was turned off, the stream could be pushed immediately.

These cameras expose multiple video streams at once and apparently transmit all of them over RTSP. The final fix was to filter the video and audio streams explicitly in code, keeping only one video stream and one audio stream.

Both cases come down to one cause: the FLV format can contain at most one video stream and one audio stream.
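In the classic FLV format, a tag only says whether it carries audio, video or script data, and its StreamID field is always 0, so there is no track number that could tell two video streams apart. The fix described above, keeping exactly one video and one audio stream, can be sketched in plain C++ as follows. StreamKind, SelectedStreams and selectStreams are hypothetical names; with FFmpeg, the kinds would come from each input stream's codecpar->codec_type (FFmpeg's av_find_best_stream() can also pick a stream of a given type). The sketch also returns an input-to-output index map, which matters when the output context is given only the kept streams, because pkt->stream_index must then be rewritten before the packet is written.

```cpp
#include <cassert>
#include <vector>

enum class StreamKind { Video, Audio, Other };

// Result of filtering input streams down to what FLV can carry.
struct SelectedStreams {
    int videoIn = -1;          // input index of the kept video stream (-1: none)
    int audioIn = -1;          // input index of the kept audio stream (-1: none)
    std::vector<int> inToOut;  // inToOut[i] = output index, or -1 if stream i is dropped
};

// Keeps the first video stream and the first audio stream and drops everything else.
// Output indices are assigned in input order, mirroring output streams being created
// only for the kept inputs.
SelectedStreams selectStreams(const std::vector<StreamKind>& kinds) {
    SelectedStreams sel;
    sel.inToOut.assign(kinds.size(), -1);
    int nextOut = 0;
    for (int i = 0; i < static_cast<int>(kinds.size()); ++i) {
        bool keep = false;
        if (kinds[i] == StreamKind::Video && sel.videoIn < 0) {
            sel.videoIn = i;
            keep = true;
        } else if (kinds[i] == StreamKind::Audio && sel.audioIn < 0) {
            sel.audioIn = i;
            keep = true;
        }
        if (keep) sel.inToOut[i] = nextOut++;
    }
    return sel;
}
```

In a packet loop, packets whose inToOut entry is -1 would be skipped; for the others, stream_index would be set to the mapped output index before the timestamps are rescaled to that output stream's time base.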

Until then, the author had thought of a video container format as merely a record of a few unrelated parameters, and that understanding completely collapsed. As far as the author knows, MP4 can contain multiple streams at the same time. The waters of multimedia/streaming technology clearly run deep, and there is still much for the author to learn, stumble over, and summarize. Keep going!

Afterword

This article is not rigorous; criticism is welcome.
