Chapter 2 Hive installation

2.1 Hive resource addresses

1. Hive official website

http://hive.apache.org/

2. Documentation

https://cwiki.apache.org/confluence/display/Hive/GettingStarted

3. Download address

http://archive.apache.org/dist/hive/

4. GitHub repository

https://github.com/apache/hive

2.2 Hive installation and deployment

1. Hive installation and configuration

(1) Upload apache-hive-1.2.1-bin.tar.gz to the /opt/software directory on the Linux machine

(2) Extract apache-hive-1.2.1-bin.tar.gz into the /opt/module/ directory

[root@hadoop102 software]$ tar -zxvf apache-hive-1.2.1-bin.tar.gz -C /opt/module/

(3) Rename the extracted apache-hive-1.2.1-bin directory to hive

[root@hadoop102 module]$ mv apache-hive-1.2.1-bin/ hive

(4) In the /opt/module/hive/conf directory, rename hive-env.sh.template to hive-env.sh

[root@hadoop102 conf]$ mv hive-env.sh.template hive-env.sh

(5) Configure the hive-env.sh file

(a) Set the HADOOP_HOME path

export HADOOP_HOME=/opt/module/hadoop-2.7.2

(b) Set the HIVE_CONF_DIR path

export HIVE_CONF_DIR=/opt/module/hive/conf

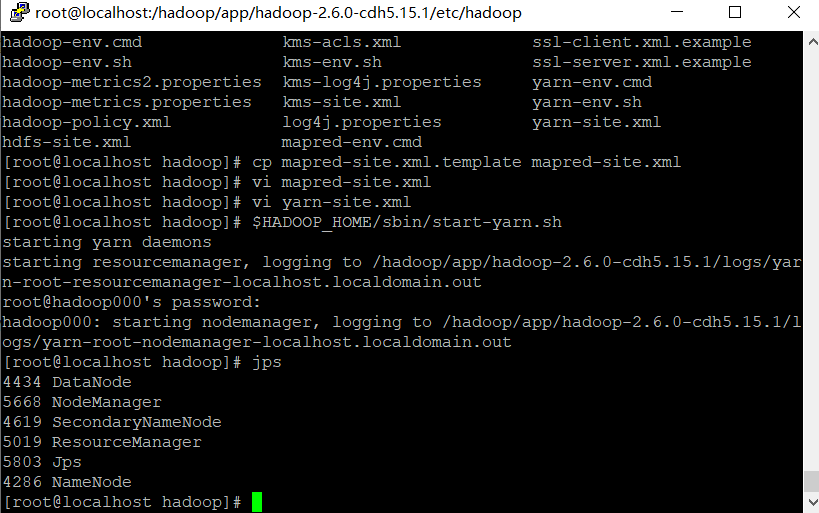
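The edit in step (5) can also be done without opening vi. A minimal sketch, assuming the paths used above (/opt/module/hadoop-2.7.2 and /opt/module/hive/conf); configure_hive_env is an illustrative name, not part of Hive:

```shell
#!/usr/bin/env bash
# Sketch: add the HADOOP_HOME and HIVE_CONF_DIR exports to hive-env.sh.
# Usage: configure_hive_env <conf_dir>   (on the tutorial host: /opt/module/hive/conf)
configure_hive_env() {
    local env_file="$1/hive-env.sh" line
    for line in 'export HADOOP_HOME=/opt/module/hadoop-2.7.2' \
                'export HIVE_CONF_DIR=/opt/module/hive/conf'; do
        # Append only if the exact line is missing, so re-running does not duplicate it.
        grep -qxF "$line" "$env_file" 2>/dev/null || echo "$line" >> "$env_file"
    done
}
```

On the host this would be called as configure_hive_env /opt/module/hive/conf. The grep -x check before appending is what makes the function safe to run more than once.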

2. Hadoop cluster configuration

(1) HDFS and YARN must be started

[root@hadoop102 hadoop-2.7.2]$ sbin/start-dfs.sh

[root@hadoop102 hadoop-2.7.2]$ sbin/start-yarn.sh

(2) Create the /tmp and /user/hive/warehouse directories on HDFS and make them writable by the owning group

[root@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -mkdir /tmp

[root@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -mkdir -p /user/hive/warehouse

[root@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /tmp

[root@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /user/hive/warehouse
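The four commands in step (2) can be wrapped in a loop. A minimal sketch, meant to be run from the Hadoop home directory as above; DRY_RUN is an illustrative switch of this sketch (not a Hadoop option), and -mkdir -p is used for both paths so that re-running does not fail when /tmp already exists:

```shell
#!/usr/bin/env bash
# Sketch: create the HDFS directories Hive needs and make them group-writable.
# Run from the Hadoop home directory (e.g. /opt/module/hadoop-2.7.2).
# DRY_RUN=1 only prints the commands instead of executing them.
prepare_hive_hdfs_dirs() {
    local dir
    for dir in /tmp /user/hive/warehouse; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "bin/hadoop fs -mkdir -p $dir"
            echo "bin/hadoop fs -chmod g+w $dir"
        else
            bin/hadoop fs -mkdir -p "$dir"
            bin/hadoop fs -chmod g+w "$dir"
        fi
    done
}
```

DRY_RUN=1 prepare_hive_hdfs_dirs previews the four commands; calling prepare_hive_hdfs_dirs without it runs them against the cluster.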

3. Basic Hive operations

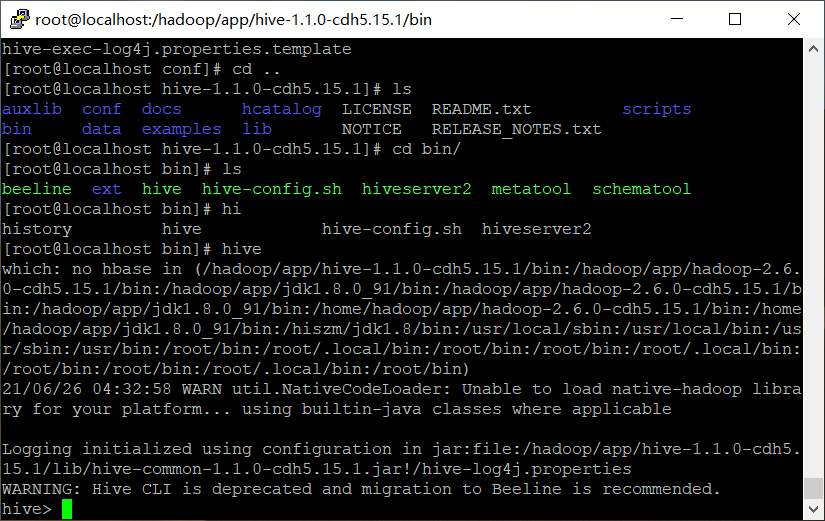

(1) Start hive

[root@hadoop102 hive]$ bin/hive

(2) View the databases

hive> show databases;

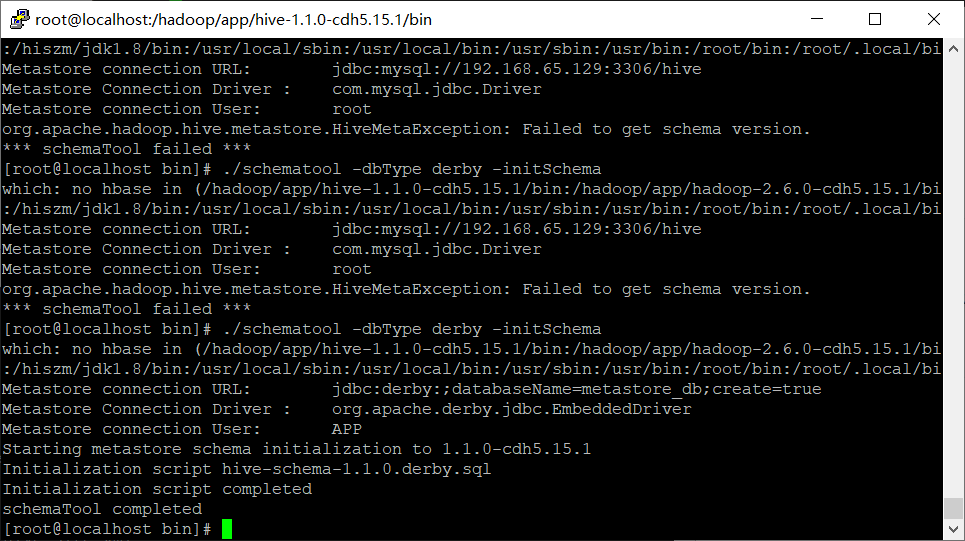

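If show databases; fails with the error below, the metastore schema has to be initialized first. Run the following from Hive's bin directory:

./schematool -dbType derby -initSchema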

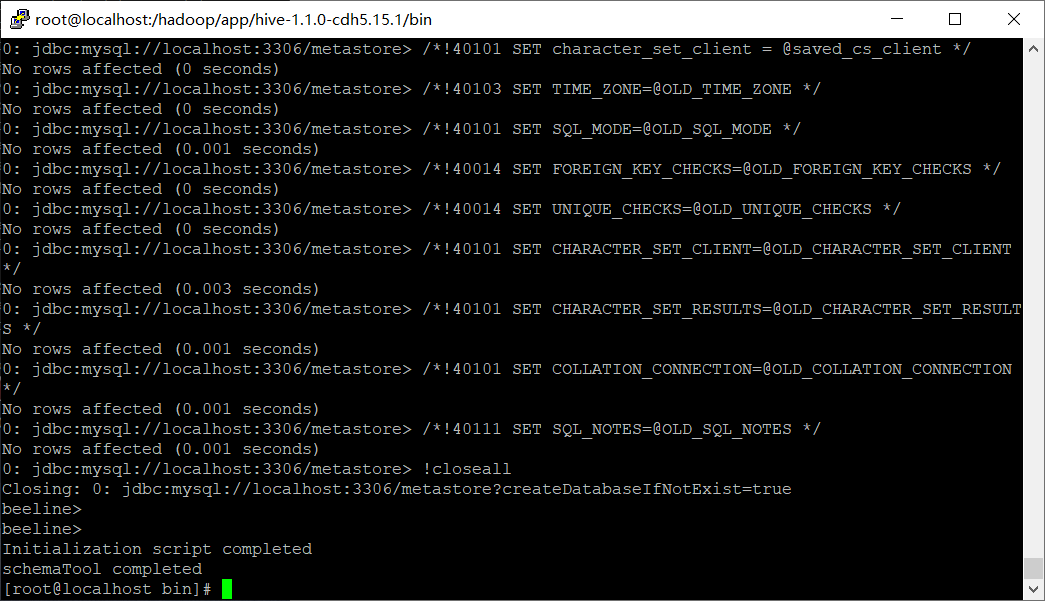

hive> show databases;
FAILED: SemanticException org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

The fix is simply to initialize the metastore database:

[root@localhost bin]# ./schematool -dbType derby -initSchema
which: no hbase in (/hadoop/app/hive-1.1.0-cdh5.15.1/bin:/hadoop/app/hadoop-2.6.0-cdh5.15.1/bi:/hiszm/jdk1.8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/root/.local/bi
Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true
Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver
Metastore connection User: APP
Starting metastore schema initialization to 1.1.0-cdh5.15.1
Initialization script hive-schema-1.1.0.derby.sql
Initialization script completed
schemaTool completed

(3) Switch to the default database

hive> use default;

(4) Display the tables in the default database

hive> show tables;

(5) Create a table

hive> create table student(id int, name string);

(6) List the tables in the database again

hive> show tables;

(7) View table structure

hive> desc student;

(8) Insert data into a table

hive> insert into student values(1000,"ss");

(9) Query the data in the table

hive> select * from student;

(10) Exit hive

hive> quit;

The complete session looks like this:

[root@localhost bin]# hive

which: no hbase in (/hadoop/app/hive-1.1.0-cdh5.15.1/bin:/hadoop/app/hadoop-2.6.0-cdh5.15.1/bin:/hadoop/app/jdk1.8.0_91/bin:/hiszm/jdk1.8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/root/.local/bin:/root/bin)

21/06/26 05:19:57 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Logging initialized using configuration in jar:file:/hadoop/app/hive-1.1.0-cdh5.15.1/lib/hive-common-1.1.0-cdh5.15.1.jar!/hive-log4j.properties

WARNING: Hive CLI is deprecated and migration to Beeline is recommended.

hive> show databases;

OK

default

Time taken: 3.22 seconds, Fetched: 1 row(s)

hive> use default

> ;

OK

Time taken: 0.043 seconds

hive> show tables;

OK

Time taken: 0.041 seconds

hive> create table student(id int, name string);

OK

Time taken: 0.326 seconds

hive> show tables;

OK

student

Time taken: 0.018 seconds, Fetched: 1 row(s)

hive> desc student;

OK

id int

name string

Time taken: 0.119 seconds, Fetched: 2 row(s)

hive> insert into student values(1000,"ss");

Query ID = root_20210626072828_6d53bcd2-305b-4c7a-950e-3185ef9d1b73

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1624695917121_0001, Tracking URL = http://localhost:8088/proxy/application_1624695917121_0001/

Kill Command = /hadoop/app/hadoop-2.6.0-cdh5.15.1/bin/hadoop job -kill job_1624695917121_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2021-06-26 07:28:51,889 Stage-1 map = 0%, reduce = 0%

2021-06-26 07:28:57,038 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.21 sec

MapReduce Total cumulative CPU time: 2 seconds 210 msec

Ended Job = job_1624695917121_0001

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to: hdfs://hadoop000:8020/user/hive/warehouse/student/.hive-staging_hive_2021-06-26_07-28-44_192_2202570064323571586-1/-ext-10000

Loading data to table default.student

Table default.student stats: [numFiles=1, numRows=1, totalSize=8, rawDataSize=7]

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 2.21 sec HDFS Read: 3737 HDFS Write: 79 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 210 msec

OK

Time taken: 14.075 seconds

hive> select * from student;

OK

1000 ss

Time taken: 0.038 seconds, Fetched: 1 row(s)

hive>

2.3 Case: importing a local file into Hive

Requirement

Import the data in the local file /opt/module/datas/student.txt into the Hive table student (id int, name string).

1. Data preparation

Prepare the data in the /opt/module/datas directory

(1) Create the datas directory under /opt/module/

[root@hadoop102 module]$ mkdir datas

(2) Create a student.txt file in the /opt/module/datas/ directory and add data

[root@hadoop102 datas]$ touch student.txt
[root@hadoop102 datas]$ vi student.txt
1001	zhangshan
1002	lishi
1003	zhaoliu

Note: the two columns must be separated by a tab character.
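Typing the file in vi makes it easy to end up with spaces instead of tabs, and such lines would load as NULL values. A minimal sketch that writes the file with printf and checks the delimiter; DATA_DIR is an assumption of this sketch and falls back to a scratch directory, so set DATA_DIR=/opt/module/datas on the tutorial host:

```shell
#!/usr/bin/env bash
# Sketch: write student.txt with real tab separators and verify them.
DATA_DIR="${DATA_DIR:-$(mktemp -d)}"
mkdir -p "$DATA_DIR"
# printf reuses the format for each pair of arguments, giving one line per student.
printf '%s\t%s\n' 1001 zhangshan 1002 lishi 1003 zhaoliu > "$DATA_DIR/student.txt"

# Every line must split into exactly two tab-separated fields,
# matching FIELDS TERMINATED BY '\t' in the table definition.
awk -F'\t' 'NF != 2 { bad = 1; print "line " NR ": " NF " field(s)" } END { exit bad }' "$DATA_DIR/student.txt" \
    && echo "student.txt is tab-delimited"
```

The awk check prints any line that does not have exactly two fields and exits non-zero in that case.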

2. Hive actual operation

(1) Start hive

[root@hadoop102 hive]$ bin/hive

(2) Display the databases

hive> show databases;

(3) Use the default database

hive> use default;

(4) Display the tables in the default database

hive> show tables;

(5) Drop the previously created student table

hive> drop table student;

(6) Create the student table, declaring '\t' as the field delimiter

hive> create table student(id int, name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

(7) Load the /opt/module/datas/student.txt file into the student table

hive> load data local inpath '/opt/module/datas/student.txt' into table student;

(8) Query the results in Hive

hive> select * from student;
OK
1001 zhangshan
1002 lishi
1003 zhaoliu
Time taken: 0.266 seconds, Fetched: 3 row(s)

3. Problems encountered

If you open another client window and start hive there as well, a java.sql.SQLException is thrown:

Exception in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
        at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:677)
        at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)
        at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)
        at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:503)
        ... 8 more

The reason is that by default the metastore is stored in the embedded Derby database, and embedded Derby allows only one process to open the database at a time. Storing the metastore in MySQL is recommended.

2.4 MySQL installation

2.4.1 Preparing the installation package

1. Check whether MySQL is already installed; if it is, uninstall it

(1) Check

[root@hadoop102 Desktop]# rpm -qa|grep mysql

mysql-libs-5.1.73-7.el6.x86_64

(2) Uninstall

[root@hadoop102 Desktop]# rpm -e --nodeps mysql-libs-5.1.73-7.el6.x86_64

2. Unzip the mysql-libs.zip file into the current directory

[root@hadoop102 software]# unzip mysql-libs.zip

[root@hadoop102 software]# ls

mysql-libs.zip

mysql-libs

3. Enter the mysql-libs folder

[root@hadoop102 mysql-libs]# ll

total 76048
-rw-r--r--. 1 root root 18509960 Mar 26  2015 MySQL-client-5.6.24-1.el6.x86_64.rpm
-rw-r--r--. 1 root root  3575135 Dec  1  2013 mysql-connector-java-5.1.27.tar.gz
-rw-r--r--. 1 root root 55782196 Mar 26  2015 MySQL-server-5.6.24-1.el6.x86_64.rpm

2.4.2 Installing the MySQL server

1. Install the MySQL server

[root@hadoop102 mysql-libs]# rpm -ivh MySQL-server-5.6.24-1.el6.x86_64.rpm

2. View the generated random password

[root@hadoop102 mysql-libs]# cat /root/.mysql_secret

OEXaQuS8IWkG19Xs YLxnB8x8fC7txIm6

3. Check the MySQL status

[root@hadoop102 mysql-libs]# service mysql status

4. Start MySQL

[root@hadoop102 mysql-libs]# service mysql start

2.4.3 Installing the MySQL client

1. Install the MySQL client

[root@hadoop102 mysql-libs]# rpm -ivh MySQL-client-5.6.24-1.el6.x86_64.rpm

2. Connect to MySQL

[root@hadoop102 mysql-libs]# mysql -uroot -pOEXaQuS8IWkG19Xs

3. Change the password

mysql> SET PASSWORD=PASSWORD('000000');

4. Exit MySQL

mysql> exit

2.4.4 Host configuration in the MySQL user table

After this configuration, root can log in to the MySQL database from any host with its password.

-- 1. Log in to MySQL
[root@hadoop102 mysql-libs]# mysql -uroot -p000000
-- 2. Show the databases
mysql> show databases;
-- 3. Use the mysql database
mysql> use mysql;
-- 4. Show all tables in the mysql database
mysql> show tables;
-- 5. Show the structure of the user table
mysql> desc user;
-- 6. Query the user table
mysql> select User, Host, Password from user;
-- 7. Modify the user table: change Host to %
mysql> update user set host='%' where host='localhost';
-- 8. Delete the root user's other host entries
mysql> delete from user where Host='hadoop102';
mysql> delete from user where Host='127.0.0.1';
mysql> delete from user where Host='::1';
-- 9. Reload the privileges
mysql> flush privileges;
-- 10. Exit
mysql> quit;

2.5 Storing the Hive metadata in MySQL

2.5.1 Copying the driver

1. In the /opt/software/mysql-libs directory, extract the mysql-connector-java-5.1.27.tar.gz driver package

[root@hadoop102 mysql-libs]# tar -zxvf mysql-connector-java-5.1.27.tar.gz

2. Copy mysql-connector-java-5.1.27-bin.jar from the /opt/software/mysql-libs/mysql-connector-java-5.1.27 directory to /opt/module/hive/lib/

[root@hadoop102 mysql-connector-java-5.1.27]# cp mysql-connector-java-5.1.27-bin.jar /opt/module/hive/lib/

2.5.2 Configuring the metastore to use MySQL

1. Create hive-site.xml in the /opt/module/hive/conf directory

[root@hadoop102 conf]$ touch hive-site.xml

[root@hadoop102 conf]$ vi hive-site.xml

2. Following the configuration parameters in the official documentation, copy the content below into hive-site.xml. The ConnectionPassword value must be the MySQL root password.

https://cwiki.apache.org/confluence/display/Hive/AdminManual+MetastoreAdmin

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
    <description>password to use against metastore database</description>
  </property>
</configuration>

3. After configuring, if Hive behaves abnormally, restart the virtual machine (after the restart, remember to start the Hadoop cluster again).
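The same file can be generated from a script, which is handy when the VM has to be rebuilt. A minimal sketch; write_hive_site is an illustrative name, the descriptions are omitted, and the password is passed as an argument. It is inserted verbatim, so it must not contain XML special characters such as & or <:

```shell
#!/usr/bin/env bash
# Sketch: generate hive-site.xml for a MySQL-backed metastore.
# Usage: write_hive_site <conf_dir> <mysql_root_password>
write_hive_site() {
    local conf_dir="$1" password="$2"
    mkdir -p "$conf_dir"
    # Unquoted heredoc so that ${password} is expanded.
    cat > "$conf_dir/hive-site.xml" <<EOF
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${password}</value>
  </property>
</configuration>
EOF
}
```

On the tutorial host this would be write_hive_site /opt/module/hive/conf 000000, using whatever password was actually set for root.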

2.5.3 multi window start Hive test

1. Start MySQL first

[root@hadoop102 mysql-libs]$ mysql -uroot -p000000

How many databases are there

mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | performance_schema | | test | +--------------------+

2. Open multiple windows again and start hive respectively

Initialize data source

schematool -initSchema -dbTyper mysql -verbose

[root@hadoop102 hive]$ bin/hive

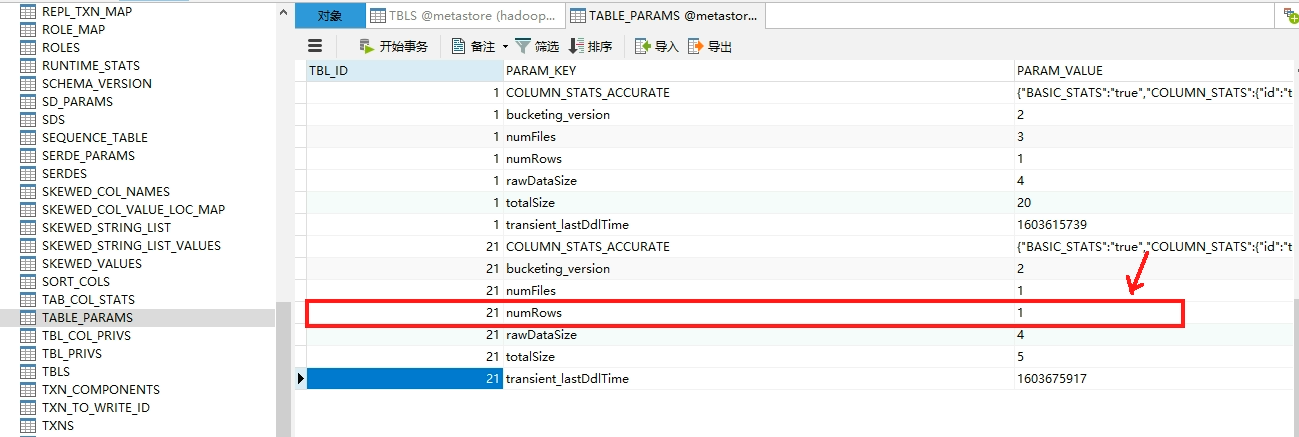

3. After you start hive, go back to the MySQL window to view the database. The metastore database is added

`mysql> show databases;`

+--------------------+ | Database | +--------------------+ | information_schema | | metastore | | mysql | | performance_schema | | test | +--------------------+

To run the metastore and HiveServer2 as standalone services, hive-site.xml additionally needs hive.metastore.uris and the HiveServer2 settings. The complete file:

[root@localhost conf]# cat hive-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
    <description>password to use against metastore database</description>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://localhost:9083</value>
  </property>
  <!-- HiveServer2 settings -->
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>localhost</value>
  </property>
</configuration>

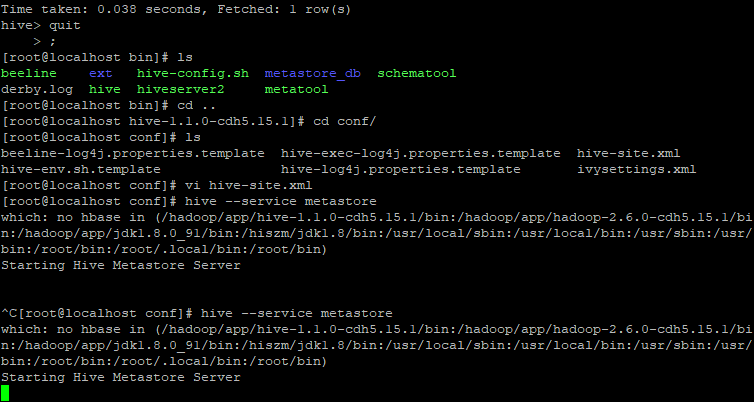

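Start the two services (each command stays in the foreground, so use a separate window for each):

[root@localhost conf]# hive --service metastore

[root@localhost conf]# hive --service hiveserver2

To avoid starting them by hand every time, write a management script:

[root@localhost bin]# touch hiveservices.sh
[root@localhost bin]# vi hiveservices.sh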

#!/bin/bash
# Assumes HIVE_HOME is set and $HIVE_HOME/bin is on PATH
HIVE_LOG_DIR=$HIVE_HOME/logs
if [ ! -d "$HIVE_LOG_DIR" ]
then
    mkdir -p "$HIVE_LOG_DIR"
fi

# Check whether a process is running normally. Parameter 1 is the process name, parameter 2 is the process port
function check_process()
{
    pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i $1 | awk '{print $2}')
    ppid=$(netstat -nltp 2>/dev/null | grep $2 | awk '{print $7}' | cut -d '/' -f 1)
    echo $pid
    # Healthy only if the PID listening on the port is among the matched process PIDs
    [[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1
}

function hive_start()
{
    metapid=$(check_process HiveMetastore 9083)
    cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"
    [ -z "$metapid" ] && eval $cmd || echo "Metastore service already started"
    server2pid=$(check_process HiveServer2 10000)
    cmd="nohup hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"
    [ -z "$server2pid" ] && eval $cmd || echo "HiveServer2 service already started"
}

function hive_stop()
{
    metapid=$(check_process HiveMetastore 9083)
    [ "$metapid" ] && kill $metapid || echo "Metastore service not started"
    server2pid=$(check_process HiveServer2 10000)
    [ "$server2pid" ] && kill $server2pid || echo "HiveServer2 service not started"
}

case $1 in
"start")
    hive_start
    ;;
"stop")
    hive_stop
    ;;
"restart")
    hive_stop
    sleep 2
    hive_start
    ;;
"status")
    check_process HiveMetastore 9083 >/dev/null && echo "Metastore service running normally" || echo "Metastore service running abnormally"
    check_process HiveServer2 10000 >/dev/null && echo "HiveServer2 service running normally" || echo "HiveServer2 service running abnormally"
    ;;
*)
    echo Invalid Args!
    echo 'Usage: '$(basename $0)' start|stop|restart|status'
    ;;
esac
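After chmod +x hiveservices.sh, the script is run as hiveservices.sh start, stop, restart or status. Its health check compares the PIDs found by ps with the PID that owns the port in netstat -nltp output. That step can be tried without a running Hive by feeding it a sample line. The netstat line below is made up for illustration (PID 21141 is taken from the ps output that follows), and matching on ":<port> " is a slightly stricter variant of the script's plain grep $2:

```shell
#!/usr/bin/env bash
# Sketch of the port-to-PID step of check_process, run against sample text.
# This netstat -nltp line is a made-up example, not captured output.
sample_netstat='tcp        0      0 0.0.0.0:9083            0.0.0.0:*               LISTEN      21141/java'

# Column 7 is "PID/Program name"; cut keeps the part before the slash.
port_pid() {
    echo "$sample_netstat" | grep ":$1 " | awk '{print $7}' | cut -d '/' -f 1
}

pid=21141
ppid=$(port_pid 9083)
# Same test as hiveservices.sh: a non-empty port owner that appears among the matched PIDs.
if [[ "$pid" =~ "$ppid" ]] && [ -n "$ppid" ]; then
    echo "port 9083 is owned by PID $ppid"
fi
```

The extra [ -n "$ppid" ] matters: with an empty right-hand side, the =~ test would match any PID.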

The process lookup used in check_process can be tried by hand:

[root@localhost bin]# ps -ef | grep -v grep | grep -i HiveMetastore

root 21141 6747 0 08:40 pts/1 00:00:07 /hadoop/app/jdk1.8.0_91/bin/java -Xmx256m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hadoop/app/hadoop-2.6.0-cdh5.15.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hadoop/app/hadoop-2.6.0-cdh5.15.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Xmx512m -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /hadoop/app/hive-1.1.0-cdh5.15.1/lib/hive-service-1.1.0-cdh5.15.1.jar org.apache.hadoop.hive.metastore.HiveMetaStore

Without grep -v grep, the grep command itself also shows up in the result:

[root@localhost bin]# ps -ef | grep -i HiveMetastore

root 21141 6747 0 08:40 pts/1 00:00:07 /hadoop/app/jdk1.8.0_91/bin/java -Xmx256m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hadoop/app/hadoop-2.6.0-cdh5.15.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hadoop/app/hadoop-2.6.0-cdh5.15.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hadoop/app/hadoop-2.6.0-cdh5.15.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Xmx512m -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /hadoop/app/hive-1.1.0-cdh5.15.1/lib/hive-service-1.1.0-cdh5.15.1.jar org.apache.hadoop.hive.metastore.HiveMetaStore

root 22794 3337 0 09:14 pts/0 00:00:00 grep --color=auto -i HiveMetastore

Piping through awk keeps only the PID column:

[root@localhost bin]# ps -ef | grep -v grep | grep -i HiveMetastore | awk '{print $2}'

21141

2.6 hivejdbc access

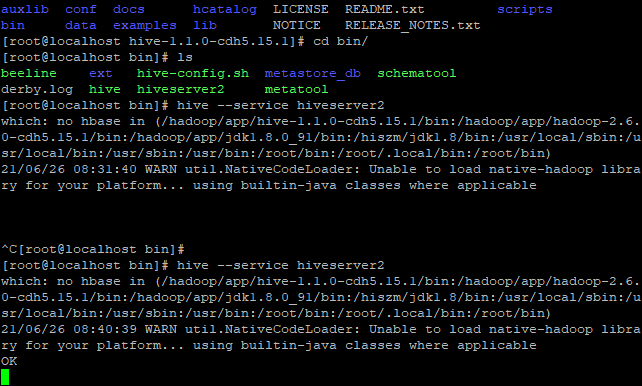

Start the hiveserver2 service

[root@localhost hive-1.1.0-cdh5.15.1]# tail -f -n 200 /tmp/root/hive.log

[root@localhost conf]# hive --service metastore

[root@hadoop102 hive]$ bin/hiveserver2

[root@localhost conf]# hive --service hiveserver2

Start beeline

[root@hadoop102 hive]$ bin/beeline jdbc:hive2://loaclhost:10000

Connect to hiveserver

beeline> !connect jdbc:hive2://loaclhost:10000 (enter) Connecting to jdbc:hive2://hadoop102:10000 Enter username for jdbc:hive2://Hadoop 102:10000: root (enter) Enter password for jdbc:hive2://Hadoop 102:10000: (enter directly) Connected to: Apache Hive (version 1.2.1) Driver: Hive JDBC (version 1.2.1) Transaction isolation: TRANSACTION_REPEATABLE_READ 0: jdbc:hive2://hadoop102:10000> show databases; +----------------+--+ | database_name | +----------------+--+ | default | | hive_db2 | +----------------+--+
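Instead of typing !connect at the prompt, beeline can also take the URL with its -u option and the user name with -n. A minimal sketch that builds the URL in the jdbc:hive2://host:port/database form; hs2_url is an illustrative helper, and its defaults (localhost, 10000, default) mirror hive.server2.thrift.bind.host, hive.server2.thrift.port and the default database configured above:

```shell
#!/usr/bin/env bash
# Sketch: build a HiveServer2 JDBC URL; arguments are host, port and database.
hs2_url() {
    local host="${1:-localhost}" port="${2:-10000}" db="${3:-default}"
    echo "jdbc:hive2://${host}:${port}/${db}"
}

# Print the beeline command rather than running it, so it can be checked first.
echo "bin/beeline -u $(hs2_url hadoop102) -n root"
```

Printing the command first is deliberate: a typo in the host name (such as loaclhost) is easy to spot before beeline tries to connect.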

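2.7 Common Hive interactive commands

Start the metastore and HiveServer2 in the background first:

[root@hadoop202 hive]$ nohup hive --service metastore 2>&1 &

[root@hadoop202 hive]$ nohup hive --service hiveserver2 2>&1 &

List the available command-line options:

[root@localhost bin]# hive -help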

which: no hbase in (/hadoop/app/hive-1.1.0-cdh5.15.1/bin:/hadoop/app/hadoop-2.6.0-cdh5.15.1/bin:/hadoop/app/jdk1.8.0_91/bin:/hiszm/jdk1.8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/root/.local/bin:/root/bin)

21/06/26 21:14:35 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

usage: hive
 -d,--define <key=value>          Variable subsitution to apply to hive
                                  commands. e.g. -d A=B or --define A=B
    --database <databasename>     Specify the database to use
 -e <quoted-query-string>         SQL from command line
 -f <filename>                    SQL from files
 -H,--help                        Print help information
    --hiveconf <property=value>   Use value for given property
    --hivevar <key=value>         Variable subsitution to apply to hive
                                  commands. e.g. --hivevar A=B
 -i <filename>                    Initialization SQL file
 -S,--silent                      Silent mode in interactive shell
 -v,--verbose                     Verbose mode (echo executed SQL to the
                                  console)

1. "-e" executes SQL statements without entering the interactive Hive shell

[root@hadoop102 hive]$ bin/hive -e "select id from student;"

2. "-f" executes the SQL statements in a script file

- Create a hivef.sql file in the /opt/module/datas directory

[root@hadoop102 datas]$ touch hivef.sql

Write a valid SQL statement in the file

select * from student;

- Execute the SQL statement in the file

[root@hadoop102 hive]$ bin/hive -f /opt/module/datas/hivef.sql

- Execute the SQL statements in the file and write the results to a file

[root@hadoop102 hive]$ bin/hive -f /opt/module/datas/hivef.sql > /opt/module/datas/hive_result.txt
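Both steps of the -f example can be scripted. A minimal sketch; SQL_DIR is an assumption of this sketch that falls back to a scratch directory (set SQL_DIR=/opt/module/datas on the tutorial host), and hive is only invoked if it is found on PATH:

```shell
#!/usr/bin/env bash
# Sketch: write hivef.sql non-interactively and run it with hive -f.
SQL_DIR="${SQL_DIR:-$(mktemp -d)}"
mkdir -p "$SQL_DIR"
# Quoted 'EOF' keeps the SQL exactly as written (no shell expansion of *).
cat > "$SQL_DIR/hivef.sql" <<'EOF'
use default;
select * from student;
EOF

# Only call hive when it is on PATH, so the sketch is safe to try anywhere.
if command -v hive >/dev/null 2>&1; then
    hive -f "$SQL_DIR/hivef.sql" > "$SQL_DIR/hive_result.txt"
else
    echo "hive not found; would run: hive -f $SQL_DIR/hivef.sql > $SQL_DIR/hive_result.txt"
fi
```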

2.8 Other Hive commands

1. Exit the Hive window:

hive(default)>exit;

hive(default)>quit;

In newer versions of Hive the two are identical. In older versions they differed:

exit: implicitly commits data before exiting;

quit: exits without committing data.

2. View the HDFS file system from the Hive CLI

hive(default)>dfs -ls /;

3. View the local file system from the Hive CLI

hive(default)>! ls /opt/module/datas;

4. View all commands previously entered in Hive

(1) Go to the current user's home directory, /root or /home/root

(2) View the .hivehistory file

[root@hadoop102 ~]$ cat .hivehistory

2.9 Common Hive property configuration

2.9.1 Hive data warehouse location

1) The default data warehouse location is the /user/hive/warehouse path on HDFS.

2) No folder is created under the warehouse directory for the default database. A table that belongs to the default database gets its folder directly under the warehouse directory.

3) To change the default warehouse location, copy the following configuration from hive-default.xml.template into hive-site.xml and edit the value.

<property>
  <name>hive.metastore.warehouse.dir</name>
  <value>/user/hive/warehouse</value>
  <description>location of default database for the warehouse</description>
</property>

Give users in the same group write permission on the directory:

bin/hdfs dfs -chmod g+w /user/hive/warehouse

2.9.2 Displaying information after a query

1) Add the following configuration to hive-site.xml to show the current database in the prompt and the column headers in query results.

<property>
  <name>hive.cli.print.header</name>
  <value>true</value>
</property>
<property>
  <name>hive.cli.print.current.db</name>
  <value>true</value>
</property>

2) Restart Hive and compare the output before and after the configuration.

[root@localhost conf]# vi hive-site.xml
[root@localhost conf]# ../bin/hive
which: no hbase in (/hadoop/app/hive-1.1.0-cdh5.15.1/bin:/hadoop/app/hadoop-2.6.0-cdh5.15.1/bin:/hadoop/app/jdk1.8.0_91/bin:/hiszm/jdk1.8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/root/.local/bin:/root/bin)
21/06/26 21:30:42 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Logging initialized using configuration in jar:file:/hadoop/app/hive-1.1.0-cdh5.15.1/lib/hive-common-1.1.0-cdh5.15.1.jar!/hive-log4j.properties
WARNING: Hive CLI is deprecated and migration to Beeline is recommended.

After the configuration:

hive (default)> select * from student;
OK
student.id student.name
1000 ss
1000 ss
Time taken: 0.047 seconds, Fetched: 2 row(s)

Before the configuration:

hive > select * from student;
OK
1000 ss
1000 ss
Time taken: 0.047 seconds, Fetched: 2 row(s)
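The properties in 1) can also be added from the shell. A minimal sketch, assuming hive-site.xml ends with a </configuration> line as in the files above; add_hive_property is an illustrative name, and it skips a property whose name is already present so that it can be re-run:

```shell
#!/usr/bin/env bash
# Sketch: insert a <property> block just before </configuration> in hive-site.xml.
# Usage: add_hive_property <file> <name> <value>
add_hive_property() {
    local file="$1" name="$2" value="$3" tmp
    # Leave the file alone if the property is already defined.
    grep -qF "<name>${name}</name>" "$file" && return 0
    tmp=$(mktemp)
    awk -v n="$name" -v v="$value" '
        /<\/configuration>/ {
            print "  <property>"
            print "    <name>" n "</name>"
            print "    <value>" v "</value>"
            print "  </property>"
        }
        { print }
    ' "$file" > "$tmp"
    # Copy back with cat so the original file keeps its permissions.
    cat "$tmp" > "$file" && rm -f "$tmp"
}

# On the tutorial host, for example:
# add_hive_property /opt/module/hive/conf/hive-site.xml hive.cli.print.header true
# add_hive_property /opt/module/hive/conf/hive-site.xml hive.cli.print.current.db true
```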

2.9.3 Hive run-log configuration (optional)

[root@localhost tmp]# ls /tmp/root/
dc5329d8-11c0-49ff-87f8-d76e83801e75  hive.log  dc5329d8-11c0-49ff-87f8-d76e83801e752257204420012449420.pipeout  operation_logs  dc5329d8-11c0-49ff-87f8-d76e83801e753902258702291924058.pipeout
[root@localhost tmp]# tail /tmp/root/hive.log
2021-06-26 08:45:08,764 INFO [HiveServer2-Background-Pool: Thread-41]: ql.Driver (Driver.java:execute(1658)) - Executing command(queryId=root_20210626084545_674ddfa4-27e7-41a7-a6c7-bf25cea5b0c5): show databases
2021-06-26 08:45:08,770 INFO [HiveServer2-Background-Pool: Thread-41]: ql.Driver (Driver.java:launchTask(2052)) - Starting task [Stage-0:DDL] in serial mode
2021-06-26 08:45:08,816 INFO [HiveServer2-Background-Pool: Thread-41]: ql.Driver (Driver.java:execute(1960)) - Completed executing command(queryId=root_20210626084545_674ddfa4-27e7-41a7-a6c7-bf25cea5b0c5); Time taken: 0.052 seconds
2021-06-26 08:45:08,816 INFO [HiveServer2-Background-Pool: Thread-41]: ql.Driver (SessionState.java:printInfo(1087)) - OK
2021-06-26 09:38:35,711 WARN [pool-4-thread-1]: util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
2021-06-26 09:38:38,790 INFO [main]: session.SessionState (SessionState.java:createPath(703)) - Created local directory: /tmp/dc5329d8-11c0-49ff-87f8-d76e83801e75_resources
2021-06-26 09:38:38,802 INFO [main]: session.SessionState (SessionState.java:createPath(703)) - Created HDFS directory: /tmp/hive/root/dc5329d8-11c0-49ff-87f8-d76e83801e75
2021-06-26 09:38:38,806 INFO [main]: session.SessionState (SessionState.java:createPath(703)) - Created local directory: /tmp/root/dc5329d8-11c0-49ff-87f8-d76e83801e75
2021-06-26 09:38:38,808 INFO [main]: session.SessionState (SessionState.java:createPath(703)) - Created HDFS directory: /tmp/hive/root/dc5329d8-11c0-49ff-87f8-d76e83801e75/_tmp_space.db
2021-06-26 09:38:38,809 INFO [main]: session.SessionState (SessionState.java:start(587)) - No Tez session required at this point. hive.execution.engine=mr.
[root@localhost tmp]#

1. By default, Hive's log is stored in /tmp/root/hive.log (the directory is named after the current user)

2. To save Hive's log to /opt/module/hive/logs instead:

- Rename /opt/module/hive/conf/hive-log4j.properties.template to hive-log4j.properties

[root@hadoop102 conf]$ pwd
/opt/module/hive/conf
[root@hadoop102 conf]$ mv hive-log4j.properties.template hive-log4j.properties

- Modify the log storage location in the hive-log4j.properties file

hive.log.dir=/opt/module/hive/logs
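The edit can also be applied with sed. A minimal sketch, assuming GNU sed (for sed -i); it replaces an existing hive.log.dir= line if there is one and appends it otherwise. set_hive_log_dir is an illustrative name:

```shell
#!/usr/bin/env bash
# Sketch: point hive.log.dir at a new directory in hive-log4j.properties.
# Usage: set_hive_log_dir <properties_file> <log_dir>
set_hive_log_dir() {
    local file="$1" dir="$2"
    if grep -q '^hive\.log\.dir=' "$file"; then
        # Replace the value of the existing line in place (GNU sed syntax).
        sed -i "s|^hive\.log\.dir=.*|hive.log.dir=${dir}|" "$file"
    else
        echo "hive.log.dir=${dir}" >> "$file"
    fi
    # Create the target directory so the logger can write to it.
    mkdir -p "$dir"
}
```

On the tutorial host: set_hive_log_dir /opt/module/hive/conf/hive-log4j.properties /opt/module/hive/logs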

2.9.4 Ways to configure parameters

1. View all current configuration settings

hive>set;

2. There are three ways to configure parameters

- (1) Configuration files

Default configuration file: hive-default.xml

User-defined configuration file: hive-site.xml

Note: user-defined settings override the defaults.

In addition, Hive also reads the Hadoop configuration, because Hive starts as a Hadoop client; Hive settings override Hadoop settings.

Settings in the configuration files apply to all Hive processes started on this machine.

- (2) Command-line parameters

When starting Hive, you can set parameters by adding -hiveconf param=value on the command line.

For example:

[root@hadoop103 hive]$ bin/hive -hiveconf mapred.reduce.tasks=10

Note: this only applies to the current Hive session. To view the setting:

hive (default)> set mapred.reduce.tasks;

- (3) Parameter declarations

You can use the SET keyword to set parameters in HQL.

For example:

hive (default)> set mapred.reduce.tasks=100;

Note: this only applies to the current Hive session. To view the setting:

hive (default)> set mapred.reduce.tasks;

The three methods have increasing priority: configuration file < command-line parameter < parameter declaration. Note that some system-level parameters, such as the log4j settings, must be set with the first two methods, because they are read before the session is established.
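The precedence rule can be illustrated with a toy model. This is not Hive code and the values are illustrative; it only shows that, for the same key, a later source overrides an earlier one. Associative arrays need bash 4 or later:

```shell
#!/usr/bin/env bash
# Toy model of Hive's parameter precedence; not Hive's implementation.
# Each array stands for one source; the values are illustrative.
declare -A conf_file=([mapred.reduce.tasks]=-1)      # hive-site.xml
declare -A cli_hiveconf=([mapred.reduce.tasks]=10)   # bin/hive -hiveconf ...
declare -A session_set=([mapred.reduce.tasks]=100)   # set ... inside the session

# Look the key up from lowest to highest priority; the last hit wins.
effective_value() {
    local key="$1" value=""
    [ -n "${conf_file[$key]+x}" ] && value="${conf_file[$key]}"
    [ -n "${cli_hiveconf[$key]+x}" ] && value="${cli_hiveconf[$key]}"
    [ -n "${session_set[$key]+x}" ] && value="${session_set[$key]}"
    echo "$value"
}

echo "mapred.reduce.tasks = $(effective_value mapred.reduce.tasks)"
```

Removing the session entry makes the -hiveconf value win, and removing that as well falls back to the configuration file, which is the same order as configuration file < command-line parameter < parameter declaration.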

count does not execute MR task