I Common Web cluster schedulers

Web cluster schedulers currently fall into two categories: software and hardware.

Software schedulers are usually the open-source LVS, HAProxy and Nginx.

LVS has the best performance, but it is relatively complex to set up.

Nginx's upstream module supports clustering, but its health checking of cluster nodes is weak, and its high-concurrency performance is not as good as HAProxy's.

F5 is the most commonly used hardware, and many people also use other products such as Barracuda and NSFOCUS.

II Haproxy application analysis

HAProxy provides high availability, load balancing, and proxying for TCP- and HTTP-based applications. It is a free, fast and reliable solution, especially suited to heavily loaded web sites (more than 10,000 concurrent connections) that usually need session persistence or layer-7 processing. HAProxy's operating mode makes it easy and safe to integrate into an existing architecture, and it keeps the web servers from being exposed directly to the network.

LVS handles heavy load well in enterprise applications, but it has shortcomings:

LVS does not support regular-expression processing, so it cannot separate dynamic and static content;

For large websites, configuring LVS is complex and maintenance costs are relatively high.

HAProxy, by contrast, is software that provides high availability, load balancing, and proxying for TCP- and HTTP-based applications:

It suits web sites that carry heavy load;

Running on commodity hardware, it can support tens of thousands of concurrent connections.

III Haproxy scheduling algorithm

There are many HAProxy load balancing strategies. Eight common ones are:

roundrobin: simple round-robin polling.

static-rr: round robin according to server weights; the weights are static and cannot be changed on the fly.

leastconn: the server with the fewest connections is used first.

source: hashes the source IP of the request, similar to Nginx's ip_hash mechanism.

uri: hashes the URI of the request.

url_param: hashes a parameter in the request URL.

hdr(name): locks each HTTP request according to the HTTP request header <name>.

rdp-cookie(name): locks and hashes each TCP request according to the RDP cookie <name>.

HAProxy supports a variety of scheduling algorithms. The three most commonly used are:

RR (Round Robin): round-robin scheduling

LC (Least Connections): least connections algorithm

SH (Source Hashing): source hashing algorithm

1.RR(Round Robin)

The RR algorithm is the simplest and most commonly used algorithm: round-robin (polling) scheduling.

Example

There are three nodes A, B and C;

The first user access is assigned to node A;

The second user access is assigned to node B;

The third user access is assigned to node C;

The fourth user access goes back to node A, and so on. Requests are distributed in rotation to balance the load.
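The rotation above fits in a few lines of bash. This is a minimal illustration that assumes three hypothetical nodes named A, B and C. It models only the rotation, not HAProxy's weighted roundrobin implementation.

```bash
#!/usr/bin/env bash
# Sketch of round-robin (RR) scheduling over three hypothetical nodes A, B, C.
nodes=(A B C)

# rr_schedule COUNT: print which node each of COUNT successive users gets.
rr_schedule() {
  local count=$1 i idx=0
  for ((i = 1; i <= count; i++)); do
    echo "user$i -> ${nodes[idx]}"
    # Advance the pointer and wrap around after the last node.
    idx=$(( (idx + 1) % ${#nodes[@]} ))
  done
}

rr_schedule 4
```

Running it prints the four assignments from the example. The fourth user wraps back to node A.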

2.LC(Least Connections)

The LC algorithm dynamically allocates front-end requests according to the number of connections on each back-end node, which is why it is called the least connections algorithm.

Example

There are three nodes A, B and C, with A:4, B:5 and C:6 connections respectively;

The first user request is assigned to A, and the connection counts become A:5, B:5, C:6;

The second user request is also assigned to A, and the counts become A:6, B:5, C:6. The next request is assigned to B. Each new request always goes to the node with the fewest connections;

In practice, connections on A, B and C are released dynamically, so their counts rarely stay equal;

Compared with the RR algorithm, this is a significant improvement, and it is widely used today.
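The walk-through above can be reproduced with a small bash simulation. This is a sketch under simplifying assumptions: the node names are hypothetical, connections are never released, and ties go to the node listed first (as in the example). HAProxy's own leastconn tie-breaking may differ.

```bash
#!/usr/bin/env bash
# Sketch of least-connections (LC) scheduling over three hypothetical nodes.
names=(A B C)

# lc_schedule COUNT CONN_A CONN_B CONN_C
# Sends COUNT new requests, each to the node with the fewest active connections.
# Ties go to the node listed first; connections are never released here.
lc_schedule() {
  local count=$1
  shift
  local -a conns=("$@")
  local i j best
  for ((i = 1; i <= count; i++)); do
    best=0
    for ((j = 1; j < ${#conns[@]}; j++)); do
      if (( conns[j] < conns[best] )); then
        best=$j
      fi
    done
    conns[best]=$(( conns[best] + 1 ))
    echo "request$i -> ${names[best]} (A:${conns[0]} B:${conns[1]} C:${conns[2]})"
  done
}

lc_schedule 3 4 5 6
```

Starting from A:4, B:5, C:6, the first two requests go to A and the third goes to B, matching the example.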

3.SH(Source Hashing)

The source hashing algorithm is used in scenarios where session state is stored on the server. Cluster scheduling can be based on the source IP, cookies, and so on.

Example

There are three nodes A, B and C. The first user's first access is assigned to A, and the second user's first access is assigned to B;

On their second visits, the first user is still assigned to A and the second user to B. As long as no back-end node goes down or comes back up, the first user's requests always go to A and the second user's always go to B;

The advantage of this algorithm is session persistence. However, when a few source IPs generate very heavy traffic, the load becomes unbalanced and some nodes are overloaded, which affects the service.
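The idea of source hashing can be sketched by hashing the client IP and taking the result modulo the number of nodes. This is an illustration only. The node names are hypothetical, and `cksum`'s CRC stands in for a hash function. HAProxy's `balance source` uses its own hash, so the node it picks for a given IP will generally differ.

```bash
#!/usr/bin/env bash
# Sketch of source hashing (SH): the same client IP always maps to the same node.
# cksum's CRC is a stand-in hash; HAProxy's "balance source" uses its own hash.
names=(A B C)

# source_hash_pick IP: print the node chosen for this source IP.
source_hash_pick() {
  local crc idx
  crc=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
  idx=$(( crc % ${#names[@]} ))
  echo "${names[idx]}"
}

for ip in 192.168.121.200 192.168.121.201 192.168.121.200; do
  echo "$ip -> $(source_hash_pick "$ip")"
done
```

The first and third lines of output always name the same node. Because the index is the hash modulo the node count, adding or removing a node changes the mapping for many clients.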

IV Main features of Haproxy

▶ Excellent reliability and stability, comparable to F5 hardware load balancers;

▶ It can maintain 40,000-50,000 concurrent connections at the same time, handle up to 20,000 requests per unit time, and reach a maximum throughput of 10 Gbit/s;

▶ Supports up to 8 load balancing algorithms, as well as session persistence;

▶ Supports virtual hosts, which makes web load balancing more flexible;

▶ Supports distinctive features such as connection rejection and fully transparent proxying;

▶ Strong ACL support for access control;

▶ Its distinctive elastic binary tree (ebtree) data structure brings the complexity of its data operations down to O(1), so lookups do not slow down as the number of data items grows;

▶ Supports client-side keep-alive, which reduces the resources wasted by repeated handshakes between the client and HAProxy and lets multiple requests be completed over one TCP connection;

▶ Supports TCP acceleration and zero-copy, similar to the mmap mechanism;

▶ Supports response buffering;

▶ Supports the RDP protocol;

▶ Source-based stickiness, similar to Nginx's ip_hash: requests from the same client are always scheduled to the same upstream server within a certain period;

▶ A better statistics interface: its web page shows, for each server in the back-end cluster, the data received and sent, rejections, and errors;

▶ Detailed health checking: the web interface shows the health status of each upstream server and provides some management functions;

▶ Traffic-based health assessment mechanism;

▶ HTTP-based authentication;

▶ Command-line management interface;

▶ A log analyzer for analyzing logs.
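The statistics web page and HTTP authentication listed above are configured with HAProxy's `stats` keywords. The following is a minimal sketch that writes a stats-only section to a scratch file and validates it if HAProxy is installed. The file /tmp/haproxy-stats.cfg, port 8080, URI /haproxy-stats and the credentials admin:ChangeMe are all hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Sketch: a stats-only listen section with HTTP basic authentication.
# Path, port, URI and credentials below are hypothetical placeholders.
cfg=/tmp/haproxy-stats.cfg

cat > "$cfg" << 'EOF'
defaults
    mode http
    timeout connect 5000
    timeout client 50000
    timeout server 50000

listen stats
    bind 0.0.0.0:8080
    stats enable
    stats uri /haproxy-stats
    stats refresh 30s
    stats auth admin:ChangeMe
EOF

# -c only parses and checks the configuration; it does not start the proxy.
if command -v haproxy > /dev/null; then
  haproxy -c -f "$cfg"
fi
```

Once a similar section is merged into the real configuration, the page would be reachable at http://192.168.121.33:8080/haproxy-stats after logging in.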

V Building a Web cluster with Haproxy

Haproxy server: 192.168.121.33

Nginx server 1: 192.168.121.44

Nginx server 2: 192.168.121.55

Client: 192.168.121.200

1.Haproxy server deployment

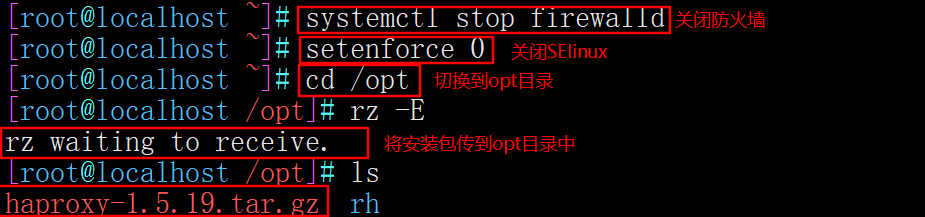

1. Turn off the firewall and upload the HAProxy source package to the /opt directory

systemctl stop firewalld
setenforce 0
cd /opt
#Upload haproxy-1.5.19.tar.gz to this directory

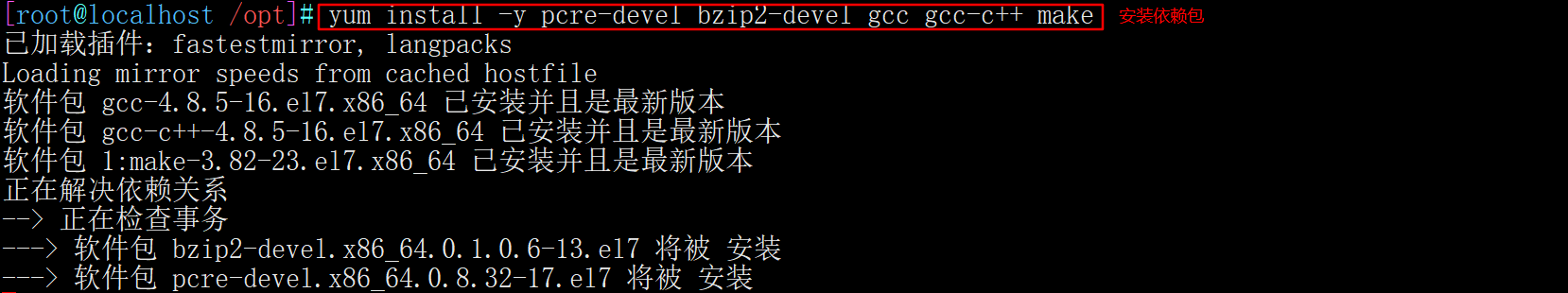

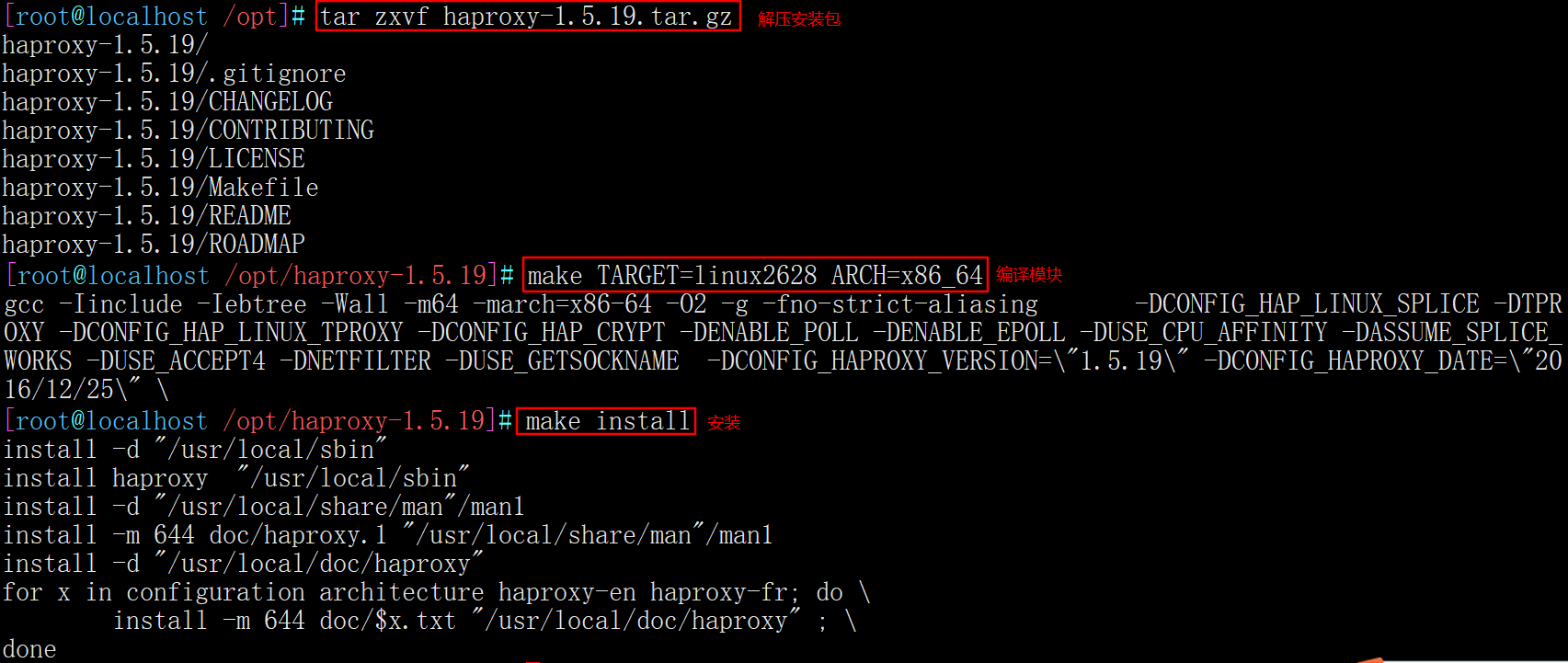

2. Compile and install Haproxy

yum install -y pcre-devel bzip2-devel gcc gcc-c++ make
tar zxvf haproxy-1.5.19.tar.gz
cd haproxy-1.5.19/
make TARGET=linux2628 ARCH=x86_64
make install

Parameter description

TARGET=linux26 #Kernel version

#Use uname -r to check the kernel version. For a kernel such as 2.6.18-371.el5, use TARGET=linux26; for kernels newer than 2.6.28, use TARGET=linux2628

ARCH=x86_64 # system bits
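The kernel-version rule in the comment above can be turned into a small bash helper. This is a sketch: `pick_target` is a hypothetical name, it relies on GNU `sort -V` for version comparison (available on CentOS), and it treats 2.6.28 itself as `linux2628`.

```bash
#!/usr/bin/env bash
# Sketch: choose the HAProxy 1.5 TARGET value from a kernel release string.
# pick_target is a hypothetical helper name; version comparison uses GNU sort -V.
pick_target() {
  local release="${1:-$(uname -r)}"
  local version="${release%%-*}"   # "2.6.18-371.el5" -> "2.6.18"
  # If 2.6.28 sorts first (or ties), the kernel is 2.6.28 or newer.
  if [ "$(printf '%s\n' 2.6.28 "$version" | sort -V | head -n 1)" = "2.6.28" ]; then
    echo linux2628
  else
    echo linux26
  fi
}

# Print the make command line suggested for this machine.
echo "make TARGET=$(pick_target) ARCH=$(uname -m)"
```

For example, `pick_target 2.6.18-371.el5` prints `linux26`, and a CentOS 7 kernel such as `3.10.0-1160.el7.x86_64` gives `linux2628`.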

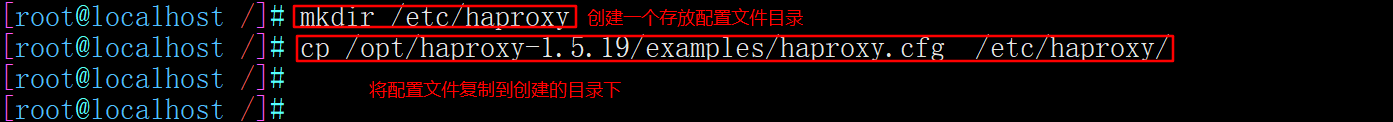

3.Haproxy server configuration

mkdir /etc/haproxy
cp /opt/haproxy-1.5.19/examples/haproxy.cfg /etc/haproxy/

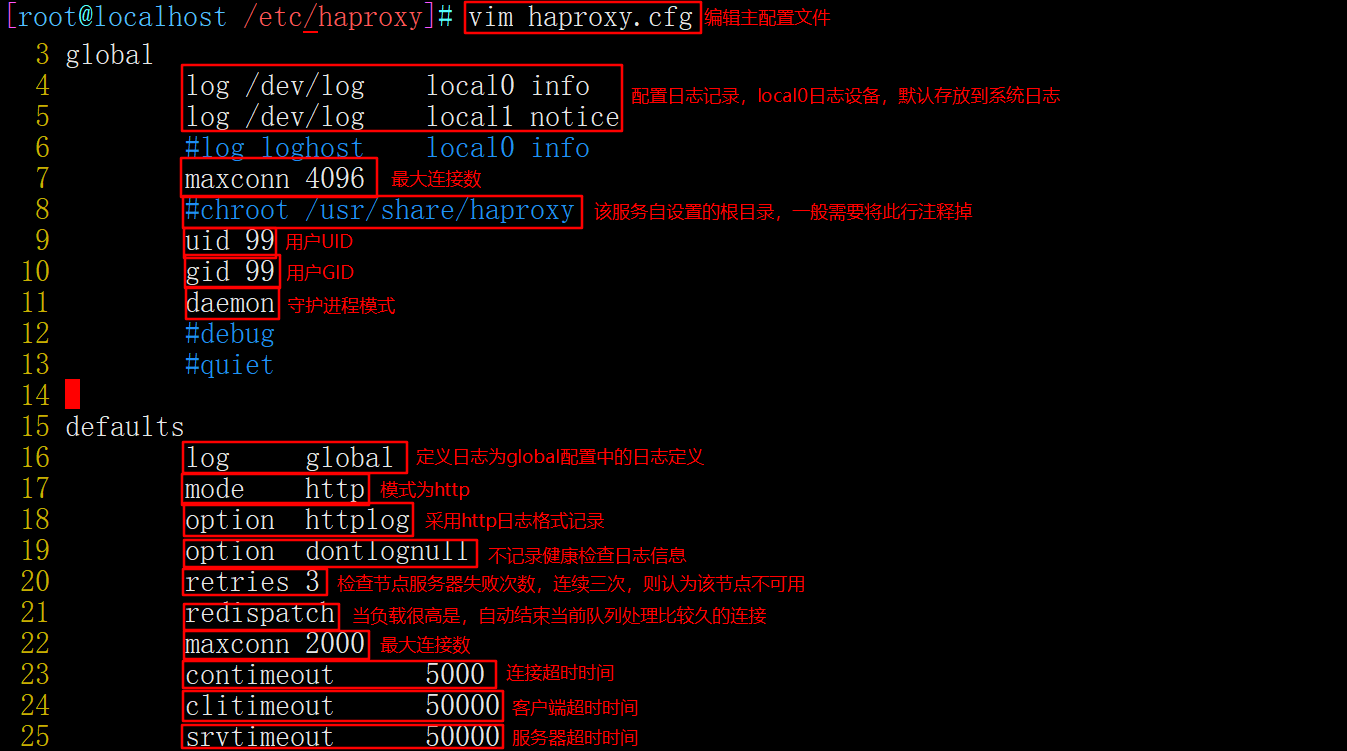

vim /etc/haproxy/haproxy.cfg

global

--Lines 4~5--Modify to configure logging. local0 is a log facility; by default the logs go to the system log

log /dev/log local0 info

log /dev/log local0 notice

#log loghost local0 info

maxconn 4096 #Maximum number of connections; keep the ulimit -n limit in mind

--Line 8--Comment out. chroot sets the root directory the service runs in; this line generally needs to be commented out

#chroot /usr/share/haproxy

uid 99 #User UID

gid 99 #User GID

daemon #Daemon mode

defaults

log global #Use the log settings defined in the global section

mode http #Work in HTTP mode

option httplog #Log in HTTP log format

option dontlognull #Do not log null connections, such as health check probes

retries 3 #Number of times to retry a failed connection to a node server

redispatch #If the server a request is bound to goes down, allow the request to be redispatched to another server

maxconn 2000 #Maximum number of connections

contimeout 5000 #Connection timeout, in milliseconds

clitimeout 50000 #Client timeout, in milliseconds

srvtimeout 50000 #Server timeout, in milliseconds

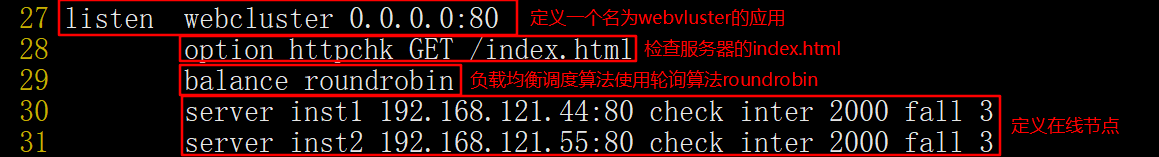

--Delete all the listen sections below, then add--

listen webcluster 0.0.0.0:80 #Define an application called webcluster

option httpchk GET /index.html #Check the index.html file on each server

balance roundrobin #Use the round-robin (roundrobin) load balancing algorithm

server inst1 192.168.121.44 check inter 2000 fall 3 #Define the online nodes

server inst2 192.168.121.55 check inter 2000 fall 3

Parameter description

balance roundrobin # load balancing scheduling algorithm

#Round robin: roundrobin; least connections: leastconn; source hashing: source, similar to Nginx's ip_hash

check inter 2000 # health check interval between the haproxy server and the node, in milliseconds

fall 3 # the node is marked as failed after three consecutive failed checks

If a node is configured with "backup", it is only a backup node and receives connections only after the primary nodes fail. Without "backup", it is a primary node that serves requests together with the other primary nodes.
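One way to check the listen section described above in isolation is to write it to a scratch file and let HAProxy validate it with `haproxy -c -f`. This is a sketch. The path /tmp/haproxy-webcluster.cfg and the backup node 192.168.121.66 are hypothetical (the node is there only to show the `backup` keyword). It uses `bind` and the `timeout` keywords in place of the address-on-listen form and the deprecated `contimeout`/`clitimeout`/`srvtimeout`.

```bash
#!/usr/bin/env bash
# Sketch: write the webcluster section to a scratch file and validate it.
# /tmp/haproxy-webcluster.cfg and the backup node 192.168.121.66 are hypothetical.
cfg=/tmp/haproxy-webcluster.cfg

cat > "$cfg" << 'EOF'
global
    maxconn 4096
    daemon

defaults
    mode http
    retries 3
    option redispatch
    timeout connect 5000
    timeout client 50000
    timeout server 50000

listen webcluster
    bind 0.0.0.0:80
    option httpchk GET /index.html
    balance roundrobin
    server inst1 192.168.121.44:80 check inter 2000 fall 3
    server inst2 192.168.121.55:80 check inter 2000 fall 3
    # Receives traffic only when both primary nodes are down
    server inst3 192.168.121.66:80 check inter 2000 fall 3 backup
EOF

# -c only parses and checks the configuration; it does not start the proxy.
if command -v haproxy > /dev/null; then
  haproxy -c -f "$cfg"
fi
```

To try another algorithm, change `balance roundrobin` to `balance leastconn` or `balance source` and validate again.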

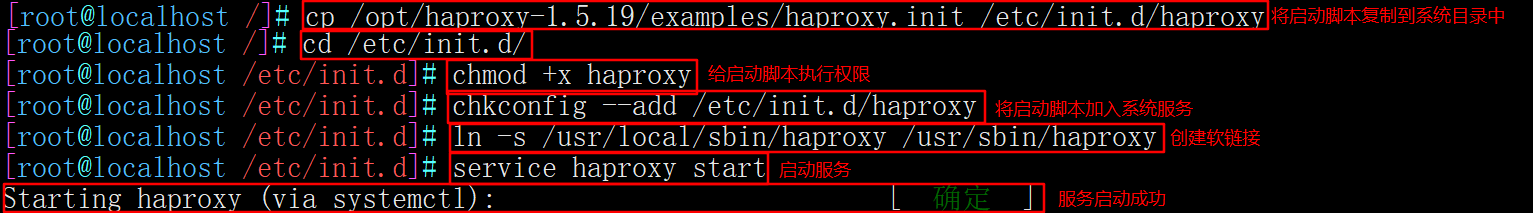

4. Add haproxy system service

cp /opt/haproxy-1.5.19/examples/haproxy.init /etc/init.d/haproxy
chmod +x /etc/init.d/haproxy
chkconfig --add haproxy
ln -s /usr/local/sbin/haproxy /usr/sbin/haproxy
service haproxy start #or: /etc/init.d/haproxy start

2. Node server deployment (192.168.121.44 and 192.168.121.55 are configured the same way)

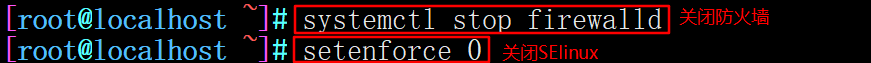

1. Turn off the firewall

systemctl stop firewalld
setenforce 0

2. Install Nginx using Yum or up2date

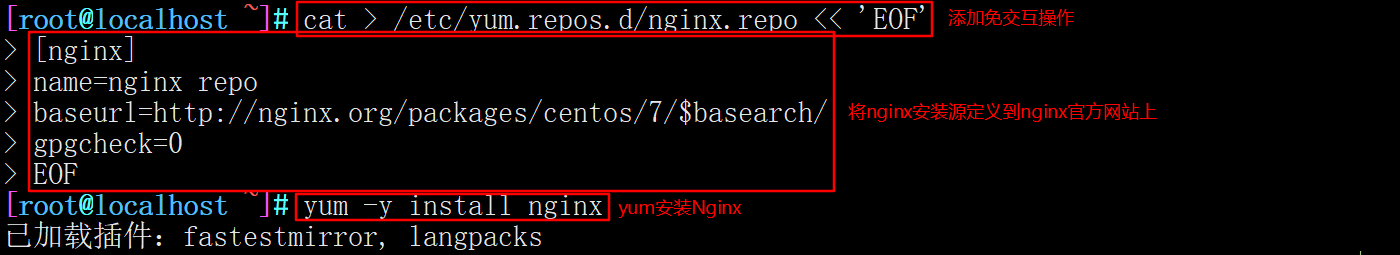

#First add the nginx repository non-interactively with a heredoc; otherwise yum cannot install Nginx
cat > /etc/yum.repos.d/nginx.repo << 'EOF'
[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/7/$basearch/
gpgcheck=0
EOF
yum install nginx -y

3. Edit the web page files

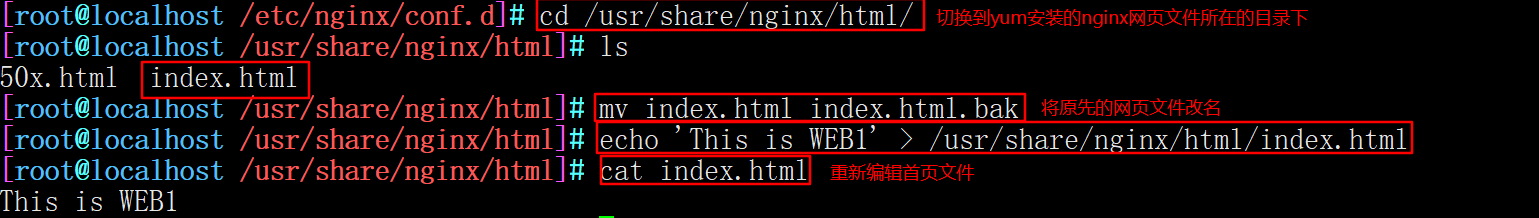

--------------192.168.121.44--------------
cd /usr/share/nginx/html/ #The path to the Nginx site directory where yum is installed
mv index.html index.html.bak #Rename the original home page file
echo 'This is WEB1' > /usr/share/nginx/html/index.html #Recreate a home page file

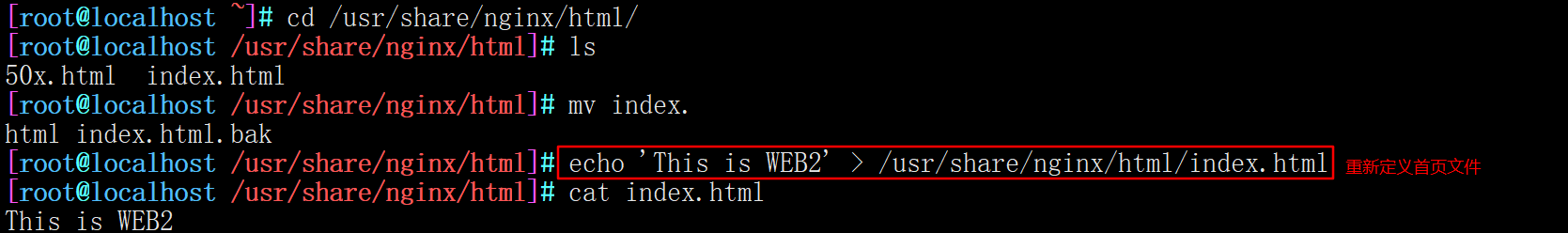

--------------192.168.121.55--------------
cd /usr/share/nginx/html/ #The path to the Nginx site directory where yum is installed
mv index.html index.html.bak #Rename the original home page file
echo 'This is WEB2' > /usr/share/nginx/html/index.html #Recreate a home page file

4. Restart the service

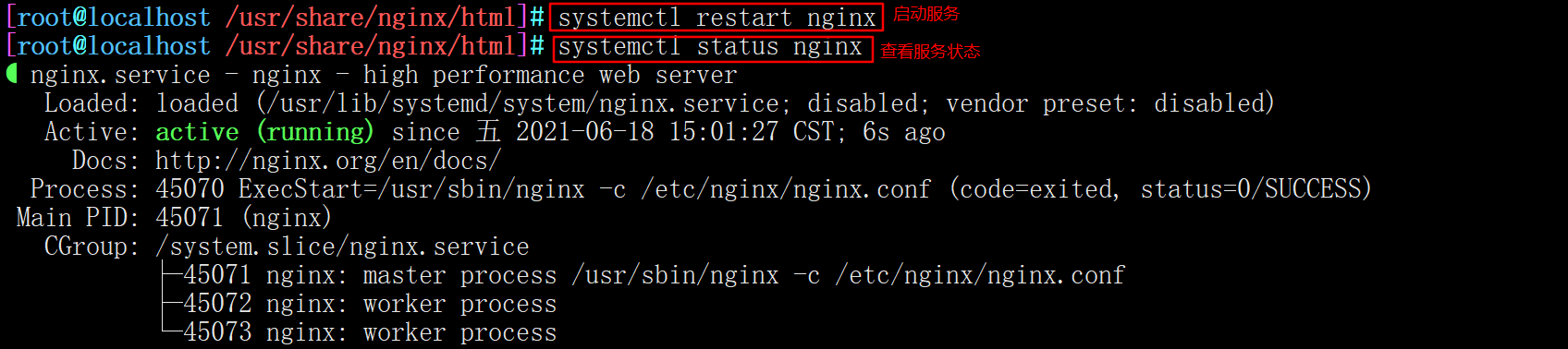

systemctl restart nginx

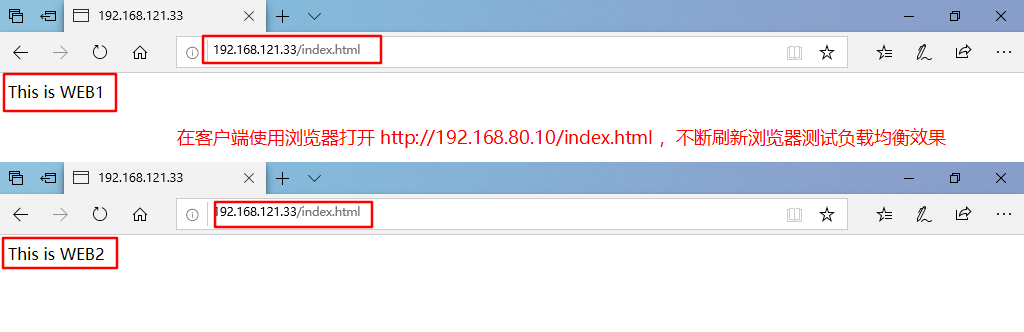

3. Test Web Cluster

Open http://192.168.121.33/index.html in a browser on the client and refresh repeatedly to observe the load balancing effect
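Browsers may cache the page, so repeated requests from the command line can give a clearer picture. This is a sketch that assumes `curl` is installed on the client. The script name probe.sh and the helper names are hypothetical. The network is only contacted when a URL is passed on the command line.

```bash
#!/usr/bin/env bash
# Sketch: fetch the page N times through HAProxy and tally which node answered.

# tally_responses: count identical response lines from stdin as "<line>: <count>".
tally_responses() {
  awk '{ count[$0]++ } END { for (line in count) print line ": " count[line] }' | sort
}

# probe URL N: request URL N times and print each response body.
probe() {
  local url=$1 n=$2 i
  for ((i = 1; i <= n; i++)); do
    curl -s --max-time 2 "$url"
  done
}

# Usage: bash probe.sh http://192.168.121.33/index.html [count]
if [ "$#" -gt 0 ]; then
  probe "$1" "${2:-6}" | tally_responses
fi
```

With both nodes healthy and `balance roundrobin`, the "This is WEB1" and "This is WEB2" counts should come out roughly equal. Stopping nginx on one node should shift all requests to the other once the health checks mark it down.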

VI Haproxy log definition

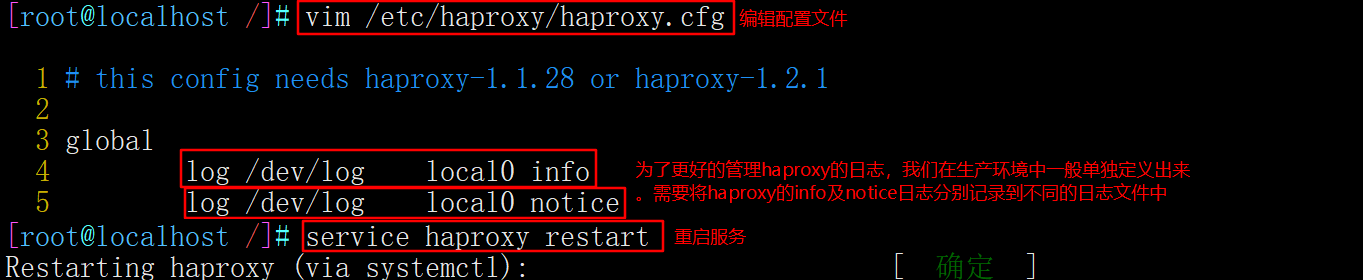

#By default, HAProxy logs go to the system syslog, which is inconvenient to read. To manage HAProxy logs better, production environments usually define them separately, writing HAProxy's info and notice logs to different log files.
vim /etc/haproxy/haproxy.cfg
global
log /dev/log local0 info
log /dev/log local0 notice
service haproxy restart

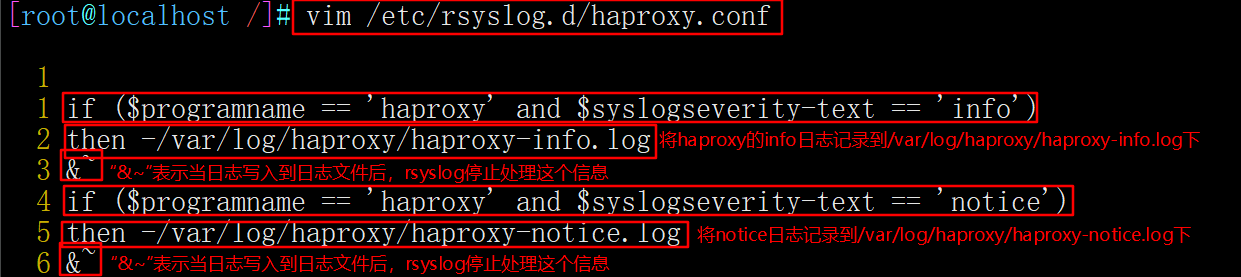

#Modify the rsyslog configuration to make management easier. Put the HAProxy-related configuration in its own file, haproxy.conf, under /etc/rsyslog.d/; all configuration files in this directory are loaded automatically when rsyslog starts.
vim /etc/rsyslog.d/haproxy.conf
if ($programname == 'haproxy' and $syslogseverity-text == 'info')
then -/var/log/haproxy/haproxy-info.log
&~
if ($programname == 'haproxy' and $syslogseverity-text == 'notice')
then -/var/log/haproxy/haproxy-notice.log
&~

#Description:

This configuration writes HAProxy's info logs to /var/log/haproxy/haproxy-info.log and its notice logs to /var/log/haproxy/haproxy-notice.log. "&~" tells rsyslog to stop processing the message once it has been written to the log file.

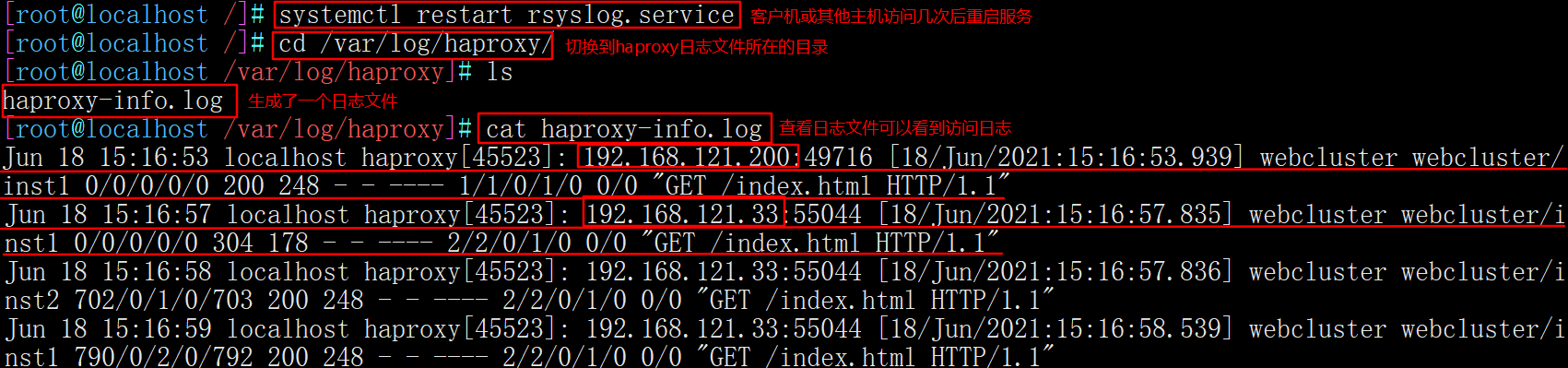

systemctl restart rsyslog.service
cd /var/log/haproxy/
cat haproxy-info.log #View the access request log information of haproxy
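With `option httplog`, each request line contains the bracketed accept date, then the frontend name, then a `backend/server` pair. A short awk script can use that layout to count how many requests each node served. This is a sketch that assumes the default httplog layout. The helper name `count_by_server` is hypothetical, and lines without a bracketed date (such as server status messages) are skipped.

```bash
#!/usr/bin/env bash
# Sketch: count requests per backend/server in HAProxy HTTP-format log lines.
# Assumes the "option httplog" layout, where the bracketed accept date is
# followed by the frontend name and then "backend/server".
count_by_server() {
  awk '{
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^\[.*\]$/) { hits[$(i + 2)]++; break }
    }
  } END { for (s in hits) print s, hits[s] }' "$@" | sort
}

log=/var/log/haproxy/haproxy-info.log
if [ -r "$log" ]; then
  count_by_server "$log"
fi
```

For the cluster built here, the output should list `webcluster/inst1` and `webcluster/inst2` with their request counts.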

VII Haproxy parameter optimization

As the load on enterprise websites keeps growing, tuning HAProxy parameters becomes very important

● maxconn: maximum number of connections; adjust it to the application's actual situation. 10240 is recommended

● daemon: daemon mode. HAProxy can also run in non-daemon mode, but daemon mode is recommended

● nbproc: number of concurrent load balancing processes. It is recommended to set it to the number of CPU cores on the server, or twice that

● retries: number of times to retry a failed connection to a cluster node. With many nodes and high concurrency, set it to 2 or 3

● option http-server-close: actively close the server-side HTTP connection after each request. This option is recommended in production

● timeout http-keep-alive: keep-alive (persistent connection) timeout; it can be set to 10s

● timeout http-request: HTTP request timeout. 5~10s is recommended so that HTTP connections are released faster

● timeout client: client timeout. If traffic is heavy and the nodes respond slowly, you can shorten it; about 1min is recommended
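The recommendations above can be collected into one configuration fragment. This is a sketch. The scratch file /tmp/haproxy-tuning.cfg is hypothetical, `nbproc` is set to the core count reported by `nproc`, and the `timeout connect` and `timeout server` values are assumptions added so the fragment is complete. `nbproc` applies to the 1.5 series built here; later HAProxy releases deprecated it in favour of `nbthread`.

```bash
#!/usr/bin/env bash
# Sketch: write the tuning recommendations into a scratch configuration file.
# /tmp/haproxy-tuning.cfg is hypothetical; timeout connect/server are assumed values.
cfg=/tmp/haproxy-tuning.cfg
cores=$(nproc)   # number of CPU cores; nbproc is set equal to it

# Unquoted EOF so that $cores is expanded inside the here-document.
cat > "$cfg" << EOF
global
    maxconn 10240
    daemon
    nbproc $cores

defaults
    mode http
    retries 3
    option http-server-close
    timeout http-keep-alive 10s
    timeout http-request 10s
    timeout connect 5s
    timeout client 1m
    timeout server 1m

listen webcluster
    bind 0.0.0.0:80
    balance roundrobin
    server inst1 192.168.121.44:80 check inter 2000 fall 3
    server inst2 192.168.121.55:80 check inter 2000 fall 3
EOF

echo "wrote $cfg with nbproc $cores"
```

Before merging these values into /etc/haproxy/haproxy.cfg, the file can be checked with `haproxy -c -f /tmp/haproxy-tuning.cfg`.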