The purpose of rate limiting is to protect the system when traffic is too high, avoiding instability or even failure caused by overload.

There are many rate limiting schemes in a cloud native environment. A lightweight scheme can combine Bucket4j with an in-memory data grid such as Hazelcast, Ignite, or Infinispan. For a complete solution, a Sentinel cluster can be used.

1. Sentinel

Sentinel can be used for rate limiting, either on a single machine or across a multi-machine cluster.

Advantages: flexible control, and a cluster scheme is available.

Disadvantages: it requires code changes in the application, which is highly invasive.

Sentinel rules are configured in the Sentinel cluster. For cluster configuration, see:

Cluster flow control · alibaba/Sentinel Wiki · GitHub

The rate limit needs to take into account the number of instances of each service, so you can watch pod status changes in k8s and update the rate limiting policy accordingly. References for watching a k8s cluster:

java/WatchExample.java at master · kubernetes-client/java · GitHub

Using Watch with the Kubernetes API | Baeldung

How to watch events on a kubernetes service using its go client - Stack Overflow
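One way to combine the limit with the instance count is to divide a cluster-wide budget by the number of ready pods and recompute it whenever a watch event changes that number. The following is a minimal sketch assuming an even split. The helper `perInstanceQps` and the budget of 100 QPS are hypothetical and are not part of Sentinel or the Kubernetes client.

```java
/** Splits a cluster-wide QPS budget evenly across the ready instances of a service. */
final class PerInstanceLimit {

    // Hypothetical helper for illustration; not a Sentinel or Kubernetes client API.
    static double perInstanceQps(double clusterQps, int readyInstances) {
        if (clusterQps < 0) {
            throw new IllegalArgumentException("clusterQps must not be negative");
        }
        // Treat "no ready instances" as one instance so the result stays finite.
        int instances = Math.max(1, readyInstances);
        return clusterQps / instances;
    }

    public static void main(String[] args) {
        double clusterQps = 100.0; // assumed cluster-wide budget
        // Simulated replica counts, as a pod watch might report them over time.
        for (int replicas : new int[] {2, 4, 5}) {
            System.out.printf("replicas=%d -> per-instance limit=%.1f QPS%n",
                    replicas, perInstanceQps(clusterQps, replicas));
        }
    }
}
```

In a real setup, the computed value would presumably be written into each instance's rate limiting rule every time the watch reports a pod being added or removed.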

Example code for watching k8s resources:

import com.google.gson.reflect.TypeToken;
import io.kubernetes.client.openapi.ApiClient;
import io.kubernetes.client.openapi.ApiException;
import io.kubernetes.client.openapi.Configuration;
import io.kubernetes.client.openapi.apis.CoreV1Api;
import io.kubernetes.client.openapi.models.V1Namespace;
import io.kubernetes.client.util.Config;
import io.kubernetes.client.util.Watch;
import java.io.IOException;
import java.util.concurrent.TimeUnit;
import okhttp3.OkHttpClient;

/** A simple example of how to use Watch API to watch changes in Namespace list. */
public class WatchExample {
    public static void main(String[] args) throws IOException, ApiException {
        ApiClient client = Config.defaultClient();
        // infinite timeout
        OkHttpClient httpClient =
            client.getHttpClient().newBuilder().readTimeout(0, TimeUnit.SECONDS).build();
        client.setHttpClient(httpClient);
        Configuration.setDefaultApiClient(client);

        CoreV1Api api = new CoreV1Api();

        // The argument list of listNamespaceCall depends on the client library version.
        // To follow instance counts, the same pattern applies to pods instead of namespaces.
        Watch<V1Namespace> watch =
            Watch.createWatch(
                client,
                api.listNamespaceCall(
                    null, null, null, null, null, 5, null, null, null, Boolean.TRUE, null),
                new TypeToken<Watch.Response<V1Namespace>>() {}.getType());

        try {
            // Each item is one event (ADDED, MODIFIED, DELETED) for a namespace.
            for (Watch.Response<V1Namespace> item : watch) {
                System.out.printf("%s : %s%n", item.type, item.object.getMetadata().getName());
            }
        } finally {
            watch.close();
        }
    }
}

2. Rate limiting an API with Bucket4j

Basic use

Rate Limiting a Spring API Using Bucket4j | Baeldung

How to set rate limit for each user in Spring Boot?

https://youssefelyamani.medium.com/secure-your-spring-boot-api-with-rate-limit-20d868148fd

<dependency>
    <groupId>com.github.vladimir-bukhtoyarov</groupId>
    <artifactId>bucket4j-core</artifactId>
    <version>4.10.0</version>
</dependency>

Examples

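import io.github.bucket4j.Bandwidth;
import io.github.bucket4j.Bucket;
import io.github.bucket4j.Bucket4j;
import io.github.bucket4j.Refill;
import java.time.Duration;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;

// AreaV1 and RectangleDimensionsV1 are the request/response DTOs from the referenced article.
@RestController
class AreaCalculationController {

    private final Bucket bucket;

    public AreaCalculationController() {
        // Capacity of 20 tokens, refilled greedily at 20 tokens per minute.
        Bandwidth limit = Bandwidth.classic(20, Refill.greedy(20, Duration.ofMinutes(1)));
        this.bucket = Bucket4j.builder()
            .addLimit(limit)
            .build();
    }

    @PostMapping(value = "/api/v1/area/rectangle")
    public ResponseEntity<AreaV1> rectangle(@RequestBody RectangleDimensionsV1 dimensions) {
        // Each request consumes one token; respond with 429 when the bucket is empty.
        if (bucket.tryConsume(1)) {
            return ResponseEntity.ok(new AreaV1("rectangle", dimensions.getLength() * dimensions.getWidth()));
        }
        return ResponseEntity.status(HttpStatus.TOO_MANY_REQUESTS).build();
    }
}

Test and verify the effect of rate limiting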

$ curl -X POST http://localhost:9001/api/v1/area/rectangle \
    -H "Content-Type: application/json" \
    -d '{ "length": 10, "width": 12 }'
{ "shape":"rectangle","area":120.0 }

Advantages: a rate limiter and a cluster rate limiting scheme are provided.

Disadvantages: cluster rate limiting requires an in-memory data grid such as Hazelcast, Ignite, or Infinispan, which adds a heavy external dependency.
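To make the bucket semantics used above concrete, the following is a stdlib-only sketch of a token bucket with a capacity of 20 tokens refilled continuously at 20 tokens per minute. It is not Bucket4j's implementation: the class `SimpleTokenBucket`, its fractional token accounting, and the injected clock are assumptions made for illustration.

```java
import java.util.function.LongSupplier;

/** Stdlib-only token bucket: holds up to `capacity` tokens, refilled at a steady rate. */
final class SimpleTokenBucket {
    private final long capacity;
    private final double tokensPerNano;
    private final LongSupplier nanoClock; // injected so the demo can control time
    private double tokens;
    private long lastRefillNanos;

    SimpleTokenBucket(long capacity, long refillTokens, long refillPeriodNanos, LongSupplier nanoClock) {
        this.capacity = capacity;
        this.tokensPerNano = (double) refillTokens / refillPeriodNanos;
        this.nanoClock = nanoClock;
        this.tokens = capacity; // the bucket starts full
        this.lastRefillNanos = nanoClock.getAsLong();
    }

    /** Consumes n tokens if available; returns false without consuming otherwise. */
    synchronized boolean tryConsume(long n) {
        long now = nanoClock.getAsLong();
        // Add the tokens earned since the last call, capped at the bucket capacity.
        tokens = Math.min(capacity, tokens + (now - lastRefillNanos) * tokensPerNano);
        lastRefillNanos = now;
        if (tokens >= n) {
            tokens -= n;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        long[] fakeNow = {0L}; // simulated clock in nanoseconds
        SimpleTokenBucket bucket =
                new SimpleTokenBucket(20, 20, 60_000_000_000L, () -> fakeNow[0]);

        int accepted = 0;
        for (int i = 0; i < 25; i++) {
            if (bucket.tryConsume(1)) {
                accepted++;
            }
        }
        System.out.println("accepted out of 25 immediate requests: " + accepted);

        fakeNow[0] += 4_000_000_000L; // 4 seconds later, a little over one token is back
        System.out.println("first request after 4s: " + bucket.tryConsume(1));
        System.out.println("second request after 4s: " + bucket.tryConsume(1));
    }
}
```

The first 20 of the 25 immediate requests pass and the rest are rejected. After 4 simulated seconds one request passes and the next is rejected. In a cluster, this state would have to live in a shared store rather than in one JVM, which is where the data grid dependency comes from.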

Cluster rate limiting scheme

Hazelcast, Ignite, and Infinispan can all serve as distributed in-memory data stores.

Hazelcast and Ignite each have their own advantages and disadvantages. Ignite has better performance and openness.

3. Resilience4j RateLimiter

Resilience4j usage examples and dynamic limit adjustment:

Rate-Limiting with Spring Boot and Resilience4j

Implementing Rate Limiting with Resilience4j

Advantages: it provides several stability mechanisms, such as RateLimiter, CircuitBreaker, Retry, and Bulkhead.

Disadvantages: there is no cluster solution.

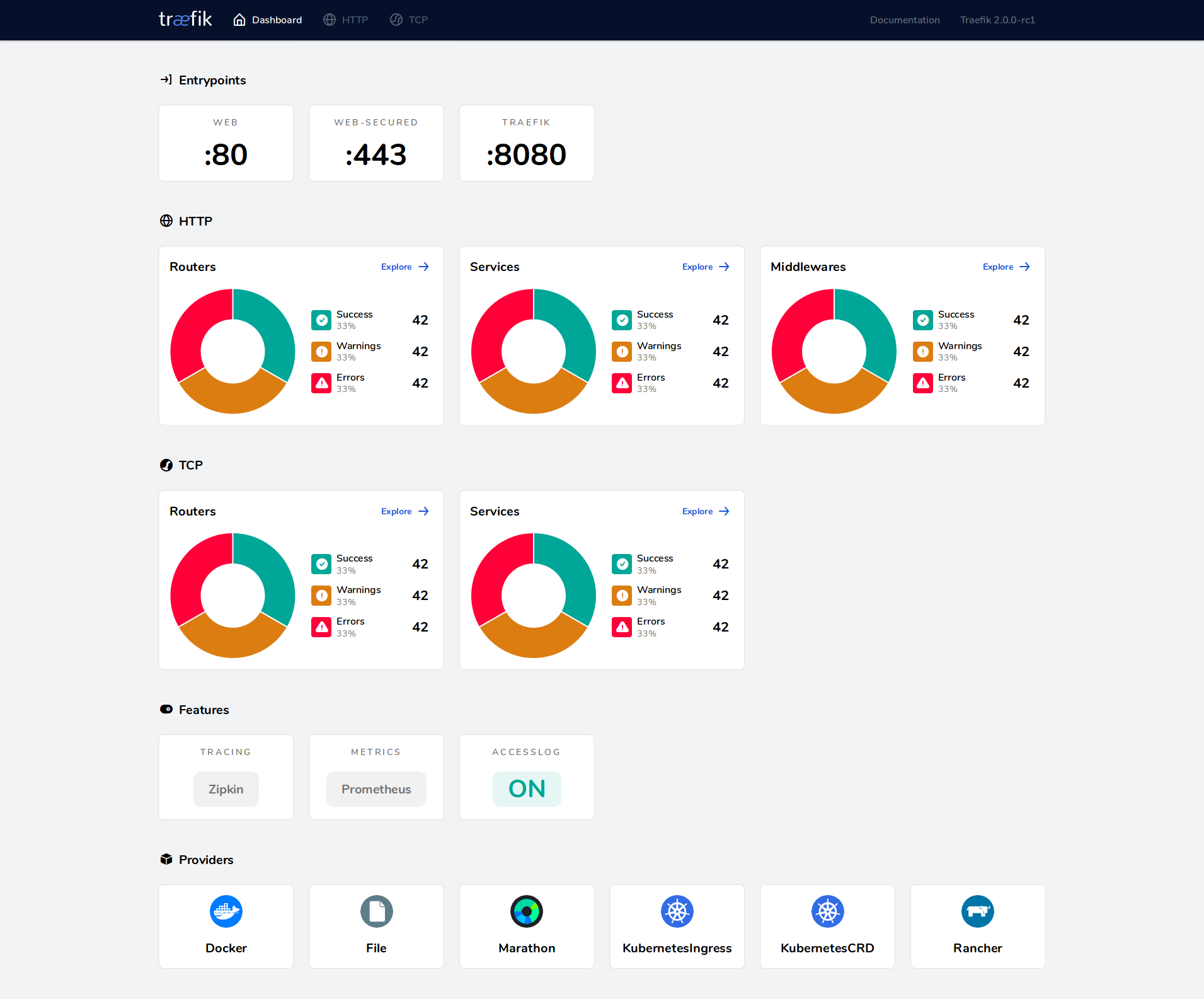
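The dynamic limit adjustment mentioned for Resilience4j can be pictured with a stdlib-only sketch of a period-based limiter whose limit can be changed at runtime. This is not Resilience4j's implementation: the class `AdjustablePeriodLimiter` is hypothetical, and its `changeLimitForPeriod` method only borrows the name of the corresponding Resilience4j method. The sketch assumes the new limit takes effect from the next period.

```java
import java.util.function.LongSupplier;

/** Grants up to `limitForPeriod` permits per fixed period; the limit is adjustable at runtime. */
final class AdjustablePeriodLimiter {
    private final long periodNanos;
    private final LongSupplier nanoClock; // injected so the demo can control time
    private int limitForPeriod;
    private int remaining;
    private long cycleStartNanos;

    AdjustablePeriodLimiter(int limitForPeriod, long periodNanos, LongSupplier nanoClock) {
        this.limitForPeriod = limitForPeriod;
        this.periodNanos = periodNanos;
        this.nanoClock = nanoClock;
        this.remaining = limitForPeriod;
        this.cycleStartNanos = nanoClock.getAsLong();
    }

    /** Stores the new limit; the current period's remaining permits are left untouched. */
    synchronized void changeLimitForPeriod(int newLimit) {
        this.limitForPeriod = newLimit;
    }

    synchronized boolean tryAcquire() {
        long elapsed = nanoClock.getAsLong() - cycleStartNanos;
        if (elapsed >= periodNanos) {
            // Jump to the start of the current period and reset the permits.
            cycleStartNanos += (elapsed / periodNanos) * periodNanos;
            remaining = limitForPeriod;
        }
        if (remaining > 0) {
            remaining--;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        long[] fakeNow = {0L}; // simulated clock in nanoseconds
        AdjustablePeriodLimiter limiter =
                new AdjustablePeriodLimiter(2, 1_000_000_000L, () -> fakeNow[0]);

        System.out.println(limiter.tryAcquire()); // true
        System.out.println(limiter.tryAcquire()); // true
        System.out.println(limiter.tryAcquire()); // false, period exhausted

        limiter.changeLimitForPeriod(3);          // e.g. after the service scaled in
        fakeNow[0] = 1_000_000_000L;              // next period begins
        int granted = 0;
        for (int i = 0; i < 5; i++) {
            if (limiter.tryAcquire()) {
                granted++;
            }
        }
        System.out.println("granted in second period: " + granted); // 3
    }
}
```

Because the limiter state lives in a single process, every instance enforces its own limit, which matches the disadvantage noted above that there is no cluster solution.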

4. Use Traefik for rate limiting

Rate limiting on Kubernetes applications | Traefik Blog

How to use Traefik reverse proxy

traefik-helm-chart/README.md at master · traefik/traefik-helm-chart · GitHub

Advantages: the limit threshold can be adjusted in real time. Traffic to the whole cluster can be limited, using either QPS or the number of concurrent requests.

Disadvantages: when one entry API maps to multiple backend algorithm services (requests are routed to different algorithm services depending on request conditions), that routing logic cannot be expressed with Traefik's route rules.

For example, the following limits the /prod path to an average of 100 requests per second with a burst of 50. Note that in Traefik's rateLimit middleware, burst is the maximum number of requests allowed through in the same arbitrarily small period of time, not a concurrency cap; limiting concurrent requests is the job of the separate inFlightReq middleware. Together, the two parameters control QPS fairly precisely.

Define a rate limit middleware, then reference it in the IngressRoute.

When the cluster scales out or in, the rate limiting strategy can be changed by updating the middleware configuration.

apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: prod-rate-limit
spec:
  rateLimit:
    average: 100
    burst: 50
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: prod-route
spec:
  entryPoints:
    - web
  routes:
    - match: PathPrefix(`/prod`)
      kind: Rule
      services:
        - name: prod-service
          port: 80
      middlewares:
        - name: prod-rate-limit

Traefik provides not only rate limiting but also API statistics and a monitoring dashboard, which makes it convenient to observe the health of the cluster and can be enabled as needed.
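As a rough illustration of how the average and burst values in the middleware above bound traffic, the sketch below applies the usual token bucket bound: over any window of T seconds, at most burst + average × T requests are admitted. The class and method names are hypothetical, and the bound is applied here to a single limiter for a single request source.

```java
/** Upper bound on admitted requests for a token bucket with a given average rate and burst. */
final class RateLimitBound {

    // Hypothetical helper for illustration; not part of Traefik.
    static long maxRequestsInWindow(long averagePerSecond, long burst, long windowSeconds) {
        if (averagePerSecond < 0 || burst < 0 || windowSeconds < 0) {
            throw new IllegalArgumentException("arguments must not be negative");
        }
        // A full bucket can be drained at once, then requests pass at the refill rate.
        return burst + averagePerSecond * windowSeconds;
    }

    public static void main(String[] args) {
        long average = 100; // requests per second, as in the middleware above
        long burst = 50;    // bucket size, as in the middleware above
        for (long window : new long[] {1, 10, 60}) {
            long max = maxRequestsInWindow(average, burst, window);
            System.out.printf("window=%ds max=%d requests (%.1f req/s)%n",
                    window, max, (double) max / window);
        }
    }
}
```

The longer the window, the closer the effective rate gets to the configured average, while the burst value only affects how many requests can pass in a short spike.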

5. Use Istio for rate limiting

Istio / Traffic Management

Istio / Circuit Breaking

Istio / Destination Rule

Istio / Ingress Gateways

Istio / Istio as a Proxy for External Services

The number of concurrent connections can be controlled, but qps cannot be accurately controlled.
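A connection cap does not pin down QPS because the achievable throughput also depends on how long each request takes. The sketch below uses Little's law (throughput is roughly concurrency divided by latency), assuming each connection carries one request at a time. The helper `maxQps` and the latency figures are hypothetical.

```java
/** Estimates the QPS ceiling implied by a connection cap at a given average latency. */
final class ConcurrencyVsQps {

    // Hypothetical helper for illustration; not part of Istio.
    static double maxQps(int maxConnections, double avgLatencyMillis) {
        if (maxConnections < 0 || avgLatencyMillis <= 0) {
            throw new IllegalArgumentException("invalid connection count or latency");
        }
        // Each connection completes 1000 / latency requests per second.
        return maxConnections * 1000.0 / avgLatencyMillis;
    }

    public static void main(String[] args) {
        int maxConnections = 100; // same cap as in the DestinationRule example
        for (double latencyMillis : new double[] {50, 500}) {
            System.out.printf("latency=%.0fms -> up to %.0f req/s%n",
                    latencyMillis, maxQps(maxConnections, latencyMillis));
        }
    }
}
```

With the same cap of 100 connections, the ceiling moves from 2000 to 200 requests per second as latency grows from 50 ms to 500 ms, so the connection limit alone cannot hold QPS at a fixed value. The DestinationRule that sets such a connection cap follows.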

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:
  - name: v1
    labels:
      version: v1
    trafficPolicy:
      connectionPool:
        tcp:
          maxConnections: 100

In addition, Spring Cloud Zuul RateLimit can be used for rate limiting, or QPS can be controlled in the NGINX ingress, though with less flexibility. Reference:

managed - Rate Limiting based on URL and Path in Kubernetes - Stack Overflow