Previously: Interpretation of mask rcnn super detailed code (I)

(Xiaosheng has been busy with other things recently, and the update has been delayed for a month... Next, he will try to finish all the contents by the day in a row)

Summary of network structure in 1 (I) (this paragraph can be ignored after just reading (I))

(1) Three parts: Resnet Graph, Region Proposal Network (RPN) and Proposal Layer are parsed in. (MaskRCNN Class layer will connect everyone)

Resnet Graph is a series of convolutions whose purpose is to extract features. For the image input network, first extract the features through Resnet Graph to obtain [C1, C2, C3, C4, C5]. These features are the basis of the following network.

In the MaskRCNN Class layer analysis later, it will be found that the C-series features obtained by Resnet Graph are convoluted by 3x3 to obtain the P-Series features, namely [P1, P2, P3, P4, P5], and then P6 is obtained by maxpooling, [P1, P2, P3, P4, P5, P6] as the feature_map enter Region Proposal Network (RPN) to get rpn_class_logits , rpn_class , rpn_bbox .

These output results can be input into the Proposal Layer to get proposals.

The above is the association of the three parts analyzed in (I). Next, we will continue to analyze the structure of ROIAlign Layer, Detection Target Layer and Feature Pyramid Network Heads.

2. Continue to parse the train process code

2.1 ROIAlign Layer

ROIAlign is the most difficult part to understand. ROIAlign in the code includes two parts:

- Define function def log2_graph(x): This is because there is no requirement in TensorFlow l o g 2 x log_2x log2 * x method, so the code defines a method to calculate and directly returns TF log(x) / tf. log(2.0)

- Define class PyramidROIAlign(KE.Layer): also inherit Ke The purpose of layer is to enable the data flow processed by TensorFlow to be processed by keras. It has been described above and will not be repeated here.

The PyramidROIAlign class is parsed below.

The first is__ init__ method:

def __init__(self, pool_shape, **kwargs):

super(PyramidROIAlign, self).__init__(**kwargs)

self.pool_shape = tuple(pool_shape)

According to the source code, when instantiating the PyramidROIAlign class, you need to pass in a pool_shape parameter. This parameter is very important. It determines the characteristic shape and general pool output by the ROIAlign layer_ Shape = (7, 7), that is, regardless of the size of the input feature, the size of the output feature must be 7x7 (regardless of the number of channels).

This is very important. Because mask rcnn is set to input pictures of any size. For convolution, the parameter quantity of this layer = convolution kernel Height x convolution kernel width x convolution kernel quantity (number of channels). The height and width of convolution kernel are the set parameters, and the number of channels is a super parameter. The input picture size will not affect the parameter quantity of convolution layer, but the output feature size is different. No matter how large the input picture is, it can be calculated (that is, no error will be reported).

However, for the deny layer, the input image size is different, and the amount of parameters is different. When classifying, the network should be connected to the deny layer at last, and the feature size of the input deny should be consistent. But the image size of mask rcnn is uncertain. What should I do???

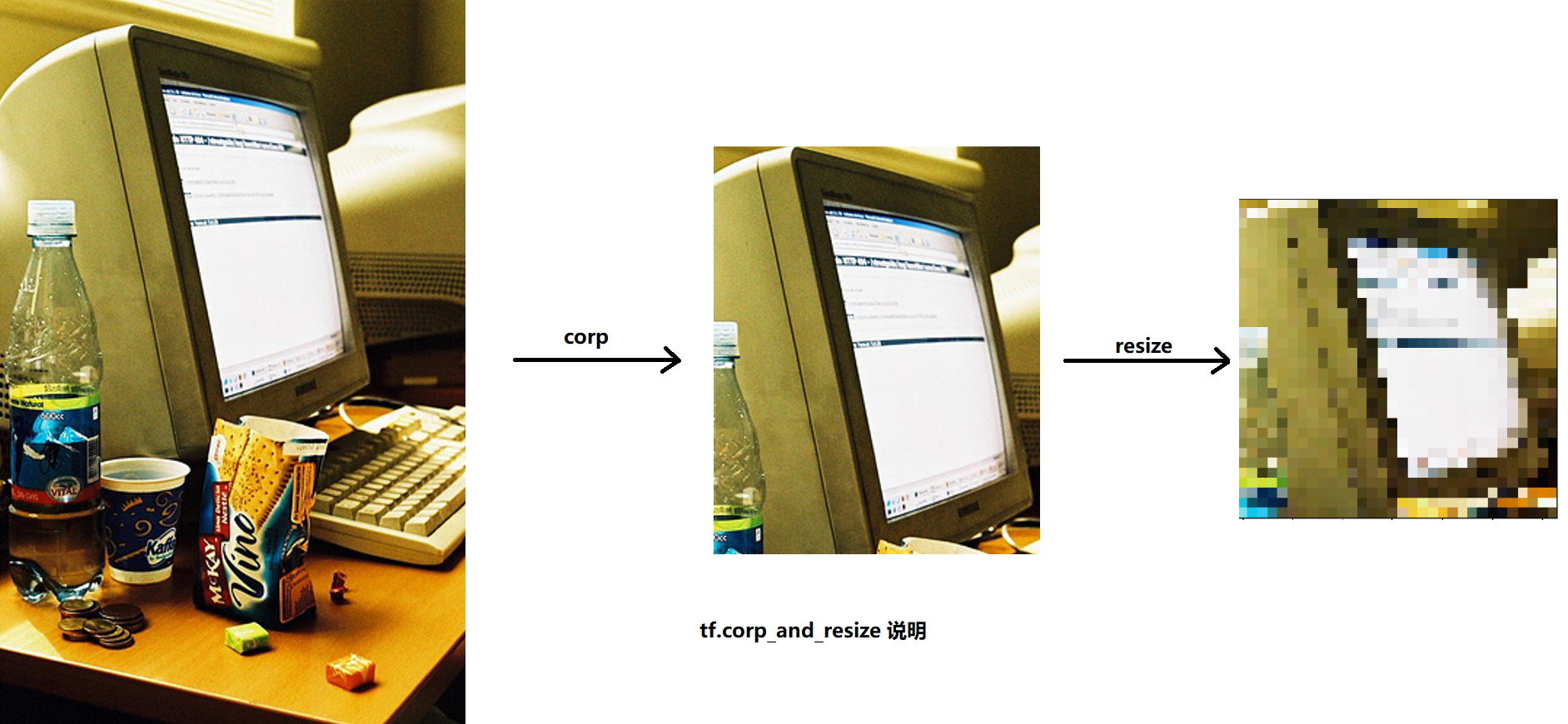

Therefore, this is the important role of PyramidROIAlign: no matter what the feature size of the input layer is, it will become a fixed value after passing through the layer (that is, pool_size, generally 7x7). The core technology calls this method: TF crop_ and_ Resize (in addition, instead of changing the features of the whole input picture into 7x7, if so, only resize has no corp. the function of PyramidROIAlign is to cut out the features of the salient object on the feature map of different sizes according to the bbox coordinates of the salient object and the size of the salient object relative to the area of the whole picture. This process can be understood in combination with the code.)

Let's see how PyramidROIAlign does, that is, the call(self, inputs) method. Code flow:

(1) Initialization, get bboxes and image from input_ meta ,feature_maps:

def call(self, inputs):

# num_boxes refers to the number of proposal s

# Find a suitable proposal through the cyclic feature layer and apply it to ROIAlign

# Crop boxes [batch, num_boxes, (y1, x1, y2, x2)] in normalized coords

boxes = inputs[0]

print('boxes:',boxes)

# Image meta

# Holds details about the image. See compose_image_meta()

image_meta = inputs[1]

# Feature Maps. List of feature maps from different level of the

# feature pyramid. Each is [batch, height, width, channels]

feature_maps = inputs[2:]

Of which:

- Boxes: shape = [batch, num_boxes, (y1, x1, y2, x2)], the coordinates here have been normalized.

- input_meta: it contains all kinds of picture information, including the size and id of the original input picture (although only image_shape will be used...) This is through compose_ image_ Generated by meta method, parse can be used_ image_ Meta (meta) obtains the data in meta. These two methods are Interpretation (I) As described in.

- feature_maps: features extracted by Resnet Graph. Each shape is [batch, height, width, channels]

what? You asked how this parameter was passed in, of course:

layer = PyramidROIAlign(7,7)([bboxes, image_meta, feature_maps])

(2) According to image_ Based on the area information of the original image carried in the meta, we can get which feature image the processed image should be pooled in.

def call(self, inputs):

# (1) Initialize and get bboxes and image from 'input'_ meta ,feature_maps

... # Initialization code omitted

# Assign each ROI to a level in the pyramid based on the ROI area.

# Boxes here are ROI boxes, which are used to calculate the area of each ROI box

y1, x1, y2, x2 = tf.split(boxes, 4, axis=2)

h = y2 - y1 # h.shape=[batch,num_boxes,1]

w = x2 - x1

# Use shape of first image. Images in a batch must have the same size.

# Here we get the size of the original drawing and calculate the area of the original drawing

image_shape = parse_image_meta_graph(image_meta)['image_shape'][0]

# Equation 1 in the Feature Pyramid Networks paper. Account for

# the fact that our coordinates are normalized here.

# e.g. a 224x224 ROI (in pixels) maps to P4

# Original drawing area

image_area = tf.cast(image_shape[0] * image_shape[1], tf.float32)

# It is divided into two steps to calculate the layer in which each ROI box needs to be pooled

roi_level = log2_graph(tf.sqrt(h * w) / (224.0 / tf.sqrt(image_area))) # h. W has been normalized

roi_level = tf.minimum(5, tf.maximum(

2, 4 + tf.cast(tf.round(roi_level), tf.int32))) # Make sure the value is between 2-5

roi_level = tf.squeeze(roi_level, 2) # roi_level.shape=[batch,num_boxes,1]

A little explanation is added here:

- Why should ROI be calculated_ level ?

roi_ The calculation method of level (marked as k) is: k = k 0 + l o g 2 ( w ∗ h 244 ) k=k_0+log_2(\frac{\sqrt{w*h}}{244}) k=k0+log2(244w∗h ) here, W and H are the width and height of the binding frame of the salient object respectively, so w*h is the size of the salient object. 244 is the input size of the pre trained Image Net, such as k 0 k_0 k0 = 4, then, when w*h=244, k=4, the feature of the significance object is crop ped from P4 in the feature pyramid.

If the salient object occupies a large area of the original image, cut it on a deeper (i.e. more convolution times) feature image (such as P5). If the salient object is an insignificant small thing, such as k 0 k_0 k0 = 4, w*h=112, then k=3, and small salient objects are cut on a "shallower" feature map (such as P3). This is conducive to detecting targets of different sizes.

- In which feature map is ROI calculated? The results of Pooling are stored in ROI_ ROI in level_ level. shape=[batch,num_boxes,1]

(3) Loop feature_maps, in feature_ TF. Is used in maps image. crop_ and_ The resize function gets pooled and stores it in the list:

def call(self, inputs):

#(1) Initialize and get bboxes and image from 'input'_ meta ,feature_maps

... # Initialization code omitted

#(2) According to image_ Based on the area information of the original image carried in the meta, we can get which feature image the processed image should be pooled in

... # Code omission

# Loop through levels and apply ROI pooling to each. P2 to P5.

# The five obtained feature maps are fused with different levels

pooled = []

box_to_level = [] # box_to_level[i, 0] refers to the image index to which the current feat belongs, box_to_level[i, 1] indicates its box serial number

for i, level in enumerate(range(2, 6)): # Only use 2-5 four feature maps

# First, find out the ROI that needs to be calculated at the level

# tf.where return format [coordinate 1, coordinate 1...]

# np.where returns the format [[coordinate 1.x, coordinate 2.x...], [coordinate 1.y, coordinate 2.y...]]

# Return the i-th proposal coordinate of the nth picture (n corresponds to batch coordinate and I corresponds to the one-dimensional coordinate of num_boxes)

ix = tf.where(tf.equal(roi_level, level)) # ix is a coordinate set, each coordinate has three numbers, and the third digit must be 0 (because roi_level.shape=[batch,num_boxes,1]).

# level_boxes records the coordinates of each box allocated in the corresponding level feature layer (the picture corresponding to the candidate box index)

# box_ Indexes records the index of the picture corresponding to each box in the batch (the candidate box index corresponds to its coordinates, that is, the coordinates of the small black box)

level_boxes = tf.gather_nd(boxes, ix) # [number of proposal s at this level, 4]

# Box indices for crop_and_resize.

box_indices = tf.cast(ix[:, 0], tf.int32) # Record the serial number of the picture corresponding to each proposal

# ↑ take ix[:,0] as TF image. crop_ and_ Resize parameter passing requires

# Keep track of which box is mapped to which level

box_to_level.append(ix)

# Stop gradient propogation to ROI proposals

# level_boxes and boxes_ Indices are the result of RPN calculation,

# However, the output Tensor after the two actions on the feature is the input of RCNN,

# The gradients of the two parts cannot flow through each other, so TF is required stop_ Gradient() truncates gradient propagation.

level_boxes = tf.stop_gradient(level_boxes)

box_indices = tf.stop_gradient(box_indices)

# Crop and Resize

# From Mask R-CNN paper: "We sample four regular locations, so

# that we can evaluate either max or average pooling. In fact,

# interpolating only a single value at each bin center (without

# pooling) is nearly as effective."

#

# Here we use the simplified approach of a single value per bin,

# which is how it's done in tf.crop_and_resize()

# Result: [batch * num_boxes, pool_height, pool_width, channels]

# Call API bilinear interpolation

# tf. image. crop_ and_ Parameter description of resize:

# -image: represents the feature map

# -boxes: refers to the area to be divided. The input format is [ymin, xmin, ymax, xmax]

# - box_ind: is the index between boxes and image. It is a 1-dimensional tensor with the shape of [num_boxes], box_ The ind [i] value specifies the image to be referenced by the ith box

# - crop_size: indicates the size after RoiAlign

pooled.append(tf.image.crop_and_resize(

feature_maps[i], level_boxes, box_indices, self.pool_shape,

method="bilinear"))

# Input parameter shape:

# [batch, image_height, image_width, channels]

# [this_level_num_boxes, 4]

# [this_level_num_boxes]

# [height, pool_width]

# Pack pooled features into one tensor

# For each box, the corresponding features of the box on the feature map of each layer are extracted, and then a large feature table pooled is formed

pooled = tf.concat(pooled, axis=0)

# Pack box_to_level mapping into one array and add another

# column representing the order of pooled boxes

box_to_level = tf.concat(box_to_level, axis=0)

box_range = tf.expand_dims(tf.range(tf.shape(box_to_level)[0]), 1)

box_to_level = tf.concat([tf.cast(box_to_level, tf.int32), box_range],

axis=1)

About TF image. crop_ and_ Supplementary description of the key function resize: this function will first cut out a part on the graph by index according to the input parameters [ymin, xmin, ymax, xmax], and then resize this part to the size you want, such as:

In addition, the index code (that is, the code related to ix) is not easy to understand. You can see it The third part of this article is an example of index explanation (it's OK to be reasonable and not understand. It doesn't affect the understanding of the whole mask rcnn code, but understanding it will help you write your own code and use the index in the future)

(4) Adjust the order of shapes to obtain the output of shapes such as [batch, num_bbox, pool_height, pool_width, channels];

def call(self, inputs):

... #(1) (3) omit code

# So far, we have obtained the tensor pooled of the feat set recording all ROIAlign results and the tensor box recording the relevant information of these feats_ to_ level,

# Due to the extraction method, the feat s at this time are not sorted in the original order (batch first and then box index)

# Next, we try to restore the order (ROIAlign acts on the corresponding proposal of the corresponding image to generate a feat)

# Rearrange pooled features to match the order of the original boxes

# Sort box_to_level by batch then box index

# TF doesn't have a way to sort by two columns, so merge them and sort.

# box_to_level[i, 0] refers to the image index to which the current feat belongs, box_to_level[i, 1] indicates its box serial number

sorting_tensor = box_to_level[:, 0] * 100000 + box_to_level[:, 1]

ix = tf.nn.top_k(sorting_tensor, k=tf.shape(

box_to_level)[0]).indices[::-1]

ix = tf.gather(box_to_level[:, 2], ix)

pooled = tf.gather(pooled, ix)

# Re-add the batch dimension

shape = tf.concat([tf.shape(boxes)[:2], tf.shape(pooled)[1:]], axis=0)

pooled = tf.reshape(pooled, shape)

return pooled

2.2 Detection Target Layer

Detection Target Layer part input (gt refers to ground truth):

- proposals: [POST_NMS_ROIS_TRAINING, (y1, x1, y2, x2)] coordinates are normalized. If the actual number of proposal s generated by the picture is insufficient, zero will be added to the fixed value

- gt_class_ids: [MAX_GT_INSTANCES] int class IDs

- gt_boxes: [MAX_GT_INSTANCES, (y1, x1, y2, x2)] coordinates are normalized

- gt_masks: [height, width, MAX_GT_INSTANCES] of boolean type.

return: - rois: [TRAIN_ROIS_PER_IMAGE, (y1, x1, y2, x2)] coordinates are normalized

- class_ids: [TRAIN_ROIS_PER_IMAGE]. Integer class IDs. If the quantity is insufficient, zero will be added to the fixed value.

- deltas: [TRAIN_ROIS_PER_IMAGE, (dy, dx, log(dh), log(dw))]

- masks: [TRAIN_ROIS_PER_IMAGE, height, width]. These masks are masks that are cropped into corresponding bbox boxes and resized to the network output size.

There are three parts:

- overlaps_ Graph (box1, box2) method: calculate the overlapping part between two boxes, that is, IoU value. This part of the code is simple and omitted.

- detection_targets_graph method: the main processing flow of detection

- DetectionTargetLayer class

detection_targets_graph code implementation process:

(1) remove zero padding, remove gt_class_ids and gt_masks,proposals,gt_ 0 in boxes (GT is short for ground truth)

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

"""Generates detection targets for one image. Subsamples proposals and

generates target class IDs, bounding box deltas, and masks for each.

Inputs:

proposals: [POST_NMS_ROIS_TRAINING, (y1, x1, y2, x2)] in normalized coordinates. Might

be zero padded if there are not enough proposals.

gt_class_ids: [MAX_GT_INSTANCES] int class IDs

gt_boxes: [MAX_GT_INSTANCES, (y1, x1, y2, x2)] in normalized coordinates.

gt_masks: [height, width, MAX_GT_INSTANCES] of boolean type.

Returns: Target ROIs and corresponding class IDs, bounding box shifts,

and masks.

rois: [TRAIN_ROIS_PER_IMAGE, (y1, x1, y2, x2)] in normalized coordinates

class_ids: [TRAIN_ROIS_PER_IMAGE]. Integer class IDs. Zero padded.

deltas: [TRAIN_ROIS_PER_IMAGE, (dy, dx, log(dh), log(dw))]

masks: [TRAIN_ROIS_PER_IMAGE, height, width]. Masks cropped to bbox

boundaries and resized to neural network output size.

Note: Returned arrays might be zero padded if not enough target ROIs.

"""

# Assertions

asserts = [

tf.Assert(tf.greater(tf.shape(proposals)[0], 0), [proposals],

name="roi_assertion"),

]

with tf.control_dependencies(asserts):

proposals = tf.identity(proposals)

# Remove zero padding

proposals, _ = trim_zeros_graph(proposals, name="trim_proposals")

gt_boxes, non_zeros = trim_zeros_graph(gt_boxes, name="trim_gt_boxes")

gt_class_ids = tf.boolean_mask(gt_class_ids, non_zeros,

name="trim_gt_class_ids")

gt_masks = tf.gather(gt_masks, tf.where(non_zeros)[:, 0], axis=2,

name="trim_gt_masks")

(2) Handle crowds (a crowd references to a bounding box around severe instances), using TF Where get crowd_id, and then use TF Gather get crowd_boxes, and non_crowd_ix get gt_class_id,gt_boxes,gt_masks

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

... # Code omission

# Handle COCO crowds

# A crowd box in COCO is a bounding box around several instances. Exclude

# them from training. A crowd box is given a negative class ID.

crowd_ix = tf.where(gt_class_ids < 0)[:, 0]

non_crowd_ix = tf.where(gt_class_ids > 0)[:, 0]

crowd_boxes = tf.gather(gt_boxes, crowd_ix)

gt_class_ids = tf.gather(gt_class_ids, non_crowd_ix)

gt_boxes = tf.gather(gt_boxes, non_crowd_ix)

gt_masks = tf.gather(gt_masks, non_crowd_ix, axis=2)

(3) Calculate proposals and GT_ The overlapped IoU of boxes exists in overlaps

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

... # Code omission

# Compute overlaps matrix [proposals, gt_boxes]

overlaps = overlaps_graph(proposals, gt_boxes)

(4) Calculate crowd_overlaps = IoU(proposals and crowd_boxes), where Max is obtained. The criterion for judging whether it is crowd is: crowd_ iou_ max<0.001

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

... # Code omission

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

crowd_overlaps = overlaps_graph(proposals, crowd_boxes)

crowd_iou_max = tf.reduce_max(crowd_overlaps, axis=1)

no_crowd_bool = (crowd_iou_max < 0.001)

(5) Judge positive/negative ROIs: ① positive ROIs refers to and GT_ The maximum IOU of boxes > = 0.5 ② negative refers to and GT_ The maximum IOU of boxes is less than 0.5 and is not crowd (crowd_iou_max < 0.001)

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

... # Code omission

# Determine positive and negative ROIs

roi_iou_max = tf.reduce_max(overlaps, axis=1)

# 1. Positive ROIs are those with >= 0.5 IoU with a GT box

positive_roi_bool = (roi_iou_max >= 0.5)

positive_indices = tf.where(positive_roi_bool)[:, 0]

# 2. Negative ROIs are those with < 0.5 with every GT box. Skip crowds.

negative_indices = tf.where(tf.logical_and(roi_iou_max < 0.5, no_crowd_bool))[:, 0]

(6) According to the set number of positive, control the Positive/Negative ratio and filter the proposals to obtain the proposal_rois

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

# Determine positive and negative ROIs

... # Code omission

# Subsample ROIs. Aim for 33% positive

# Positive ROIs

positive_count = int(config.TRAIN_ROIS_PER_IMAGE *

config.ROI_POSITIVE_RATIO)

positive_indices = tf.random_shuffle(positive_indices)[:positive_count]

positive_count = tf.shape(positive_indices)[0]

# Negative ROIs. Add enough to maintain positive:negative ratio.

r = 1.0 / config.ROI_POSITIVE_RATIO

negative_count = tf.cast(r * tf.cast(positive_count, tf.float32), tf.int32) - positive_count

negative_indices = tf.random_shuffle(negative_indices)[:negative_count]

# Gather selected ROIs

positive_rois = tf.gather(proposals, positive_indices)

negative_rois = tf.gather(proposals, negative_indices)

(7)assign positive rois to gt boxes

roi_gt_box_assignment is positive_ The maximum index value of overlaps. ROI is obtained from this index_ gt_ Boxes and roi_gt_class_ids

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

# Determine positive and negative ROIs

# Subsample ROIs. Aim for 33% positive

... # Code omission

# Assign positive ROIs to GT boxes.

positive_overlaps = tf.gather(overlaps, positive_indices)

roi_gt_box_assignment = tf.cond(

tf.greater(tf.shape(positive_overlaps)[1], 0),

true_fn = lambda: tf.argmax(positive_overlaps, axis=1),

false_fn = lambda: tf.cast(tf.constant([]),tf.int64)

)

roi_gt_boxes = tf.gather(gt_boxes, roi_gt_box_assignment)

roi_gt_class_ids = tf.gather(gt_class_ids, roi_gt_box_assignment)

(8) Calculate roi_gt_boxes and positive_ The delta of ROIs (this is also the coordinate)

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

# Determine positive and negative ROIs

# Subsample ROIs. Aim for 33% positive

# Assign positive ROIs to GT boxes.

... # Code omission

# Compute bbox refinement for positive ROIs

deltas = utils.box_refinement_graph(positive_rois, roi_gt_boxes)

deltas /= config.BBOX_STD_DEV

(9)assign positive rois to gt masks

According to roi_gt_box_assignment select the correct roi_masks

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

# Determine positive and negative ROIs

# Subsample ROIs. Aim for 33% positive

# Assign positive ROIs to GT boxes.

# Compute bbox refinement for positive ROIs

... # Code omission

# Assign positive ROIs to GT masks

# Permute masks to [N, height, width, 1]

transposed_masks = tf.expand_dims(tf.transpose(gt_masks, [2, 0, 1]), -1)

# Pick the right mask for each ROI

roi_masks = tf.gather(transposed_masks, roi_gt_box_assignment)

# Compute mask targets

boxes = positive_rois

if config.USE_MINI_MASK:

# Transform ROI coordinates from normalized image space

# to normalized mini-mask space.

# If enabled, resizes instance masks to a smaller size to reduce

# memory load. Recommended when using high-resolution images.

y1, x1, y2, x2 = tf.split(positive_rois, 4, axis=1)

gt_y1, gt_x1, gt_y2, gt_x2 = tf.split(roi_gt_boxes, 4, axis=1)

gt_h = gt_y2 - gt_y1

gt_w = gt_x2 - gt_x1

y1 = (y1 - gt_y1) / gt_h

x1 = (x1 - gt_x1) / gt_w

y2 = (y2 - gt_y1) / gt_h

x2 = (x2 - gt_x1) / gt_w

boxes = tf.concat([y1, x1, y2, x2], 1)

box_ids = tf.range(0, tf.shape(roi_masks)[0])

masks = tf.image.crop_and_resize(tf.cast(roi_masks, tf.float32), boxes,

box_ids,

config.MASK_SHAPE)

# Remove the extra dimension from masks.

masks = tf.squeeze(masks, axis=3)

# Threshold mask pixels at 0.5 to have GT masks be 0 or 1 to use with

# binary cross entropy loss.

masks = tf.round(masks)

(10) Put positive and negative cat together for rois, and roi_gt_class_ids,delta_ Mask zero

def detection_targets_graph(proposals, gt_class_ids, gt_boxes, gt_masks, config):

# Remove zero padding

# Handle COCO crowds

# Compute overlaps matrix [proposals, gt_boxes]

# Compute overlaps with crowd boxes [proposals, crowd_boxes]

# Determine positive and negative ROIs

# Subsample ROIs. Aim for 33% positive

# Assign positive ROIs to GT boxes.

# Compute bbox refinement for positive ROIs

# Assign positive ROIs to GT masks

# Compute mask targets

... # Code omission

# Append negative ROIs and pad bbox deltas and masks that

# are not used for negative ROIs with zeros.

rois = tf.concat([positive_rois, negative_rois], axis=0)

N = tf.shape(negative_rois)[0]

P = tf.maximum(config.TRAIN_ROIS_PER_IMAGE - tf.shape(rois)[0], 0)

rois = tf.pad(rois, [(0, P), (0, 0)])

roi_gt_boxes = tf.pad(roi_gt_boxes, [(0, N + P), (0, 0)])

roi_gt_class_ids = tf.pad(roi_gt_class_ids, [(0, N + P)])

deltas = tf.pad(deltas, [(0, N + P), (0, 0)])

masks = tf.pad(masks, [[0, N + P], (0, 0), (0, 0)])

return rois, roi_gt_class_ids, deltas, masks

3 about the index used in the code

Sometimes I'm confused. I don't know how to turn in the code. One way to help understand is to compile some false data and run the code of the index separately to see how it changes.

Here are two examples:

Example 1

Index code example 1

The source code is part of the call method in class PyramidROIAlign(KE.Layer):

# The five obtained feature maps are fused with different levels

pooled = []

box_to_level = [] # box_to_level[i, 0] refers to the image index to which the current feat belongs, box_to_level[i, 1] indicates its box serial number

for i, level in enumerate(range(2, 6)): # Only use 2-5 four feature maps

# First, find out the ROI that needs to be calculated at the level

# tf.where return format [coordinate 1, coordinate 1...]

# np. X coordinate [. 1, where], [coordinate 1.y, coordinate 2.y...]]

# Return the i-th proposal coordinate of the nth picture (n corresponds to batch coordinate and I corresponds to the one-dimensional coordinate of num_boxes)

ix = tf.where(tf.equal(roi_level, level)) # ix is a coordinate set, each coordinate has three numbers, and the third digit must be 0 (because roi_level.shape=[batch,num_boxes,1]).

# level_boxes records the coordinates of each box allocated in the corresponding level feature layer (the picture corresponding to the candidate box index)

# box_ Indexes records the index of the picture corresponding to each box in the batch (the candidate box index corresponds to its coordinates, that is, the coordinates of the small black box)

level_boxes = tf.gather_nd(boxes, ix) # [number of proposal s at this level, 4]

# Box indices for crop_and_resize.

box_indices = tf.cast(ix[:, 0], tf.int32) # Record the serial number of the picture corresponding to each proposal

# ↑ take ix[:,0] as TF image. crop_ and_ Resize parameter passing requires

# Keep track of which box is mapped to which level

box_to_level.append(ix)

This section of the index is hard to understand, so let's compile some data and write a code to see how it changes:

import numpy as np

import tensorflow as tf

# I'll show you how to slice and index

# For a picture, probs shape=(N,num_class)

# Where N is the number of objects detected in this picture. In the example, suppose N=6, that is, a total of 6 objects are detected in the picture

# num_class is the total number of categories marked in all training data. In the example, it is assumed that there are a total of 8 objects

def test():

box_to_level = []

# Suppose batch=1 num_boxes=5. Make up some data randomly on this basis:

roi_level = [[

[4],

[3],

[3],

[2],

[5]

]] # roi_level.shape=[batch,num_boxes,1]

roi_level = np.array(roi_level)

print('roi_level.shape=', roi_level.shape)

boxes = [[

[0.1, 0.3, 0.13, 0.34],

[0.5, 0.66, 0.67, 0.89],

[0.4, 0.61, 0.7, 0.8],

[0.2, 0.3, 0.4, 0.5],

[0.23, 0.13, 0.43, 0.54]

]] # [batch, num_boxes, (y1, x1, y2, x2)]

boxes = np.array(boxes)

print('boxes.shape=', boxes.shape)

# ------------Run hard to understand code--------------

for i, level in enumerate(range(2, 6)):

ix = tf.where(tf.equal(roi_level, level))

level_boxes = tf.gather_nd(boxes, ix)

box_indices = tf.cast(ix[:, 0], tf.int32)

print('i=',i,' level=',level,' ---------------')

with tf.Session() as sess:

print('ix:', sess.run(ix))

print('level_boxes:', sess.run(level_boxes))

print('box_indices:', sess.run(box_indices))

box_to_level.append(ix)

print("box_to_level:",)

with tf.Session() as sess:

for i in box_to_level:

print(sess.run(i))

if __name__ == '__main__':

test()

Operation results:

roi_level.shape= (1, 5, 1)

boxes.shape= (1, 5, 4)

roi_level = [[

[4],

[3],

[3],

[2],

[5]

]]

boxes = [[

[0.1, 0.3, 0.13, 0.34],

[0.5, 0.66, 0.67, 0.89],

[0.4, 0.61, 0.7, 0.8],

[0.2, 0.3, 0.4, 0.5],

[0.23, 0.13, 0.43, 0.54]

]]

# i= 0 level= 2 ---------------

ix: [[0 3 0]]

level_boxes: [0.2]

box_indices: [0]

# i= 1 level= 3 ---------------

ix: [[0 1 0]

[0 2 0]]

level_boxes: [0.5 0.4]

box_indices: [0 0]

# i= 2 level= 4 ---------------

ix: [[0 0 0]]

level_boxes: [0.1]

box_indices: [0]

# i= 3 level= 5 ---------------

ix: [[0 4 0]]

level_boxes: [0.23]

box_indices: [0]

Example 2

The source code is refine in the Detection Layer_ detections_ In the first few sentences of graph, the code of mask rcnn is as follows:

# -----------Get the score of the class with the highest score in each recommended area-----------

# Class IDs per ROI

class_ids = tf.argmax(probs, axis=1, output_type=tf.int32) #[N] , the highest category per picture

# Class probability of the top class of each ROI

indices = tf.stack([tf.range(probs.shape[0]), class_ids], axis=1) # [N, (picture serial number, highest class serial number)]

class_scores = tf.gather_nd(probs, indices) # [N] , the highest classification score of each picture

Compile some data and write a code to see how it changes:

import numpy as np

import tensorflow as tf

# I'll show you how to slice and index

# For a picture, probs shape=(N,num_class)

# Where N is the number of objects detected in this picture. In the example, suppose N=6, that is, a total of 6 objects are detected in the picture

# num_class is the total number of categories marked in all training data. In the example, it is assumed that there are a total of 8 objects

def test():

probs = np.array([

[0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.3],

[0, 0.5, 0.2, 0.3, 0.4, 0.1, 0.6, 0.2, 0.3],

[0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.1, 0.3],

[0, 0.9, 0.2, 0.3, 0.4, 0.5, 0.6, 0.4, 0.3],

[0, 0.1, 0.2, 0.9, 0.4, 0.5, 0.6, 0.2, 0.3],

[0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.9, 0.6, 0.3],

])

print(probs)

class_ids = tf.argmax(probs, axis=1, output_type=tf.int32) # [N] , the highest category per picture

# Class probability of the top class of each ROI

indices = tf.stack([tf.range(probs.shape[0]), class_ids], axis=1) # [N, (picture serial number, highest class serial number)]

class_scores = tf.gather_nd(probs, indices) # [N] , the highest classification score of each picture

with tf.Session() as sess:

print(sess.run(indices))

print(sess.run(class_scores))

if __name__ == '__main__':

test()

The output is:

# The output is: # probs = [[0. 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.3] [0. 0.5 0.2 0.3 0.4 0.1 0.6 0.2 0.3] [0. 0.1 0.2 0.3 0.4 0.5 0.6 0.1 0.3] [0. 0.9 0.2 0.3 0.4 0.5 0.6 0.4 0.3] [0. 0.1 0.2 0.9 0.4 0.5 0.6 0.2 0.3] [0. 0.1 0.2 0.3 0.4 0.5 0.9 0.6 0.3]] # class_ids = [7 6 6 1 3 6] # indices = [[0 7] [1 6] [2 6] [3 1] [4 3] [5 6]] # class_scores = [0.7 0.6 0.6 0.9 0.9 0.9]

So can you better understand:

- tf.gather_nd and TF The difference between gather

- Obtaining method and significance of indices

Ideas for writing code:

First, specify the variable shape and target - to get the maximum score of each line from probs, which is a 2D tensor, so the index must also be 2D, using TF Stack can do it. To get the index of the maximum value per row, TF Argmax can do it.

After getting indices, use TF gather_ Nd gets the specific value, and this completes the goal!