Reproduced from the public account "fun MySQL". Author: Hong bin

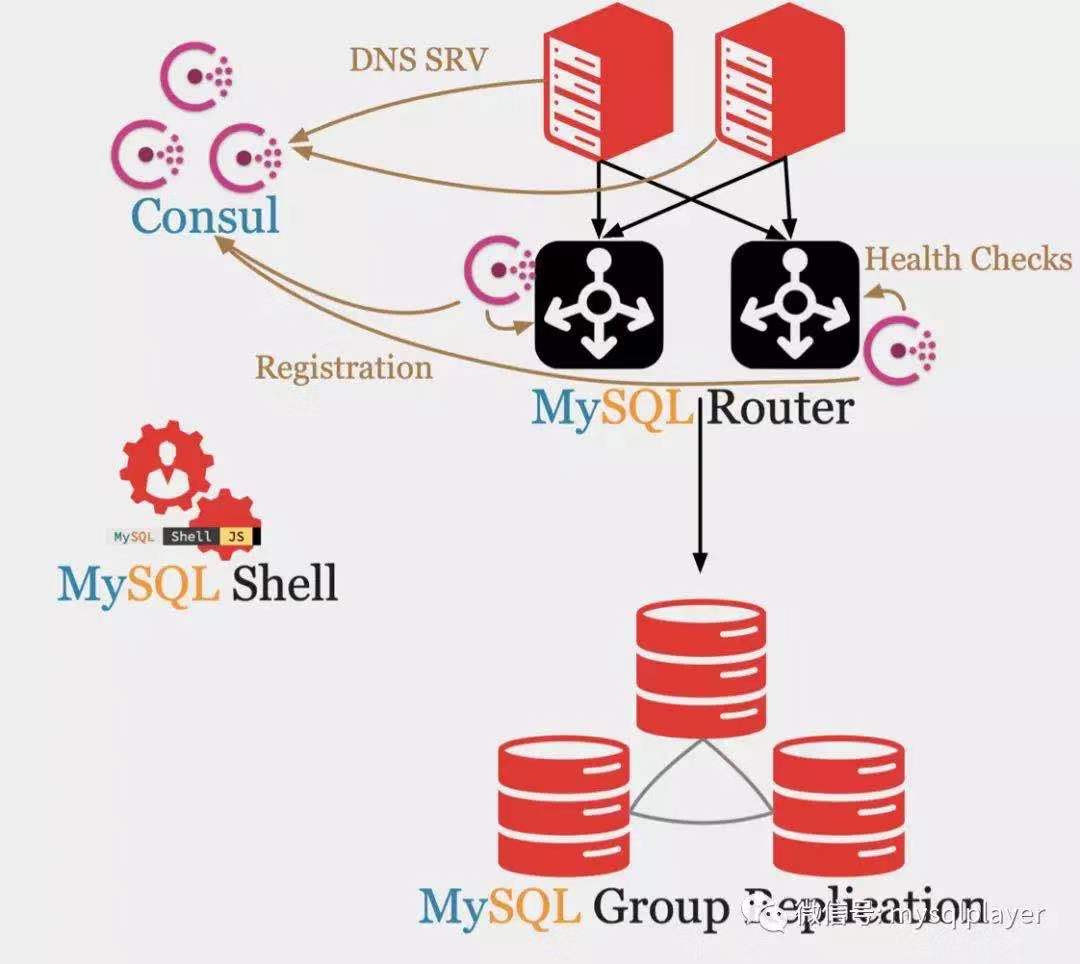

MySQL Router is the access entry point of the InnoDB Cluster architecture. For deployment, the official recommendation is to run Router alongside the application so that Router does not become a single point of failure. Customers have asked whether Router can be deployed separately from the application, because co-locating the two is inconvenient for them. Until now, that meant adding a VIP or a load-balancing layer in front of Router. I have always thought that the MySQL Connector itself should implement failover and load balancing for the access link. Now that the connectors support DNS SRV, Router no longer has to be deployed with the application, and the VIP and load balancer can be dropped as well. This makes the MySQL InnoDB Cluster solution more complete, and easier to fit into a service mesh architecture with service discovery components such as Consul.

DNS SRV is a type of DNS record used to specify the address of a service. An SRV record carries not only the target host of the service but also its port, and it lets you set a priority and a weight for each target.

Starting with 8.0.19, the MySQL Connectors for multiple languages support DNS SRV over both the classic protocol and X Protocol. The implementation follows RFC 2782, including Priority and Weight: a client must connect to the target with the lowest Priority value, and among targets with the same Priority, a higher Weight means a higher probability of being chosen. The connectors with DNS SRV support are:

- Connector/NET

- Connector/ODBC

- Connector/J

- Connector/Node.js

- Connector/Python

- Connector/C++
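The Priority and Weight rules above can be illustrated with a small sketch. This is not the connectors' actual code; it is a minimal Python rendering of the selection procedure described in RFC 2782, and the names `SrvRecord` and `order_srv_targets` are made up for this example.

```python
import random
from collections import namedtuple

# Hypothetical record type for illustration: the four SRV fields.
SrvRecord = namedtuple("SrvRecord", "priority weight port target")


def order_srv_targets(records, rng=random):
    """Return records in the order a client would try them (RFC 2782 style)."""
    ordered = []
    # Lower Priority values are always tried before higher ones.
    for priority in sorted({r.priority for r in records}):
        # Zero-weight records are placed first so they keep a small chance.
        pool = sorted((r for r in records if r.priority == priority),
                      key=lambda r: r.weight != 0)
        while pool:
            total = sum(r.weight for r in pool)
            pick = rng.randint(0, total)  # inclusive on both ends
            running = 0
            for i, rec in enumerate(pool):
                running += rec.weight
                if running >= pick:
                    # Heavier records cover more of the range, so they are
                    # picked more often.
                    ordered.append(pool.pop(i))
                    break
    return ordered


if __name__ == "__main__":
    records = [
        SrvRecord(1, 1, 6446, "router-a.example"),
        SrvRecord(1, 3, 6556, "router-b.example"),
        SrvRecord(2, 1, 6446, "router-c.example"),
    ]
    for rec in order_srv_targets(records):
        print(rec)
```

In this sketch the Priority 2 target is only reached after both Priority 1 targets, which is the failover behaviour; the weighted pick inside one Priority level is the load-balancing behaviour.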

Next, let's walk through how an application uses DNS SRV. Here we use Consul for service discovery.

The Consul agent is deployed on the same node as MySQL Router. It checks that the Router service is alive and registers the service information with the Consul server. The Connector on the application side is configured with the service address. When the application accesses the database, the Connector first sends a DNS SRV query to the Consul server, which replies with the address and port of MySQL Router; the application then connects to that MySQL Router.

I tested this on my local machine.

- First, use MySQL Shell to create an InnoDB Cluster

      for i in `seq 4000 4002`; do
          echo "Deploy mysql sandbox $i"
          mysqlsh -- dba deploy-sandbox-instance $i --password=root
      done
      echo "Create innodb cluster..."
      mysqlsh root@localhost:4000 -- dba create-cluster cluster01
      mysqlsh root@localhost:4000 -- cluster add-instance --recoveryMethod=clone --password=root root@localhost:4001
      mysqlsh root@localhost:4000 -- cluster add-instance --recoveryMethod=clone --password=root root@localhost:4002

- Deploy two MySQL Router instances as access proxies

      for i in 6446 6556; do
          echo "Bootstrap router $i"
          mysqlrouter --bootstrap root@localhost:4000 --conf-use-gr-notifications -d router_$i --conf-base-port $i --name router_$i
          # Switch Router logging from INFO to DEBUG
          # (with BSD sed on macOS, use: sed -i '' 's/.../.../g' file)
          sed -i 's/level = INFO/level = DEBUG/g' router_$i/mysqlrouter.conf
          sh router_$i/stop.sh
          sh router_$i/start.sh
      done

- Install Consul for service registration and DNS resolution. In this test environment, we use development mode and deploy a single Consul node. In a production environment, you need to deploy multiple agents and servers.

      echo "Install consul..."
      brew install consul
      consul agent -dev &

- Register the two Router proxy services in Consul

      echo "Services register..."
      consul services register -name router -id router1 -port 6446 -tag rw
      consul services register -name router -id router2 -port 6556 -tag rw

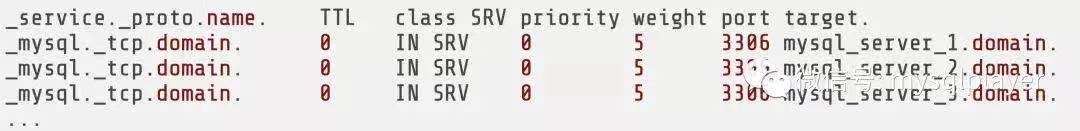
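The same registration can also be done through the Consul agent's HTTP API, which makes it easy to attach the liveness check mentioned earlier. The following is only a sketch: the helper names `router_service` and `register` are hypothetical, the TCP check against `127.0.0.1` and the `10s` interval are assumptions for this local setup, and the agent is assumed to listen on Consul's default HTTP port 8500.

```python
import json
import urllib.request


def router_service(service_id, port, check_host="127.0.0.1"):
    """Build a service definition for one MySQL Router instance."""
    return {
        "ID": service_id,
        "Name": "router",
        "Tags": ["rw"],
        "Port": port,
        # Assumed health check: the service counts as alive while the
        # Router port accepts TCP connections.
        "Check": {"TCP": f"{check_host}:{port}", "Interval": "10s"},
    }


def register(service, agent_url="http://127.0.0.1:8500"):
    """PUT the definition to the local Consul agent; returns the HTTP status."""
    req = urllib.request.Request(
        f"{agent_url}/v1/agent/service/register",
        data=json.dumps(service).encode(),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status


# Usage, with a Consul agent running locally:
#   register(router_service("router1", 6446))
#   register(router_service("router2", 6556))
```

With a check attached, a Router that stops accepting connections should drop out of the DNS answers, so clients are not handed a dead proxy.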

- Test whether the DNS SRV record resolves correctly. Each SRV answer returns the service port and the service host name. The host name has a corresponding A record, which resolves to 127.0.0.1.

      echo "Test dns srv..."
      dig router.service.consul SRV -p 8600

      ;; QUESTION SECTION:
      ;router.service.consul.    IN  SRV

      ;; ANSWER SECTION:
      router.service.consul.  0  IN  SRV  1 1 6556 MBP.node.dc1.consul.
      router.service.consul.  0  IN  SRV  1 1 6446 MBP.node.dc1.consul.

      ;; ADDITIONAL SECTION:
      MBP.node.dc1.consul.    0  IN  A    127.0.0.1
      MBP.node.dc1.consul.    0  IN  TXT  "consul-network-segment="
      MBP.node.dc1.consul.    0  IN  A    127.0.0.1
      MBP.node.dc1.consul.    0  IN  TXT  "consul-network-segment="
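To make the layout of the answer section explicit, here is a small Python sketch that splits the two SRV lines from the output above into their fields. The `SrvAnswer` type and `parse_srv_line` helper are names invented for this illustration; the field order (priority, weight, port, target) is the one defined for SRV records.

```python
from typing import NamedTuple


class SrvAnswer(NamedTuple):
    name: str
    ttl: int
    priority: int
    weight: int
    port: int
    target: str


def parse_srv_line(line: str) -> SrvAnswer:
    """Parse one answer line: <name> <ttl> IN SRV <priority> <weight> <port> <target>."""
    name, ttl, rr_class, rr_type, priority, weight, port, target = line.split()
    if (rr_class, rr_type) != ("IN", "SRV"):
        raise ValueError(f"not an SRV record: {line!r}")
    # Drop the trailing dot of the fully qualified target name.
    return SrvAnswer(name, int(ttl), int(priority), int(weight), int(port),
                     target.rstrip("."))


answers = [parse_srv_line(line) for line in (
    "router.service.consul. 0 IN SRV 1 1 6556 MBP.node.dc1.consul.",
    "router.service.consul. 0 IN SRV 1 1 6446 MBP.node.dc1.consul.",
)]
for a in answers:
    print(f"{a.target}:{a.port} priority={a.priority} weight={a.weight}")
```

Both records carry the same priority and weight, so a client following RFC 2782 treats the two routers as equal candidates.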

- Consul's DNS service listens on port 8600. On the local machine, you need to set up DNS forwarding so that DNS queries from the application for the Consul service domain are forwarded to that port. Here I use dnsmasq for local forwarding; in a production environment, you can use BIND.

      echo "Install dnsmasq..."
      brew install dnsmasq
      echo 'server=/consul/127.0.0.1#8600' > /usr/local/etc/dnsmasq.d/consul
      sudo brew services restart dnsmasq

- Once DNS forwarding is set up, run the query again without specifying the DNS port to check that the SRV record still resolves through the forwarder.

      echo "Test dns forwarding..."
      dig router.service.consul SRV

- Install the Python connector (MySQL Connector/Python)

pip install mysql-connector-python

- When setting the connection parameters, set host to the service name registered in Consul and add the dns_srv parameter. Do not specify a port.

      import mysql.connector

      # host is the service name registered in Consul; no port is given with dns_srv=True
      cnx = mysql.connector.connect(user='root', password='root',
                                    database='mysql_innodb_cluster_metadata',
                                    host='router.service.consul', dns_srv=True)
      cursor = cnx.cursor()
      query = "select instance_id from v2_this_instance"
      cursor.execute(query)
      # Each row is a tuple, so unpack its single column
      for (instance_id,) in cursor:
          print("instance id: {}".format(instance_id))
      cursor.close()
      cnx.close()

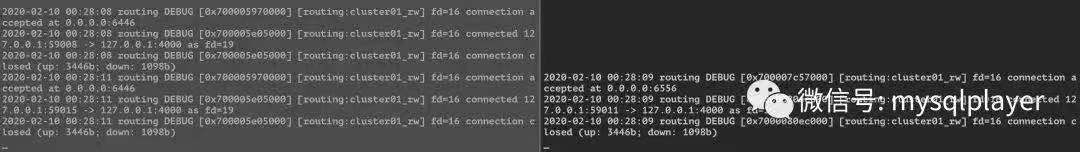

From the MySQL Router logs, you can see that requests are distributed across both routers in a load-balanced manner.