1 Introduction

Keepalived is a high-performance high-availability (hot standby) solution for servers. Its main purpose is to prevent a single point of failure. It works alongside load balancers and reverse proxies such as Nginx and HAProxy to make web services highly available. Keepalived implements high availability (HA) on top of VRRP (Virtual Router Redundancy Protocol), a protocol designed for router redundancy. VRRP groups two or more router devices into one virtual device that exposes one or more virtual router IP addresses.

2 Installation and configuration

2.1 Installing dependencies

[root@localhost ~]# yum install -y openssl openssl-devel

2.2 Compilation and installation

[root@localhost ~]# wget http://www.keepalived.org/software/keepalived-1.2.18.tar.gz

[root@localhost ~]# tar -zxvf keepalived-1.2.18.tar.gz -C /usr/local/

[root@localhost ~]# cd /usr/local/keepalived-1.2.18/ && ./configure --prefix=/usr/local/keepalived

[root@localhost ~]# make && make install
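Before moving on, it can help to confirm that the build produced the files the later steps rely on. The following is a minimal sketch, not part of keepalived itself: `verify_install` is a hypothetical helper name, and the two paths it checks are the ones used later in this article, relative to the `--prefix` directory.

```shell
#!/bin/bash
# verify_install is a hypothetical helper (an assumption for illustration).
# It checks that the install prefix contains the daemon binary and the sample config.
verify_install() {
    local prefix="$1"
    local missing=0
    # The daemon binary, which is later symlinked into /sbin
    if [ ! -x "$prefix/sbin/keepalived" ]; then
        echo "missing: $prefix/sbin/keepalived"
        missing=1
    fi
    # The sample configuration, which is later copied to /etc/keepalived
    if [ ! -f "$prefix/etc/keepalived/keepalived.conf" ]; then
        echo "missing: $prefix/etc/keepalived/keepalived.conf"
        missing=1
    fi
    return "$missing"
}
```

For the prefix used here, the call would be `verify_install /usr/local/keepalived && echo "install looks complete"`.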

2.3 Configuration

2.3.1 Installing keepalived as a system service

Because keepalived was installed to a custom prefix (/usr/local/keepalived) rather than the default path (/usr/local), a few extra steps are needed after installation to run it as a Linux system service. First, create the configuration directory and copy the sample configuration file:

[root@localhost ~]# mkdir /etc/keepalived

[root@localhost ~]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

Copy the keepalived init script and sysconfig file, then link the binary:

[root@localhost ~]# cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

[root@localhost ~]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@localhost ~]# ln -s /usr/local/keepalived/sbin/keepalived /usr/sbin/

If /sbin/keepalived already exists, delete it first with rm /sbin/keepalived, then create the link:

[root@localhost ~]# ln -s /usr/local/keepalived/sbin/keepalived /sbin/
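The delete-then-link sequence above can also be done in one step. This is a small sketch, assuming an `ln` that supports `-f` (GNU coreutils does); `link_binary` is a hypothetical helper name used only for illustration.

```shell
#!/bin/bash
# link_binary is a hypothetical helper (an assumption, not a keepalived tool).
# It points LINK at TARGET, replacing any file or symlink already at LINK.
link_binary() {
    local target="$1"
    local link="$2"
    # -s creates a symbolic link; -f removes an existing destination file first
    ln -sf "$target" "$link"
}
```

With the paths from this article, that would be `link_binary /usr/local/keepalived/sbin/keepalived /sbin/keepalived`. Passing the full link path rather than a directory keeps the result predictable when a stale link is already present.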

2.3.2 Starting at boot

Enable keepalived to start at boot with chkconfig; this is the last installation step:

[root@localhost ~]# chkconfig keepalived on

2.3.3 Configuration file

Edit the keepalived.conf configuration file:

[root@localhost ~]# vi /etc/keepalived/keepalived.conf

The configuration for node 74 (master) is as follows:

! Configuration File for keepalived

global_defs {

router_id bhz74 ##A string identifying the node, usually hostname

}

vrrp_script chk_haproxy {

script "/etc/keepalived/haproxy_check.sh" ##Execute script location

interval 2 ##Detection interval

weight -20 ## If the check script fails (non-zero exit status), the priority is reduced by 20

}

vrrp_instance VI_1 {

state MASTER ## MASTER on the primary node, BACKUP on the standby node

interface eno16777736 ## Network interface that carries the virtual IP; use the interface that holds the local IP address (eno16777736 here)

virtual_router_id 74 ## Virtual router ID (must be the same on the primary and standby nodes)

mcast_src_ip 192.168.11.74 ## Local IP address of this node

priority 100 ## Priority (1-254); the node with the higher value is preferred as master

nopreempt ## Do not take the VIP back from a running master; the keepalived documentation notes that this requires an initial state of BACKUP

advert_int 1 ## Advertisement interval in seconds; must be the same on both nodes (default 1)

authentication { ## Authentication matching

auth_type PASS

auth_pass bhz

}

track_script {

chk_haproxy

}

virtual_ipaddress {

192.168.11.70 ## Virtual ip, multiple can be specified

}

}
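The interaction between `priority` and the `weight` of a tracked script is easier to see with numbers. The sketch below only mirrors the arithmetic and is not how keepalived is implemented; `effective_priority` is a hypothetical helper. It assumes the commonly documented rule that a negative weight is subtracted while the check fails and a positive weight is added while it succeeds. Note that with `nopreempt` set, a lower effective priority on its own may not move the VIP, which is presumably why the check script in section 3 stops keepalived outright.

```shell
#!/bin/bash
# effective_priority is a hypothetical helper that mirrors the arithmetic only.
# Arguments: base priority, vrrp_script weight, exit status of the check script.
effective_priority() {
    local base="$1"
    local weight="$2"
    local status="$3"
    if [ "$weight" -lt 0 ] && [ "$status" -ne 0 ]; then
        # Negative weight: applied while the check is failing
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$status" -eq 0 ]; then
        # Positive weight: applied while the check is succeeding
        echo $((base + weight))
    else
        echo "$base"
    fi
}

effective_priority 100 -20 0   # check passing: prints 100
effective_priority 100 -20 1   # check failing: prints 80
```

With the values in this article, a failing check would bring the master from 100 down to 80, which is below the backup node's priority of 90.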

The configuration for node 75 (backup) is as follows:

! Configuration File for keepalived

global_defs {

router_id bhz75 ##A string identifying the node, usually hostname

}

vrrp_script chk_haproxy {

script "/etc/keepalived/haproxy_check.sh" ##Execute script location

interval 2 ##Detection interval

weight -20 ## If the check script fails (non-zero exit status), the priority is reduced by 20

}

vrrp_instance VI_1 {

state BACKUP ## MASTER on the primary node, BACKUP on the standby node

interface eno16777736 ## Network interface that carries the virtual IP; use the interface that holds the local IP address (eno16777736 here)

virtual_router_id 74 ## Virtual router ID (must be the same on the primary and standby nodes)

mcast_src_ip 192.168.11.75 ## Local IP address of this node

priority 90 ## Priority (1-254); lower than the master's value of 100

nopreempt ## Do not take the VIP back from a running master; the keepalived documentation notes that this requires an initial state of BACKUP

advert_int 1 ## Advertisement interval in seconds; must be the same on both nodes (default 1)

authentication { ## Authentication matching

auth_type PASS

auth_pass bhz

}

track_script {

chk_haproxy

}

virtual_ipaddress {

192.168.11.70 ## Virtual IP (must match the master node); multiple addresses can be specified

}

}
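Several settings must be identical on both nodes (`virtual_router_id`, `advert_int`, the authentication values, and the virtual IP), and a typo in one file is easy to miss. The sketch below compares two configuration files. All three function names are hypothetical, and the parsing assumes the simple one-setting-per-line layout shown above rather than the full keepalived grammar.

```shell
#!/bin/bash
# conf_value (hypothetical): print the first value given for KEY in a config file.
conf_value() {
    local file="$1"
    local key="$2"
    # Match lines whose first field is the key and print the second field
    awk -v key="$key" '$1 == key { print $2; exit }' "$file"
}

# same_setting (hypothetical): succeed only if both files give KEY the same value.
same_setting() {
    [ "$(conf_value "$1" "$3")" = "$(conf_value "$2" "$3")" ]
}

# vip_list (hypothetical): print the addresses inside the virtual_ipaddress block.
vip_list() {
    awk '$1 == "virtual_ipaddress" { inblock = 1; next }
         inblock && $1 == "}" { exit }
         inblock && NF { print $1 }' "$1"
}
```

Given copies of the two files named, say, master.conf and backup.conf (assumed names), `same_setting master.conf backup.conf virtual_router_id` succeeds when the IDs agree, and comparing the output of `vip_list` for each file shows whether both nodes announce the same virtual IP.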

3 Custom script

3.1 Writing the script

Create the script at /etc/keepalived/haproxy_check.sh (the file contents can be identical on nodes 74 and 75):

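#!/bin/bash

COUNT=$(ps -C haproxy --no-header | wc -l)  # number of running haproxy processes

if [ "$COUNT" -eq 0 ]; then

/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg  # haproxy is down: try to restart it

sleep 2

if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then

killall keepalived  # haproxy is still down: stop keepalived so the VIP moves to the other node

fi

fi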
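The script above forces a failover by stopping keepalived, so the `weight -20` setting does not appear to come into play. An alternative, sketched below under that assumption, is to report health through the exit status and let keepalived adjust the priority. Both function names are hypothetical, and `count_processes` relies on the same `ps -C` invocation the original script uses.

```shell
#!/bin/bash
# Hypothetical alternative to haproxy_check.sh: signal health via the exit status.

# status_from_count maps a process count to an exit status: 0 = healthy, 1 = failed.
status_from_count() {
    local count="$1"
    if [ "$count" -gt 0 ]; then
        return 0
    fi
    return 1
}

# count_processes prints how many processes with the given name are running.
count_processes() {
    ps -C "$1" --no-header | wc -l
}

# Intended use as the last line of a check script:
#   status_from_count "$(count_processes haproxy)"
```

Whether this fits depends on the preemption settings: with `nopreempt` configured as in this article, stopping keepalived as the original script does is the more direct way to move the VIP.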

3.2 Permissions

Make the haproxy_check.sh script executable:

[root@localhost ~]# chmod +x /etc/keepalived/haproxy_check.sh

4 Startup and testing

4.1 Starting keepalived

Once HAProxy is running on both nodes, the keepalived service can be started.

If HAProxy is not yet running on nodes 74 and 75, start it first:

[root@localhost ~]# /usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg

Start keepalived on both machines:

[root@localhost ~]# service keepalived start

The same service script also accepts stop, status, and restart.

View the status of the haproxy and keepalived processes:

[root@localhost ~]# ps -ef | grep haproxy

[root@localhost ~]# ps -ef | grep keepalived

4.2 High availability test

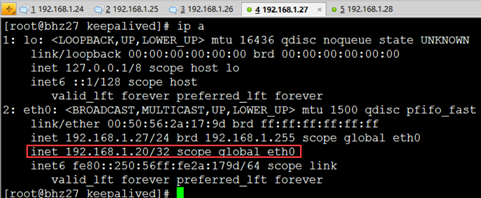

The VIP is currently on node 27.

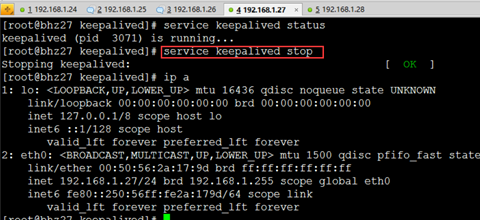

Node 27 downtime test: stop the keepalived service on node 27.

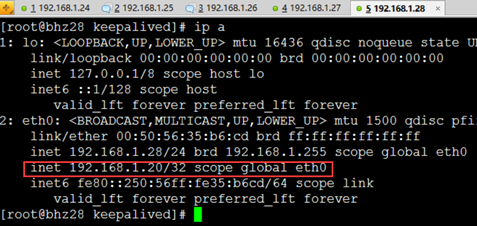

Check the status of node 28: the VIP has drifted to node 28, so HAProxy on node 28 continues to serve external traffic.
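The same check can be scripted instead of read from a screenshot. The sketch below parses the one-line output of `ip -o -4 addr show`; `holds_vip` is a hypothetical helper, and the interface name and address in the usage note are taken from the configuration earlier in this article.

```shell
#!/bin/bash
# holds_vip (hypothetical): read "ip -o -4 addr show" output on stdin and succeed
# if the given address is bound to the given interface.
holds_vip() {
    local iface="$1"
    local vip="$2"
    # In one-line (-o) output, field 2 is the interface and field 4 is address/prefix
    awk -v iface="$iface" -v vip="$vip" '
        $2 == iface { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }'
}
```

Run on each node, `ip -o -4 addr show | holds_vip eno16777736 192.168.11.70 && echo "this node holds the VIP"` should print the message on exactly one node at a time.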

5 Related information

Previous: MQ RabbitMQ high availability cluster (III): HAProxy installation and configuration
