Before reading this article, I suggest reading it first This article , it talks about the principle of nonlinear optimization, that is, the concept of related terms, and then introduces the use method of NLopt, which is based on C language. This film introduces an example of NLopt in C + + language.

Before the example, first introduce the algorithms supported by NLopt and the precautions for using the algorithm

NLopt supported algorithms

NLopt contains many different optimization algorithms.

In the header file, the enumeration type of algorithm name is

enum algorithm {

GN_DIRECT = 0,

GN_DIRECT_L,

GN_DIRECT_L_RAND,

GN_DIRECT_NOSCAL,

GN_DIRECT_L_NOSCAL,

GN_DIRECT_L_RAND_NOSCAL,

GN_ORIG_DIRECT,

GN_ORIG_DIRECT_L,

GD_STOGO,

GD_STOGO_RAND,

LD_LBFGS_NOCEDAL,

LD_LBFGS,

LN_PRAXIS,

LD_VAR1,

LD_VAR2,

LD_TNEWTON,

LD_TNEWTON_RESTART,

LD_TNEWTON_PRECOND,

LD_TNEWTON_PRECOND_RESTART,

GN_CRS2_LM,

GN_MLSL,

GD_MLSL,

GN_MLSL_LDS,

GD_MLSL_LDS,

LD_MMA,

LN_COBYLA,

LN_NEWUOA,

LN_NEWUOA_BOUND,

LN_NELDERMEAD,

LN_SBPLX,

LN_AUGLAG,

LD_AUGLAG,

LN_AUGLAG_EQ,

LD_AUGLAG_EQ,

LN_BOBYQA,

GN_ISRES,

AUGLAG,

AUGLAG_EQ,

G_MLSL,

G_MLSL_LDS,

LD_SLSQP,

LD_CCSAQ,

GN_ESCH,

NUM_ALGORITHMS /*Not an algorithm, just the number of algorithms*/

};

Naming rules:

G/L stands for global or local optimization

N/D represents the optimization without derivative or derivative

For example, LN_COBYLA is the COBYLA algorithm used, and then the algorithm is used for local (L) optimization without derivative (N)

Algorithm selection

So what kind of algorithm do we use? Any choice? NO

For the optimization problem of a certain mathematical model, a better way is to compare several available algorithms and then determine which one to choose, because there is no optimal algorithm, and different optimization problems have different optimal solutions.

When comparing algorithms, it should also be noted that the function value tolerance and parameter tolerance adapted by different algorithms should also be different.

There is a better way to compare the advantages and disadvantages of two algorithms for a mathematical model:

First run an algorithm to get the minimum value, and then set it to converge to that value when running the second algorithm, and then compare the running time

Problems needing attention in selecting global optimization

At present, all global optimization algorithms require boundary constraints for all optimization parameters.

And it must be noted that

Among these algorithms, only ISRES, AGS, and ORIG_DIRECT supports nonlinear inequality constraints

Only ISRES supports nonlinear equality constraints

A good way is to find a best advantage through the global algorithm, and then take this best advantage as the starting point of local optimization to make the results more accurate

The global optimization algorithm takes much more energy to find the best advantage than the local optimization algorithm

Code

Mathematical model:

Find: max (lnx1+lnx2) objective function

Constraints: p1x1+p2x2=5 equality constraints

X1 < = x2 inequality constraint

x1>=0; X2 > = 0 boundary constraint

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

#Include < nlopt. HPP > / / header file of nlopt

Reference header file

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

//Declare a global variable whether to use gradients bool grad_bool=1;

Declare a global variable whether to use gradients

In this way, it is convenient to change this variable when switching between gradient based algorithm and algorithm without derivative

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

double myfunc(const std::vector<double>& x,std::vector<double>&grad,void* f_data )

{

double result;//Declare results

if (grad_bool)

{

grad[0] = 1 / x[0];

grad[1] = 1 / x[1];

}

result = log(x[0])+log(x[1]);//Calculation results

return result;//Return results

}

The objective function is max (lnx1+x2)

When defining the objective function, note that the form of the parameters of the function cannot be changed

Parameter x is the parameter vector to be optimized

Grad returns to the gradient of the optimization parameter at this time, which is the result of partial derivation of X in the mathematical model. If partial derivation is required, lnx1+lnx2 partial derivation of x1, and 1/x1, that is, grad[0] = 1 / x[0]; This place

f_data is the parameter to be passed in

The return value is the value of the objective function under an x vector

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

double myconstraint(const std::vector<double>& x,std::vector<double>&grad,void* f_data)

{

double *p = (double *) f_data;

if (grad_bool)

{

grad[0] = p[0];

grad[1] = p[1];

}

double result;//Declare results

result = x[0] * p[0] + x[1] * p[1] - 5;//Calculation results

return result;//Return results

}

Define equality constraint function

The equation of the mathematical model is: p1x1+p2x2=5

p1 and p2 are passed in as external parameters

The best way is to change the writing method, so that any parameter format can be applied

typedef struct{

double p1,p2;

}my_constraint_data;

Declare the structure to pass in the parameter

double myconstraint(const std::vector<double>& x,std::vector<double>&grad,void* f_data)

{

my_constraint_data *d = (my_constraint_data*)f_data;

//double *p = (double *) f_data;

double p1 = d->p1;

double p2 = d->p2;

if (grad_bool)

{

grad[0] = p1;

grad[1] = p2;

}

double result;//Declare results

result = x[0] * p1 + x[1] * p2 - 5;//Calculation results

return result;//Return results

}

Then when taking parameters, you can

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

double myinconstraint(const std::vector<double>& x,std::vector<double>&grad,void* f_data)

{

if (grad_bool)

{

grad[0] = 1;

grad[1] = -1;

}

double result;//Declare results

result = x[0] - x[1] ;//Calculation results

return result;//Return results

}

Constraint function of inequality

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

int main(int argc, char** argv)

{

//Initialize node

ros::init(argc, argv, "lidar_align");

//Declare two handles

ros::NodeHandle nh;

Then there is the main function. I did it under ros, so I added a node initialization

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

//Parameters in mathematical model

//double p[2]={1,2};

my_constraint_data data = {1,2};

//Parameters to be optimized

std::vector<double> x{1,1};

Declare the parameters of equality constraints in the mathematical model_ constraint_ The data structure is defined above

Declaring the parameter 1,1 to be optimized is equivalent to initializing

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

//Declare an optimizer

nlopt::opt opter;

Declare an optimizer for NLopt

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/*Local optimization algorithm*/

//opter = nlopt::opt(nlopt::LD_SLSQP,2); // Derivative

//opter = nlopt::opt(nlopt::LN_COBYLA,2); // derivative-free

/*Global optimization algorithm*/

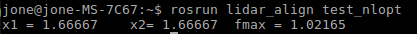

opter = nlopt::opt(nlopt::GN_ISRES,2); //No derivative is possible X1 = 1.66667 x2 = 1.66667 Fmax = 1.02165

Set what optimization algorithm to use for the optimizer, and set the number of factors, that is, the number of x

There are optimization algorithms in nlopt.hpp, from which you can choose what you want to use

But note that not all algorithms can be calculated. For example, in the global optimization algorithm with inequality constraints and equality constraints in this mathematical model, only ISRES can be used

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/*Boundary constraints of optimization parameters*/

std::vector<double> lb {1,1};//Note that the number of parameters should correspond to

std::vector<double> ub {10000,10000};//Note that the number of parameters should correspond to

//Set parameter boundary

opter.set_lower_bounds(lb);//Set parameter lower limit

opter.set_upper_bounds(ub);//Set parameter upper limit

Boundary constraints of optimization parameters

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/*Set optimization accuracy*/

double tol = 1e-8;//tolerance

opter.set_xtol_rel(tol);

opter.set_force_stop(tol);

Set optimization accuracy

When does control optimization stop

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/*Set the objective function, where the objective function is self-defined */

// myfunc is the function name defined by itself. The second parameter is data that can be passed in. If there is no data, it is NULL

opter.set_max_objective(myfunc,NULL);

Set objective function

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/*Set equality constraints and inequality constraints of mathematical model */

//myconstraint is the name of the self-defined function, and the second parameter is the data that can be passed in,

opter.add_equality_constraint(myconstraint,&data,tol);

opter.add_inequality_constraint(myinconstraint, NULL,tol);

Set equality constraints and inequality constraints of mathematical model

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

/* The maximum value of the obtained result is stored in F_ The corresponding vector in Max is stored in x*/

double f_max = -10000;

nlopt::result res = opter.optimize(x,f_max);

Perform optimization calculations

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

std::cout<<"x1 = " <<x[0]<<" x2= "<<x[1]<<" fmax = "<<f_max<<std::endl;

Print calculation results

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Result