1, HOG features

HOG (Histogram of Oriented Gradients) is a feature descriptor built from histograms of local gradient directions.

2, HOG feature extraction process

1) Convert the image to grayscale (the image can be viewed as a three-dimensional surface of x, y and z, where z is the gray level);
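
As a rough illustration of the grayscale step, the sketch below converts an interleaved 8-bit BGR buffer with the commonly used luma weights 0.299 R + 0.587 G + 0.114 B. The helper name `toGray` and the flat buffer layout are assumptions made for this example; in OpenCV the same step is normally a single `cvtColor` call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert an interleaved 8-bit BGR buffer (B, G, R, B, G, R, ...) to grayscale.
// `toGray` is a hypothetical helper name, not an OpenCV function.
std::vector<std::uint8_t> toGray(const std::vector<std::uint8_t>& bgr) {
    assert(bgr.size() % 3 == 0);  // three channels per pixel
    std::vector<std::uint8_t> gray(bgr.size() / 3);
    for (std::size_t i = 0; i < gray.size(); ++i) {
        const double b = bgr[3 * i + 0];
        const double g = bgr[3 * i + 1];
        const double r = bgr[3 * i + 2];
        // Weighted sum, rounded to the nearest integer gray level
        gray[i] = static_cast<std::uint8_t>(0.299 * r + 0.587 * g + 0.114 * b + 0.5);
    }
    return gray;
}
```

Calling `toGray` on a buffer of N pixels returns N gray levels in the same pixel order.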

2) Apply gamma correction to standardize (normalize) the color space of the input image. The purpose is to adjust the image contrast, which reduces the influence of local shadows and illumination changes and suppresses noise;
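
A minimal sketch of gamma correction on 8-bit values, assuming the normalized form out = 255 * (in / 255)^gamma and a 256-entry lookup table. The names `gammaTable` and `applyGamma` are hypothetical helpers for this example. With this form, gamma below 1 brightens dark regions and gamma above 1 darkens them.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Build a 256-entry lookup table for out = 255 * (in / 255)^gamma.
std::vector<std::uint8_t> gammaTable(double gamma) {
    assert(gamma > 0.0);
    std::vector<std::uint8_t> lut(256);
    for (int v = 0; v < 256; ++v) {
        const double normalized = v / 255.0;  // map to [0, 1]
        lut[v] = static_cast<std::uint8_t>(
            std::lround(255.0 * std::pow(normalized, gamma)));
    }
    return lut;
}

// Apply the table in place to a buffer of 8-bit pixel values.
void applyGamma(std::vector<std::uint8_t>& pixels, double gamma) {
    const std::vector<std::uint8_t> lut = gammaTable(gamma);
    for (std::uint8_t& p : pixels) {
        p = lut[p];
    }
}
```

Because the table is computed once, the per-pixel cost is a single array lookup regardless of the exponent.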

#if 1 // image enhancement algorithm: gamma

#include <opencv2/opencv.hpp>
#include <cmath>
#include <cstdio>

using namespace cv;

// Exponent applied to every pixel value
int Gamma = 2;

int main(int argc, char** argv)
{
    Mat src = imread("C:\\Users\\19473\\Desktop\\opencv_images\\88.jpg");
    if (src.empty())
    {
        printf("could not load image....\n");
        return -1;
    }
    imshow("Original image", src);

    // Note: CV_32FC3, because the powers exceed the 8-bit range
    Mat dst(src.size(), CV_32FC3);
    for (int i = 0; i < src.rows; i++)
    {
        for (int j = 0; j < src.cols; j++)
        {
            // Each channel of BGR is calculated
            for (int c = 0; c < 3; c++)
            {
                dst.at<Vec3f>(i, j)[c] = static_cast<float>(pow(static_cast<double>(src.at<Vec3b>(i, j)[c]), Gamma));
            }
        }
    }

    // Normalize back to 0-255, then convert to 8-bit for display
    normalize(dst, dst, 0, 255, NORM_MINMAX);
    convertScaleAbs(dst, dst);
    imshow("Enhanced image", dst);
    waitKey(0);
    return 0;
}

#endif

3) Calculate the gradient of each pixel of the image (both magnitude and direction). This mainly captures contour information and further weakens the interference of illumination;
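
The gradient step can be sketched without OpenCV as follows. This is a minimal example, assuming a row-major float grayscale buffer and the simple [-1, 0, 1] central-difference mask; the `Gradient` struct and `computeGradient` name are hypothetical. Directions are folded into the unsigned range [0, 180) and border pixels are left at zero.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Gradient {
    std::vector<float> magnitude;  // sqrt(gx^2 + gy^2) per pixel
    std::vector<float> angle;      // unsigned direction in degrees, [0, 180)
};

// gray: row-major grayscale image of size rows x cols.
Gradient computeGradient(const std::vector<float>& gray, int rows, int cols) {
    assert(rows > 0 && cols > 0);
    assert(gray.size() == static_cast<std::size_t>(rows) * cols);
    Gradient g;
    g.magnitude.assign(gray.size(), 0.0f);
    g.angle.assign(gray.size(), 0.0f);
    const float kPi = 3.14159265358979f;
    const std::size_t stride = static_cast<std::size_t>(cols);
    for (int r = 1; r < rows - 1; ++r) {
        for (int c = 1; c < cols - 1; ++c) {
            const std::size_t i = static_cast<std::size_t>(r) * stride + c;
            const float gx = gray[i + 1] - gray[i - 1];            // horizontal difference
            const float gy = gray[i + stride] - gray[i - stride];  // vertical difference
            g.magnitude[i] = std::sqrt(gx * gx + gy * gy);
            float deg = std::atan2(gy, gx) * 180.0f / kPi;         // (-180, 180]
            if (deg < 0.0f) deg += 180.0f;                         // fold to unsigned direction
            if (deg >= 180.0f) deg -= 180.0f;
            g.angle[i] = deg;
        }
    }
    return g;
}
```

For a horizontal intensity ramp the direction is 0 degrees, and for a vertical ramp it is 90 degrees.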

// Requires <opencv2/opencv.hpp> and <cmath>.
// dx, dy: difference images in the x and y directions (e.g. from a 3 * 3 mask), both of type CV_32F
Mat non_max_suppression(const Mat& dx, const Mat& dy)
{
    // Edge strength = sqrt(dx^2 + dy^2)
    Mat edge;
    magnitude(dx, dy, edge);
    int rows = dx.rows;
    int cols = dx.cols;

    // Result: edge strength after non-maximum suppression
    Mat edgemag_nonMaxSup = Mat::zeros(dx.size(), dx.type());

    // Skip the border so that all eight neighbours exist
    for (int row = 1; row < rows - 1; row++)
    {
        for (int col = 1; col < cols - 1; col++)
        {
            float x = dx.at<float>(row, col);
            float y = dy.at<float>(row, col);
            // Direction of gradient in degrees, range (-180, 180]
            float angle = static_cast<float>(atan2f(y, x) / CV_PI * 180);
            float absAngle = fabsf(angle);
            // Edge strength at current position
            float mag = edge.at<float>(row, col);

            // Left and right
            if (absAngle < 22.5f || absAngle > 157.5f)
            {
                float left = edge.at<float>(row, col - 1);
                float right = edge.at<float>(row, col + 1);
                // Keep the pixel only if it beats both neighbours
                if (mag > left && mag > right) {
                    edgemag_nonMaxSup.at<float>(row, col) = mag;
                }
            }
            // Upper left and lower right
            else if ((angle >= 22.5f && angle < 67.5f) || (angle >= -157.5f && angle < -112.5f))
            {
                float lefttop = edge.at<float>(row - 1, col - 1);
                float rightbottom = edge.at<float>(row + 1, col + 1);
                if (mag > lefttop && mag > rightbottom) {
                    edgemag_nonMaxSup.at<float>(row, col) = mag;
                }
            }
            // Up and down
            else if (absAngle >= 67.5f && absAngle <= 112.5f)
            {
                float top = edge.at<float>(row - 1, col);
                float down = edge.at<float>(row + 1, col);
                if (mag > top && mag > down) {
                    edgemag_nonMaxSup.at<float>(row, col) = mag;
                }
            }
            // Upper right and lower left: (112.5, 157.5] or (-67.5, -22.5]
            else
            {
                float righttop = edge.at<float>(row - 1, col + 1);
                float leftbottom = edge.at<float>(row + 1, col - 1);
                if (mag > righttop && mag > leftbottom) {
                    edgemag_nonMaxSup.at<float>(row, col) = mag;
                }
            }
        }
    }
    return edgemag_nonMaxSup;
}

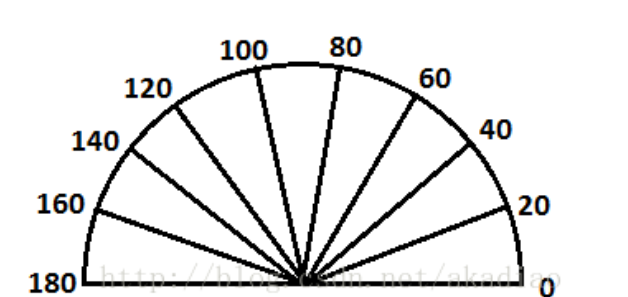

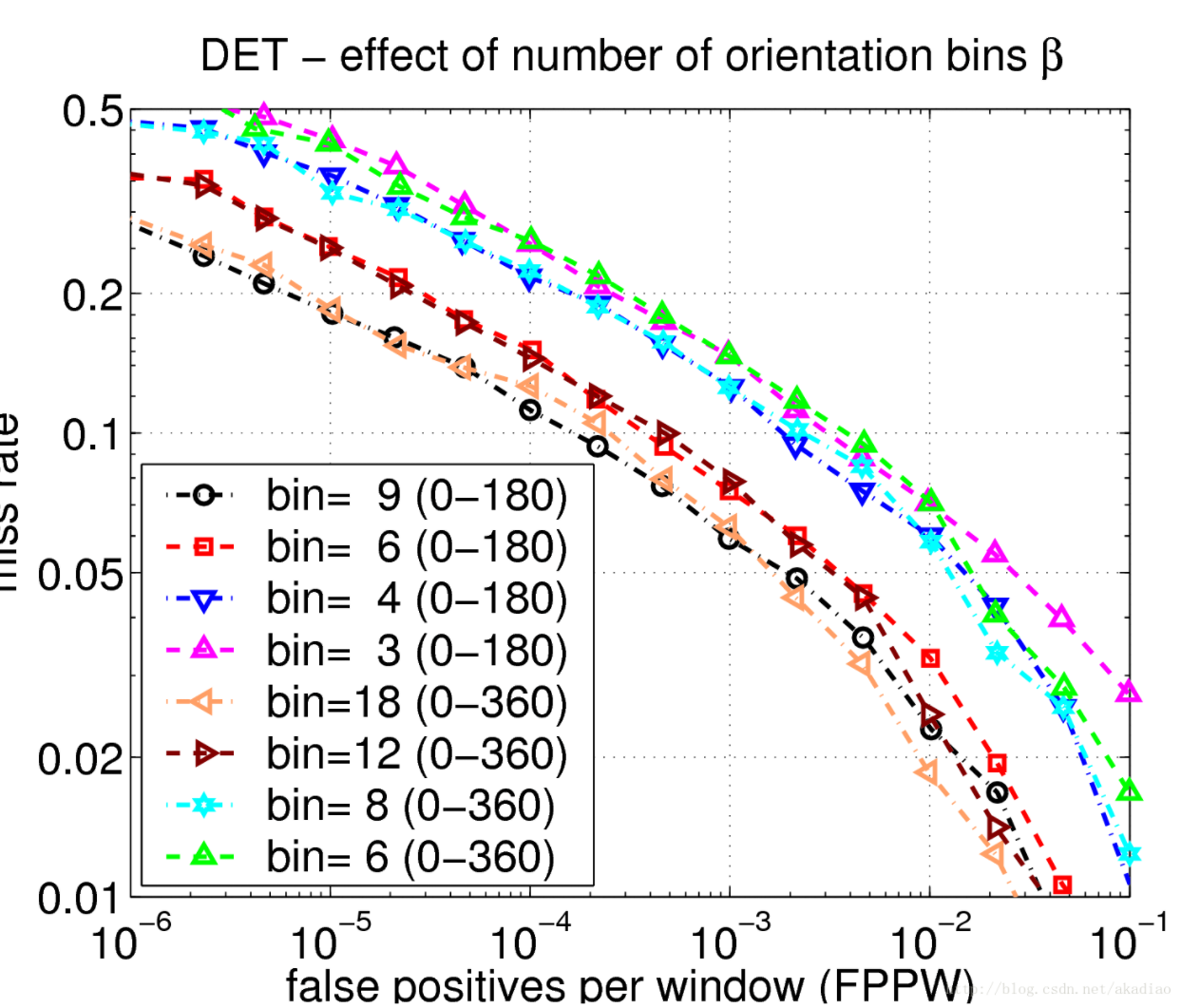

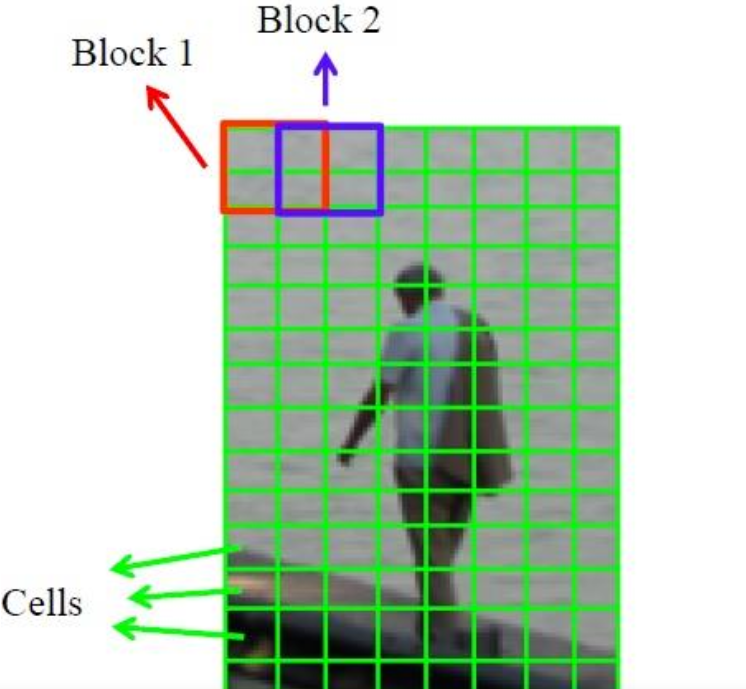

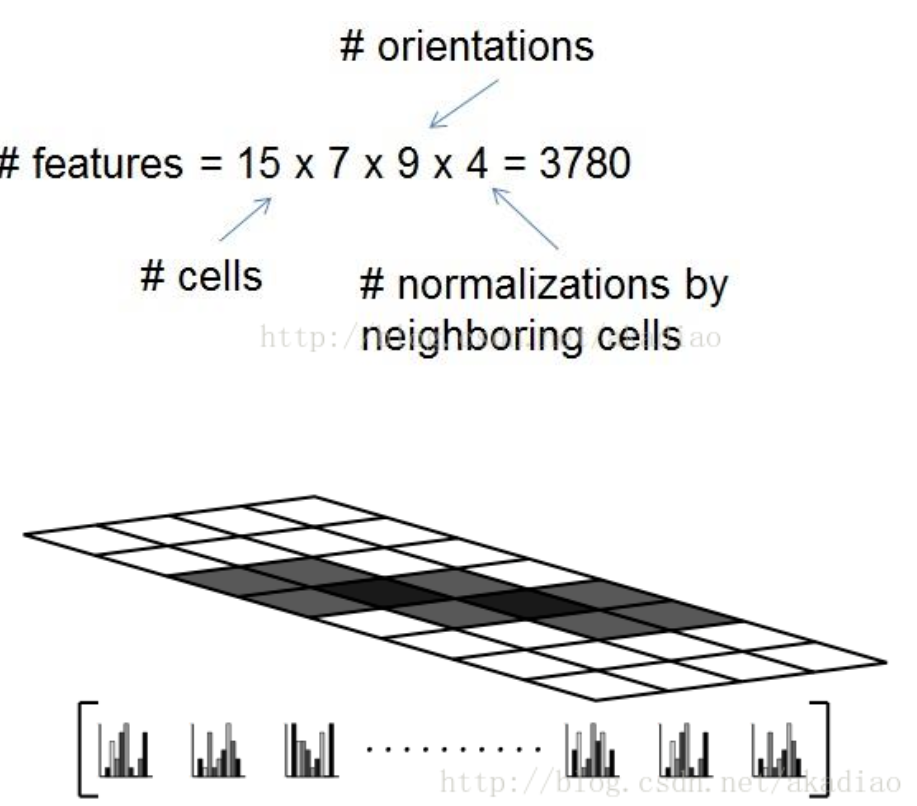

4) Divide the image into small cells (e.g. 8 * 8 pixels per cell) and take the gradient magnitude and direction of every pixel in each cell. Quantize the gradient direction into 9 bins of equal width: 20° per bin for unsigned gradients (0-180°) or 40° per bin for signed gradients (0-360°). Each pixel in a cell then votes for the histogram bin that contains its direction, using its gradient magnitude as the weight of the vote;
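
The voting in one cell can be sketched as below, assuming unsigned directions in [0, 180) and 9 bins of 20 degrees each. `cellHistogram` is a hypothetical helper; it assigns each vote to a single bin, whereas practical implementations typically interpolate votes between neighbouring bins, which this sketch omits.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

constexpr int kBins = 9;                     // 9 bins over [0, 180)
constexpr float kBinWidth = 180.0f / kBins;  // 20 degrees per bin

// Histogram of one cell: each pixel votes for the bin holding its direction,
// weighted by its gradient magnitude.
std::array<float, kBins> cellHistogram(const std::vector<float>& magnitude,
                                       const std::vector<float>& angleDeg) {
    assert(magnitude.size() == angleDeg.size());
    std::array<float, kBins> hist{};  // zero-initialized
    for (std::size_t i = 0; i < magnitude.size(); ++i) {
        assert(angleDeg[i] >= 0.0f && angleDeg[i] < 180.0f);
        int bin = static_cast<int>(angleDeg[i] / kBinWidth);
        if (bin >= kBins) bin = kBins - 1;  // guard against rounding at the upper edge
        hist[bin] += magnitude[i];
    }
    return hist;
}
```

For an 8 * 8 cell, the two input vectors would each hold the 64 magnitudes and directions of that cell.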

5) Accumulate the gradient histogram of each cell (the weighted count in each direction bin) to form the descriptor of that cell;

6) Group every few neighboring cells into a block (e.g. 3 * 3 cells per block). Concatenating the feature descriptors of all cells in a block gives the HOG feature descriptor of that block (the block descriptor), which is then normalized;
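
The text does not say which normalization is applied to a block. As an assumption, the sketch below uses plain L2 normalization, v / sqrt(||v||^2 + eps^2), which is one common choice; variants such as L2-Hys additionally clip large values and renormalize. The function name `l2Normalize` and the default `eps` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Normalize a block descriptor (the concatenated cell histograms) in place.
// eps keeps the division stable when the block is nearly empty.
void l2Normalize(std::vector<float>& block, float eps = 1e-3f) {
    assert(eps > 0.0f);
    float sumSq = 0.0f;
    for (float v : block) {
        sumSq += v * v;
    }
    const float scale = 1.0f / std::sqrt(sumSq + eps * eps);
    for (float& v : block) {
        v *= scale;
    }
}
```

With 2 * 2 cells per block and 9 bins per cell, `block` would hold 36 values.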

7) Concatenate the HOG feature descriptors of all blocks in the image to get the HOG feature descriptor of the image (the target you want to detect). This is the final feature vector used for classification; it is the feature data computed over the detection window.

For a 128 × 64 image, using 8 × 8 pixel cells, blocks of 2 × 2 cells (16 × 16 pixels) and a block stride of 8 pixels, the number of blocks in the detection window is ((128-16)/8+1) × ((64-16)/8+1) = 15 × 7. The dimension of the HOG feature descriptor is therefore 15 × 7 × 4 × 9 = 3780.
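
The same arithmetic can be written as a small function, which makes it easy to check other window or block sizes. `hogDescriptorLength` is a hypothetical helper, and it assumes square cells and blocks whose sizes divide evenly.

```cpp
#include <cassert>

// Number of values in the HOG descriptor of one detection window.
// All sizes are in pixels; cells and blocks are assumed to be square.
int hogDescriptorLength(int winW, int winH, int blockSize, int blockStride,
                        int cellSize, int bins) {
    assert(blockSize % cellSize == 0);
    assert((winW - blockSize) % blockStride == 0);
    assert((winH - blockSize) % blockStride == 0);
    const int blocksX = (winW - blockSize) / blockStride + 1;
    const int blocksY = (winH - blockSize) / blockStride + 1;
    const int cellsPerBlock = (blockSize / cellSize) * (blockSize / cellSize);
    return blocksX * blocksY * cellsPerBlock * bins;
}
```

For the example in the text, `hogDescriptorLength(64, 128, 16, 8, 8, 9)` gives 7 × 15 × 4 × 9 = 3780.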

8) Matching method

Disadvantages of HOG:

Slow to compute, so real-time performance is poor; occlusion is difficult to handle.

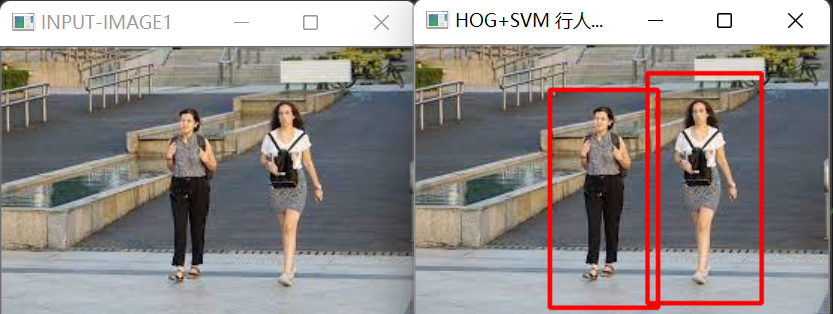

3, Code demonstration

#include <opencv2/opencv.hpp>
#include <cstdio>
#include <vector>

using namespace cv;
using namespace std;

// Assumed file-level declarations (not shown in the original listing);
// the window title strings are placeholders
const char* INPUT_TITLE = "input image";
const char* OUT_TITLE = "HOG detection result";
Mat src, dst, src_gray;

int main(int argc, char** argv)
{
    // Target image
    src = imread("C:\\Users\\19473\\Desktop\\opencv_images\\153.jpg");
    if (src.empty())
    {
        printf("could not load image....\n");
        return -1;
    }
    namedWindow(INPUT_TITLE, WINDOW_AUTOSIZE);
    imshow(INPUT_TITLE, src);

    /*
    // Resize to the 64 x 128 detection window and compute the raw HOG descriptor
    resize(src, dst, Size(64, 128));
    cvtColor(dst, src_gray, COLOR_BGR2GRAY);
    HOGDescriptor detector(Size(64, 128), Size(16, 16), Size(8, 8), Size(8, 8), 9);
    vector<float> descriptors;
    vector<Point> locations;
    detector.compute(src_gray, descriptors, Size(0, 0), Size(0, 0), locations);
    printf("num of HOG: %d\n", (int)descriptors.size());
    */

    // HOG descriptor with the default pre-trained SVM people detector
    HOGDescriptor hog = HOGDescriptor();
    hog.setSVMDetector(HOGDescriptor::getDefaultPeopleDetector());
    vector<Rect> foundLocations;

    // Multiscale detection
    hog.detectMultiScale(src, foundLocations, 0, Size(8, 8), Size(32, 32), 1.05, 2, false);

    // Draw every detected rectangle (nested rectangles are not merged here)
    for (size_t i = 0; i < foundLocations.size(); i++)
    {
        rectangle(src, foundLocations[i], Scalar(0, 0, 255), 2, 8, 0);
    }

    namedWindow(OUT_TITLE, WINDOW_AUTOSIZE);
    imshow(OUT_TITLE, src);
    waitKey(0);
    return 0;
}
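
Multiscale detection often returns rectangles nested inside one another. One possible way to keep only the outermost rectangles is sketched below. `Box` is a hypothetical stand-in for `cv::Rect`, and `contains` and `keepOutermost` are illustrative names, not OpenCV functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Box { int x, y, width, height; };  // hypothetical stand-in for cv::Rect

// True if `inner` lies entirely within `outer`.
bool contains(const Box& outer, const Box& inner) {
    assert(inner.width >= 0 && inner.height >= 0);
    return inner.x >= outer.x && inner.y >= outer.y &&
           inner.x + inner.width <= outer.x + outer.width &&
           inner.y + inner.height <= outer.y + outer.height;
}

// Drop every box that is nested inside another one.
// Of several identical boxes, only the first is kept.
std::vector<Box> keepOutermost(const std::vector<Box>& found) {
    std::vector<Box> kept;
    for (std::size_t i = 0; i < found.size(); ++i) {
        bool nested = false;
        for (std::size_t j = 0; j < found.size() && !nested; ++j) {
            if (j == i) continue;
            const bool inside = contains(found[j], found[i]);
            const bool identical = inside && contains(found[i], found[j]);
            if (inside && (!identical || j < i)) nested = true;
        }
        if (!nested) kept.push_back(found[i]);
    }
    return kept;
}
```

In the demo above, the detections would be copied into `Box` values (or the same test applied to `cv::Rect` directly) before drawing.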