HDR

content

- By default, brightness and color values are clamped to the range 0.0 to 1.0 when they are stored in a framebuffer. This seemingly innocent fact leads us to always pick light and color values within this range so that they fit the scene. That works and can give good results, but what happens when a specific area contains several bright light sources whose combined contribution exceeds 1.0? The brightness or color values of those fragments are clamped to 1.0, and the area turns into a washed-out region in which details are hard to distinguish.

- A monitor can only display colors in the range 0.0 to 1.0, but the lighting equation itself has no such limit. By allowing fragment colors to exceed 1.0, we get a much larger range of color values to work with, which is called HDR (High Dynamic Range). With HDR, bright things can be really bright, dark things can be really dark, and both can keep their details.
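To make the clamping problem concrete, here is a minimal C++ sketch for a single color channel. The helper names (`accumulate`, `storeClamped`, `storeFloat`) are hypothetical, and `storeClamped` assumes a normalized fixed-point color buffer, which is what a default framebuffer usually is. Two fragments with clearly different total light (about 1.3 and 2.6) become indistinguishable once clamped, while a floating-point store keeps them apart.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Sum the per-light contributions that reach one fragment (one channel).
float accumulate(const std::vector<float>& contributions) {
    float sum = 0.0f;
    for (float c : contributions) {
        assert(c >= 0.0f);  // light contributions are never negative
        sum += c;
    }
    return sum;
}

// Hypothetical model of a normalized fixed-point color buffer:
// anything outside [0.0, 1.0] is clamped when the value is written.
float storeClamped(float value) {
    return std::min(std::max(value, 0.0f), 1.0f);
}

// Hypothetical model of a floating-point color buffer (e.g. GL_RGBA16F):
// the value is kept (up to the format's precision), including values above 1.0.
float storeFloat(float value) {
    return value;
}
```

For example, `storeClamped(accumulate({0.6f, 0.7f}))` and `storeClamped(accumulate({0.9f, 0.8f, 0.9f}))` both return 1.0f, whereas the `storeFloat` versions keep roughly 1.3 and 2.6.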

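Basic idea:

- Keep the full range of light values while rendering, then control how they are mapped back to the displayable range through adjustable parameters.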

Tone mapping

Tone mapping is the process of converting floating-point color values into the [0.0, 1.0] range expected by an LDR (low dynamic range) display with as little loss of detail as possible, often combined with a particular stylistic color balance.

The simplest tone mapping algorithm is Reinhard tone mapping. It maps the entire range of HDR color values onto LDR color values, so every HDR value has a corresponding LDR value. The Reinhard operator spreads all brightness values across the LDR range: it maps 1.0 to 0.5 and approaches 1.0 as the input grows, so bright values are compressed rather than clipped. We apply Reinhard tone mapping in the fragment shader from before and, for good measure, also apply gamma correction (together with sRGB textures):

```glsl
#version 330 core
out vec4 color;

in vec2 TexCoords;

uniform sampler2D hdrBuffer;

void main()
{
    const float gamma = 2.2;
    vec3 hdrColor = texture(hdrBuffer, TexCoords).rgb;

    // Reinhard tone mapping
    vec3 mapped = hdrColor / (hdrColor + vec3(1.0));
    // Gamma correction
    mapped = pow(mapped, vec3(1.0 / gamma));

    color = vec4(mapped, 1.0);
}
```

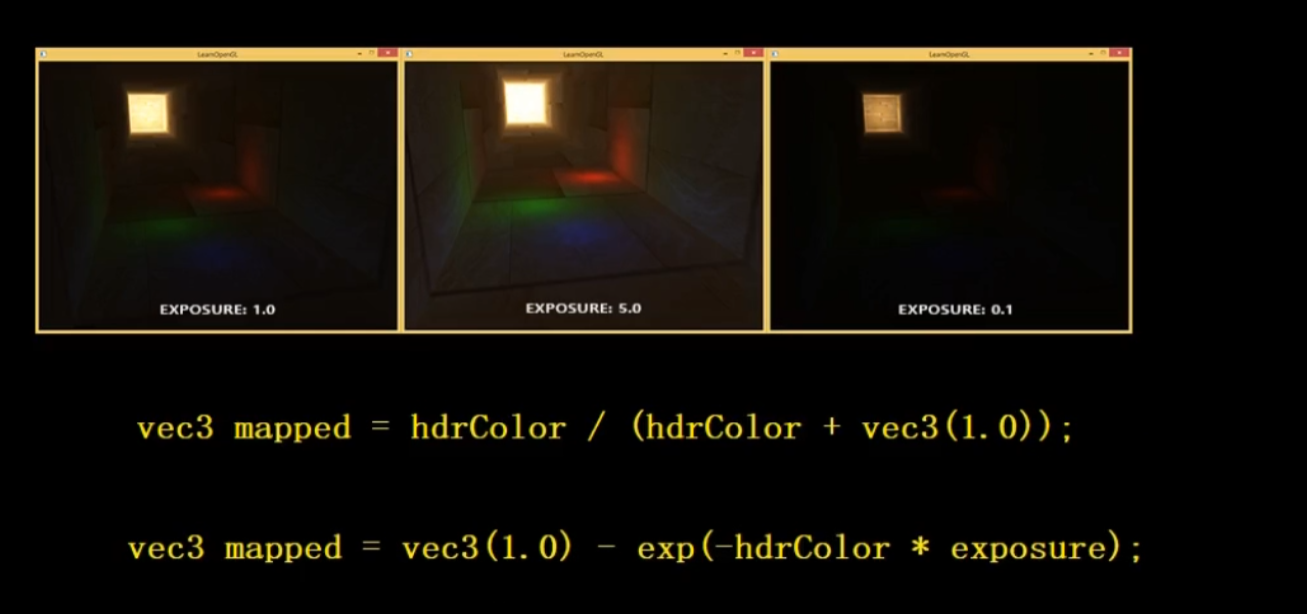
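For experimenting outside a shader, the same per-channel math can be sketched on the CPU. This is a minimal C++ version assuming linear, non-negative HDR input and the same gamma of 2.2; `Color`, `reinhard`, `gammaCorrect` and `tonemapReinhard` are illustrative names, not part of the original code.

```cpp
#include <cassert>
#include <cmath>

struct Color { float r, g, b; };

// Reinhard operator for one channel, as in the shader: c / (c + 1).
float reinhard(float c) {
    assert(c >= 0.0f);  // HDR color values are assumed non-negative
    return c / (c + 1.0f);
}

// Gamma correction with the shader's gamma of 2.2.
float gammaCorrect(float c, float gamma = 2.2f) {
    return std::pow(c, 1.0f / gamma);
}

// Reinhard tone mapping followed by gamma correction, channel by channel.
Color tonemapReinhard(const Color& hdr) {
    return { gammaCorrect(reinhard(hdr.r)),
             gammaCorrect(reinhard(hdr.g)),
             gammaCorrect(reinhard(hdr.b)) };
}
```

Because both steps are monotonic, brighter HDR inputs stay brighter after mapping: `tonemapReinhard({4.0f, 1.0f, 0.25f})` keeps r > g > b, with every channel below 1.0.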

Another interesting use of tone mapping is an exposure parameter. As you may remember from the introduction, HDR images contain details that are visible at different exposure levels. If we have a scene that alternates between day and night, it makes sense to use a low exposure during the day and a high exposure at night, similar to how the human eye adapts. With an exposure parameter, we can keep one set of lighting parameters that works in both day and night conditions and only need to change the exposure. A simple exposure tone mapping algorithm looks like this:

```glsl
#version 330 core
out vec4 color;

in vec2 TexCoords;

uniform sampler2D hdrBuffer;
uniform float exposure;

void main()
{
    const float gamma = 2.2;
    vec3 hdrColor = texture(hdrBuffer, TexCoords).rgb;

    // Exposure tone mapping
    vec3 mapped = vec3(1.0) - exp(-hdrColor * exposure);
    // Gamma correction
    mapped = pow(mapped, vec3(1.0 / gamma));

    color = vec4(mapped, 1.0);
}
```

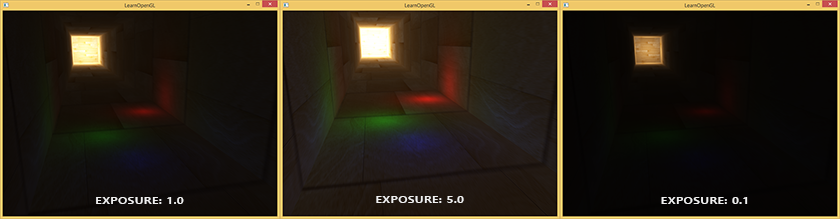
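The exposure operator can be sketched on the CPU in the same way. This minimal C++ version assumes a positive exposure value and non-negative input; `exposureTonemap`, `gammaCorrect` and `displayValue` are illustrative names. Like the shader, it works per channel and never produces a value above 1.0.

```cpp
#include <cassert>
#include <cmath>

// Exposure tone mapping for one channel, as in the shader:
// 1 - exp(-c * exposure). The result lies in [0, 1] for c >= 0.
float exposureTonemap(float c, float exposure) {
    assert(exposure > 0.0f);  // assumed: exposure is a positive scale factor
    return 1.0f - std::exp(-c * exposure);
}

// Gamma correction with the shader's gamma of 2.2.
float gammaCorrect(float c, float gamma = 2.2f) {
    return std::pow(c, 1.0f / gamma);
}

// Per-channel pipeline: exposure mapping, then gamma correction.
float displayValue(float hdrValue, float exposure) {
    return gammaCorrect(exposureTonemap(hdrValue, exposure));
}
```

Raising `exposure` pushes every input closer to 1.0, which is why a larger value brightens the whole image.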

Here we define exposure as a uniform (1.0 is a sensible starting value), which gives us finer control over whether the dark or the bright regions of the HDR image keep their detail. For example, a high exposure value brings out more detail in the dark parts of the tunnel, while a low exposure value removes most of the detail in the dark areas but lets us see more detail in the bright areas. The results at the end of this section show the tunnel at different exposure values.
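The trade-off between low and high exposure can be checked numerically. The C++ sketch below measures how far apart two HDR values land after exposure tone mapping; the helper `mappedContrast` and the sample values (a dark pair, 0.05 and 0.1, and a bright pair, 5 and 10) are made up for illustration.

```cpp
#include <cassert>
#include <cmath>

// Exposure tone mapping for one channel: 1 - exp(-c * exposure).
float exposureTonemap(float c, float exposure) {
    assert(exposure > 0.0f);  // assumed: exposure is a positive scale factor
    return 1.0f - std::exp(-c * exposure);
}

// How far apart two HDR values end up on the [0, 1] output range.
// A larger result means the difference between them stays visible.
float mappedContrast(float a, float b, float exposure) {
    return std::fabs(exposureTonemap(a, exposure) - exposureTonemap(b, exposure));
}
```

With these numbers, an exposure of 5.0 spreads the dark pair about 0.17 apart but maps both bright values to 1.0, while an exposure of 0.1 keeps the bright pair about 0.24 apart and squeezes the dark pair to about 0.005.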

code


Texture loading and framebuffer setup

```cpp
// load textures
// -------------
unsigned int woodTexture = loadTexture("resources/textures/wood.png", true); // note that we're loading the texture as an SRGB texture

// configure floating point framebuffer
// ------------------------------------
unsigned int hdrFBO;
glGenFramebuffers(1, &hdrFBO);
// create floating point color buffer
// (GL_RGBA16F stores 16-bit floats per channel, so values above 1.0 are not clamped)
unsigned int colorBuffer;
glGenTextures(1, &colorBuffer);
glBindTexture(GL_TEXTURE_2D, colorBuffer);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA16F, SCR_WIDTH, SCR_HEIGHT, 0, GL_RGBA, GL_FLOAT, NULL);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
// create depth buffer (renderbuffer)
unsigned int rboDepth;
glGenRenderbuffers(1, &rboDepth);
glBindRenderbuffer(GL_RENDERBUFFER, rboDepth);
glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT, SCR_WIDTH, SCR_HEIGHT);
// attach buffers
glBindFramebuffer(GL_FRAMEBUFFER, hdrFBO);
glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, colorBuffer, 0);
glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_RENDERBUFFER, rboDepth);
if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)
    std::cout << "Framebuffer not complete!" << std::endl;
glBindFramebuffer(GL_FRAMEBUFFER, 0);
```

Light and shader settings

```cpp
// lighting info
// -------------
// positions
std::vector<glm::vec3> lightPositions;
lightPositions.push_back(glm::vec3( 0.0f,  0.0f, 49.5f)); // back light
lightPositions.push_back(glm::vec3(-1.4f, -1.9f,  9.0f));
lightPositions.push_back(glm::vec3( 0.0f, -1.8f,  4.0f));
lightPositions.push_back(glm::vec3( 0.8f, -1.7f,  6.0f));
// colors
std::vector<glm::vec3> lightColors;
lightColors.push_back(glm::vec3(200.0f, 200.0f, 200.0f));
lightColors.push_back(glm::vec3(0.1f, 0.0f, 0.0f));
lightColors.push_back(glm::vec3(0.0f, 0.0f, 0.2f));
lightColors.push_back(glm::vec3(0.0f, 0.1f, 0.0f));

// shader configuration
// --------------------
shader.use();
shader.setInt("diffuseTexture", 0);
hdrShader.use();
hdrShader.setInt("hdrBuffer", 0);
```

Rendering (scene pass, then hdrShader pass)

```cpp
// render
// ------
glClearColor(0.1f, 0.1f, 0.1f, 1.0f);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

// 1. render scene into floating point framebuffer
// -----------------------------------------------
glBindFramebuffer(GL_FRAMEBUFFER, hdrFBO);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
glm::mat4 projection = glm::perspective(glm::radians(camera.Zoom), (GLfloat)SCR_WIDTH / (GLfloat)SCR_HEIGHT, 0.1f, 100.0f);
glm::mat4 view = camera.GetViewMatrix();
shader.use();
shader.setMat4("projection", projection);
shader.setMat4("view", view);
glActiveTexture(GL_TEXTURE0);
glBindTexture(GL_TEXTURE_2D, woodTexture);
// set lighting uniforms
for (unsigned int i = 0; i < lightPositions.size(); i++)
{
    shader.setVec3("lights[" + std::to_string(i) + "].Position", lightPositions[i]);
    shader.setVec3("lights[" + std::to_string(i) + "].Color", lightColors[i]);
}
shader.setVec3("viewPos", camera.Position);
// render the tunnel: a large stretched cube; inverse_normals (handled in the
// vertex shader, not shown) flips its normals so the inner faces are lit
glm::mat4 model = glm::mat4(1.0f);
model = glm::translate(model, glm::vec3(0.0f, 0.0f, 25.0f));
model = glm::scale(model, glm::vec3(2.5f, 2.5f, 27.5f));
shader.setMat4("model", model);
shader.setInt("inverse_normals", true);
renderCube();
glBindFramebuffer(GL_FRAMEBUFFER, 0);

// 2. now render floating point color buffer to 2D quad and tonemap HDR colors to default framebuffer's (clamped) color range
// --------------------------------------------------------------------------------------------------------------------------
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
hdrShader.use();
glActiveTexture(GL_TEXTURE0);
glBindTexture(GL_TEXTURE_2D, colorBuffer);
hdrShader.setInt("hdr", hdr);
hdrShader.setFloat("exposure", exposure);
renderQuad();

std::cout << "hdr: " << (hdr ? "on" : "off") << " | exposure: " << exposure << std::endl;
```

Tunnel lighting fragment shader

```glsl
#version 330 core
out vec4 FragColor;

in VS_OUT {
    vec3 FragPos;
    vec3 Normal;
    vec2 TexCoords;
} fs_in;

struct Light {
    vec3 Position;
    vec3 Color;
};

uniform Light lights[16];
uniform sampler2D diffuseTexture;
uniform vec3 viewPos;

void main()
{
    vec3 color = texture(diffuseTexture, fs_in.TexCoords).rgb;
    vec3 normal = normalize(fs_in.Normal);
    // ambient
    vec3 ambient = 0.0 * color;
    // lighting
    vec3 lighting = vec3(0.0);
    for(int i = 0; i < 16; i++)
    {
        // diffuse
        vec3 lightDir = normalize(lights[i].Position - fs_in.FragPos);
        float diff = max(dot(lightDir, normal), 0.0);
        vec3 diffuse = lights[i].Color * diff * color;
        vec3 result = diffuse;
        // attenuation (use quadratic as we have gamma correction)
        float distance = length(fs_in.FragPos - lights[i].Position);
        result *= 1.0 / (distance * distance);
        lighting += result;
    }
    FragColor = vec4(ambient + lighting, 1.0);
}
```
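To see why the tunnel needs a floating-point buffer, the lighting loop above can be ported to plain C++ and evaluated for one fragment. This is a minimal sketch, assuming a hand-rolled `Vec3` type instead of GLSL/GLM vectors and a white albedo in the example; `shade` and the helpers are illustrative names. With the back light from the setup code (color 200 at z = 49.5), a hypothetical fragment 2 units in front of the light and facing it receives 200 / 2² = 50, far outside [0.0, 1.0].

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Minimal 3-component vector; a stand-in for GLSL vec3 / glm::vec3.
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }  // component-wise, like GLSL
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float length(Vec3 a) { return std::sqrt(dot(a, a)); }
Vec3 normalize(Vec3 a) { return a * (1.0f / length(a)); }

struct Light { Vec3 position; Vec3 color; };

// Port of the fragment shader's loop: diffuse term with inverse-square
// attenuation, summed over all lights (the shader's ambient term is 0).
Vec3 shade(Vec3 fragPos, Vec3 normal, Vec3 albedo, const std::vector<Light>& lights) {
    Vec3 n = normalize(normal);
    Vec3 lighting{0.0f, 0.0f, 0.0f};
    for (const Light& light : lights) {
        Vec3 toLight = light.position - fragPos;
        float distance = length(toLight);
        assert(distance > 0.0f);  // fragment must not coincide with the light
        float diff = std::max(dot(normalize(toLight), n), 0.0f);
        Vec3 diffuse = light.color * albedo * diff;
        lighting = lighting + diffuse * (1.0f / (distance * distance));
    }
    return lighting;  // intentionally not clamped: this is the HDR value
}
```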

hdr shader

```glsl
#version 330 core
out vec4 FragColor;

in vec2 TexCoords;

uniform sampler2D hdrBuffer;
uniform bool hdr;
uniform float exposure;

void main()
{
    const float gamma = 2.2;
    vec3 hdrColor = texture(hdrBuffer, TexCoords).rgb;
    if(hdr)
    {
        // reinhard
        // vec3 result = hdrColor / (hdrColor + vec3(1.0));
        // exposure
        vec3 result = vec3(1.0) - exp(-hdrColor * exposure);
        // also gamma correct while we're at it
        result = pow(result, vec3(1.0 / gamma));
        FragColor = vec4(result, 1.0);
    }
    else
    {
        vec3 result = pow(hdrColor, vec3(1.0 / gamma));
        FragColor = vec4(result, 1.0);
    }
}
```
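The effect of the `hdr` toggle can be reproduced for a single channel on the CPU. This C++ sketch mirrors the shader's two branches and then models the write into the default framebuffer, assuming it stores 8-bit normalized values (common, but not guaranteed); `hdrShaderChannel` and `storeUNorm8` are illustrative names. With HDR off, two different intensities such as 2.0 and 5.0 both end up as 255, which is the kind of detail loss shown in the "HDR effect off" screenshots; with HDR on (exposure 1.0) they stay distinct.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Mirrors the hdr shader for one channel: with hdr on, exposure tone
// mapping then gamma correction; with hdr off, gamma correction only.
float hdrShaderChannel(float hdrValue, bool hdr, float exposure, float gamma = 2.2f) {
    assert(hdrValue >= 0.0f && exposure > 0.0f);
    float result = hdr ? 1.0f - std::exp(-hdrValue * exposure) : hdrValue;
    return std::pow(result, 1.0f / gamma);
}

// Assumed model of an 8-bit normalized default framebuffer:
// clamp to [0, 1], then scale and round to an integer in 0..255.
std::uint8_t storeUNorm8(float value) {
    float clamped = std::min(std::max(value, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
}
```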

effect


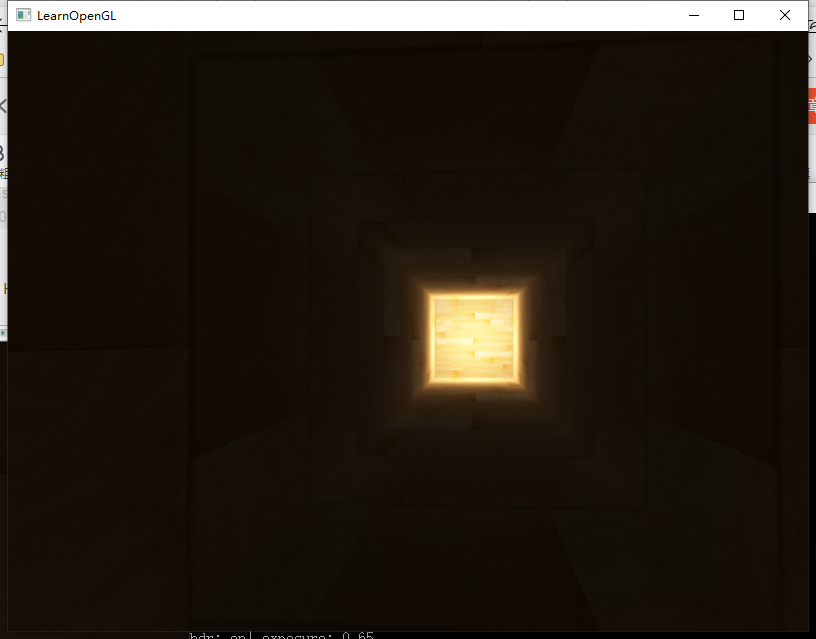

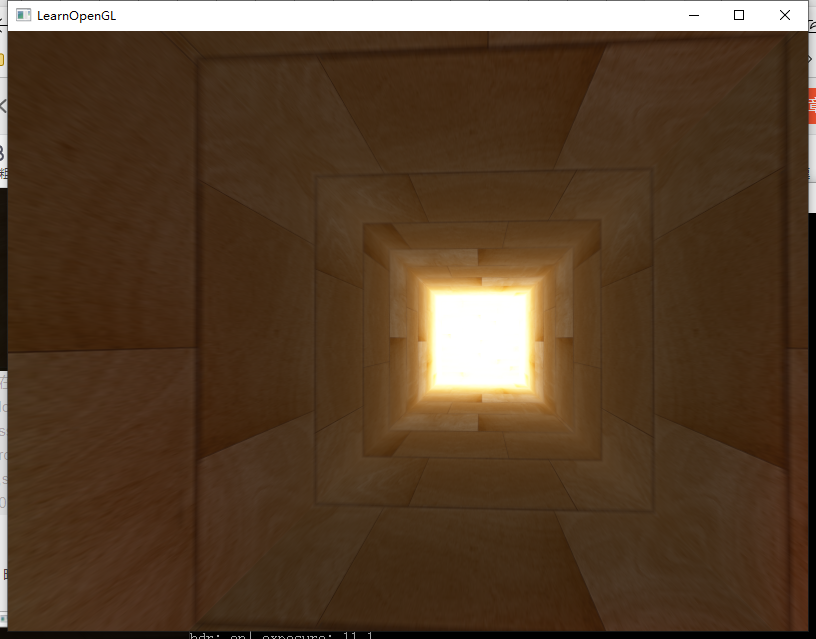

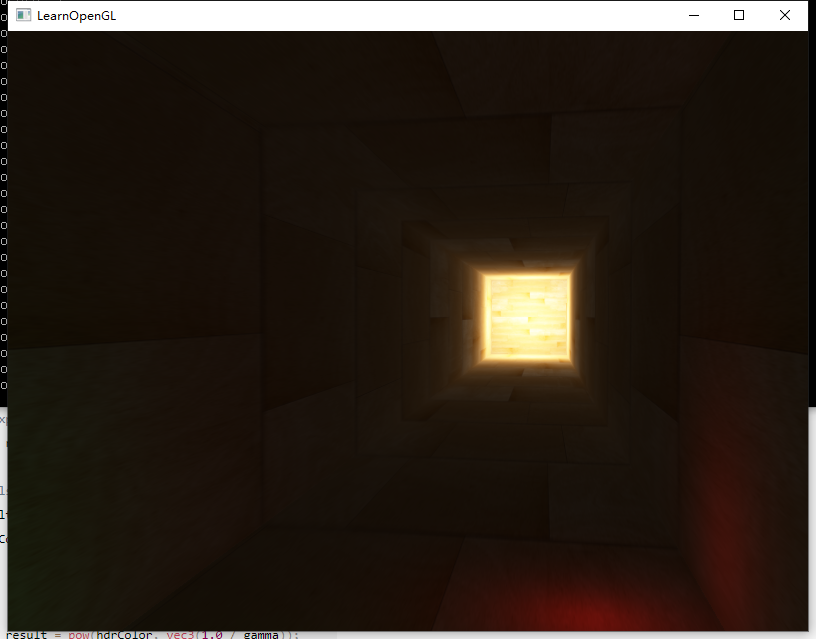

HDR effect on


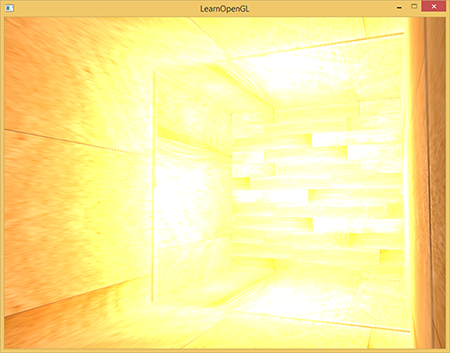

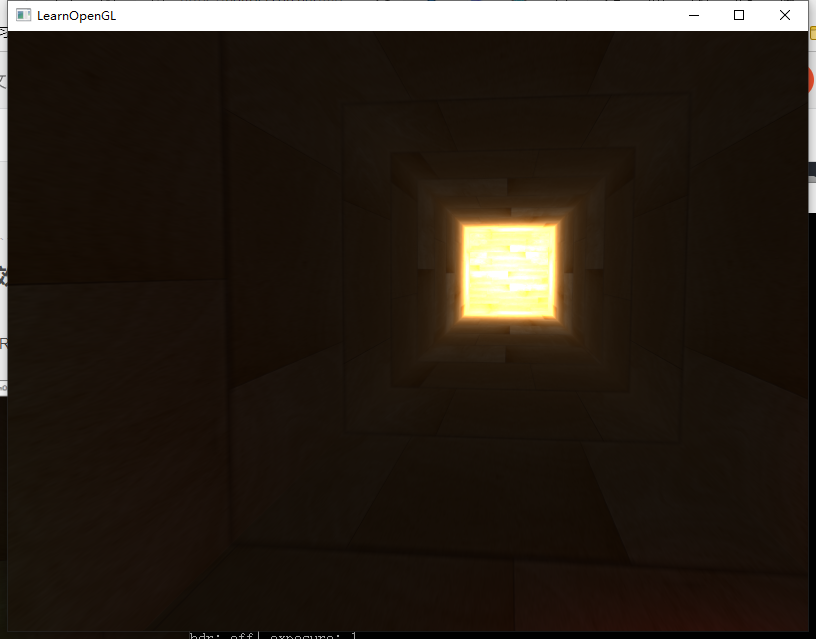

HDR effect off (details lost)


Exposure modification (low and high)