preface

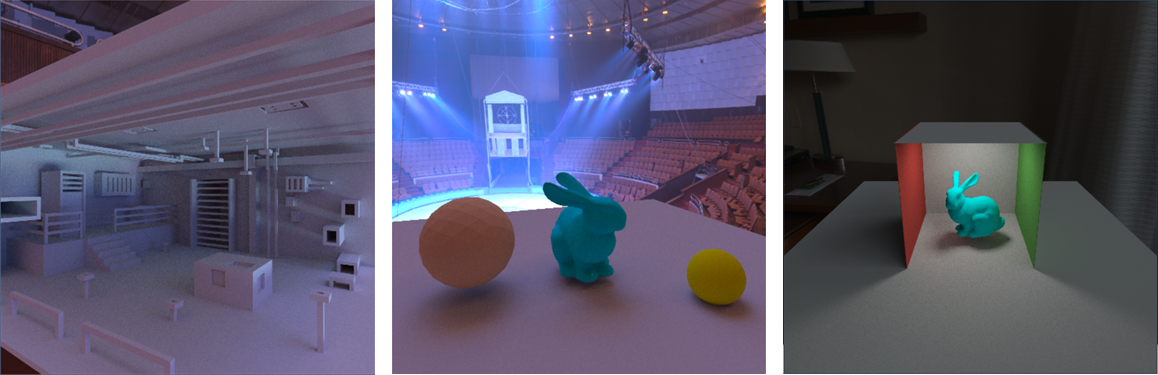

Ray tracing on GPU!

About half a year ago, I was Raytrace rendering practice Simple ray tracing is discussed and implemented in. It is the simplest calculation on cpu with c + + and multithreading, but only supports simple triangles and balls as primitives

Over time, it is no longer novel to manipulate these exquisite spheres. In order to traverse a large number of triangles efficiently, this article Ray tracing accelerated traversal structure This paper studies and discusses the BVH data structure, and gives the simple implementation and visual code of c + +

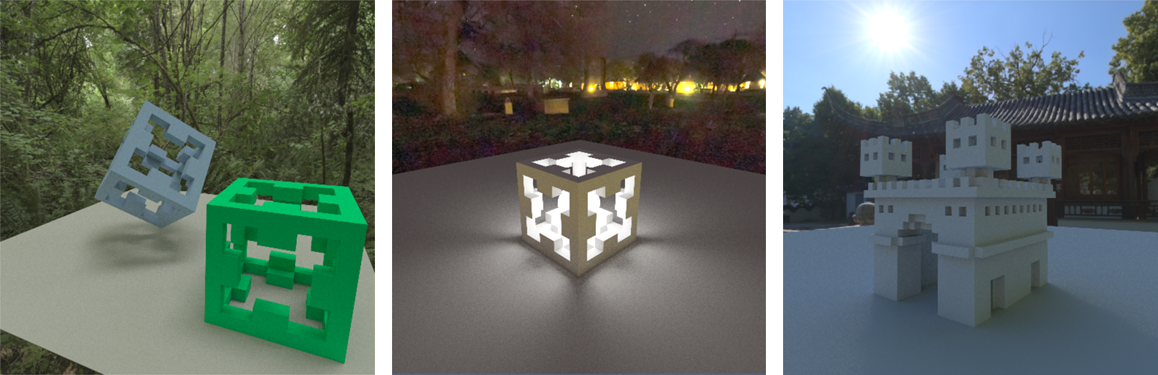

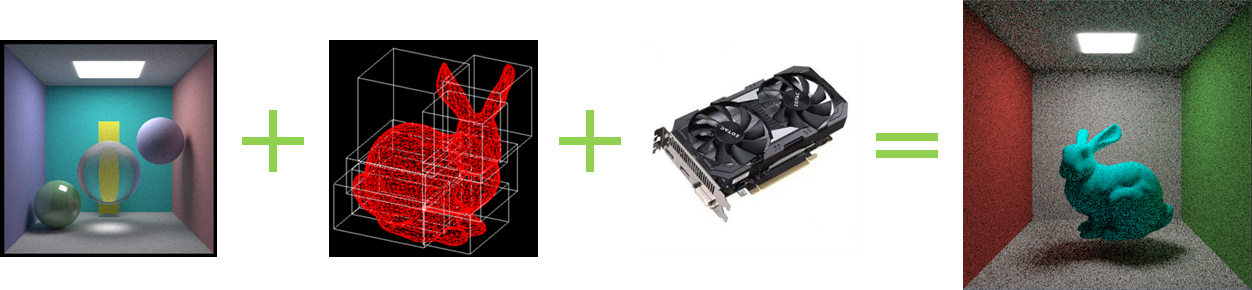

If the first two blogs are wood pickaxes and stone pickaxes in mc, today we'll knock an iron pickaxe and bake the GPU on the fire:

Well, let's talk about the idea: the fragment shader of OpenGL is used to calculate the light chase pixel by pixel, and then the triangle, BVH structure, material and other information are encoded, stuffed into the texture and passed to the shader, and then, um, it's finished

The core part of hhh is very simple, that is, transplant the original c + + code and run it in GLSL. emmm this is not a classic elephant stuffed into the refrigerator. In fact, I spend a lot of time solving all kinds of bug s

Fortunately, the difficult period has passed. Over this mountain... Ok... Stop gossiping and get ready. We will enter the world of waves and particles again!

0. Pre knowledge

Note:

This part does not involve any principle, code explanation

Only by dabbling in the pre knowledge listed in this section can you read this blog smoothly, so that you won't be confused when you see the code hhh

environment

visual studio 2019 uses glm for math library, glew for OpenGL, freeglut for window management, and SOIL2 for image reading. For how to install a third-party library on vs, you can use vcpkg for package management. For specific usage, refer to My previous blog

OpenGL

Because it is based on GLSL, you must have a certain understanding of OpenGL first. Common concepts, such as drawing process, shader, coordinate transformation, etc. Common operations, such as buffer object, passing texture, passing uniform consistent variable and so on. The most classic tutorial about OpenGL is Learn OpenGL Yes. Of course, I also sell my own special column , although it's ferra

GLSL

Then, the clip shader is not simply outputting the vertex color after centroid interpolation. We need to write nearly 114514e1919 lines of code in the fragment shader for light tracing, so we need to understand the syntax specification, function interface, uniform variable, texture, sampling and other operations of GLSL

Simple geometry

Mathematical and geometric principles such as intersection with triangles, axis aligned bounding box AABB, cuboid box intersection, triangle barycenter interpolation, etc. Principle of intersection with triangle, intersection with AABB

Path tracking

The color of a point is not determined by magic, but by integral solution of rendering equation. Each integration recursively solves the light path pixel by pixel until it touches the light source. Principle and code reference: Raytrace rendering practice

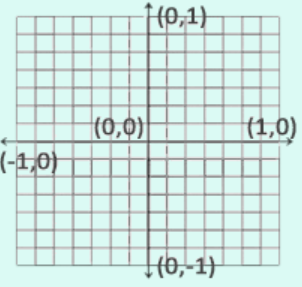

1. Layout canvas

The fragment shader will calculate each pixel once and is parallel on the GPU, which just meets our requirements: parallel and pixel by pixel calculation! We use it to calculate the color of ray tracing!

Using the slice shader to calculate each pixel, first we draw a square covering the whole screen as our canvas:

Without any transformation matrix, the six vertices are directly fixed to the NDC space, that is, the range of [- 1,1]. The code of c + + is very simple:

std::vector<vec3> square = {

vec3(-1, -1, 0), vec3(1, -1, 0), vec3(-1, 1, 0),

vec3(1, 1, 0), vec3(-1, 1, 0), vec3(1, -1, 0)

};

// Generate VAO, VBO, transmit data, draw

...

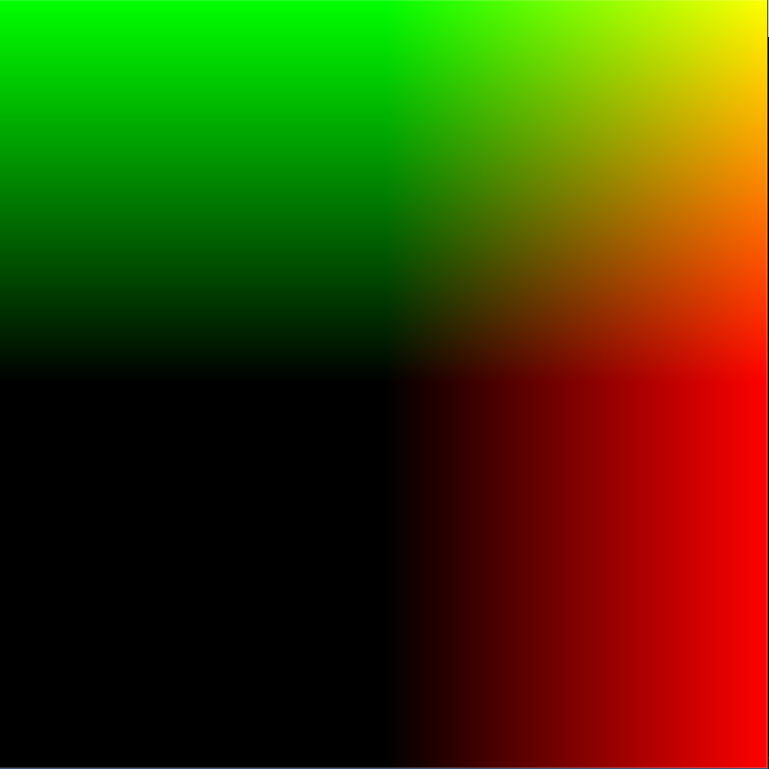

The vertex shader directly passes the NDC coordinates of the vertex as the slice coordinates of the pixel, the range of the pix variable [- 1, 1], and tries to output the pixel coordinates pix as the color in the fragment shader. When you see the following image, it indicates that the canvas is working normally:

2. Transfer triangle data to shader

Note:

This part of the code is quite boring. We are preparing data for the shader

If you are interested in the GLSL implementation of path tracing, you can skip this section

Unlike previous ray tracing programs, the calculation takes place on the GPU side. There are many ways to transfer data to the shader, such as uniform array, uniform buffer, etc., but these methods have a number limit and can not transfer a large amount of data

Recall how we transferred pictures to the GPU. Yes, texture! We read the model data into memory through c + +, then transfer the data to video memory in the form of texture, and then sample the texture in the shader to read out the original data!

Our data is usually transmitted in the form of arrays, such as triangle array, BVH binary tree array, material array and so on. These arrays are one-dimensional to facilitate access and sampling with subscript pointers

However, it is well known that the general image texture is two-dimensional, sampling through a uv coordinate in the range of 0 ~ 1 instead of subscript. Not to mention the trick of folding a one-dimensional array into a two-dimensional texture, the hardware bilinear interpolation filter welded in the graphics card circuit will also destroy the accuracy of the data

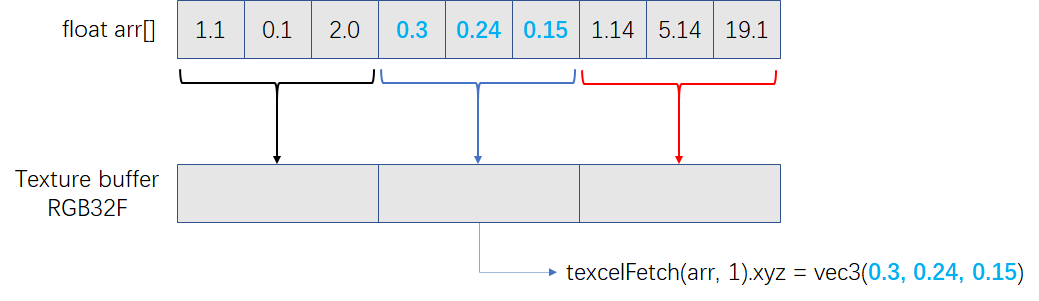

For these reasons, OpenGL provides a more primitive transfer method called Buffer Texture, which uses texture as a general data buffer. It allows us to directly move the binary data in memory to video memory, and then access it through a special sampler, samplerBuffer.

Different from the general sampler2D, samplerBuffer regards the texture content (i.e. the original data in the video memory) as a one-dimensional array, which can directly index the data through subscripts, and does not use any filters, which just meets our needs!

For the usage of Buffer Texture, please refer to OpenGL Wiki , or the following simple example. Suppose we have an array to pass:

int n; // Array size float triangles[];

First, we need to create a buffer object called texture buffer object, or tbo for short, which can be compared to opening up a space in video memory:

GLuint tbo; glGenBuffers(1, &tbo); glBindBuffer(GL_TEXTURE_BUFFER, tbo);

Then insert the data into the buffer:

glBufferData(GL_TEXTURE_BUFFER, n * sizeof(float), &your_data[0], GL_STATIC_DRAW);

Then create a texture. Note that the texture type should be GL_TEXTURE_BUFFER this means that we are not developing an image texture, but a data buffer texture:

GLuint tex; glGenTextures(1, &tex); glBindTexture(GL_TEXTURE_BUFFER, tex);

Then use glTexBuffer to associate the data in tbo to the texture buffer. Here, we use GL_RGB32F format, such an access can take out the data of a vec3 vector. The return value of the sampler has three RGB channels, and each channel is a 32-bit floating-point number:

glTexBuffer(GL_TEXTURE_BUFFER, GL_RGB32F, tbo);

Finally, pass texture 0 to shader:

glActiveTexture(GL_TEXTURE0); glUniform1i(glGetUniformLocation(program, "triangles"), 0);

Then use texelFetch and an integer subscript index on the shader side to query the texture of samplerBuffer type:

uniform samplerBuffer triangles; ... int index = xxx vec3 data = texelFetch(triangles, index).xyz;

Note the data format here_ rgb32f refers to how much data can be read by a subscript (one sampling), that is, the unit of a grid of data. A subscript will index three 32-bit floating-point numbers and return a vec4, but only the rgb component is valid. Their mapping relationship with memory data is as follows:

So far, we have mastered the method of accessing 12 byte (RGB32) memory data on GPU, but our structures are not necessarily aligned with 12 bytes

Note:

You can also use GL_R32F to read a 32-bit floating-point number at a time, which can organize data more flexibly

But obviously reading one vec3 at a time is more efficient

Taking triangles and their materials as an example, their definitions in c + + code on CPU are as follows:

// Object surface material definition

struct Material {

vec3 emissive = vec3(0, 0, 0); // The luminous color when used as a light source

vec3 baseColor = vec3(1, 1, 1);

float subsurface = 0.0;

float metallic = 0.0;

float specular = 0.0;

float specularTint = 0.0;

float roughness = 0.0;

float anisotropic = 0.0;

float sheen = 0.0;

float sheenTint = 0.0;

float clearcoat = 0.0;

float clearcoatGloss = 0.0;

float IOR = 1.0;

float transmission = 0.0;

};

// Triangle definition

struct Triangle {

vec3 p1, p2, p3; // Vertex coordinates

vec3 n1, n2, n3; // Vertex normal

Material material; // texture of material

};

Note:

Sell it here

Forget it, the PBR material parameters defined by Disney are used here. In the next chapter, we will implement Disney principle's BRDF, which is simply standardized physics based rendering

Encoding triangle data, we create the structure Triangle_encoded. Their data types are composed of vec3 and meet the 12 byte alignment:

struct Triangle_encoded {

vec3 p1, p2, p3; // Vertex coordinates

vec3 n1, n2, n3; // Vertex normal

vec3 emissive; // Self luminous parameters

vec3 baseColor; // colour

vec3 param1; // (subsurface, metallic, specular)

vec3 param2; // (specularTint, roughness, anisotropic)

vec3 param3; // (sheen, sheenTint, clearcoat)

vec3 param4; // (clearcoatGloss, IOR, transmission)

};

Then prepare to encode the data. This part of the c + + code is quite boring, just tossing the data around:

// Read triangle

std::vector<Triangle> triangles;

readObj()

int nTriangles = triangles.size();

...

// Coded triangle, material

std::vector<Triangle_encoded> triangles_encoded(nTriangles);

for (int i = 0; i < nTriangles; i++) {

Triangle& t = triangles[i];

Material& m = t.material;

// Vertex Position

triangles_encoded[i].p1 = t.p1;

triangles_encoded[i].p2 = t.p2;

triangles_encoded[i].p3 = t.p3;

// Vertex normal

triangles_encoded[i].n1 = t.n1;

triangles_encoded[i].n2 = t.n2;

triangles_encoded[i].n3 = t.n3;

// texture of material

triangles_encoded[i].emissive = m.emissive;

triangles_encoded[i].baseColor = m.baseColor;

triangles_encoded[i].param1 = vec3(m.subsurface, m.metallic, m.specular);

triangles_encoded[i].param2 = vec3(m.specularTint, m.roughness, m.anisotropic);

triangles_encoded[i].param3 = vec3(m.sheen, m.sheenTint, m.clearcoat);

triangles_encoded[i].param4 = vec3(m.clearcoatGloss, m.IOR, m.transmission);

}

Then use the texture buffer to transfer it to the shader. Here, create a texture buffer object, import the data into tbo, create a texture, and bind tbo to the texture:

GLuint trianglesTextureBuffer; GLuint tbo0; glGenBuffers(1, &tbo0); glBindBuffer(GL_TEXTURE_BUFFER, tbo0); glBufferData(GL_TEXTURE_BUFFER, triangles_encoded.size() * sizeof(Triangle_encoded), &triangles_encoded[0], GL_STATIC_DRAW); glGenTextures(1, &trianglesTextureBuffer); glBindTexture(GL_TEXTURE_BUFFER, trianglesTextureBuffer); glTexBuffer(GL_TEXTURE_BUFFER, GL_RGB32F, tbo0);

Then decode the data through textelfetch in the shader and restore it to the original structure. This part of the code is also quite boring, which is also the inverted data. Just note that the texture is accessed in vec3 units, and the encoded triangle contains 12 vec3 vectors, so the offset corresponding to each subscript should be calculated through the subscript. The GLSL code is as follows:

#define SIZE_TRIANGLE 12

uniform samplerBuffer triangles;

...

// Gets the triangle with the i-th subscript

Triangle getTriangle(int i) {

int offset = i * SIZE_TRIANGLE;

Triangle t;

// Vertex coordinates

t.p1 = texelFetch(triangles, offset + 0).xyz;

t.p2 = texelFetch(triangles, offset + 1).xyz;

t.p3 = texelFetch(triangles, offset + 2).xyz;

// normal

t.n1 = texelFetch(triangles, offset + 3).xyz;

t.n2 = texelFetch(triangles, offset + 4).xyz;

t.n3 = texelFetch(triangles, offset + 5).xyz;

return t;

}

// Gets the material of the triangle with subscript i

Material getMaterial(int i) {

Material m;

int offset = i * SIZE_TRIANGLE;

vec3 param1 = texelFetch(triangles, offset + 8).xyz;

vec3 param2 = texelFetch(triangles, offset + 9).xyz;

vec3 param3 = texelFetch(triangles, offset + 10).xyz;

vec3 param4 = texelFetch(triangles, offset + 11).xyz;

m.emissive = texelFetch(triangles, offset + 6).xyz;

m.baseColor = texelFetch(triangles, offset + 7).xyz;

m.subsurface = param1.x;

m.metallic = param1.y;

m.specular = param1.z;

m.specularTint = param2.x;

m.roughness = param2.y;

m.anisotropic = param2.z;

m.sheen = param3.x;

m.sheenTint = param3.y;

m.clearcoat = param3.z;

m.clearcoatGloss = param4.x;

m.IOR = param4.y;

m.transmission = param4.z;

return m;

}

Then try to transfer a triangle and its material, and output its color, vertex information, or any attributes in the shader to verify. If the color (or other data, such as coordinates) is successfully read, the data transmission is correct

3. Triangle intersection in shader

The first is the definition of light:

// light

struct Ray {

vec3 startPoint;

vec3 direction;

};

Then we also need an intersection result, which contains some necessary information, such as intersection position, distance and surface material:

// Ray intersection result

struct HitResult {

bool isHit; // Hit

bool isInside; // Whether to hit from inside

float distance; // Distance from intersection

vec3 hitPoint; // Light midpoint

vec3 normal; // Hit point normal

vec3 viewDir; // The direction of the light hitting the point

Material material; // Surface material of hit point

};

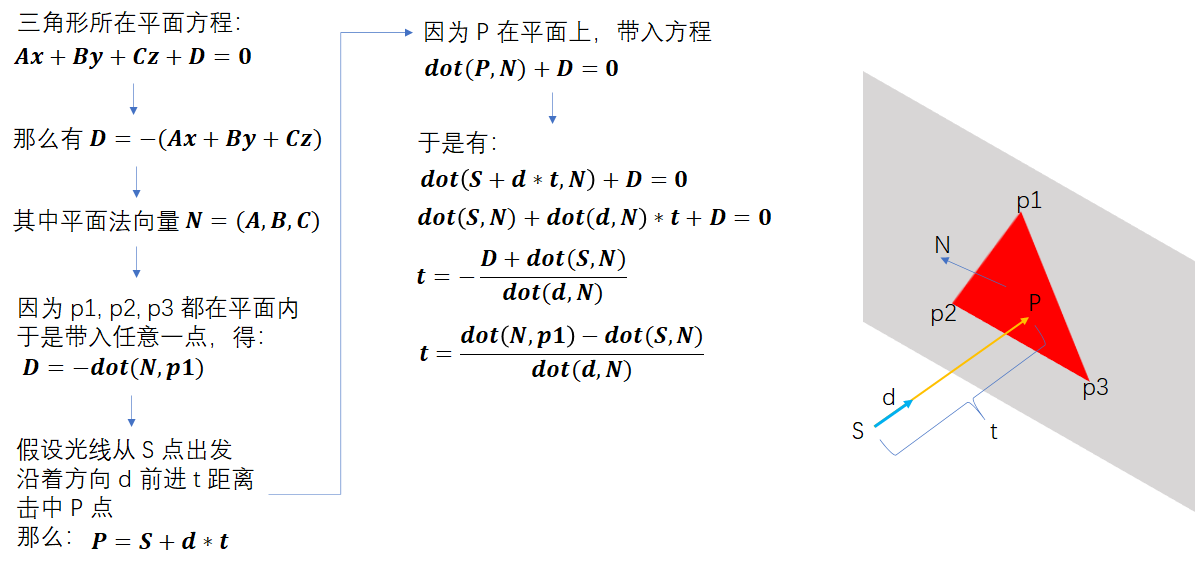

It is not difficult to find the intersection of triangles. First, solve the distance t between the light and the plane where the triangle is located. With the distance, find the intersection P. the idea is as follows:

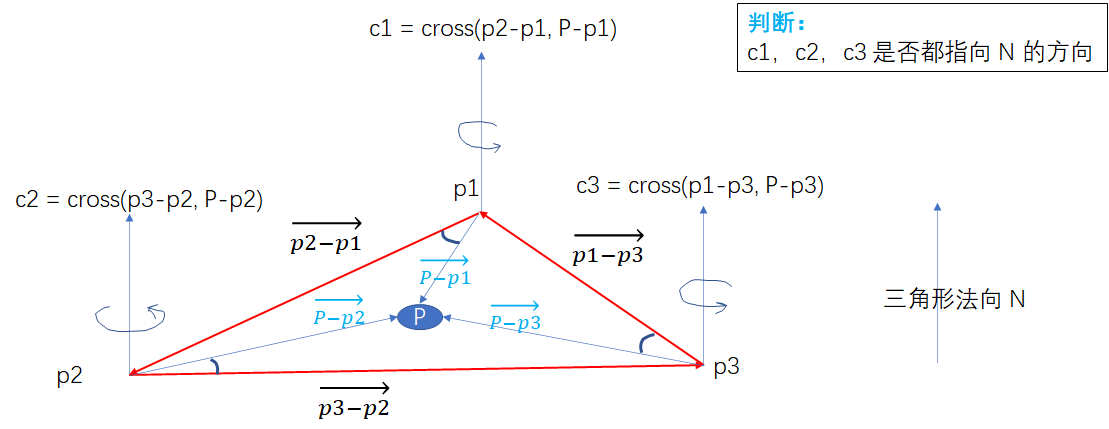

After finding the intersection, judge whether the intersection is in the triangle. Here, it is judged by whether the direction of cross multiplication and normal phase are the same. If all three cross products are in the same direction as N, P is in the triangle:

The code is also very simple. The only thing worth noting is that if the line of sight direction d is the same as the normal direction N of the triangle, flip N to ensure that we can get the correct normal vector no matter whether we hit the triangle from the front or back. The code is as follows:

#define INF 114514.0

// Intersection of light and triangle

HitResult hitTriangle(Triangle triangle, Ray ray) {

HitResult res;

res.distance = INF;

res.isHit = false;

res.isInside = false;

vec3 p1 = triangle.p1;

vec3 p2 = triangle.p2;

vec3 p3 = triangle.p3;

vec3 S = ray.startPoint; // Ray starting point

vec3 d = ray.direction; // Ray direction

vec3 N = normalize(cross(p2-p1, p3-p1)); // Normal vector

// Hit from behind the triangle (inside the model)

if (dot(N, d) > 0.0f) {

N = -N;

res.isInside = true;

}

// If the line of sight is parallel to the triangle

if (abs(dot(N, d)) < 0.00001f) return res;

// distance

float t = (dot(N, p1) - dot(S, N)) / dot(d, N);

if (t < 0.0005f) return res; // If the triangle is on the back of the light

// Intersection calculation

vec3 P = S + d * t;

// Determine whether the intersection is in the triangle

vec3 c1 = cross(p2 - p1, P - p1);

vec3 c2 = cross(p3 - p2, P - p2);

vec3 c3 = cross(p1 - p3, P - p3);

bool r1 = (dot(c1, N) > 0 && dot(c2, N) > 0 && dot(c3, N) > 0);

bool r2 = (dot(c1, N) < 0 && dot(c2, N) < 0 && dot(c3, N) < 0);

// Hit, encapsulate the returned result

if (r1 || r2) {

res.isHit = true;

res.hitPoint = P;

res.distance = t;

res.normal = N;

res.viewDir = d;

// Interpolate vertex normals according to the intersection position

float alpha = (-(P.x-p2.x)*(p3.y-p2.y) + (P.y-p2.y)*(p3.x-p2.x)) / (-(p1.x-p2.x-0.00005)*(p3.y-p2.y+0.00005) + (p1.y-p2.y+0.00005)*(p3.x-p2.x+0.00005));

float beta = (-(P.x-p3.x)*(p1.y-p3.y) + (P.y-p3.y)*(p1.x-p3.x)) / (-(p2.x-p3.x-0.00005)*(p1.y-p3.y+0.00005) + (p2.y-p3.y+0.00005)*(p1.x-p3.x+0.00005));

float gama = 1.0 - alpha - beta;

vec3 Nsmooth = alpha * triangle.n1 + beta * triangle.n2 + gama * triangle.n3;

res.normal = (res.isInside) ? (-Nsmooth) : (Nsmooth);

}

return res;

}

Then we write a function to traverse the triangle array for intersection and return the nearest intersection:

#define INF 114514.0

// Brutally traverse the array subscript range [l, r] to find the nearest intersection

HitResult hitArray(Ray ray, int l, int r) {

HitResult res;

res.isHit = false;

res.distance = INF;

for(int i=l; i<=r; i++) {

Triangle triangle = getTriangle(i);

HitResult r = hitTriangle(triangle, ray);

if(r.isHit && r.distance<res.distance) {

res = r;

res.material = getMaterial(i);

}

}

return res;

}

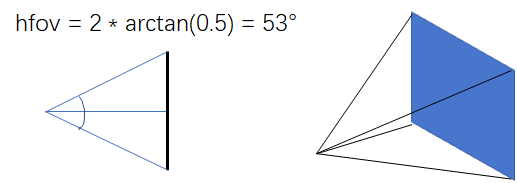

Then we configure the camera. The camera is located in vec3(0, 0, 4), looks at the negative direction of the z axis, and projects rays according to the NDC coordinates of canvas pixels. Here, the length and width of the projection plane are 2.0, while zNear is 2.0, which ensures a field angle of view of about 50 °

The code is as follows:

Ray ray; ray.startPoint = vec3(0, 0, 4); vec3 dir = vec3(pix.xy, 2) - ray.startPoint; ray.direction = normalize(dir);

Then use hitArray written just now to traverse the array, find the intersection and return the color of the surface:

uniform int nTriangles; ... HitResult res = hitArray(ray, 0, nTriangles-1); if(res.isHit) fragColor = vec4(res.material.color, 1);

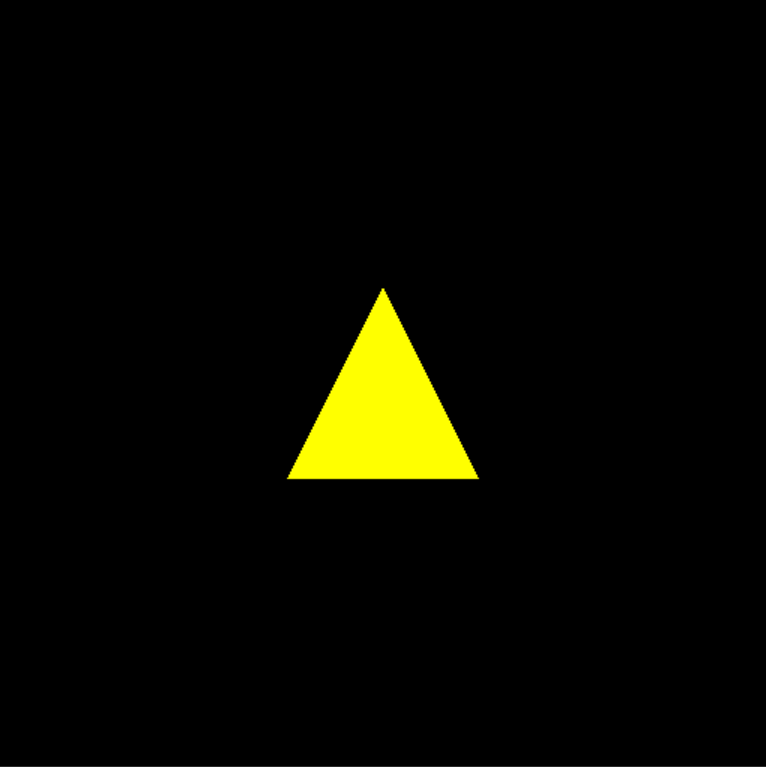

The triangle... Has been seen enough times

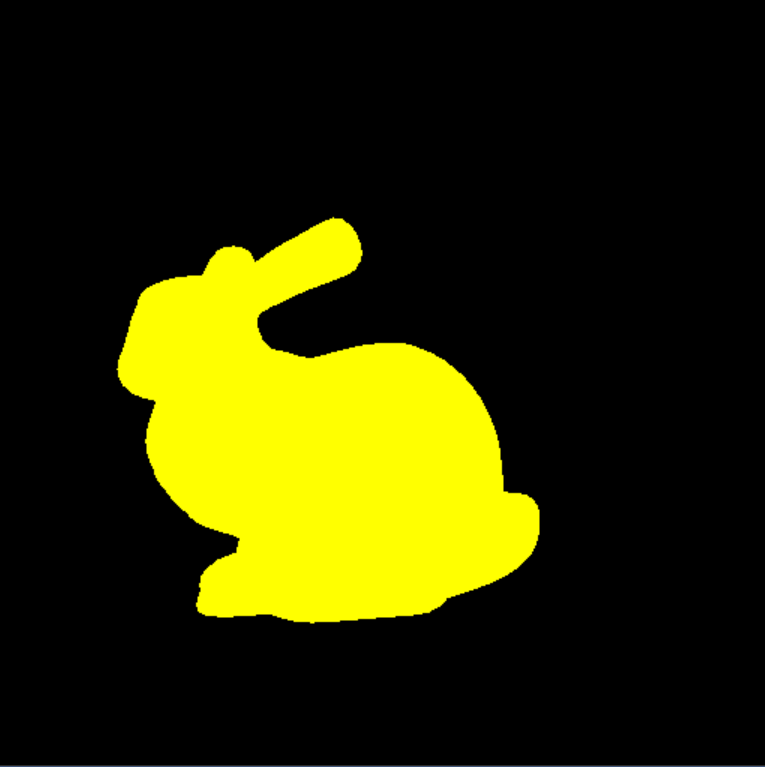

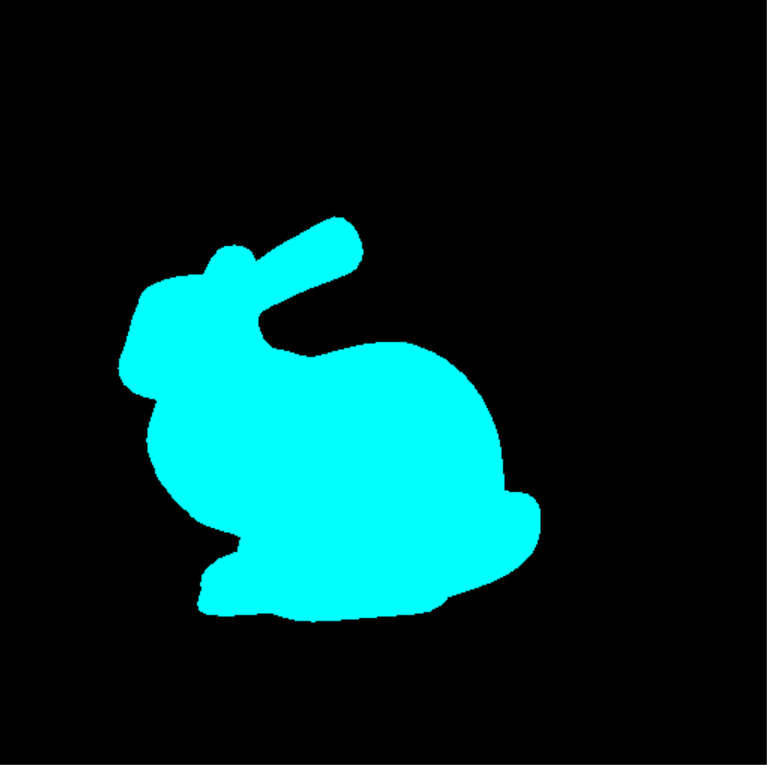

Read an obj model of Stanford Bunny and try again:

Ohhh although it traverses more than 4000 triangles violently, we can maintain 4 ~ 5 FPS because of the powerful parallel ability of GPU

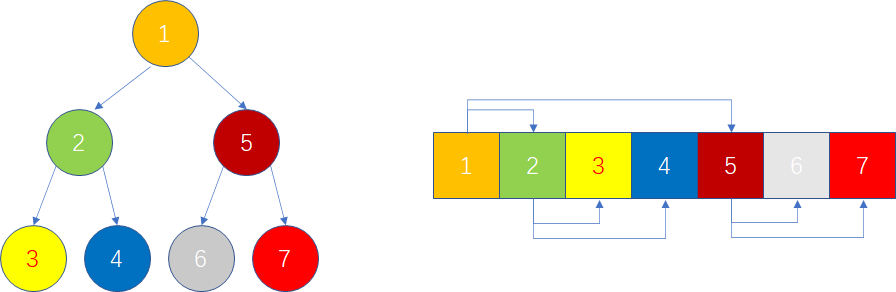

4. Linearized BVH tree

We successfully traverse triangles, but we need to traverse more efficiently. BVH tree is a good choice. However, there is no concept of pointer in GLSL. We need to change the tree structure using pointer to a linear binary tree using array subscript as pointer. I believe that children's shoes who have learned data structure are not unfamiliar with this

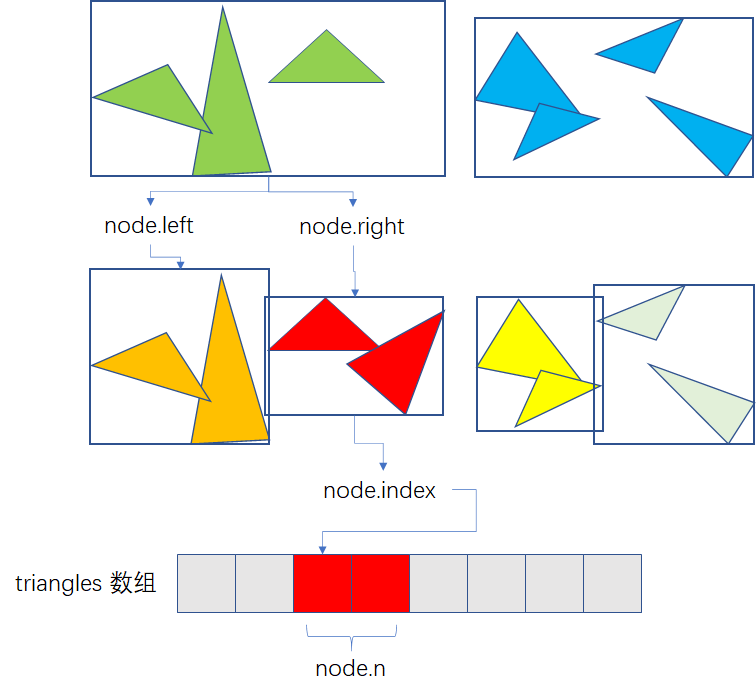

This is the original BVH node structure. The content is divided into three parts: left and right children, AABB collision box and leaf node information, in which AA is the minimum point and BB is the maximum point. Because we can't use pointers, we can only use array subscripts. We change the structure to:

// BVH tree node

struct BVHNode {

int left, right; // Left and right subtree index

int n, index; // Leaf node information

vec3 AA, BB; // Collision box

};

A small change is also introduced here: a leaf node can store multiple triangles, n represents the number of triangles of the leaf node, index represents the first triangle of the node, and the index in the triangles array:

Linearizing a binary tree is also very simple. You only need to change new Node() to push every time you create a node_ Back () inserts the array, and the index of the subscript is the same as usual.

Here, we allow a leaf to contain n triangles and directly push each node created_ Back, and then indexed by subscript. Use the simplest dichotomy to create a triangle array half at a time! The code for creating BVH is as follows:

Note:

Only the common binary tree code is given here

The code for the SAH optimized version can be found in the "complete code" section below

// Build BVH

int buildBVH(std::vector<Triangle>& triangles, std::vector<BVHNode>& nodes, int l, int r, int n) {

if (l > r) return 0;

// Note:

// It cannot be operated by pointer, reference, etc. it must be operated by nodes[id]

// Because STD:: vector < > will be copied to larger memory during capacity expansion, the address will change

// The pointer and reference point to the original memory, so an error will occur

nodes.push_back(BVHNode());

int id = nodes.size() - 1; // Note: save the index first

nodes[id] Property initialization for ...

// Calculate AABB

for (int i = l; i <= r; i++) {

... // Traversal triangle calculation AABB

}

// No more than n triangles return leaf nodes

if ((r - l + 1) <= n) {

nodes[id].n = r - l + 1;

nodes[id].index = l;

return id;

}

// Otherwise, recursive tree building

// Divide the array by x,y,z

std::sort(...)

// recursion

int mid = (l + r) / 2;

int left = buildBVH(triangles, nodes, l, mid, n);

int right = buildBVH(triangles, nodes, mid + 1, r, n);

nodes[id].left = left;

nodes[id].right = right;

return id;

}

So far, we have a linearized binary tree. Next, we are ready to send it to the GPU

5. BVH data transfer to shader

Note:

This part of the code is also quite boring. Skip it

Similar to triangle data, encode and then transmit:

struct BVHNode_encoded {

vec3 childs; // (left, right, reserved)

vec3 leafInfo; // (n, index, reserved)

vec3 AA, BB;

};

The coding process is too boring to copy the code. Here is the code for decoding BVHNode in the shader:

#define SIZE_BVHNODE 4

uniform samplerBuffer nodes;

// Gets the BVHNode object of the ith subscript

BVHNode getBVHNode(int i) {

BVHNode node;

// Left and right subtree

int offset = i * SIZE_BVHNODE;

ivec3 childs = ivec3(texelFetch(nodes, offset + 0).xyz);

ivec3 leafInfo = ivec3(texelFetch(nodes, offset + 1).xyz);

node.left = int(childs.x);

node.right = int(childs.y);

node.n = int(leafInfo.x);

node.index = int(leafInfo.y);

// Bounding box

node.AA = texelFetch(nodes, offset + 2).xyz;

node.BB = texelFetch(nodes, offset + 3).xyz;

return node;

}

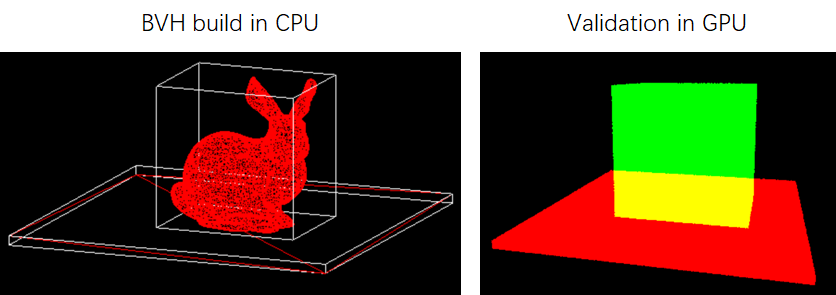

Read a rabbit, and then traverse all nodes. For each leaf node, traverse all triangles it contains to see whether the rabbit is complete to verify whether the BVH transmission is correct:

Note:

This step is still a violent traversal. There is no binary traversal bvh, just to verify whether the data is correct

throw light

...

for(int i=0; i<nNodes; i++) {

BVHNode node = getBVHNode(i);

if(node.n>0) {

int L = node.index;

int R = node.index + node.n - 1;

HitResult res = hitArray(ray, L, R);

if(res.isHit) fragColor = vec4(res.material.color, 1);

}

}

If the same result as hitArray is returned, it means that we have no problem with BVH data transmission:

In addition, before traversal, it is recommended to output the left pointer of node 1. No accident, it should be 2 and put node Left / 3 is output as pixel color. If gray indicates that it is correct, be sure to ensure that the integer subscript is transmitted correctly!

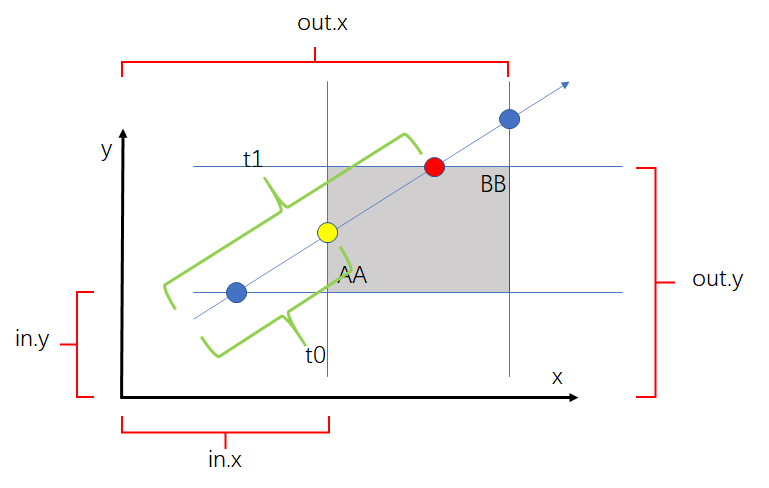

6. Cross with AABB box

For axis aligned bounding boxes, there are three groups of penetration points and penetration points when the light enters and exits xoy, xoz and yoz planes. If a group of penetration points and penetration points are found, so that the distance between the starting point of the light and the penetration point is less than the distance between the starting point of the light and the penetration point, that is, t0 < T1, it indicates a hit

Let's take the minimum distance in out as t1 and the maximum distance in in as T0, and then see if t1 > t0. If the equation is satisfied, it indicates that the hit:

The corresponding GLSL codes are as follows:

// Intersection with aabb box. If there is no intersection, return - 1

float hitAABB(Ray r, vec3 AA, vec3 BB) {

vec3 invdir = 1.0 / r.direction;

vec3 f = (BB - r.startPoint) * invdir;

vec3 n = (AA - r.startPoint) * invdir;

vec3 tmax = max(f, n);

vec3 tmin = min(f, n);

float t1 = min(tmax.x, min(tmax.y, tmax.z));

float t0 = max(tmin.x, max(tmin.y, tmin.z));

return (t1 >= t0) ? ((t0 > 0.0) ? (t0) : (t1)) : (-1);

}

Note:

n is the near intersection, that is, in

f is the far intersection far, which is out

Because in and out are keywords in GLSL, they cannot be used as variable names

Next, test it. For the root node (node 1) of BVH, we intersect with its left and right subtrees respectively. If the left subtree hits, it returns red, the right subtree hits, it returns green, and both hit, it returns yellow:

... BVHNode node = getBVHNode(1); BVHNode left = getBVHNode(node.left); BVHNode right = getBVHNode(node.right); float r1 = hitAABB(ray, left.AA, left.BB); float r2 = hitAABB(ray, right.AA, right.BB); vec3 color; if(r1>0) color = vec3(1, 0, 0); if(r2>0) color = vec3(0, 1, 0); if(r1>0 && r2>0) color = vec3(1, 1, 0); ...

The results are as follows:

The above results show that there are no problems in the construction, transmission and intersection of BVH

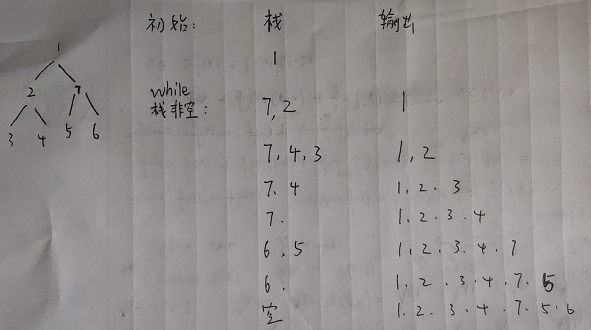

7. Non recursive traversal of BVH tree

There is no stack concept on GPU, nor can we execute recursive programs. We need to manually write code for binary tree traversal. For BVH tree, after intersecting with the root node, we always find its left and right subtrees, which is equivalent to the preorder traversal of binary tree, which is relatively easy to implement

Maintain a stack to hold nodes. First put the tree root on the stack, and then loop through while(!stack.empty()):

- Pop the node root from the stack

- If the right tree is not empty, push the right subtree of root into the stack

- If the left tree is not empty, push the left subtree of root into the stack

It's very simple, but pay attention to the nodes accessed first and then put into the stack, because the access order of the stack is opposite, so as to ensure that the next top element of the stack must be the node accessed first. Here is a stack state diagram during non recursive traversal:

If you don't know if there are bug s in the code, you can test it in question 144 of leetcode. Here is Portal

So far, we have mastered the method of non recursive traversal of binary trees. There is no STL stack in GLSL, so we simulate the stack with an array and an sp pointer. The following is the GLSL code for traversing the nearest triangle of BVH query:

// Traversal BVH intersection

HitResult hitBVH(Ray ray) {

HitResult res;

res.isHit = false;

res.distance = INF;

// Stack

int stack[256];

int sp = 0;

stack[sp++] = 1;

while(sp>0) {

int top = stack[--sp];

BVHNode node = getBVHNode(top);

// Is the leaf node, traverse the triangle and find the nearest intersection

if(node.n>0) {

int L = node.index;

int R = node.index + node.n - 1;

HitResult r = hitArray(ray, L, R);

if(r.isHit && r.distance<res.distance) res = r;

continue;

}

// Intersection with left and right box AABB

float d1 = INF; // Left box distance

float d2 = INF; // Right box distance

if(node.left>0) {

BVHNode leftNode = getBVHNode(node.left);

d1 = hitAABB(ray, leftNode.AA, leftNode.BB);

}

if(node.right>0) {

BVHNode rightNode = getBVHNode(node.right);

d2 = hitAABB(ray, rightNode.AA, rightNode.BB);

}

// Search in the nearest box

if(d1>0 && d2>0) {

if(d1<d2) { // D1 < D2, left first

stack[sp++] = node.right;

stack[sp++] = node.left;

} else { // D2 < D1, right first

stack[sp++] = node.left;

stack[sp++] = node.right;

}

} else if(d1>0) { // Hit left only

stack[sp++] = node.left;

} else if(d2>0) { // Hit right only

stack[sp++] = node.right;

}

}

return res;

}

Here, by judging the distance of the intersection, the priority is to find the nearest box, which can be greatly accelerated. Replace the hitArray of the original violence search with a new hitBVH function, and then look at the effect again:

The number of frames is stable at about 100, which is more than 20 times more efficient than the previous five frames

8. Start ray tracing

8.1. Principle review

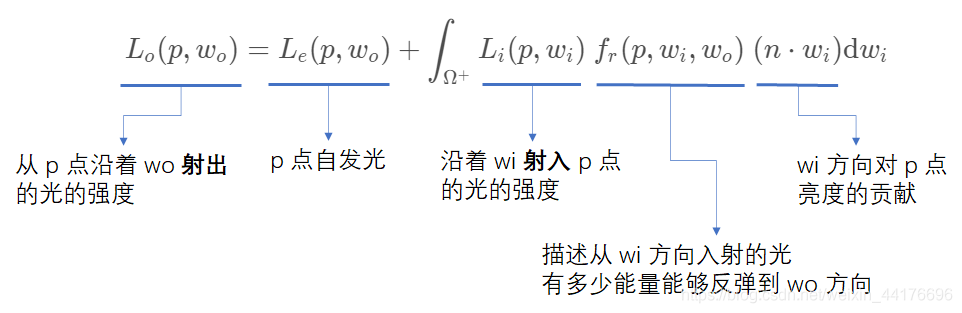

Review the rendering equation again:

Because the optical path is reversible, the energy of the light emitted into point p along the wi direction is equal to the energy of the light emitted along the wi direction from point q:

L i ( p , w i ) = L o ( q , w i ) L_i(p, w_i)=L_o(q, w_i) Li(p,wi)=Lo(q,wi)

Therefore, a recursive formula is formed:

L o ( p , w o ) = L e ( p , w o ) + ∫ Ω + L o ( q , w i ) f r ( p , w i , w o ) ( n ⋅ w i ) d w i L_o(p, w_o)=L_e(p, w_o)+\int_{\Omega^+}L_o(q, w_i) \ f_r(p, w_i, w_o) \ (n\cdot w_i) {\rm d} w_i Lo(p,wo)=Le(p,wo)+∫Ω+Lo(q,wi) fr(p,wi,wo) (n⋅wi)dwi

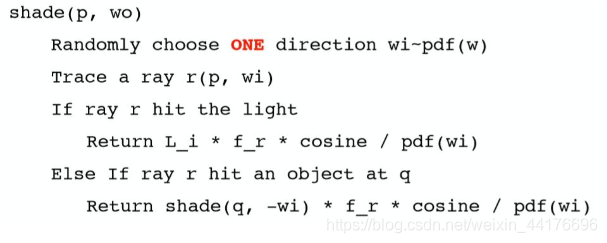

So the pseudo code is:

Note:

Because there is a random wi in the normal hemisphere at point p, the direction of wi is emission, which is opposite to the defined injection, so the negative sign is taken

The return result of each recursion is multiplied by f_r * cosine / pdf, but for no recursion in the shader, we use a loop instead. Maintain a variable history to record each recursion and return the cumulative multiplication of the result.

It is not consistent with the parameter entry of the above pseudo code. We give the surface information of a point p, that is, HitResult structure, an incident light direction viewDir and a maximum ejection times, and then solve the color of point p through pathTracing function:

throw light

...

// primary hit

HitResult firstHit = hitBVH(ray);

vec3 color;

if(!firstHit.isHit) {

color = vec3(0);

} else {

vec3 Le = firstHit.material.emissive;

int maxBounce = 2;

vec3 Li = pathTracing(firstHit, maxBounce);

color = Le + Li;

}

fragColor = vec4(color, 1.0);

We just finished writing the main function, but pathTracing hasn't started yet. But we have to do some groundwork

8.2. Help function

Write three help functions:

- Function of random numbers with uniform distribution of 0 ~ 1

- Generating a function of a random vector uniformly distributed in the hemisphere

- Function of any vector projected onto the normal hemisphere

The first is the random number with uniform distribution of 0 ~ 1. This part of the code is quoted from Casual Shadertoy Path Tracing Part 1 , and a uniform uint variable frameCounter (frame counter) is required as random seed, as well as width, height and NDC coordinate pix variables of current screen pixels:

uniform uint frameCounter;

uint seed = uint(

uint((pix.x * 0.5 + 0.5) * width) * uint(1973) +

uint((pix.y * 0.5 + 0.5) * height) * uint(9277) +

uint(frameCounter) * uint(26699)) | uint(1);

uint wang_hash(inout uint seed) {

seed = uint(seed ^ uint(61)) ^ uint(seed >> uint(16));

seed *= uint(9);

seed = seed ^ (seed >> 4);

seed *= uint(0x27d4eb2d);

seed = seed ^ (seed >> 15);

return seed;

}

float rand() {

return float(wang_hash(seed)) / 4294967296.0;

}

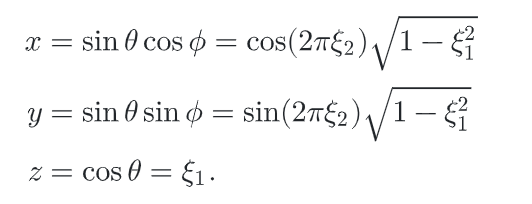

The second is hemispherical uniform distribution, and this part of the code is quoted from PBRT 13.6 , where ξ 1 , ξ 2 \xi_1, \xi_2 ξ 1, ξ 2 ¢ is a random number evenly distributed from 0 to 1:

The code is as follows:

// Hemispherical uniform sampling

vec3 SampleHemisphere() {

float z = rand();

float r = max(0, sqrt(1.0 - z*z));

float phi = 2.0 * PI * rand();

return vec3(r * cos(phi), r * sin(phi), z);

}

Then it is projected to the normal hemisphere, and the code of this part is quoted from GPU Path Tracing in Unity – Part 2 , the GLSL implementation is different from the text:

// Project the vector v onto the normal hemisphere of N

vec3 toNormalHemisphere(vec3 v, vec3 N) {

vec3 helper = vec3(1, 0, 0);

if(abs(N.x)>0.999) helper = vec3(0, 0, 1);

vec3 tangent = normalize(cross(N, helper));

vec3 bitangent = normalize(cross(N, tangent));

return v.x * tangent + v.y * bitangent + v.z * N;

}

8.3. Implementation of pathtracing

This is followed by the implementation of pathTracing. Here we only implement diffuse reflection

Hemispherical area 2 π 2\pi 2 π, here we take the probability density function pdf of diffuse reflection as 1 / 2 π 1/2\pi 1 / 2 π, in addition, about f_r here we take the surface color divided by π \pi π. Let's sell it first. Let's take it as a constant

Note:

Here f_r is actually BRDF, that is, bidirectional reflection distribution function

The value of the function BRDF(p, wi, wo) describes how much light can be emitted from Wo after scattering when light enters point p from wi

One conclusion is that the BRDF of diffuse reflection is the color value divided by pi

Remember here, my subsequent blog will have a detailed derivation

The specific pathTracing code is as follows:

// Path tracking

vec3 pathTracing(HitResult hit, int maxBounce) {

vec3 Lo = vec3(0); // Final color

vec3 history = vec3(1); // Recursive accumulated color

for(int bounce=0; bounce<maxBounce; bounce++) {

// Random exit direction wi

vec3 wi = toNormalHemisphere(SampleHemisphere(), hit.normal);

// Diffuse: emitting rays randomly

Ray randomRay;

randomRay.startPoint = hit.hitPoint;

randomRay.direction = wi;

HitResult newHit = hitBVH(randomRay);

float pdf = 1.0 / (2.0 * PI); // Hemispherical uniform sampling probability density

float cosine_o = max(0, dot(-hit.viewDir, hit.normal)); // Cosine of angle between incident light and normal

float cosine_i = max(0, dot(randomRay.direction, hit.normal)); // Cosine of angle between outgoing light and normal

vec3 f_r = hit.material.baseColor / PI; // Diffuse BRDF

// Miss

if(!newHit.isHit) {

break;

}

// Hit light accumulation color

vec3 Le = newHit.material.emissive;

Lo += history * Le * f_r * cosine_i / pdf;

// Recursion (step)

hit = newHit;

history *= f_r * cosine_i / pdf; // Cumulative color

}

return Lo;

}

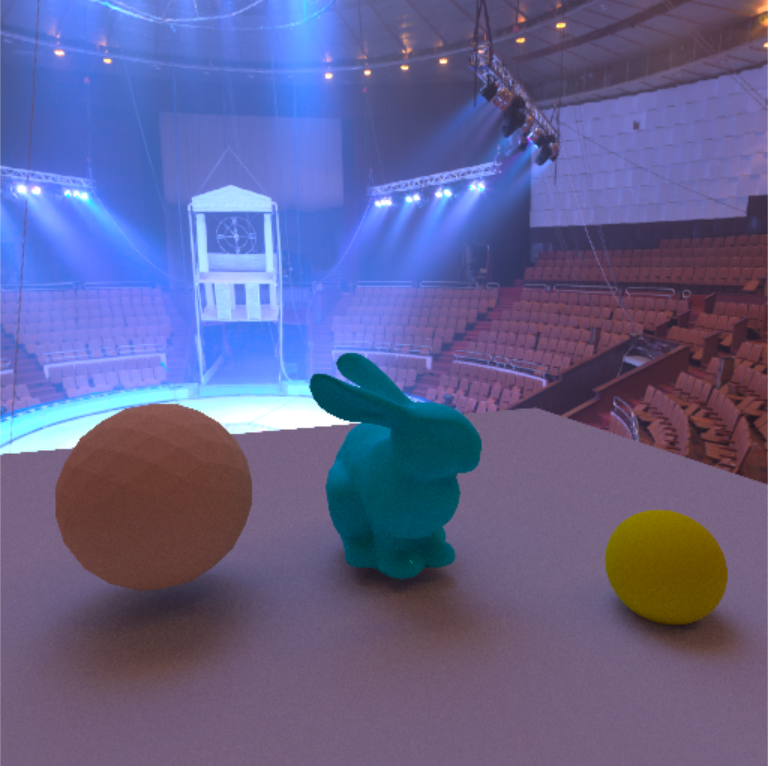

Run code:

The result is very noisy. This is because we want to accumulate the results of each frame as the integral value instead of taking each discrete sample separately. Therefore, we need to mix the rendering results of multiple frames

8.4. Multi frame mixing and post-processing

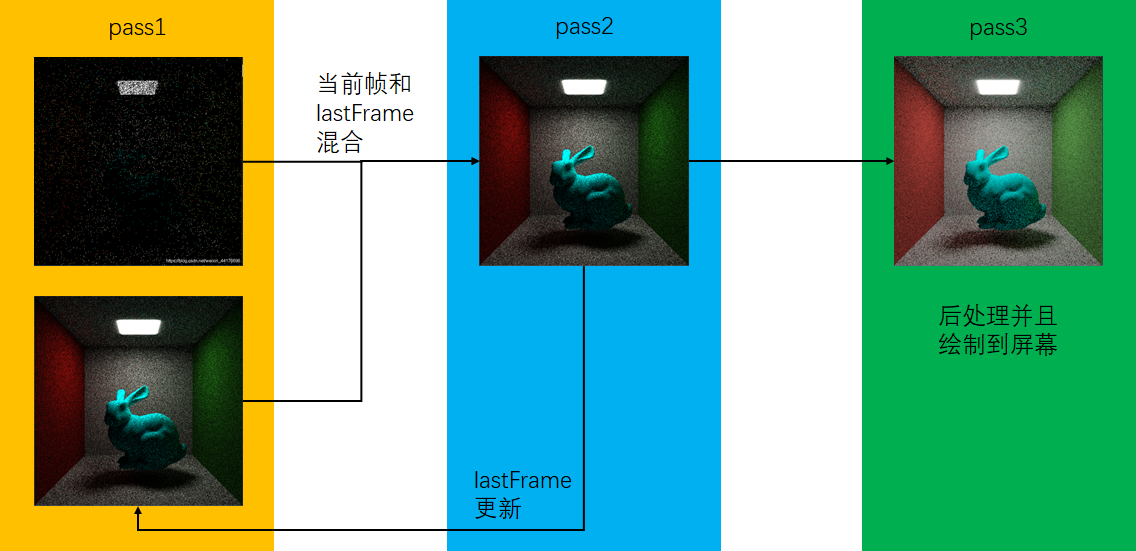

In order to obtain the result of the previous frame, we need to maintain a texture lastFrame to save the image of the previous frame. At the same time, in order to post process the output (such as gamma correction and tone mapping), we need to implement a simple pipeline:

pass1 is raytraced, mixed with the previous frame and output. pass2 frame buffer has only one color attachment, which is associated with lastFrame. The value of lastFrame can be updated by directly outputting the color, while pass3 is post-processing and final output.

For pipeline and frame caching, you should be familiar with the children's shoes of defer render or Minecraft shader. You can also refer to my blog: OpenGL learning (XI): delayed rendering pipeline , here we just change the first geometry pass to raytracing pass

Note:

We have to deal with geometry pass later

A RenderPass class is encapsulated here, where colorAttachments is the texture id to be passed into the next pass, and these textures will be used as color attachments for frame buffer. Then each pass directly calls draw, where texPassArray is the colorAttachments of the previous pass. For the specific c + + code implementation, please refer to the [complete] code section below:

class RenderPass {

public:

std::vector<GLuint> colorAttachments;

// More properties

void bindData(bool finalPass = false) {

}

void draw(std::vector<GLuint> texPassArray = {}) {

}

};

After we implement the pipeline, we add multi frame mixing in the code of the chip shader of pass1:

uniform sampler2D lastFrame; ... // Blend with previous frame vec3 lastColor = texture2D(lastFrame, pix.xy*0.5+0.5).rgb; color = mix(lastColor, color, 1.0/float(frameCounter+1));

Again, the motion picture is compressed, and there is a little more noise:

Yeah! So far, we have realized light tracing on GPU, and the fitting process is still visual

9. HDR environment map

add a beautiful thing to a contrasting beautiful thing.

HDR picture is a panorama. Different from ordinary soap flakes, HDR picture can represent a wider range of brightness. The contrast between general pictures and HDR pictures when brightness changes is as follows:

Note:

Picture quoted from How to Create Your Own HDR Environment Maps

This is a blog about photography. In a sense, photography is also a process of sampling (or integrating) the real world. When the light gate of the camera photosensitive chip opens, the capacitor begins to accumulate photons (charge), and then read the value of the capacitor to obtain the pixel color

(Chunyun, I don't promise. Yes, I can't take photos, and I don't have money to buy a camera Orz

The general picture brightness is 255 when it is full, but the HDR brightness is the whole floating-point number range, which can better represent the real light, so it is used for environment mapping

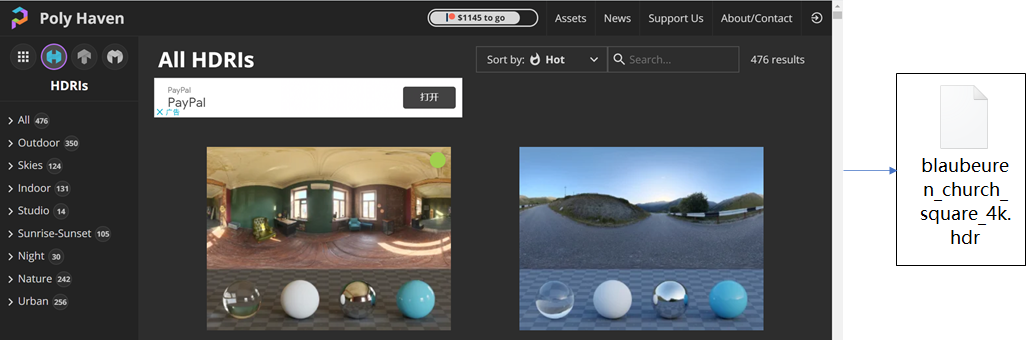

First, you can ploy heaven Download HDR map above:

Then we need to read the HDR picture. Obviously, sol cannot be read (in fact, there is A pseudo HDR, which is realized through RGBE, RGBdivA and RGBdivA2, but it seems that there is A BUG with channel A always 128, so it can't be used...

Here we choose a lightweight Library: HDR Image Loader. It does not need to be installed, but only needs to be include d. Its code is in here

After successfully include, read the code of HDR diagram as follows:

#include "lib/hdrloader.h"

...

// hdr panorama

HDRLoaderResult hdrRes;

bool r = HDRLoader::load("./skybox/sunset.hdr", hdrRes);

GLuint hdrMap = Create a texture ()

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB32F, hdrRes.width, hdrRes.height, 0, GL_RGB, GL_FLOAT, hdrRes.cols);

Then in the shader, we directly output the following:

There are two questions:

- The image is a little dark because there is no gamma correction

- The image is reversed. Just flip y when sampling later

- The image is distorted: we'll use spherical coord sampling later

Then we give a vector v and convert it into the texture coordinate uv of the sampled HDR image, which is the code reference of this part stack overflow , the difference from the original is that I flip the y coordinate:

// Convert the 3D vector v to the texture coordinate uv of HDR map

vec2 SampleSphericalMap(vec3 v) {

vec2 uv = vec2(atan(v.z, v.x), asin(v.y));

uv /= vec2(2.0 * PI, PI);

uv += 0.5;

uv.y = 1.0 - uv.y;

return uv;

}

At this point, we can sample the HDR diagram:

// Get HDR ambient color

vec3 sampleHdr(vec3 v) {

vec2 uv = SampleSphericalMap(normalize(v));

vec3 color = texture2D(hdrMap, uv).rgb;

//color = min(color, vec3(10));

return color;

}

Note:

There is a sentence in the above code that min claps the HDR brightness to less than 10. Strictly speaking, this is wrong, and I also commented it out

However, if this is not done, many firefly images will appear, and the pictures will be noisy, as shown in the figure:

Then, in the main function, in the processing of miss of primary ray, color = vec3(0) is replaced by:

color = sampleHdr(ray.direction);

In addition, in pathTracing, ray miss also needs to be processed:

// Miss

if(!newHit.isHit) {

vec3 skyColor = sampleHdr(randomRay.direction);

Lo += history * skyColor * f_r * cosine_i / pdf;

break;

}

The maximum value of HDR in the following figure is clamp ed to within 10. You can see that the effect is still good:

If you don't clamp, you can cast a brighter shadow, but the disadvantage is that it is firefly. Later, you may try to solve it with importance sampling (escape)

10. Complete code

10.1. c + + code

#include <iostream>

#include <iomanip>

#include <string>

#include <fstream>

#include <vector>

#include <sstream>

#include <iostream>

#include <algorithm>

#include <ctime>

#include <GL/glew.h>

#include <GL/freeglut.h>

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

#include <SOIL2/SOIL2.h>

#include "lib/hdrloader.h"

#define INF 114514.0

using namespace glm;

// ----------------------------------------------------------------------------- //

// Object surface material definition

struct Material {

vec3 emissive = vec3(0, 0, 0); // The luminous color when used as a light source

vec3 baseColor = vec3(1, 1, 1);

float subsurface = 0.0;

float metallic = 0.0;

float specular = 0.0;

float specularTint = 0.0;

float roughness = 0.0;

float anisotropic = 0.0;

float sheen = 0.0;

float sheenTint = 0.0;

float clearcoat = 0.0;

float clearcoatGloss = 0.0;

float IOR = 1.0;

float transmission = 0.0;

};

// Triangle definition

struct Triangle {

vec3 p1, p2, p3; // Vertex coordinates

vec3 n1, n2, n3; // Vertex normal

Material material; // texture of material

};

// BVH tree node

struct BVHNode {

int left, right; // Left and right subtree index

int n, index; // Leaf node information

vec3 AA, BB; // Collision box

};

// ----------------------------------------------------------------------------- //

struct Triangle_encoded {

vec3 p1, p2, p3; // Vertex coordinates

vec3 n1, n2, n3; // Vertex normal

vec3 emissive; // Self luminous parameters

vec3 baseColor; // colour

vec3 param1; // (subsurface, metallic, specular)

vec3 param2; // (specularTint, roughness, anisotropic)

vec3 param3; // (sheen, sheenTint, clearcoat)

vec3 param4; // (clearcoatGloss, IOR, transmission)

};

struct BVHNode_encoded {

vec3 childs; // (left, right, reserved)

vec3 leafInfo; // (n, index, reserved)

vec3 AA, BB;

};

// ----------------------------------------------------------------------------- //

class RenderPass {

public:

GLuint FBO = 0;

GLuint vao, vbo;

std::vector<GLuint> colorAttachments;

GLuint program;

int width = 512;

int height = 512;

void bindData(bool finalPass = false) {

if (!finalPass) glGenFramebuffers(1, &FBO);

glBindFramebuffer(GL_FRAMEBUFFER, FBO);

glGenBuffers(1, &vbo);

glBindBuffer(GL_ARRAY_BUFFER, vbo);

std::vector<vec3> square = { vec3(-1, -1, 0), vec3(1, -1, 0), vec3(-1, 1, 0), vec3(1, 1, 0), vec3(-1, 1, 0), vec3(1, -1, 0) };

glBufferData(GL_ARRAY_BUFFER, sizeof(vec3) * square.size(), NULL, GL_STATIC_DRAW);

glBufferSubData(GL_ARRAY_BUFFER, 0, sizeof(vec3) * square.size(), &square[0]);

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

glEnableVertexAttribArray(0); // layout (location = 0)

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, (GLvoid*)0);

// If it is not finalPass, the color attachment of frame buffer is generated

if (!finalPass) {

std::vector<GLuint> attachments;

for (int i = 0; i < colorAttachments.size(); i++) {

glBindTexture(GL_TEXTURE_2D, colorAttachments[i]);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0 + i, GL_TEXTURE_2D, colorAttachments[i], 0);// Bind color texture to color i attachment

attachments.push_back(GL_COLOR_ATTACHMENT0 + i);

}

glDrawBuffers(attachments.size(), &attachments[0]);

}

glBindFramebuffer(GL_FRAMEBUFFER, 0);

}

void draw(std::vector<GLuint> texPassArray = {}) {

glUseProgram(program);

glBindFramebuffer(GL_FRAMEBUFFER, FBO);

glBindVertexArray(vao);

// Transmit the frame buffer color attachment of the previous frame

for (int i = 0; i < texPassArray.size(); i++) {

glActiveTexture(GL_TEXTURE0+i);

glBindTexture(GL_TEXTURE_2D, texPassArray[i]);

std::string uName = "texPass" + std::to_string(i);

glUniform1i(glGetUniformLocation(program, uName.c_str()), i);

}

glViewport(0, 0, width, height);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glDrawArrays(GL_TRIANGLES, 0, 6);

glBindVertexArray(0);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

glUseProgram(0);

}

};

// ----------------------------------------------------------------------------- //

GLuint trianglesTextureBuffer;

GLuint nodesTextureBuffer;

GLuint lastFrame;

GLuint hdrMap;

RenderPass pass1;

RenderPass pass2;

RenderPass pass3;

// Camera parameters

float upAngle = 0.0;

float rotatAngle = 0.0;

float r = 4.0;

// ----------------------------------------------------------------------------- //

// Sort by triangle center -- comparison function

bool cmpx(const Triangle& t1, const Triangle& t2) {

vec3 center1 = (t1.p1 + t1.p2 + t1.p3) / vec3(3, 3, 3);

vec3 center2 = (t2.p1 + t2.p2 + t2.p3) / vec3(3, 3, 3);

return center1.x < center2.x;

}

bool cmpy(const Triangle& t1, const Triangle& t2) {

vec3 center1 = (t1.p1 + t1.p2 + t1.p3) / vec3(3, 3, 3);

vec3 center2 = (t2.p1 + t2.p2 + t2.p3) / vec3(3, 3, 3);

return center1.y < center2.y;

}

bool cmpz(const Triangle& t1, const Triangle& t2) {

vec3 center1 = (t1.p1 + t1.p2 + t1.p3) / vec3(3, 3, 3);

vec3 center2 = (t2.p1 + t2.p2 + t2.p3) / vec3(3, 3, 3);

return center1.z < center2.z;

}

// ----------------------------------------------------------------------------- //

// Reads the file and returns a long string representing the file content

std::string readShaderFile(std::string filepath) {

std::string res, line;

std::ifstream fin(filepath);

if (!fin.is_open())

{

std::cout << "file " << filepath << " Open failed" << std::endl;

exit(-1);

}

while (std::getline(fin, line))

{

res += line + '\n';

}

fin.close();

return res;

}

GLuint getTextureRGB32F(int width, int height) {

GLuint tex;

glGenTextures(1, &tex);

glBindTexture(GL_TEXTURE_2D, tex);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA32F, width, height, 0, GL_RGBA, GL_FLOAT, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

return tex;

}

// Get shader object

GLuint getShaderProgram(std::string fshader, std::string vshader) {

// Read shader source file

std::string vSource = readShaderFile(vshader);

std::string fSource = readShaderFile(fshader);

const char* vpointer = vSource.c_str();

const char* fpointer = fSource.c_str();

// fault-tolerant

GLint success;

GLchar infoLog[512];

// Create and compile vertex shaders

GLuint vertexShader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShader, 1, (const GLchar**)(&vpointer), NULL);

glCompileShader(vertexShader);

glGetShaderiv(vertexShader, GL_COMPILE_STATUS, &success); // Error detection

if (!success)

{

glGetShaderInfoLog(vertexShader, 512, NULL, infoLog);

std::cout << "Vertex shader compilation error\n" << infoLog << std::endl;

exit(-1);

}

// Create and compile clip shaders

GLuint fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShader, 1, (const GLchar**)(&fpointer), NULL);

glCompileShader(fragmentShader);

glGetShaderiv(fragmentShader, GL_COMPILE_STATUS, &success); // Error detection

if (!success)

{

glGetShaderInfoLog(fragmentShader, 512, NULL, infoLog);

std::cout << "Fragment shader compilation error\n" << infoLog << std::endl;

exit(-1);

}

// Link two shaders to the program object

GLuint shaderProgram = glCreateProgram();

glAttachShader(shaderProgram, vertexShader);

glAttachShader(shaderProgram, fragmentShader);

glLinkProgram(shaderProgram);

// Delete shader object

glDeleteShader(vertexShader);

glDeleteShader(fragmentShader);

return shaderProgram;

}

// ----------------------------------------------------------------------------- //

// Model transformation matrix

mat4 getTransformMatrix(vec3 rotateCtrl, vec3 translateCtrl, vec3 scaleCtrl) {

glm::mat4 unit( // Identity matrix

glm::vec4(1, 0, 0, 0),

glm::vec4(0, 1, 0, 0),

glm::vec4(0, 0, 1, 0),

glm::vec4(0, 0, 0, 1)

);

mat4 scale = glm::scale(unit, scaleCtrl);

mat4 translate = glm::translate(unit, translateCtrl);

mat4 rotate = unit;

rotate = glm::rotate(rotate, glm::radians(rotateCtrl.x), glm::vec3(1, 0, 0));

rotate = glm::rotate(rotate, glm::radians(rotateCtrl.y), glm::vec3(0, 1, 0));

rotate = glm::rotate(rotate, glm::radians(rotateCtrl.z), glm::vec3(0, 0, 1));

mat4 model = translate * rotate * scale;

return model;

}

// Read obj

void readObj(std::string filepath, std::vector<Triangle>& triangles, Material material, mat4 trans, bool smoothNormal) {

// Vertex position, index

std::vector<vec3> vertices;

std::vector<GLuint> indices;

// Open file stream

std::ifstream fin(filepath);

std::string line;

if (!fin.is_open()) {

std::cout << "file " << filepath << " Open failed" << std::endl;

exit(-1);

}

// Calculate the AABB box and normalize the model size

float maxx = -11451419.19;

float maxy = -11451419.19;

float maxz = -11451419.19;

float minx = 11451419.19;

float miny = 11451419.19;

float minz = 11451419.19;

// Read by line

while (std::getline(fin, line)) {

std::istringstream sin(line); // Parse and read a row of data as a string stream

std::string type;

GLfloat x, y, z;

int v0, v1, v2;

int vn0, vn1, vn2;

int vt0, vt1, vt2;

char slash;

// Count the number of inclined rods and read them in different formats

int slashCnt = 0;

for (int i = 0; i < line.length(); i++) {

if (line[i] == '/') slashCnt++;

}

// Read obj file

sin >> type;

if (type == "v") {

sin >> x >> y >> z;

vertices.push_back(vec3(x, y, z));

maxx = max(maxx, x); maxy = max(maxx, y); maxz = max(maxx, z);

minx = min(minx, x); miny = min(minx, y); minz = min(minx, z);

}

if (type == "f") {

if (slashCnt == 6) {

sin >> v0 >> slash >> vt0 >> slash >> vn0;

sin >> v1 >> slash >> vt1 >> slash >> vn1;

sin >> v2 >> slash >> vt2 >> slash >> vn2;

}

else if (slashCnt == 3) {

sin >> v0 >> slash >> vt0;

sin >> v1 >> slash >> vt1;

sin >> v2 >> slash >> vt2;

}

else {

sin >> v0 >> v1 >> v2;

}

indices.push_back(v0 - 1);

indices.push_back(v1 - 1);

indices.push_back(v2 - 1);

}

}

// Model size normalization

float lenx = maxx - minx;

float leny = maxy - miny;

float lenz = maxz - minz;

float maxaxis = max(lenx, max(leny, lenz));

for (auto& v : vertices) {

v.x /= maxaxis;

v.y /= maxaxis;

v.z /= maxaxis;

}

// Coordinate transformation through matrix

for (auto& v : vertices) {

vec4 vv = vec4(v.x, v.y, v.z, 1);

vv = trans * vv;

v = vec3(vv.x, vv.y, vv.z);

}

// Generate Normals

std::vector<vec3> normals(vertices.size(), vec3(0, 0, 0));

for (int i = 0; i < indices.size(); i += 3) {

vec3 p1 = vertices[indices[i]];

vec3 p2 = vertices[indices[i + 1]];

vec3 p3 = vertices[indices[i + 2]];

vec3 n = normalize(cross(p2 - p1, p3 - p1));

normals[indices[i]] += n;

normals[indices[i + 1]] += n;

normals[indices[i + 2]] += n;

}

// Building an array of Triangle objects

int offset = triangles.size(); // Incremental update

triangles.resize(offset + indices.size() / 3);

for (int i = 0; i < indices.size(); i += 3) {

Triangle& t = triangles[offset + i / 3];

// Pass vertex attributes

t.p1 = vertices[indices[i]];

t.p2 = vertices[indices[i + 1]];

t.p3 = vertices[indices[i + 2]];

if (!smoothNormal) {

vec3 n = normalize(cross(t.p2 - t.p1, t.p3 - t.p1));

t.n1 = n; t.n2 = n; t.n3 = n;

}

else {

t.n1 = normalize(normals[indices[i]]);

t.n2 = normalize(normals[indices[i + 1]]);

t.n3 = normalize(normals[indices[i + 2]]);

}

// Transmission material

t.material = material;

}

}

// Build BVH

int buildBVH(std::vector<Triangle>& triangles, std::vector<BVHNode>& nodes, int l, int r, int n) {

if (l > r) return 0;

// Note:

// It cannot be operated by pointer, reference, etc. it must be operated by nodes[id]

// Because STD:: vector < > will be copied to larger memory during capacity expansion, the address will change

// The pointer and reference point to the original memory, so an error will occur

nodes.push_back(BVHNode());

int id = nodes.size() - 1; // Note: save the index first

nodes[id].left = nodes[id].right = nodes[id].n = nodes[id].index = 0;

nodes[id].AA = vec3(1145141919, 1145141919, 1145141919);

nodes[id].BB = vec3(-1145141919, -1145141919, -1145141919);

// Calculate AABB

for (int i = l; i <= r; i++) {

// Minimum point AA

float minx = min(triangles[i].p1.x, min(triangles[i].p2.x, triangles[i].p3.x));

float miny = min(triangles[i].p1.y, min(triangles[i].p2.y, triangles[i].p3.y));

float minz = min(triangles[i].p1.z, min(triangles[i].p2.z, triangles[i].p3.z));

nodes[id].AA.x = min(nodes[id].AA.x, minx);

nodes[id].AA.y = min(nodes[id].AA.y, miny);

nodes[id].AA.z = min(nodes[id].AA.z, minz);

// Maximum point BB

float maxx = max(triangles[i].p1.x, max(triangles[i].p2.x, triangles[i].p3.x));

float maxy = max(triangles[i].p1.y, max(triangles[i].p2.y, triangles[i].p3.y));

float maxz = max(triangles[i].p1.z, max(triangles[i].p2.z, triangles[i].p3.z));

nodes[id].BB.x = max(nodes[id].BB.x, maxx);

nodes[id].BB.y = max(nodes[id].BB.y, maxy);

nodes[id].BB.z = max(nodes[id].BB.z, maxz);

}

// No more than n triangles return leaf nodes

if ((r - l + 1) <= n) {

nodes[id].n = r - l + 1;

nodes[id].index = l;

return id;

}

// Otherwise, recursive tree building

float lenx = nodes[id].BB.x - nodes[id].AA.x;

float leny = nodes[id].BB.y - nodes[id].AA.y;

float lenz = nodes[id].BB.z - nodes[id].AA.z;

// Divided by x

if (lenx >= leny && lenx >= lenz)

std::sort(triangles.begin() + l, triangles.begin() + r + 1, cmpx);

// Divided by y

if (leny >= lenx && leny >= lenz)

std::sort(triangles.begin() + l, triangles.begin() + r + 1, cmpy);

// Divided by z

if (lenz >= lenx && lenz >= leny)

std::sort(triangles.begin() + l, triangles.begin() + r + 1, cmpz);

// recursion

int mid = (l + r) / 2;

int left = buildBVH(triangles, nodes, l, mid, n);

int right = buildBVH(triangles, nodes, mid + 1, r, n);

nodes[id].left = left;

nodes[id].right = right;

return id;

}

// SAH optimized construction of BVH

int buildBVHwithSAH(std::vector<Triangle>& triangles, std::vector<BVHNode>& nodes, int l, int r, int n) {

if (l > r) return 0;

nodes.push_back(BVHNode());

int id = nodes.size() - 1;

nodes[id].left = nodes[id].right = nodes[id].n = nodes[id].index = 0;

nodes[id].AA = vec3(1145141919, 1145141919, 1145141919);

nodes[id].BB = vec3(-1145141919, -1145141919, -1145141919);

// Calculate AABB

for (int i = l; i <= r; i++) {

// Minimum point AA

float minx = min(triangles[i].p1.x, min(triangles[i].p2.x, triangles[i].p3.x));

float miny = min(triangles[i].p1.y, min(triangles[i].p2.y, triangles[i].p3.y));

float minz = min(triangles[i].p1.z, min(triangles[i].p2.z, triangles[i].p3.z));

nodes[id].AA.x = min(nodes[id].AA.x, minx);

nodes[id].AA.y = min(nodes[id].AA.y, miny);

nodes[id].AA.z = min(nodes[id].AA.z, minz);

// Maximum point BB

float maxx = max(triangles[i].p1.x, max(triangles[i].p2.x, triangles[i].p3.x));

float maxy = max(triangles[i].p1.y, max(triangles[i].p2.y, triangles[i].p3.y));

float maxz = max(triangles[i].p1.z, max(triangles[i].p2.z, triangles[i].p3.z));

nodes[id].BB.x = max(nodes[id].BB.x, maxx);

nodes[id].BB.y = max(nodes[id].BB.y, maxy);

nodes[id].BB.z = max(nodes[id].BB.z, maxz);

}

// No more than n triangles return leaf nodes

if ((r - l + 1) <= n) {

nodes[id].n = r - l + 1;

nodes[id].index = l;

return id;

}

// Otherwise, recursive tree building

float Cost = INF;

int Axis = 0;

int Split = (l + r) / 2;

for (int axis = 0; axis < 3; axis++) {

// Sort by x, y and z axes respectively

if (axis == 0) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpx);

if (axis == 1) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpy);

if (axis == 2) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpz);

// Leftmax [i]: the maximum xyz value in [l, I]

// Leftmin [i]: the smallest xyz value in [l, I]

std::vector<vec3> leftMax(r - l + 1, vec3(-INF, -INF, -INF));

std::vector<vec3> leftMin(r - l + 1, vec3(INF, INF, INF));

// Calculate prefix note i-l to align to subscript 0

for (int i = l; i <= r; i++) {

Triangle& t = triangles[i];

int bias = (i == l) ? 0 : 1; // Special treatment of the first element

leftMax[i - l].x = max(leftMax[i - l - bias].x, max(t.p1.x, max(t.p2.x, t.p3.x)));

leftMax[i - l].y = max(leftMax[i - l - bias].y, max(t.p1.y, max(t.p2.y, t.p3.y)));

leftMax[i - l].z = max(leftMax[i - l - bias].z, max(t.p1.z, max(t.p2.z, t.p3.z)));

leftMin[i - l].x = min(leftMin[i - l - bias].x, min(t.p1.x, min(t.p2.x, t.p3.x)));

leftMin[i - l].y = min(leftMin[i - l - bias].y, min(t.p1.y, min(t.p2.y, t.p3.y)));

leftMin[i - l].z = min(leftMin[i - l - bias].z, min(t.p1.z, min(t.p2.z, t.p3.z)));

}

// Rightmax [i]: the largest xyz value in [I, R]

// Rightmin [i]: the smallest xyz value in [I, R]

std::vector<vec3> rightMax(r - l + 1, vec3(-INF, -INF, -INF));

std::vector<vec3> rightMin(r - l + 1, vec3(INF, INF, INF));

// Calculate suffix note i-l to align to subscript 0

for (int i = r; i >= l; i--) {

Triangle& t = triangles[i];

int bias = (i == r) ? 0 : 1; // Special treatment of the first element

rightMax[i - l].x = max(rightMax[i - l + bias].x, max(t.p1.x, max(t.p2.x, t.p3.x)));

rightMax[i - l].y = max(rightMax[i - l + bias].y, max(t.p1.y, max(t.p2.y, t.p3.y)));

rightMax[i - l].z = max(rightMax[i - l + bias].z, max(t.p1.z, max(t.p2.z, t.p3.z)));

rightMin[i - l].x = min(rightMin[i - l + bias].x, min(t.p1.x, min(t.p2.x, t.p3.x)));

rightMin[i - l].y = min(rightMin[i - l + bias].y, min(t.p1.y, min(t.p2.y, t.p3.y)));

rightMin[i - l].z = min(rightMin[i - l + bias].z, min(t.p1.z, min(t.p2.z, t.p3.z)));

}

// Traversal search segmentation

float cost = INF;

int split = l;

for (int i = l; i <= r - 1; i++) {

float lenx, leny, lenz;

// Left [l, i]

vec3 leftAA = leftMin[i - l];

vec3 leftBB = leftMax[i - l];

lenx = leftBB.x - leftAA.x;

leny = leftBB.y - leftAA.y;

lenz = leftBB.z - leftAA.z;

float leftS = 2.0 * ((lenx * leny) + (lenx * lenz) + (leny * lenz));

float leftCost = leftS * (i - l + 1);

// Right [i+1, r]

vec3 rightAA = rightMin[i + 1 - l];

vec3 rightBB = rightMax[i + 1 - l];

lenx = rightBB.x - rightAA.x;

leny = rightBB.y - rightAA.y;

lenz = rightBB.z - rightAA.z;

float rightS = 2.0 * ((lenx * leny) + (lenx * lenz) + (leny * lenz));

float rightCost = rightS * (r - i);

// Record the minimum answer for each split

float totalCost = leftCost + rightCost;

if (totalCost < cost) {

cost = totalCost;

split = i;

}

}

// Record the best answer for each axis

if (cost < Cost) {

Cost = cost;

Axis = axis;

Split = split;

}

}

// Split by best axis

if (Axis == 0) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpx);

if (Axis == 1) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpy);

if (Axis == 2) std::sort(&triangles[0] + l, &triangles[0] + r + 1, cmpz);

// recursion

int left = buildBVHwithSAH(triangles, nodes, l, Split, n);

int right = buildBVHwithSAH(triangles, nodes, Split + 1, r, n);

nodes[id].left = left;

nodes[id].right = right;

return id;

}

// ----------------------------------------------------------------------------- //

// draw

clock_t t1, t2;

double dt, fps;

unsigned int frameCounter = 0;

void display() {

// Frame timing

t2 = clock();

dt = (double)(t2 - t1) / CLOCKS_PER_SEC;

fps = 1.0 / dt;

std::cout << "\r";

std::cout << std::fixed << std::setprecision(2) << "FPS : " << fps << " Number of iterations: " << frameCounter;

t1 = t2;

// Camera parameters

vec3 eye = vec3(-sin(radians(rotatAngle)) * cos(radians(upAngle)), sin(radians(upAngle)), cos(radians(rotatAngle)) * cos(radians(upAngle)));

eye.x *= r; eye.y *= r; eye.z *= r;

mat4 cameraRotate = lookAt(eye, vec3(0, 0, 0), vec3(0, 1, 0)); // The camera looks at the origin

cameraRotate = inverse(cameraRotate); // The inverse matrix of lookat converts the light direction

// Pass uniform to pass1

glUseProgram(pass1.program);

glUniform3fv(glGetUniformLocation(pass1.program, "eye"), 1, value_ptr(eye));

glUniformMatrix4fv(glGetUniformLocation(pass1.program, "cameraRotate"), 1, GL_FALSE, value_ptr(cameraRotate));

glUniform1ui(glGetUniformLocation(pass1.program, "frameCounter"), frameCounter++);// Transmit counter as random seed

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_BUFFER, trianglesTextureBuffer);

glUniform1i(glGetUniformLocation(pass1.program, "triangles"), 0);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_BUFFER, nodesTextureBuffer);

glUniform1i(glGetUniformLocation(pass1.program, "nodes"), 1);

glActiveTexture(GL_TEXTURE2);

glBindTexture(GL_TEXTURE_2D, lastFrame);

glUniform1i(glGetUniformLocation(pass1.program, "lastFrame"), 2);

glActiveTexture(GL_TEXTURE3);

glBindTexture(GL_TEXTURE_2D, hdrMap);

glUniform1i(glGetUniformLocation(pass1.program, "hdrMap"), 3);

// draw

pass1.draw();

pass2.draw(pass1.colorAttachments);

pass3.draw(pass2.colorAttachments);

glutSwapBuffers();

}

// Every frame

void frameFunc() {

glutPostRedisplay();

}

// Mouse motion function

double lastX = 0.0, lastY = 0.0;

void mouse(int x, int y) {

frameCounter = 0;

// Adjust rotation

rotatAngle += 150 * (x - lastX) / 512;

upAngle += 150 * (y - lastY) / 512;

upAngle = min(upAngle, 89.0f);

upAngle = max(upAngle, -89.0f);

lastX = x, lastY = y;

glutPostRedisplay(); // Repaint

}

// Mouse down

void mouseDown(int button, int state, int x, int y) {

if (button == GLUT_LEFT_BUTTON && state == GLUT_DOWN) {

lastX = x, lastY = y;

}

}

// Mouse wheel function

void mouseWheel(int wheel, int direction, int x, int y) {

frameCounter = 0;

r += -direction * 0.5;

glutPostRedisplay(); // Repaint

}

// ----------------------------------------------------------------------------- //

int main(int argc, char** argv) {

glutInit(&argc, argv); // glut initialization

glutInitDisplayMode(GLUT_RGBA | GLUT_DEPTH);

glutInitWindowSize(512, 512);// Window size

glutInitWindowPosition(350, 50);

glutCreateWindow("Path Tracing GPU"); // Create OpenGL context

glewInit();

// ----------------------------------------------------------------------------- //

// scene config

std::vector<Triangle> triangles;

Material m;

m.baseColor = vec3(0, 1, 1);

readObj("models/Stanford Bunny.obj", triangles, m, getTransformMatrix(vec3(0, 0, 0), vec3(0.3, -1.6, 0), vec3(1.5, 1.5, 1.5)),true);

m.baseColor = vec3(0.725, 0.71, 0.68);

readObj("models/quad.obj", triangles, m, getTransformMatrix(vec3(0, 0, 0), vec3(0, -1.4, 0), vec3(18.83, 0.01, 18.83)), false);

m.baseColor = vec3(1, 1, 1);

m.emissive = vec3(20, 20, 20);

readObj("models/quad.obj", triangles, m, getTransformMatrix(vec3(0, 0, 0), vec3(0.0, 1.38, -0.0), vec3(0.7, 0.01, 0.7)), false);

int nTriangles = triangles.size();

std::cout << "Model read complete: common " << nTriangles << " Three triangles" << std::endl;

// Establish bvh

BVHNode testNode;

testNode.left = 255;

testNode.right = 128;

testNode.n = 30;

testNode.AA = vec3(1, 1, 0);

testNode.BB = vec3(0, 1, 0);

std::vector<BVHNode> nodes{ testNode };

//buildBVH(triangles, nodes, 0, triangles.size() - 1, 8);

buildBVHwithSAH(triangles, nodes, 0, triangles.size() - 1, 8);

int nNodes = nodes.size();

std::cout << "BVH Build complete: common " << nNodes << " Nodes" << std::endl;

// Coded triangle, material

std::vector<Triangle_encoded> triangles_encoded(nTriangles);

for (int i = 0; i < nTriangles; i++) {

Triangle& t = triangles[i];

Material& m = t.material;

// Vertex Position

triangles_encoded[i].p1 = t.p1;

triangles_encoded[i].p2 = t.p2;

triangles_encoded[i].p3 = t.p3;

// Vertex normal

triangles_encoded[i].n1 = t.n1;

triangles_encoded[i].n2 = t.n2;

triangles_encoded[i].n3 = t.n3;

// texture of material

triangles_encoded[i].emissive = m.emissive;

triangles_encoded[i].baseColor = m.baseColor;

triangles_encoded[i].param1 = vec3(m.subsurface, m.metallic, m.specular);

triangles_encoded[i].param2 = vec3(m.specularTint, m.roughness, m.anisotropic);

triangles_encoded[i].param3 = vec3(m.sheen, m.sheenTint, m.clearcoat);

triangles_encoded[i].param4 = vec3(m.clearcoatGloss, m.IOR, m.transmission);

}

// Code BVHNode, aabb

std::vector<BVHNode_encoded> nodes_encoded(nNodes);

for (int i = 0; i < nNodes; i++) {

nodes_encoded[i].childs = vec3(nodes[i].left, nodes[i].right, 0);

nodes_encoded[i].leafInfo = vec3(nodes[i].n, nodes[i].index, 0);

nodes_encoded[i].AA = nodes[i].AA;

nodes_encoded[i].BB = nodes[i].BB;

}

// ----------------------------------------------------------------------------- //

// Generate texture

// Triangle array

GLuint tbo0;

glGenBuffers(1, &tbo0);

glBindBuffer(GL_TEXTURE_BUFFER, tbo0);

glBufferData(GL_TEXTURE_BUFFER, triangles_encoded.size() * sizeof(Triangle_encoded), &triangles_encoded[0], GL_STATIC_DRAW);

glGenTextures(1, &trianglesTextureBuffer);

glBindTexture(GL_TEXTURE_BUFFER, trianglesTextureBuffer);

glTexBuffer(GL_TEXTURE_BUFFER, GL_RGB32F, tbo0);

// BVHNode array

GLuint tbo1;

glGenBuffers(1, &tbo1);

glBindBuffer(GL_TEXTURE_BUFFER, tbo1);

glBufferData(GL_TEXTURE_BUFFER, nodes_encoded.size() * sizeof(BVHNode_encoded), &nodes_encoded[0], GL_STATIC_DRAW);

glGenTextures(1, &nodesTextureBuffer);

glBindTexture(GL_TEXTURE_BUFFER, nodesTextureBuffer);

glTexBuffer(GL_TEXTURE_BUFFER, GL_RGB32F, tbo1);

// hdr panorama

HDRLoaderResult hdrRes;

bool r = HDRLoader::load("./HDR/sunset.hdr", hdrRes);

hdrMap = getTextureRGB32F(hdrRes.width, hdrRes.height);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB32F, hdrRes.width, hdrRes.height, 0, GL_RGB, GL_FLOAT, hdrRes.cols);

// ----------------------------------------------------------------------------- //

// Pipeline configuration

pass1.program = getShaderProgram("./shaders/fshader.fsh", "./shaders/vshader.vsh");

//pass1.width = pass1.height = 256;

pass1.colorAttachments.push_back(getTextureRGB32F(pass1.width, pass1.height));

pass1.colorAttachments.push_back(getTextureRGB32F(pass1.width, pass1.height));

pass1.colorAttachments.push_back(getTextureRGB32F(pass1.width, pass1.height));

pass1.bindData();

glUseProgram(pass1.program);

glUniform1i(glGetUniformLocation(pass1.program, "nTriangles"), triangles.size());

glUniform1i(glGetUniformLocation(pass1.program, "nNodes"), nodes.size());

glUniform1i(glGetUniformLocation(pass1.program, "width"), pass1.width);

glUniform1i(glGetUniformLocation(pass1.program, "height"), pass1.height);

glUseProgram(0);

pass2.program = getShaderProgram("./shaders/pass2.fsh", "./shaders/vshader.vsh");

lastFrame = getTextureRGB32F(pass2.width, pass2.height);

pass2.colorAttachments.push_back(lastFrame);

pass2.bindData();

pass3.program = getShaderProgram("./shaders/pass3.fsh", "./shaders/vshader.vsh");

pass3.bindData(true);

// ----------------------------------------------------------------------------- //

std::cout << "start..." << std::endl << std::endl;

glEnable(GL_DEPTH_TEST); // Opening depth test

glClearColor(0.0, 0.0, 0.0, 1.0); // Background color - Black

glutDisplayFunc(display); // Set display callback function

glutIdleFunc(frameFunc); // Refresh when idle

glutMotionFunc(mouse); // Mouse drag

glutMouseFunc(mouseDown); // Press the left mouse button

glutMouseWheelFunc(mouseWheel); // Wheel zoom

glutMainLoop();

return 0;

}

10.2. Slice shader (pass1, raytrace)

#version 330 core

in vec3 pix;

out vec4 fragColor;

// ----------------------------------------------------------------------------- //

uniform uint frameCounter;

uniform int nTriangles;

uniform int nNodes;

uniform int width;

uniform int height;

uniform samplerBuffer triangles;

uniform samplerBuffer nodes;

uniform sampler2D lastFrame;

uniform sampler2D hdrMap;

uniform vec3 eye;

uniform mat4 cameraRotate;

// ----------------------------------------------------------------------------- //

#define PI 3.1415926

#define INF 114514.0

#define SIZE_TRIANGLE 12

#define SIZE_BVHNODE 4

// ----------------------------------------------------------------------------- //

// Triangle data format

struct Triangle {

vec3 p1, p2, p3; // Vertex coordinates

vec3 n1, n2, n3; // Vertex normal

};

// BVH tree node

struct BVHNode {

int left; // Left subtree

int right; // Right subtree

int n; // Contains the number of triangles

int index; // Triangle index

vec3 AA, BB; // Collision box

};

// Object surface material definition

struct Material {

vec3 emissive; // The luminous color when used as a light source

vec3 baseColor;

float subsurface;

float metallic;

float specular;

float specularTint;

float roughness;

float anisotropic;

float sheen;

float sheenTint;

float clearcoat;

float clearcoatGloss;

float IOR;

float transmission;

};

// light

struct Ray {

vec3 startPoint;

vec3 direction;

};

// Ray intersection result

struct HitResult {

bool isHit; // Hit

bool isInside; // Whether to hit from inside

float distance; // Distance from intersection

vec3 hitPoint; // Light midpoint

vec3 normal; // Hit point normal

vec3 viewDir; // The direction of the light hitting the point

Material material; // Surface material of hit point

};

// ----------------------------------------------------------------------------- //

/*

* Generate random vectors, depending on the frameCounter frame counter

* Code source: https://blog.demofox.org/2020/05/25/casual-shadertoy-path-tracing-1-basic-camera-diffuse-emissive/

*/

uint seed = uint(

uint((pix.x * 0.5 + 0.5) * width) * uint(1973) +

uint((pix.y * 0.5 + 0.5) * height) * uint(9277) +

uint(frameCounter) * uint(26699)) | uint(1);

uint wang_hash(inout uint seed) {

seed = uint(seed ^ uint(61)) ^ uint(seed >> uint(16));

seed *= uint(9);

seed = seed ^ (seed >> 4);

seed *= uint(0x27d4eb2d);

seed = seed ^ (seed >> 15);

return seed;

}

float rand() {

return float(wang_hash(seed)) / 4294967296.0;

}

// ----------------------------------------------------------------------------- //

// Hemispherical uniform sampling

vec3 SampleHemisphere() {

float z = rand();

float r = max(0, sqrt(1.0 - z*z));

float phi = 2.0 * PI * rand();

return vec3(r * cos(phi), r * sin(phi), z);

}

/*

vec3 toNormalHemisphere(vec3 v, vec3 N) {

vec3 tangent = vec3(0);

if(N.yz==vec2(0)) tangent = vec3(0, 0, -N.x);

else if(N.xz==vec2(0)) tangent = vec3(0, 0, N.y);

else if(N.xy==vec2(0)) tangent = vec3(-N.z, 0, 0);

else if(abs(N.x)>abs(N.y)) tangent = normalize(vec3(0, N.z, -N.y));

else tangent = normalize(vec3(-N.z, 0, N.x));

vec3 bitangent = cross(N, tangent);

return normalize(v.x * tangent + v.y * bitangent + v.z * N);

}

*/

// Project the vector v onto the normal hemisphere of N

vec3 toNormalHemisphere(vec3 v, vec3 N) {

vec3 helper = vec3(1, 0, 0);

if(abs(N.x)>0.999) helper = vec3(0, 0, 1);

vec3 tangent = normalize(cross(N, helper));

vec3 bitangent = normalize(cross(N, tangent));

return v.x * tangent + v.y * bitangent + v.z * N;

}

// ----------------------------------------------------------------------------- //

// Convert the 3D vector v to the texture coordinate uv of HDR map

vec2 SampleSphericalMap(vec3 v) {

vec2 uv = vec2(atan(v.z, v.x), asin(v.y));

uv /= vec2(2.0 * PI, PI);

uv += 0.5;

uv.y = 1.0 - uv.y;

return uv;

}

// Get HDR ambient color

vec3 sampleHdr(vec3 v) {

vec2 uv = SampleSphericalMap(normalize(v));

vec3 color = texture2D(hdrMap, uv).rgb;

color = min(color, vec3(10));

return color;

}

// ----------------------------------------------------------------------------- //

// Gets the triangle with the i-th subscript

Triangle getTriangle(int i) {

int offset = i * SIZE_TRIANGLE;

Triangle t;

// Vertex coordinates

t.p1 = texelFetch(triangles, offset + 0).xyz;

t.p2 = texelFetch(triangles, offset + 1).xyz;

t.p3 = texelFetch(triangles, offset + 2).xyz;

// normal

t.n1 = texelFetch(triangles, offset + 3).xyz;

t.n2 = texelFetch(triangles, offset + 4).xyz;

t.n3 = texelFetch(triangles, offset + 5).xyz;

return t;

}

// Gets the material of the triangle with subscript i

Material getMaterial(int i) {

Material m;

int offset = i * SIZE_TRIANGLE;

vec3 param1 = texelFetch(triangles, offset + 8).xyz;

vec3 param2 = texelFetch(triangles, offset + 9).xyz;

vec3 param3 = texelFetch(triangles, offset + 10).xyz;

vec3 param4 = texelFetch(triangles, offset + 11).xyz;

m.emissive = texelFetch(triangles, offset + 6).xyz;

m.baseColor = texelFetch(triangles, offset + 7).xyz;

m.subsurface = param1.x;

m.metallic = param1.y;

m.specular = param1.z;

m.specularTint = param2.x;

m.roughness = param2.y;

m.anisotropic = param2.z;

m.sheen = param3.x;

m.sheenTint = param3.y;

m.clearcoat = param3.z;

m.clearcoatGloss = param4.x;

m.IOR = param4.y;

m.transmission = param4.z;

return m;

}

// Gets the BVHNode object of the ith subscript

BVHNode getBVHNode(int i) {

BVHNode node;

// Left and right subtree

int offset = i * SIZE_BVHNODE;

ivec3 childs = ivec3(texelFetch(nodes, offset + 0).xyz);

ivec3 leafInfo = ivec3(texelFetch(nodes, offset + 1).xyz);

node.left = int(childs.x);

node.right = int(childs.y);

node.n = int(leafInfo.x);

node.index = int(leafInfo.y);

// Bounding box

node.AA = texelFetch(nodes, offset + 2).xyz;

node.BB = texelFetch(nodes, offset + 3).xyz;

return node;

}

// ----------------------------------------------------------------------------- //

// Intersection of light and triangle

HitResult hitTriangle(Triangle triangle, Ray ray) {

HitResult res;