Paper Overview

RotNet: self-supervised learning by predicting image rotation

This paper was published at ICLR 2018 and has been cited more than 1,100 times. Its core idea is that a model which does not understand the concept of the objects depicted in an image cannot recognize the rotation applied to that image.

This article reviews "Unsupervised Representation Learning by Predicting Image Rotations" from Université Paris-Est. With RotNet, image features are learned by training a ConvNet to recognize the 2D rotation applied to its input image. With this method, an AlexNet model pre-trained without supervision achieves 54.4% mAP on the PASCAL VOC 2007 detection task, only 2.4 points below the supervised AlexNet.

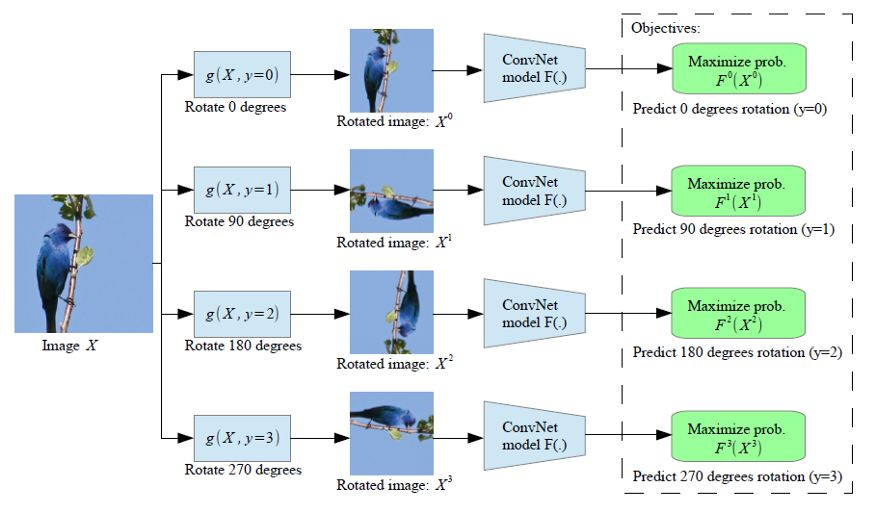

Image rotation prediction framework

Given four possible geometric transformations, namely rotations by 0, 90, 180 and 270 degrees, the convolutional network model F(·) is trained to identify which rotation was applied to the input image.

F^y(X^{y*}) denotes the probability that the model F(·) assigns to rotation y, where the input X^{y*} is an image that has already been rotated by transformation y*. The model outputs one such probability for each of the four candidate rotations.

To predict the rotation of an image successfully, the ConvNet model must learn to localize the salient objects in the image, recognize their orientation and object type, and then relate that orientation to the way such objects usually appear in unrotated images.
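The pretext task described above can be sketched in a few lines of NumPy. This is only an illustration, assuming images are stored as height × width × channel arrays; the helper name `make_rotation_batch` is hypothetical and is not taken from the paper's code.

```python
import numpy as np

def make_rotation_batch(image):
    """Return the four rotated copies of `image` and their labels (0..3)."""
    # np.rot90 rotates counter-clockwise by k * 90 degrees in the (H, W) plane.
    rotated = [np.rot90(image, k=k, axes=(0, 1)) for k in range(4)]
    labels = np.arange(4)  # label k stands for a rotation of k * 90 degrees
    return rotated, labels

# Tiny 2 x 3 single-channel "image" used only to show the shapes involved.
image = np.arange(2 * 3 * 1).reshape(2, 3, 1)
copies, labels = make_rotation_batch(image)
```

Each unlabeled image therefore yields four training pairs, and the labels come for free from the rotation that was applied.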

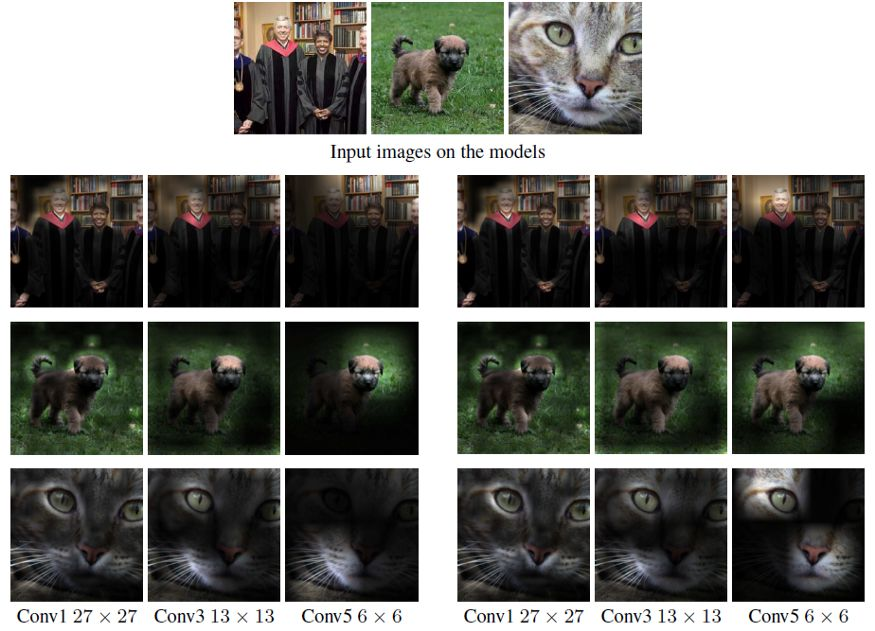

Attention maps generated by AlexNet models trained (a) to recognize objects (supervised) and (b) to recognize image rotation (self-supervised).

The attention maps above are computed from the activation magnitude at each spatial location of a convolutional layer, which essentially reflects where the network focuses most of its attention when classifying the input image.

In the figure, both the supervised model and the self-supervised model appear to focus on roughly the same image regions.
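One common way to build such a map, sketched here under the assumption that a layer's activations are available as a (height, width, channels) array, is to sum the absolute activations over the channel axis after raising them to a power p. The function name and the choice p = 2 are illustrative and may differ from the settings used for the figure.

```python
import numpy as np

def attention_map(features, p=2):
    """Collapse (H, W, C) activations into an (H, W) map of activation magnitude."""
    amap = np.sum(np.abs(features) ** p, axis=-1)
    total = amap.sum()
    # Normalize so the map sums to 1; leave an all-zero map untouched.
    return amap / total if total > 0 else amap

# Toy activations: only one spatial location is active.
features = np.zeros((2, 2, 3))
features[0, 1, :] = [1.0, -2.0, 2.0]
amap = attention_map(features)
```

Locations with large activations, whatever their sign, receive high attention values.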

Rotate-by-dragging CAPTCHA solution

Have you ever been stumped by a rotation CAPTCHA? That is today's topic.

When simulating a login, image CAPTCHAs are a major obstacle.

With RotNet, however, this problem becomes easy to solve.

Two ideas

Predicting image rotation can be framed in two ways: regression and classification.

- Regression: predict a numeric angle in the range 0° to 360°.

- Classification: predict one of 360 classes, one per degree. The class with the highest predicted probability is the output angle.

A convolutional neural network is then defined and trained on a set of rotated pictures to predict the rotation angle of each picture.
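With either formulation, angles wrap around: a prediction of 350° for a true angle of 10° is only 20° off, not 340°. The sketch below shows one way to decode a 360-way classification output and measure the error on the circle. The `angle_error` metric used later in this article lives in a `utils` module that is not shown, so the functions here are assumptions about what such a metric computes, not its actual implementation.

```python
def predicted_angle(probabilities):
    """Index of the most probable class, read as an angle in degrees."""
    return max(range(len(probabilities)), key=probabilities.__getitem__)

def angle_difference(a, b):
    """Smallest absolute difference between two angles, in degrees (0..180)."""
    return 180 - abs(abs(a - b) % 360 - 180)

# Toy softmax output that puts all probability mass on class 350.
probs = [0.0] * 360
probs[350] = 1.0
error = angle_difference(predicted_angle(probs), 10)
```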

Big data application competition

Computer vision is widely used across AI applications such as autonomous driving, visual navigation, object detection and object recognition. Image processing techniques such as random cropping, random rotation and image blurring can often help improve computer vision models. Given how important these techniques are to computer vision, the task of this big data application competition is an image righting challenge: restoring rotated images to their upright orientation.
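Once a rotation angle has been predicted, righting the image means rotating it by the remaining part of the full turn. The sketch below assumes the predicted angle is the counter-clockwise rotation that was applied, and it only handles multiples of 90° so that NumPy alone suffices; arbitrary angles would need an interpolating rotation from an image library. Both function names are hypothetical.

```python
import numpy as np

def correction_angle(predicted_angle):
    """Counter-clockwise angle that undoes a counter-clockwise rotation."""
    return (360 - predicted_angle) % 360

def right_image_90(image, predicted_angle):
    """Undo a rotation that is a multiple of 90 degrees."""
    if predicted_angle % 90 != 0:
        raise ValueError("this sketch only handles multiples of 90 degrees")
    return np.rot90(image, k=correction_angle(predicted_angle) // 90)

upright = np.arange(6).reshape(2, 3)
rotated = np.rot90(upright, k=1)        # simulate a 90 degree rotation
restored = right_image_90(rotated, 90)  # rotate by the remaining 270 degrees
```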

Convolutional neural network

Classification code:

from keras.layers import Input, Conv2D, MaxPooling2D, Dropout, Flatten, Dense
from keras.models import Model

# img_rows, img_cols and img_channels are expected to be set by the data-loading code

# number of convolutional filters to use
nb_filters = 64
# size of pooling area for max pooling
pool_size = (2, 2)
# convolution kernel size
kernel_size = (3, 3)
# number of classes
nb_classes = 360

# model definition
input = Input(shape=(img_rows, img_cols, img_channels))
x = Conv2D(nb_filters, kernel_size, activation='relu')(input)
x = Conv2D(nb_filters, kernel_size, activation='relu')(x)
x = MaxPooling2D(pool_size=pool_size)(x)
x = Dropout(0.25)(x)
x = Flatten()(x)
x = Dense(128, activation='relu')(x)
x = Dropout(0.25)(x)
x = Dense(nb_classes, activation='softmax')(x)

model = Model(inputs=input, outputs=x)
model.summary()

Model compilation

# model compilation
model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=[angle_error])

Training parameters

# training parameters
batch_size = 128
nb_epoch = 50

Callbacks

# callbacks
checkpointer = ModelCheckpoint(
    filepath=os.path.join(output_folder, model_name + '.hdf5'),
    save_best_only=True
)
early_stopping = EarlyStopping(patience=2)
tensorboard = TensorBoard()

Model training

# training loop
model.fit_generator(
    RotNetDataGenerator(
        X_train,
        batch_size=batch_size,
        preprocess_func=binarize_images,
        shuffle=True
    ),
    steps_per_epoch=nb_train_samples / batch_size,
    epochs=nb_epoch,
    validation_data=RotNetDataGenerator(
        X_test,
        batch_size=batch_size,
        preprocess_func=binarize_images
    ),
    validation_steps=nb_test_samples / batch_size,
    verbose=1,
    callbacks=[checkpointer, early_stopping, tensorboard]
)
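In the training call above, `steps_per_epoch` and `validation_steps` are computed with `/`, which yields a float in Python 3 even though a step count is a whole number of batches. A minimal sketch of rounding up, so that a final partial batch is also counted; the helper name and the sample counts are made up for illustration.

```python
import math

def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

print(steps_for(1000, 128))  # 8
```

Whether rounding up or down is preferable depends on how the data generator handles an incomplete last batch.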

Complete code

"""
@Author: ZS
@CSDN : https://zsyll.blog.csdn.net/
@Time : 2021/11/20 10:48
"""
from __future__ import print_function

import os
import sys

from keras.callbacks import ModelCheckpoint, EarlyStopping, TensorBoard, ReduceLROnPlateau
from keras.applications.resnet50 import ResNet50
from keras.applications.imagenet_utils import preprocess_input
from keras.models import Model
from keras.layers import Dense, Flatten
from keras.optimizers import SGD

sys.path.append(os.path.dirname(os.path.dirname(os.path.abspath(__file__))))

from utils import angle_error, RotNetDataGenerator
from getImagePath import getPath

data_path = r'./data/image/'
train_filenames, test_filenames = getPath(data_path)

print(len(train_filenames), 'train samples')
print(len(test_filenames), 'test samples')

model_name = 'rotnet_resnet50'

# Number of classes
nb_classes = 360
# Input image shape
input_shape = (320, 320, 3)

# Load base model
base_model = ResNet50(weights='imagenet', include_top=False,
                      input_shape=input_shape)

# Add classification layer
x = base_model.output
x = Flatten()(x)
final_output = Dense(nb_classes, activation='softmax', name='fc360')(x)

# Create a new model
model = Model(inputs=base_model.input, outputs=final_output)
model.summary()

# Model compilation
model.compile(loss='categorical_crossentropy',
              optimizer=SGD(lr=0.01, momentum=0.9),
              metrics=[angle_error])

# Training parameters
batch_size = 64
nb_epoch = 20

output_folder = 'models'
if not os.path.exists(output_folder):
    os.makedirs(output_folder)

# Callbacks
monitor = 'val_angle_error'
checkpointer = ModelCheckpoint(
    filepath=os.path.join(output_folder, model_name + '.hdf5'),
    monitor=monitor,
    save_best_only=True
)
reduce_lr = ReduceLROnPlateau(monitor=monitor, patience=3)
early_stopping = EarlyStopping(monitor=monitor, patience=5)
tensorboard = TensorBoard()

# Train the model
model.fit_generator(
    RotNetDataGenerator(
        train_filenames,
        input_shape=input_shape,
        batch_size=batch_size,
        preprocess_func=preprocess_input,
        crop_center=True,
        crop_largest_rect=True,
        shuffle=True
    ),
    steps_per_epoch=len(train_filenames) / batch_size,
    epochs=nb_epoch,
    validation_data=RotNetDataGenerator(
        test_filenames,
        input_shape=input_shape,
        batch_size=batch_size,
        preprocess_func=preprocess_input,
        crop_center=True,
        crop_largest_rect=True
    ),
    validation_steps=len(test_filenames) / batch_size,
    callbacks=[checkpointer, reduce_lr, early_stopping, tensorboard],
    workers=10
)

Model call

# Import area: sys is required; import the other modules as needed
from __future__ import print_function

import os
import sys
import random
import numpy as np
import pandas as pd
import cv2
import tensorflow as tf
import tensorflow.keras as keras
import matplotlib.pyplot as plt
from mykeras.applications.imagenet_utils import preprocess_input
from mykeras.models import load_model
from utils import display_examples, RotNetDataGenerator, angle_error
import warnings
warnings.filterwarnings("ignore")
from tensorflow.keras import layers

# Code area: write as needed
class FileSequence(keras.utils.Sequence):
    def __init__(self,filenames,batch_size,filefunc,fileargs=(),labels=None,labelfunc=None,labelargs=(),shuffle=False):
        if labels: assert len(filenames) == len(labels)
        self.filenames = filenames
        self.batch_size = batch_size
        self.filefunc = filefunc
        self.fileargs = fileargs
        self.labels = labels
        self.labelfunc = labelfunc
        self.labelargs = labelargs
        if shuffle:
            idx_list = list(range(len(self.filenames)))
            random.shuffle(idx_list)
            self.filenames = [self.filenames[idx] for idx in idx_list]
            if self.labels: self.labels = [self.labels[idx] for idx in idx_list]

    def __len__(self):
        return int(np.ceil(len(self.filenames) / float(self.batch_size)))

    def __getitem__(self, idx):
        batch_filenames = self.filenames[idx * self.batch_size: (idx+1) * self.batch_size]
        files = []
        for filename in batch_filenames:
            # tf.print(filename)
            file = self.filefunc(filename,*self.fileargs)
            files.append(file)
        if self.labels:
            batch_labels = self.labels[idx * self.batch_size: (idx+1) * self.batch_size]
            if self.labelfunc:
                return np.array(files), self.labelfunc(batch_labels,*self.labelargs)
            else:
                return np.array(files), batch_labels
        else:
            return np.array(files)

def fillWhite(img,size,mode=None):
    if len(img.shape) == 2: img = img.reshape(*img.shape,-1)
    assert len(img.shape) == 3
    h, w, c = img.shape
    assert (h < size) and (w < size)
    fillImg = np.zeros(shape=(size,size,c))
    if mode == "random":
        sh = random.randint(0,size-h)
        sw = random.randint(0,size-w)
        fillImg[sh:sh+h,sw:sw+w,...] = img
    elif mode == "centre" or mode == "center":
        fillImg[(size-h)//2:(size+h)//2,(size-w)//2:(size+w)//2,...] = img
    else:
        fillImg[:h,:w,...] = img
    return fillImg

def cropImg(img,size,mode=None):
    if len(img.shape) == 2: img = img.reshape(*img.shape,-1)
    assert len(img.shape) == 3
    h, w, c = img.shape
    assert (h >= size) and (w >= size)
    if mode == "random":
        sh = random.randint(0,h-size)
        sw = random.randint(0,w-size)
        cropImg = img[sh:sh+size,sw:sw+size,...]
    elif mode == "centre" or mode == "center":
        cropImg = img[(h-size)//2:(h+size)//2,(w-size)//2:(w+size)//2,...]
    else:
        cropImg = img[:size,:size,...]
    return cropImg

def fillCrop(img,size,mode=None):
    if len(img.shape) == 2: img = img.reshape(*img.shape,-1)
    assert len(img.shape) == 3
    h, w, c = img.shape
    assert ((h >= size) and (w < size)) or ((h < size) and (w >= size))
    fillcropImg = np.zeros(shape=(size,size,c))
    if mode == "random":
        if (h >= size) and (w < size):
            sh = random.randint(0,h-size)
            sw = random.randint(0,size-w)
            fillcropImg[:,sw:sw+w,:] = img[sh:sh+size,...]
        else:
            sh = random.randint(0,size-h)
            sw = random.randint(0,w-size)
            fillcropImg[sh:sh+h,...] = img[:,sw:sw+size,:]
    elif mode == "centre" or mode == "center":
        if (h >= size) and (w < size):
            fillcropImg[:,(size-w)//2:(size+w)//2,:] = img[(h-size)//2:(h+size)//2,...]
        else:
            fillcropImg[(size-h)//2:(size+h)//2,...] = img[:,(w-size)//2:(w+size)//2,:]
    else:
        if (h >= size) and (w < size):
            fillcropImg[:,:size,:] = img[:size,...]
        else:
            fillcropImg[:size,...] = img[:,:size,:]
    return fillcropImg

def resizeImg(img,size,mode=None):
    if len(img.shape) == 2: img = img.reshape(*img.shape,-1)
    assert len(img.shape) == 3
    h, w, c = img.shape
    if (h < size) and (w < size): return fillWhite(img,size,mode)
    elif (h >= size) and (w >= size): return cropImg(img,size,mode)
    else: return fillCrop(img,size,mode)

def filefunc(filename,mode):
    tf.print(filename)
    img = cv2.imread(filename)
    if not isinstance(img,np.ndarray):
        tf.print(filename)
    h, w, c = img.shape
    if (h >=256) or (w >= 256):
        img = resizeImg(img,256,mode)
        img = cv2.resize(img,(64,64))
    elif (h >=128) or (w >= 128):
        img = resizeImg(img,128,mode)
        img = cv2.resize(img,(64,64))
    else:
        img = resizeImg(img,64,mode)
    return img

# Main function with a fixed signature: to_pred_dir is the folder containing the images to predict,
# result_save_path is the path where the prediction results are written
# The following is an example
def main(to_pred_dir, result_save_path):
    runpyp = os.path.abspath(__file__)
    modeldirp = os.path.dirname(runpyp)
    modelp = os.path.join(modeldirp,"model.hdf5")
    model = load_model(modelp, custom_objects={'angle_error': angle_error})  # register the custom metric
    pred_imgs = os.listdir(to_pred_dir)
    pred_imgsp_lines = [os.path.join(to_pred_dir,p) for p in pred_imgs]
    name, label = display_examples(
        model,
        pred_imgsp_lines,
        num_images=len(pred_imgsp_lines),
        size=(224, 224),
        crop_center=True,
        crop_largest_rect=True,
        preprocess_func=preprocess_input,
    )
    df = pd.DataFrame({"id":name,"label":label})
    df.to_csv(result_save_path,index=None)

# !!! Note:
# The to_pred_dir argument supplied by the contest is a folder with the following contents
# to_pred_dir/to_pred_0.png
# to_pred_dir/to_pred_1.png
# to_pred_dir/......
# The CSV file to be generated has the header ID and label, as follows
# image_id,label
# to_pred_0,4
# to_pred_1,76
# to_pred_2,...

if __name__ == "__main__":
    to_pred_dir = sys.argv[1]  # Path of the folder to predict
    result_save_path = sys.argv[2]  # Path of the file where prediction results are saved
    main(to_pred_dir, result_save_path)
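The output format described in the comments can be illustrated with the standard library alone. This sketch assumes the id is the file name without its extension, as in the sample rows, and it uses the `id` header from the DataFrame built in `main`. `write_predictions` is a hypothetical helper, and the angles are placeholders rather than real model output.

```python
import csv
import io
import os

def write_predictions(stream, filenames, angles):
    """Write one `id,label` row per image to a text stream."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["id", "label"])
    for filename, angle in zip(filenames, angles):
        # Strip the directory and the extension: to_pred_dir/to_pred_0.png -> to_pred_0
        image_id = os.path.splitext(os.path.basename(filename))[0]
        writer.writerow([image_id, int(angle)])

buffer = io.StringIO()
write_predictions(
    buffer,
    ["to_pred_dir/to_pred_0.png", "to_pred_dir/to_pred_1.png"],
    [4, 76],
)
print(buffer.getvalue())
```

Writing to an in-memory stream keeps the example free of side effects; passing an open file object instead would produce the actual result file.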
