Sentinel's flow control is to monitor the QPS of application traffic or the number of concurrent threads. When the specified threshold is reached, it controls the flow to avoid being overwhelmed by the instantaneous flow peak, so as to ensure high availability.

This paper analyzes the flow control algorithm from the perspective of mathematical formula and code, so we need to understand some Sentinel nouns first.

- QPS: number of requests per second, that is, the number of requests that the server can process per second when constantly sending requests to the server.

- Concurrent threads: refers to the number of threads accessing the resource at the same time.

Parameters involved in Sentinel flow control interface are as follows:

- Resource: resource name, that is, the object of the flow restriction rule.

- count: current limiting threshold.

- grade: current limiting threshold type: 1-QPS, 0-number of concurrent threads. The default value is QPS.

- limitApp: call source for flow control: default.

- If it is default, the call source is not distinguished.

- strategy: judge based on resources: 0 - itself, 1 - other associated resources, 2 - link entry. The default value is based on the resources themselves.

- controlBehavior: flow control effect: 0-direct rejection, 2-queue waiting, 1-warm-up and cold start. The default value is direct rejection.

- Direct rejection is also called fast failure: formula flow limiting algorithm - total number method; When the QPS exceeds the threshold of any rule, the new request will be rejected immediately.

I Preheating and token bucket algorithm

When the length of the system is at the low water level, when the flow suddenly increases, directly pull the system to the high water level, which may crush the system instantly. Through "cold start", when the passing flow increases slowly, it gradually increases to the upper limit of the threshold within a certain time, giving the cold system a preheating time to avoid the cold system being crushed.

Note: this effect only applies to QPS flow control and does not support concurrent thread flow control

1. Principle

To set the preheating flow control of QPS using sentinel, you need to set the threshold count and the preheating duration warmUpPeriodInSec.

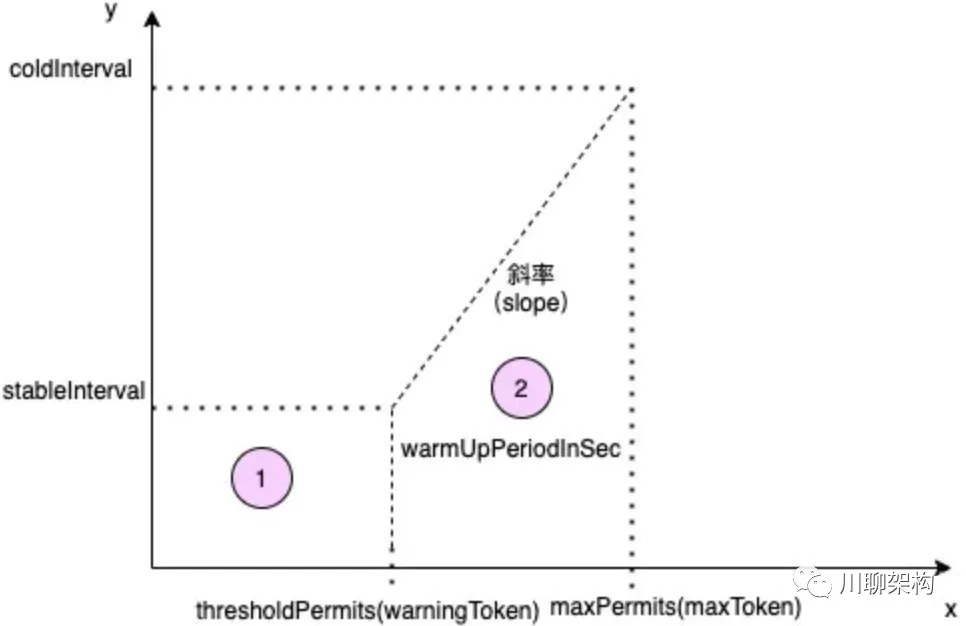

As shown in the figure above: the X-axis represents the number of tokens in the token bucket, and the y-axis represents the time required to produce a token (seconds); Relevant parameters are as follows:

- Stable interval: it takes time to stably produce a token

- coldInterval: the longest time required to produce a token, which is related to the cold start factor coldFactor; You can set it through - Dcsp.sentinel. ow.cold.factor. The default is 3.

- Warmupperiodsec (preheating time seconds): the preheating time is 10 seconds by default, and the corresponding coordinate diagram is (2) trapezoidal area.

- Threshold permissions: a threshold value in the token bucket. When the value is exceeded, preheating is enabled.

- Maxpermissions (maxtoken): the maximum number of tokens in the token bucket.

2. Conversion relationship

count: known to be set by the user, eg: 100 requests per second are allowed. warmUpPeriodInSec: it is known that it is set by the user. The default is 10 seconds. The (2) trapezoidal area coldFactor in the time area is 3 by default.

Formula 1:

stableInterval=1/count(It takes time to stably produce a token)

Formula 2:

coldInterval=stableInterval*coldFactor Cold start factor(Maximum time required to produce a token)

Equation 3:

Premise: since coldFactor is 3 by default, the distance between y-axis stableinterval and coldinterval is twice the distance between 0 and stableinterval, and the red (2) trapezoidal area in the time area is twice the rectangular area of Red 1

Coordinate time(1)Rectangular area=long(thresholdPermits(warningToken))*wide(stableInterval)

Equation 4:

Coordinate time(1)Rectangular area=0.5*warmUpPeriodSec

Equation 5:

(thresholdPermits(warningToken))=0.5*warmUpPeriodSec/stableInterval

code:

warningToken=(int)(warmUpPeriodInSec*count)/(coldFactor-1);

Equation 6:

Premise: the area of trapezoid = (upper low + lower low) * high / 2 deduces the maxpermissions (maxtoken) value

maxPermits=2*warmUpPeriodSec/(stableInterval+coldInterval)+thresholdPermits(warningToken)

code:

maxToken=warningToken+(int)(2*warmUpPeriodInSec*count/(1.0+coldFactor));

Equation 7:

Premise: slope formula = (y1-y2)/(x1-x2)

slope=(coldInterval-stableInterval)/(maxToken-warningToken)

code:

slope=(coldFactor-1.0)/count/(maxToken-warningToken);

3. Principle

When the token in the token bucket is < thresholdpermissions (warningtoken), the token is produced at a fixed rate and the request traffic is stable.

When the token > thresholdpermissions (warningtoken), the preheating is enabled. The rate of the produced token is less than the rate of the token falling. After a period of time, the token < = thresholdpermissions (warningtoken), the request returns to a stable state, and the preheating ends.

4.WarmUpControlle

private void construct(double count, int warmUpPeriodInSec,int coldFactor) {

if (coldFactor <= 1) {

throw new IllegalArgumentException("Cold factor should be larger than 1");

}

this.count = count;

this.coldFactor = coldFactor;

// (1) thresholdPermits = 0.5 * warmupPeriod / stableInterval.

// warningToken = 100;

warningToken = (int)(warmUpPeriodInSec * count) / (coldFactor - 1);

// (2) maxPermits = thresholdPermits + 2 * warmupPeriod / (stableInterval + coldInterval)

// maxToken = 200

maxToken = warningToken + (int)(2 * warmUpPeriodInSec * count / (1.0 + coldFactor));

// slope = (coldIntervalMicros - stableIntervalMicros) / (maxPermits - thresholdPermits);

slope = (coldFactor - 1.0) / count / (maxToken - warningToken);

}

public boolean canPass(Node node, int acquireCount, boolean prioritized) {

long passQps = (long) node.passQps();

long previousQps = (long) node.previousPassQps();

// (1) Token production process and sliding process

syncToken(previousQps);

// Start calculating its slope

// If you enter the cordon, start adjusting his qps

long restToken = storedTokens.get();

// (2) The number of tokens remaining in the token bucket

if (restToken >= warningToken) { // (3-1) when the remaining token is greater than the token bucket threshold, start preheating

long aboveToken = restToken - warningToken;

// The consumption speed is faster than warning, but slower than

// current interval = restToken*slope+1/count

// (4) Calculate the QPS during preheating according to the slope

double warningQps = Math.nextUp(1.0 / (aboveToken * slope + 1.0 / count));

if (passQps + acquireCount <= warningQps) { //(5) Determine whether to release

return true;

}

} else { // (3-2) if the remaining token is less than the token bucket threshold, the preheating is not turned on and the flow is stable

if (passQps + acquireCount <= count) {

return true;

}

}

return false;

}

protected void syncToken(long passQps) {

long currentTime = TimeUtil.currentTimeMillis();

// Remove mantissa, that is, seconds

currentTime = currentTime - currentTime % 1000;

// Timestamp of last record

long oldLastFillTime = lastFilledTime.get();

if (currentTime <= oldLastFillTime) {

return;

}

// Gets the number of tokens in the bucket

long oldValue = storedTokens.get();

// Token production

long newValue = coolDownTokens(currentTime, passQps);

if (storedTokens.compareAndSet(oldValue, newValue)) { // 2 put the produced token into the token bucket

long currentValue = storedTokens.addAndGet(0 -passQps); // Slide the used token from the token bucket

if (currentValue < 0) {

storedTokens.set(0L);

}

lastFilledTime.set(currentTime);

}

}

private long coolDownTokens(long currentTime, long passQps) {

long oldValue = storedTokens.get();

long newValue = oldValue;

// Prerequisites for adding a token:

// When the token consumption is much lower than the warning line

if (oldValue < warningToken) {

newValue = (long)(oldValue + (currentTime -

lastFilledTime.get()) * count / 1000);

} else if (oldValue > warningToken) {

if (passQps < (int)count / coldFactor) {

newValue = (long)(oldValue + (currentTime - lastFilledTime.get()) * count / 1000);

}

}

return Math.min(newValue, maxToken);

}

- Queue up -

The uniform queuing mode will strictly control the interval of requests passing, that is, let the requests pass at a uniform speed; The corresponding is the leaky bucket algorithm.

This effect is only applicable to QPS flow control, and concurrent thread flow control is not supported.

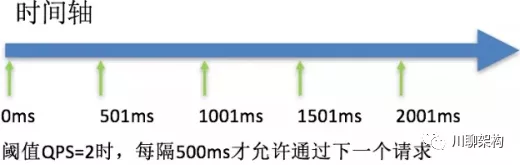

one Uniform queue

There is a timeout waiting time. Once the predetermined time is exceeded, the current will be limited.

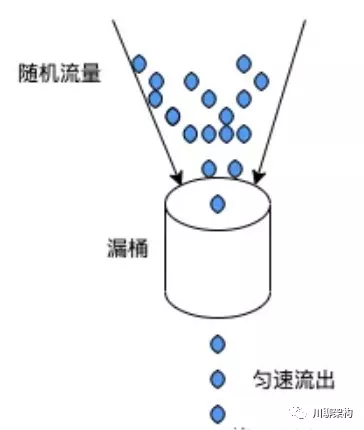

2. Leakybucket algorithm

The random burst flow flows out at a stable rate after passing through the leaky bucket, which plays the role of flow control and smoothing.

3. Implementation class

RateLimiterController: achieve uniform speed by controlling the time interval of requests.

public boolean canPass(Node node, int acquireCount, boolean prioritized) {

// Pass when acquire count is less or equal than 0.

if (acquireCount <= 0) {

return true;

}

// Reject when count is less or equal than 0.

// Otherwise,the costTime will be max of long and waitTime will overflow in some cases.

if (count <= 0) {

return false;

}

long currentTime = TimeUtil.currentTimeMillis();

// Calculate the interval between every two requests.

// 1) Time interval between requests

long costTime = Math.round(1.0 * (acquireCount) / count * 1000);

// Expected pass time of this request.

// 2) Calculate the pass reservation time of this request = pass time of last request + time interval

long expectedTime = costTime + latestPassedTime.get();

// 3) When the expected time < = the current time, it is allowed to pass and update the last request timestamp

if (expectedTime <= currentTime) {

// Contention may exist here, but it's okay.

latestPassedTime.set(currentTime);

return true;

} else {

// Calculate the time to wait.

// 4) When the expected time > the current time, you need to wait; Waiting time required for calculation

long waitTime = costTime + latestPassedTime.get() - TimeUtil.currentTimeMillis();

// 5) If the waiting time > the latest queue time, the default timeout of 500 milliseconds will be rejected

if (waitTime > maxQueueingTimeMs) {

return false;

} else {

long oldTime =

latestPassedTime.addAndGet(costTime);

try {

waitTime = oldTime -

TimeUtil.currentTimeMillis();

// 6)

if (waitTime > maxQueueingTimeMs) {

latestPassedTime.addAndGet(-costTime);

return false;

}

// in race condition waitTime may <= 0

// 7)

if (waitTime > 0) {

Thread.sleep(waitTime);

}

return true;

} catch (InterruptedException e) {

}

}

}

return false;

}Note: suppose the set threshold count=100, that is, 100 requests are allowed per second. Each time a request is passed, acquireCount=1, and the formula costTime=10 is inserted, that is, the time interval between two requests is 10 seconds.

If water An architect, now working on Xiaomi Xiaoai open platform, a beautiful code farmer with both talent and appearance, usually likes to summarize knowledge and brush algorithm problems, and often participates in LeetCode algorithm weekly competition.

Lin huaichuan

Graduated from Xi'an Jiaotong University; Chief architect of Naixue education and head of teaching and research; Former senior architect of Dashu finance, founder of Technical Committee and technical director; Technical director of Hongye Trading Business Department of Tianyang; Years of experience in the Internet financial industry (ToB).