1 Preface

The previous two chapters covered the principles and basic use of Eureka. This chapter looks at how Eureka is applied in practice from four angles: security, monitoring, high availability, and tuning.

2 Eureka security configuration

Eureka is the registry at the center of a Spring Cloud microservice architecture, and it should accept registrations only from the microservices that belong to it. To secure Eureka, Spring Security needs to be introduced into FS Eureka server.

Step 1: add the following dependency to the pom file of FS Eureka server:

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-security</artifactId>
    </dependency>

Step 2: modify the configuration file application.yml of FS Eureka server and add the security configuration:

    spring:
      application:
        name: fs-eureka-service
      # Eureka: add authentication (spring.security.user applies to Spring Boot 2.x;
      # Spring Boot 1.x uses a top-level security.user instead)
      security:
        # Username and password
        user:
          name: fullset
          password: fs958
    eureka:
      client:
        # Do not register with the registry as a client
        register-with-eureka: false
        # Do not fetch registry information from Eureka
        fetch-registry: false
        service-url:
          defaultZone: http://fullset:fs958@127.0.0.1:8611/eureka/

Step 3: modify the configuration file application.yml of FS Eureka client and add the security configuration. Only microservices that supply the correct credentials can register with Eureka.

    eureka:
      client:
        serviceUrl:
          defaultZone: http://fullset:fs958@127.0.0.1:8611/eureka/
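When credentials are embedded in the service URL like this, the client sends them as HTTP Basic authentication. The following is a minimal sketch of that translation, using only the JDK. The class and method names are hypothetical and are not part of Eureka or Spring Cloud.

```java
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Illustrative helper only; not an Eureka or Spring Cloud API.
final class BasicAuthSketch {

    // Returns the Authorization header value for a URL with embedded
    // credentials, or null when the URL carries none.
    static String basicAuthHeader(String serviceUrl) {
        // For the URL used in this chapter, userInfo is "fullset:fs958".
        String userInfo = URI.create(serviceUrl).getUserInfo();
        if (userInfo == null) {
            return null;
        }
        String encoded = Base64.getEncoder()
                .encodeToString(userInfo.getBytes(StandardCharsets.UTF_8));
        return "Basic " + encoded;
    }

    public static void main(String[] args) {
        System.out.println(
                basicAuthHeader("http://fullset:fs958@127.0.0.1:8611/eureka/"));
    }
}
```

Because Basic authentication only Base64-encodes the username and password, the credentials are readable by anyone who can observe the traffic unless the connection is protected by HTTPS.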

3 Eureka health check

Similarly, the following dependency needs to be added before Eureka can perform health checks on a service.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>

The status page and health indicator of a Eureka instance default to "/info" and "/health" respectively, which are the default locations of the corresponding endpoints in a Spring Boot Actuator application.

If you use a non-default context path or servlet path (for example, server.servletPath=/foo) or a non-default management endpoint path (for example, management.contextPath=/admin), you need to change these paths, even for an Actuator application. Example:

    eureka:
      instance:
        statusPageUrlPath: ${management.context-path}/info
        healthCheckUrlPath: ${management.context-path}/health
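To make the placeholder concrete, here is a small sketch of how a management context path and an endpoint name combine into the path that Eureka advertises. It is plain Java with a hypothetical helper name, and it only models the string substitution, not Spring's property resolution.

```java
// Illustrative helper only; the class and method names are hypothetical.
final class ActuatorPathSketch {

    // Joins a management context path and an endpoint name into one URL path,
    // tolerating a missing leading slash and extra trailing slashes.
    static String endpointPath(String contextPath, String endpoint) {
        String base = contextPath == null ? "" : contextPath.trim();
        while (base.endsWith("/")) {
            base = base.substring(0, base.length() - 1);
        }
        if (!base.isEmpty() && !base.startsWith("/")) {
            base = "/" + base;
        }
        String name = endpoint.startsWith("/") ? endpoint.substring(1) : endpoint;
        return base + "/" + name;
    }

    public static void main(String[] args) {
        // With management.contextPath=/admin the health check moves under /admin.
        System.out.println(endpointPath("/admin", "health"));
        // With no management context path the default location is used.
        System.out.println(endpointPath("", "info"));
    }
}
```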

4 Eureka parameter tuning

If a Client goes offline without notifying Eureka Server, its stale instance is removed only when the EvictionTask next runs. You can make the EvictionTask run more often:

    # The default is 60 seconds
    eureka.server.eviction-interval-timer-in-ms=5000
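The effect of the eviction interval is easier to see with a simplified model of one eviction pass. The sketch below is not Eureka's implementation: the class, its methods, and the 90-second lease duration used in the example are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Simplified model of lease eviction; hypothetical names, not Eureka code.
final class EvictionSketch {

    // instanceId -> timestamp (ms) of the last heartbeat received
    private final Map<String, Long> lastHeartbeat = new ConcurrentHashMap<>();
    private final long leaseDurationMs;

    EvictionSketch(long leaseDurationMs) {
        this.leaseDurationMs = leaseDurationMs;
    }

    // Records a heartbeat (or the initial registration) for an instance.
    void renew(String instanceId, long nowMs) {
        lastHeartbeat.put(instanceId, nowMs);
    }

    // One eviction pass: removes every instance whose last heartbeat is
    // older than the lease duration and returns the removed ids.
    List<String> evictExpired(long nowMs) {
        List<String> evicted = new ArrayList<>();
        for (Map.Entry<String, Long> entry : lastHeartbeat.entrySet()) {
            if (nowMs - entry.getValue() > leaseDurationMs) {
                evicted.add(entry.getKey());
            }
        }
        lastHeartbeat.keySet().removeAll(evicted);
        return evicted;
    }

    boolean isRegistered(String instanceId) {
        return lastHeartbeat.containsKey(instanceId);
    }

    public static void main(String[] args) {
        EvictionSketch registry = new EvictionSketch(90_000L); // assumed lease duration
        registry.renew("order-service-1", 0L);
        // The instance crashed right after t=0; it stays listed until a pass
        // runs after the lease has expired.
        System.out.println(registry.evictExpired(60_000L));
        System.out.println(registry.evictExpired(95_000L));
    }
}
```

In this model a crashed instance stays visible for up to the lease duration plus one eviction interval, which is why shortening the interval removes dead instances sooner.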

If Eureka Clients do not pick up a newly launched service quickly enough, the Client can pull the registry from the Server more frequently:

    # Default 30 seconds
    eureka.client.registry-fetch-interval-seconds=5

In production, if heartbeats between service instances and Eureka Server often cannot be kept on schedule because of problems such as network jitter, the following parameters can be reduced appropriately:

    # Default 30
    eureka.instance.leaseRenewalIntervalInSeconds=10
    # Default 0.85
    eureka.server.renewalPercentThreshold=0.49
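These two parameters interact through the renewal threshold that the server compares against the heartbeats it actually receives per minute. The sketch below is a simplified model of that calculation; the exact formula differs between Eureka versions, and the class and method names are hypothetical.

```java
// Simplified model of the renewal threshold; hypothetical names, not Eureka code.
final class RenewalThresholdSketch {

    // Expected heartbeats per minute across all instances, scaled by the
    // configured percentage and truncated to a whole number.
    static int renewsPerMinuteThreshold(int instances,
                                        int renewalIntervalSeconds,
                                        double renewalPercentThreshold) {
        double expectedPerMinute = instances * (60.0 / renewalIntervalSeconds);
        return (int) (expectedPerMinute * renewalPercentThreshold);
    }

    // In this model the server stops evicting instances (self-preservation)
    // when the heartbeats received in the last minute do not exceed the threshold.
    static boolean selfPreservationActive(int renewsLastMinute, int threshold) {
        return renewsLastMinute <= threshold;
    }

    public static void main(String[] args) {
        // Defaults: 30-second heartbeats, 0.85 threshold, 10 instances.
        System.out.println(renewsPerMinuteThreshold(10, 30, 0.85));
        // Tuned values from this section: 10-second heartbeats, 0.49 threshold.
        System.out.println(renewsPerMinuteThreshold(10, 10, 0.49));
    }
}
```

With the tuned values, each instance sends more heartbeats per minute while a smaller share of them has to arrive, so a few lost heartbeats are less likely to push the server below the threshold.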

Tuning a service requires weighing many kinds of data over a long period. The values above are only reference points derived from how Eureka works and what each parameter does.

5 Eureka high availability

Eureka is the core component of the whole microservice architecture. If Eureka Server fails, no microservice can register, and the whole system may be paralyzed. To avoid this, Eureka can be run in cluster mode: several hosts jointly provide the registration service, so that even if one host fails, the others keep serving. Running multiple instances and having them register with each other makes Eureka more resilient and available. In fact, this is the default behavior; all that is needed is to give each server a valid serviceUrl pointing to its peers:

    spring:
      application:
        name: EUREKA-HA
    ---
    server:
      port: 7777
    spring:
      profiles: peer1
    eureka:
      instance:
        hostname: peer1
      client:
        serviceUrl:
          defaultZone: http://peer2:8888/eureka/,http://peer3:9999/eureka/
    ---
    server:
      port: 8888
    spring:
      profiles: peer2
    eureka:
      instance:
        hostname: peer2
      client:
        serviceUrl:
          defaultZone: http://peer1:7777/eureka/,http://peer3:9999/eureka/
    ---
    server:
      port: 9999
    spring:
      profiles: peer3
    eureka:
      instance:
        hostname: peer3
      client:
        serviceUrl:
          defaultZone: http://peer1:7777/eureka/,http://peer2:8888/eureka/

In this example, a single YAML file holds several Spring profiles, and spring.profiles.active selects which one a given Eureka server starts with. The configuration above declares three Eureka servers that register with each other, and more peers can be added. As long as the Eureka servers are connected to one another through at least one peer, their registry data is synchronized, so server redundancy increases availability: even if one Eureka Server goes down, the system does not collapse. Visit http://peer1:7777 and you will find that peer2 and peer3 are already listed as nodes.

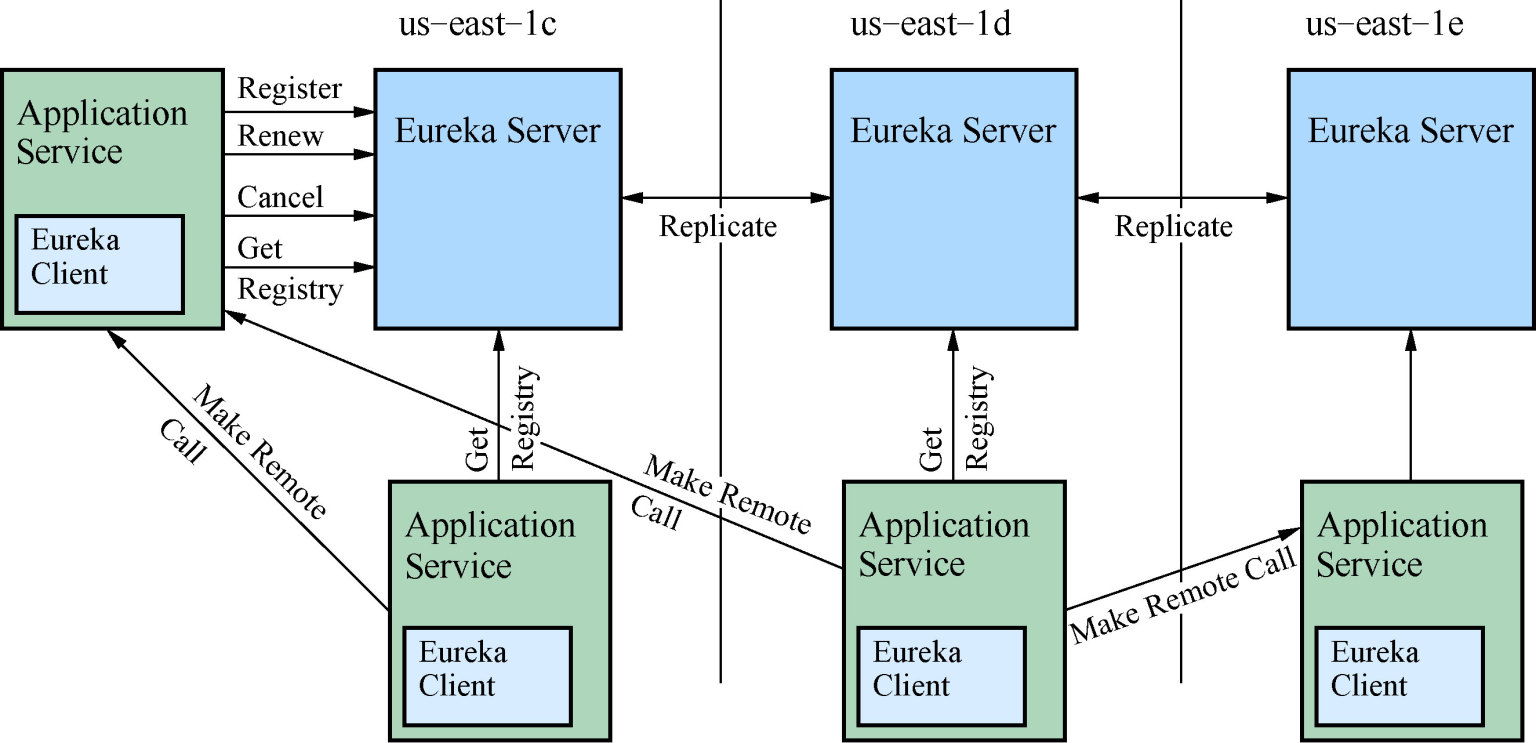
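The pattern in the three profiles is that each server lists every peer except itself. A minimal sketch of that rule, using the hostnames and ports from the configuration above, might look as follows. The class and method names are hypothetical; this only generates the strings and is not how Spring Cloud builds them.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Illustrative helper only; hypothetical names.
final class PeerUrlSketch {

    // Builds the defaultZone value for one server: the service URLs of all
    // peers except the server itself, joined with commas.
    static String defaultZoneFor(String self, Map<String, Integer> peers) {
        return peers.entrySet().stream()
                .filter(peer -> !peer.getKey().equals(self))
                .map(peer -> "http://" + peer.getKey() + ":" + peer.getValue() + "/eureka/")
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Insertion order is preserved so the output is stable.
        Map<String, Integer> peers = new LinkedHashMap<>();
        peers.put("peer1", 7777);
        peers.put("peer2", 8888);
        peers.put("peer3", 9999);
        for (String self : peers.keySet()) {
            System.out.println(self + " -> " + defaultZoneFor(self, peers));
        }
    }
}
```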

The following figure shows Eureka's high-availability architecture. It is taken from the Eureka documentation on GitHub.

There are two roles in this architecture: Eureka Server and Eureka Client. Eureka Clients are further divided into Application Services and Application Clients, that is, service providers and service consumers. Each region has one Eureka cluster, and each zone has at least one Eureka Server, so that the failure of a zone does not take the registry down.
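From the client's point of view, redundancy helps because a client configured with several service URLs can move on to another server when one is unavailable. The sketch below models that choice with a caller-supplied reachability check. It is an assumption-laden illustration with hypothetical names, not the Eureka client's actual retry logic.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Illustrative model of failover across service URLs; hypothetical names.
final class FailoverSketch {

    // Returns the first service URL that passes the reachability check,
    // or an empty Optional when every server is down.
    static Optional<String> firstReachable(List<String> serviceUrls,
                                           Predicate<String> isReachable) {
        return serviceUrls.stream().filter(isReachable).findFirst();
    }

    public static void main(String[] args) {
        List<String> urls = Arrays.asList(
                "http://peer1:7777/eureka/",
                "http://peer2:8888/eureka/",
                "http://peer3:9999/eureka/");
        // Pretend peer1 is down: the client falls through to peer2.
        System.out.println(firstReachable(urls, url -> !url.contains("peer1")));
    }
}
```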

6 Coder's insight

Eureka gives Spring Cloud a highly available service registration and discovery component. Service registration, heartbeat renewal, service deregistration, and service discovery all run automatically in the background, which simplifies the developer's work.

Of course, Eureka also has drawbacks. Replication between the servers in a cluster is carried out over HTTP, and because the network is unreliable, there are inevitably moments when the registries of the Eureka servers in the cluster are out of sync. Eureka therefore does not satisfy the C (consistency) in CAP.
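The window of inconsistency can be illustrated with a toy model of two registry nodes, where a registration is applied locally first and forwarded to the peer later. This is a deliberately simplified sketch with hypothetical names. Real Eureka replicates over HTTP in batches, which is not modeled here.

```java
import java.util.ArrayDeque;
import java.util.HashSet;
import java.util.Queue;
import java.util.Set;

// Toy model of replication lag between two registry nodes; hypothetical names.
final class ReplicationLagSketch {

    static final class Node {
        final Set<String> registry = new HashSet<>();
        final Queue<String> pendingReplication = new ArrayDeque<>();
        Node peer;

        // Applies the registration locally and queues it for the peer.
        void register(String instanceId) {
            registry.add(instanceId);
            pendingReplication.add(instanceId);
        }

        // Forwards all queued registrations to the peer.
        void replicate() {
            String instanceId;
            while ((instanceId = pendingReplication.poll()) != null) {
                peer.registry.add(instanceId);
            }
        }
    }

    public static void main(String[] args) {
        Node peer1 = new Node();
        Node peer2 = new Node();
        peer1.peer = peer2;

        peer1.register("order-service-1");
        // Before replication runs, the two nodes disagree.
        System.out.println(peer2.registry.contains("order-service-1"));
        peer1.replicate();
        // After replication, the registries have converged.
        System.out.println(peer2.registry.contains("order-service-1"));
    }
}
```

A client that queries the second node inside that window sees stale data but still gets an answer, which is the availability-over-consistency trade-off described above.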