Background:

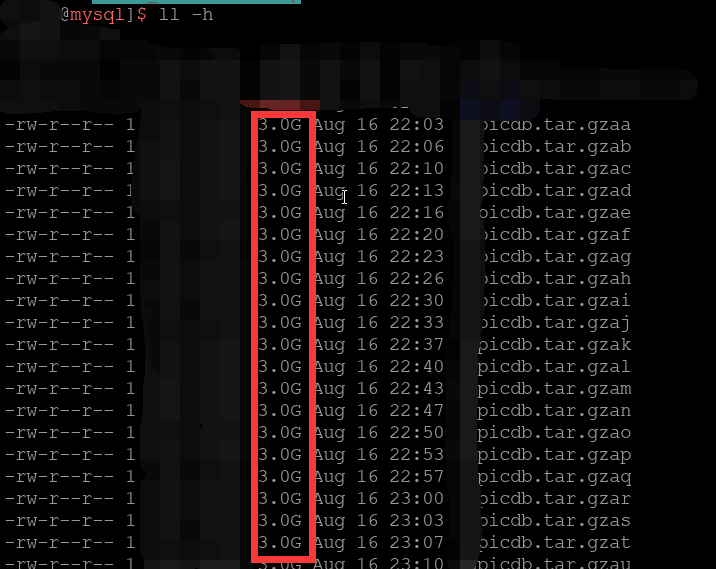

Because the backup data keeps growing, about 2 TB of it has to be transferred from the live (production) network back to the local site for off-site storage. 2 TB is too large to move as a single file, so the backup is compressed and split into parts of 3 GB each. Here is the compression script:

#!/bin/bash

# Compress the full mongodb backup and split it into 3 GB parts

printf "start mongodb Backup compression $(date +%Y%m%d_%A_%Z%T)\n"

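cd /data_master/backup/ || exit 1

# With pipefail, $? below also reflects a tar failure, not only the exit status of split

set -o pipefail

tar czf - picdb | split -b 3072m - picdb.tar.gz

if [ $? -ne 0 ]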

then

printf "mongodb Backup Compression Failed $(date +%Y%m%d_%A_%Z%T)\n"

else

printf "mongodb Backup Compression Completed $(date +%Y%m%d_%A_%Z%T)\n"

fi

# Free space on the file system that holds the backup directory

echo "Residual capacity: $(df -hP /data_master/backup/ | awk 'NR==2{print $4}')"
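A note on the status check in the script above: by default, $? after a pipeline is the exit status of the last command only, so a failing tar can go unnoticed as long as split succeeds. The sketch below shows the difference with a stand-in pipeline (false | cat). The two function names are hypothetical and only serve the demonstration.

```shell
#!/bin/bash

# Exit status reported for "false | cat" with the default settings.
# cat succeeds, so the failure of the first command is hidden.
status_without_pipefail() {
    ( set +o pipefail; false | cat; echo $? )
}

# Same pipeline with pipefail: the failing command determines the status.
status_with_pipefail() {
    ( set -o pipefail; false | cat; echo $? )
}

echo "without pipefail: $(status_without_pipefail)"
echo "with pipefail:    $(status_with_pipefail)"
```

The first line reports 0 and the second reports 1, which is why the status check after tar | split is only reliable with pipefail enabled.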

The result of the compression looks like the figure above: a large number of 3 GB parts.
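On the receiving side the parts have to be joined again before they can be unpacked. A minimal sketch, assuming all parts share the picdb.tar.gz prefix used above and sit in one directory. restore_parts and the target directory are hypothetical names, not part of the original scripts.

```shell
#!/bin/bash

# Hypothetical helper: join all parts that start with <prefix> and unpack
# the resulting tar.gz stream into <dest>.
restore_parts() {
    local prefix=$1 dest=$2
    mkdir -p "$dest" || return 1
    # split names the parts <prefix>aa, <prefix>ab, ...; the shell glob
    # expands them in sorted order, which is the order they were written in.
    ( set -o pipefail; cat "${prefix}"* | tar xzf - -C "$dest" )
}
```

For example, restore_parts /sftp/data_master/picdb.tar.gz /tmp/restore would recreate the picdb directory under /tmp/restore (the target path is only an illustration).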

The next problem: the bandwidth of the live network is limited, so how do we get these parts back to the local site? The transfer is rate-limited to 500 KB/s from 6 a.m. to 10 p.m. and runs without a limit from 10 p.m. to 6 a.m.
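The transfer script that follows takes the name of the next file from the first line of /home/lsy/Sftp.txt, but the post does not show how that list is created. One possible way, as a sketch only (build_list is a hypothetical helper, not the author's method):

```shell
#!/bin/bash

# Hypothetical helper: write the names of all parts in <dir> that start with
# <prefix> to <list>, one per line, in sorted order.
build_list() {
    local dir=$1 prefix=$2 list=$3
    (
        cd "$dir" || exit 1
        shopt -s nullglob              # an unmatched glob expands to nothing
        parts=( "${prefix}"* )
        [ "${#parts[@]}" -gt 0 ] || exit 1   # fail if there are no parts
        printf '%s\n' "${parts[@]}"
    ) > "$list"
}
```

With the paths from this post the call would be build_list /data_master/backup picdb.tar.gz /home/lsy/Sftp.txt.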

#!/bin/bash

# SFTP transfer script

# IP address of the local SFTP server (placeholder)

IP="local IP"

# Port of the local SFTP server (placeholder)

PORT="Local port number"

# User name (placeholder)

USER="Local users"

# Password (placeholder)

PASSWD="User password"

# Directory that holds the compressed parts

CLIENTDIR=/data_master/backup/

# Target directory on the SFTP server

SEVERDIR=/sftp/data_master/

# The current hour (xiaoshi) and the name of the next file (File) are set inside the loop

while :

do

# xiaoshi: current hour, 00-23

xiaoshi=$(date +%H)

if [ ${xiaoshi} -ge 6 ] && [ ${xiaoshi} -lt 22 ];

then

echo "Between 06:00 and 22:00: limiting the transfer rate to 500 KB/s"

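# Next file to transfer: first line of the list

File=$(head -n 1 /home/lsy/Sftp.txt)

# Leave the loop once the list is empty

[ -z "${File}" ] && break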

printf "Start Transfer Compressed Backup $(date +%Y%m%d_%A_%Z%T)\n" >>/home/lsy/sftp_file.log

cd ${CLIENTDIR}

lftp -u "${USER},${PASSWD}" "sftp://${IP}:${PORT}" << EOF

cd ${SEVERDIR}

lcd ${CLIENTDIR}

set net:limit-rate 500000:500000

reput ${File}

bye

EOF

if [ $? -eq 0 ]

then

echo "The transmission was successful. Here is the file name" >>/home/lsy/sftp_file.log

echo ${File} >>/home/lsy/sftp_file.log

sleep 3

else

echo "The transmission failed." >>/home/lsy/sftp_file.log

sleep 5

exit 2

fi

sed -i 1d /home/lsy/Sftp.txt

printf "Transmission complete $(date +%Y%m%d_%A_%Z%T)\n" >>/home/lsy/sftp_file.log

else

echo "Between 22:00 and 06:00: no speed limit"

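# Next file to transfer: first line of the list

File=$(head -n 1 /home/lsy/Sftp.txt)

# Leave the loop once the list is empty

[ -z "${File}" ] && break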

printf "Start Transfer Compressed Backup $(date +%Y%m%d_%A_%Z%T)\n" >>/home/lsy/sftp_file.log

cd ${CLIENTDIR}

lftp -u "${USER},${PASSWD}" "sftp://${IP}:${PORT}" << EOF

cd ${SEVERDIR}

lcd ${CLIENTDIR}

reput ${File}

bye

EOF

if [ $? -eq 0 ]

then

echo "Transfer succeeded. Here is the file name" >>/home/lsy/sftp_file.log

echo ${File} >>/home/lsy/sftp_file.log

sleep 3

else

echo "The transmission failed." >>/home/lsy/sftp_file.log

sleep 5

exit 2

fi

sed -i 1d /home/lsy/Sftp.txt

printf "Transmission complete $(date +%Y%m%d_%A_%Z%T)\n" >>/home/lsy/sftp_file.log

fi

done

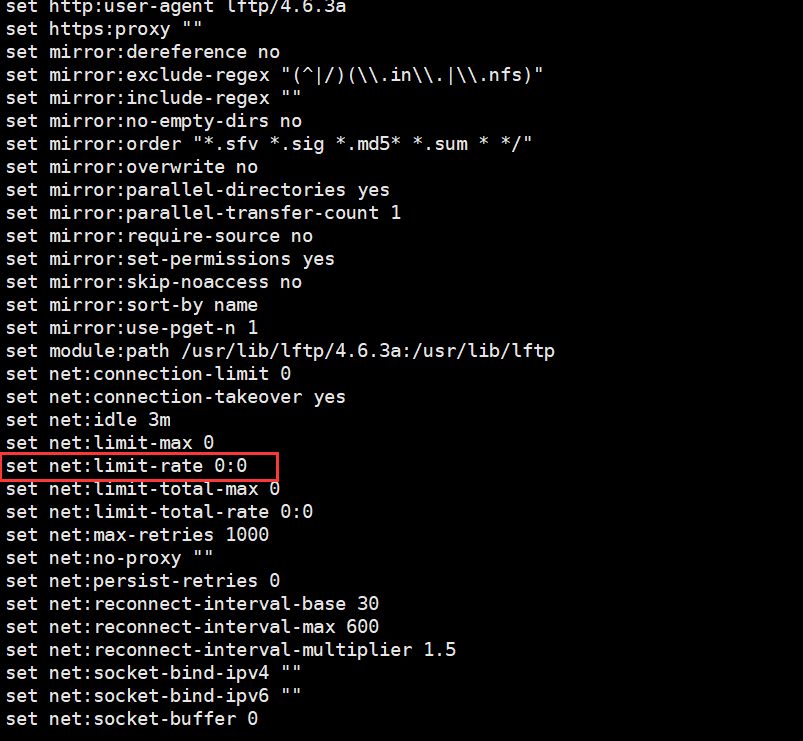

# Push the transfer log to the DingTalk robot; "DingTalk robot webhook URL" is a placeholder

curl -s "DingTalk robot webhook URL" -H "Content-Type: application/json" -d "{'msgtype': 'text', 'text': {'content': \"$(date +%Y%m%d-%H%M%S): $(cat /home/lsy/sftp_file.log)\"}, 'at': {'isAtAll': true} }"

One very important point: set net:limit-rate 500000:500000 is the lftp speed-limit command. Many articles on the internet write set net:limit-rate 500000,500000, with a comma. I tested it myself and checked man lftp: the separator is a colon (:). I hope this saves you from a few pits.
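The two branches of the loop differ only in the rate limit, so the choice can be isolated in one small function. A minimal sketch, assuming the hypothetical name rate_for_hour. It relies on lftp treating a limit of 0 as unlimited, as described in man lftp.

```shell
#!/bin/bash

# Hypothetical helper: print the net:limit-rate value for a given hour (0-23).
# 500000 bytes/s (about 500 KB/s) between 06:00 and 22:00, unlimited otherwise.
rate_for_hour() {
    local hour=$((10#$1))      # force base 10 so "08" and "09" are not read as octal
    if [ "$hour" -ge 6 ] && [ "$hour" -lt 22 ]; then
        echo "500000:500000"
    else
        echo "0:0"             # 0 means no limit in lftp
    fi
}
```

Inside the here-document passed to lftp, the limit line could then read set net:limit-rate $(rate_for_hour "$(date +%H)"), because the shell expands the substitution before lftp sees the text.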

That is my transfer process and the scripts I used. I hope they help you.