1. Problem description

This article implements real-time detection of a tennis ball by reading frames from a camera, then calculating the ball's distance and direction. The core problem is how to detect the tennis ball in the camera image while rejecting interfering objects. In addition, so that the method can run on an embedded system, the computational cost should be kept as low as possible to preserve real-time performance.

2. Implementation method

For a roughly monochrome ball such as a tennis ball, you can detect circles with the Hough transform, or segment the ball from the video frames by color. If the background is complex and there are many obstacles, you can also train a dedicated tennis ball recognition model, for example with the widely used YOLO, which can improve recognition accuracy. The disadvantage of that approach is that it is difficult to deploy. Next, I will introduce these methods in detail.

2.1 Hough transform detection

The basic idea of the Hough transform approach is that a tennis ball looks like a circle from every direction, and any circle in the two-dimensional image plane can be mapped to a point in a three-dimensional parameter space. That is, a circle expressed in the Cartesian coordinate system as

$$(x - a)^2 + (y - b)^2 = r^2$$

where (a, b) is the center and r is the radius, can be mapped to the point (a, b, r) in a three-dimensional coordinate system. After an image is mapped into this space, a peak there may correspond to a circle. The detailed mathematics is not repeated here, since the primary goal is to understand how to use the method. If you want to know more about the principle of the Hough transform, you can look up other articles.
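To make the mapping to (a, b, r) concrete, here is a minimal sketch of Hough voting for circles in pure Python. It is only an illustration of the idea, not how OpenCV implements it; the function name `hough_circle_votes`, the synthetic edge points, and the angle sampling are all assumptions made for this example.

```python
import math
from collections import Counter


def hough_circle_votes(edge_points, radii, n_angles=90):
    """Vote in (a, b, r) space: each edge point votes for every center
    (a, b) that would place it on a circle of radius r."""
    votes = Counter()
    for x, y in edge_points:
        for r in radii:
            for k in range(n_angles):
                t = 2 * math.pi * k / n_angles
                a = round(x - r * math.cos(t))
                b = round(y - r * math.sin(t))
                votes[(a, b, r)] += 1
    return votes


# Synthetic edge points on a circle with center (20, 15) and radius 8.
edge_points = [
    (20 + 8 * math.cos(math.radians(d)), 15 + 8 * math.sin(math.radians(d)))
    for d in range(0, 360, 10)
]

votes = hough_circle_votes(edge_points, radii=range(5, 12))
peak, count = votes.most_common(1)[0]
print(peak, count)  # the strongest cell should be (a, b, r) = (20, 15, 8)
```

Every edge point contributes a vote to the true center at the true radius, while votes for other cells are spread out, which is why the circle shows up as a peak.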

2.1.1 HoughCircles parameters and return value

1. Function parameters

OpenCV provides the function cv2.HoughCircles(), which uses the Hough transform to detect circles. The function has 8 parameters, namely:

image: an 8-bit, single-channel grayscale image

method: the Hough transform method. The code in this article uses cv2.HOUGH_GRADIENT, which was the only supported method in older OpenCV versions (newer versions also provide cv2.HOUGH_GRADIENT_ALT).

dp: the inverse ratio of the accumulator resolution to the image resolution. For example, when dp is 1, the accumulator has the same resolution as the source image; when dp is 2, the height and width of the accumulator are half those of the source image. The value of dp cannot be less than 1.

minDist: the minimum distance between the centers of two detected circles. If the value is too small, many nonexistent circles may be detected next to a real one. If the value is too large, some circles may be missed.

param1: the higher threshold passed to Canny edge detection; the lower threshold is half of this value. A pixel whose gradient exceeds the higher threshold is an edge point, a pixel below the lower threshold is discarded, and a pixel in between is kept only if it is connected to an edge.

param2: the accumulator threshold for circle centers. The higher the value, the closer a detected circle must be to a perfect circle. The smaller the value, the more false circles are detected.

minRadius: the minimum radius of a circle that can be detected. Circles below this value are discarded.

maxRadius: the maximum radius of a circle that can be detected. Circles above this value are discarded.
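The effect of the minimum center distance and the radius limits can be pictured as a filtering step over candidate circles. The sketch below is a simplified illustration of that idea and not OpenCV's actual implementation; the function `filter_circles`, the candidate tuples, and the vote counts are hypothetical.

```python
import math


def filter_circles(candidates, min_dist, min_radius, max_radius):
    """Keep the strongest candidates that respect the radius limits and
    whose centers are at least min_dist away from circles already kept.

    candidates: list of (x, y, r, votes) tuples (hypothetical format).
    """
    kept = []
    for x, y, r, _votes in sorted(candidates, key=lambda c: c[3], reverse=True):
        if not (min_radius <= r <= max_radius):
            continue  # radius outside the allowed range
        if any(math.hypot(x - kx, y - ky) < min_dist for kx, ky, _ in kept):
            continue  # too close to a stronger circle
        kept.append((x, y, r))
    return kept


candidates = [
    (100, 100, 30, 90),   # strong circle
    (105, 102, 31, 60),   # near-duplicate of the first one
    (300, 120, 25, 80),   # second, distant circle
    (200, 200, 250, 70),  # radius larger than the limit
]
result = filter_circles(candidates, min_dist=100, min_radius=1, max_radius=200)
print(result)
```

With a very small minimum distance the near-duplicate would also be reported, which matches the warning above about detecting nonexistent circles.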

2. Return value

The function returns a NumPy array containing the center coordinates and radius of every detected circle, or None when no circle is found. To read this information, index the array. For example:

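    circles = cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, 1, 100, param1=50, param2=70, minRadius=1, maxRadius=200)
    if circles is not None:  # None is returned when no circle is found
        for i in circles[0, :]:
            cv2.circle(frame, (int(i[0]), int(i[1])), int(i[2]), (0, 255, 0), 2)  # drawing needs integer coordinates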

For each circle, the first element is the x coordinate of the center, the second is the y coordinate, and the third is the radius. With these values, cv2.circle() can draw the circle on the image to display the detection result.
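As a small sketch of handling this return value, the helper below converts it into a plain list of integer tuples. The helper name `circles_to_list` and the hand-built array are assumptions for illustration; the array only imitates the shape (1, N, 3) and float type that cv2.HoughCircles() returns.

```python
import numpy as np


def circles_to_list(circles):
    """Convert a HoughCircles-style result into [(x, y, r), ...] with ints."""
    if circles is None:  # nothing detected
        return []
    rounded = np.rint(circles[0]).astype(int)  # round to the nearest pixel
    return [tuple(row) for row in rounded.tolist()]


# Hand-built stand-in for a detection result with two circles.
fake_result = np.array(
    [[[120.4, 80.6, 33.2], [300.0, 95.4, 28.7]]], dtype=np.float32
)

print(fake_result.shape)             # (1, 2, 3)
print(circles_to_list(fake_result))  # [(120, 81, 33), (300, 95, 29)]
print(circles_to_list(None))         # []
```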

2.1.2 Algorithm flow

First, we will briefly introduce the algorithm process, and the code will be put in 2.1.3.

(1) Initialize a VideoCapture object and use the read() method to read a frame from the camera;

(2) Use the cvtColor() method to convert the image from BGR color to grayscale;

(3) Filter the image with a Gaussian blur. The kernel size can be chosen according to the situation; I use a (7,7) kernel;

(4) Use the HoughCircles() method to detect circles;

(5) Obtain the center coordinates and radius of each circle, and mark the circle on the source image.

2.1.3 Code application example

I wrote the code in Thonny on a virtual machine, where I could not work out how to type Chinese, so the comments are in English. In addition, the program was originally used to control a ball-collecting car, so it prints the direction and distance of the ball relative to the camera to the console.

    import math

    import cv2
    import numpy as np

    cap = cv2.VideoCapture(0)  # from camera
    # cap = cv2.VideoCapture('./cvtest.mp4')  # from video file
    if not cap.isOpened():  # VideoCapture does not raise on failure, so check explicitly
        exit()

    NoneType = None  # variable for comparison
    RotateAngle = 0  # the angle to rotate toward the nearest ball
    halfWidth = 0  # half of the image width
    StdDiameter = 6.70  # standard diameter of a tennis ball (cm)
    StdPing = 4  # standard diameter of a table tennis ball (cm)
    FixValue = 5  # correction for the real angle the vehicle has to rotate

    while True:
        ret, frame = cap.read()  # capture frame by frame
        if not ret:  # stop when no frame could be read
            break
        halfWidth = frame.shape[1] / 2
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)  # convert frame to gray
        gray = cv2.GaussianBlur(gray, (7, 7), 0)  # Gaussian blur to denoise
        circles = cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, 1, 100, param1=50, param2=70, minRadius=1, maxRadius=200)
        gray = cv2.Canny(gray, 100, 300)  # Canny edge map, for comparison only
        contours = cv2.findContours(gray, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]  # [-2] works in OpenCV 3 and 4
        cv2.imshow('edge', gray)  # show the edge image
        if type(circles) != type(NoneType):  # avoid a crash when no circle is found
            TimeStamp = 0
            Reset = False
            for i in circles[0, :]:
                center = (int(i[0]), int(i[1]))  # drawing functions need integer coordinates
                cv2.circle(frame, center, int(i[2]), (0, 255, 0), 2)  # draw the circle
                cv2.circle(frame, center, 2, (0, 0, 255), 3)  # draw the center of the circle
                tanTheta = (i[0] - halfWidth) / (1.43 * halfWidth)  # the tan value
                RotateAngle = math.atan(tanTheta)  # calculate the angle
                Distance = 850 / i[2]  # calculate the distance between ball and camera
                FixAngle = math.atan(Distance * math.sin(RotateAngle) / (Distance * math.cos(RotateAngle) + FixValue))  # corrected angle
                Angle = FixAngle * 180 / math.pi  # convert from radians to degrees
                if RotateAngle < 0:
                    print('Rotate Angle is ', abs(Angle), ' Left')
                else:
                    print('Rotate Angle is ', abs(Angle), ' Right')
                print('Distance is ', Distance, ' cm')
        cv2.imshow('frame', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press 'q' to quit
            break

    cap.release()
    cv2.destroyAllWindows()

There are several points to note in this code:

(1) The Canny edge detection and cv2.findContours() calls are only there for comparing the results, so these lines can be omitted;

(2) Adding the variable NoneType may look clumsy, but when HoughCircles() detects no circle it returns None. If you do not check whether circles is a NumPy array, the program raises an error at the for loop, because None cannot be indexed.

(3) The printed direction and distance of the ball depend on the field of view of the camera. The constants in this program are only valid for the camera I use, and the output for other cameras may be meaningless. To get correct results, you need to adjust the parameters for your own camera.
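To show where the camera-specific constants come from, here is a hedged sketch of the same geometry as standalone functions. It assumes a simple pinhole camera model, so the constant 1.43 is read as the focal length divided by half the image width, and 850 as the product of a known distance and the ball radius in pixels at that distance. The function names and the calibration helper are hypothetical, and the reading of FixValue as an offset between the camera and the vehicle's rotation center is also an assumption.

```python
import math


def horizontal_fov_deg(focal_ratio=1.43):
    """Horizontal field of view implied by tan(theta) = offset / (focal_ratio * half_width)."""
    return 2 * math.degrees(math.atan(1 / focal_ratio))


def calibrate_distance_constant(known_distance_cm, measured_radius_px):
    """Pinhole model: distance * radius_px is constant for a ball of fixed size."""
    return known_distance_cm * measured_radius_px


def ball_direction(cx, radius_px, frame_width,
                   focal_ratio=1.43, dist_const=850.0, fix_value=5.0):
    """Return (angle in degrees, distance in cm); negative angle means left."""
    half_width = frame_width / 2
    rotate = math.atan((cx - half_width) / (focal_ratio * half_width))
    distance = dist_const / radius_px
    fixed = math.atan(distance * math.sin(rotate)
                      / (distance * math.cos(rotate) + fix_value))
    return math.degrees(fixed), distance


fov = horizontal_fov_deg()                        # roughly 70 degrees
const = calibrate_distance_constant(25.0, 34.0)   # ball seen 34 px wide in radius at 25 cm
center_angle, center_dist = ball_direction(320, 34, 640)
right_angle, _ = ball_direction(480, 34, 640)
left_angle, _ = ball_direction(160, 34, 640)
print(fov, const, center_angle, center_dist, right_angle, left_angle)
```

For another camera, the two constants would have to be measured again, for example by placing the ball at a known distance and reading its radius in pixels.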

The effect of using this code is shown in the figure above. The Hough transform method works fairly well and can stably detect a ball with a clear outline, but its significant shortcomings are also visible. In the figure above, the tennis ball on the left is not detected, while the table tennis ball below is detected. Its main problem is therefore that it does not reject other round objects; even round patterns on clothes will be marked. This is clearly not what we want.

In addition, this method requires the object to have a clear contour, otherwise the probability of detecting it is very low. The most serious problem is that striped objects greatly increase the false detection rate, and because of the large amount of computation the frame rate drops to 0.1 to 1 frames per second. This problem cannot be avoided when using the Hough transform.

2.2 Color segmentation method for tennis ball detection

The idea of the color segmentation method is that a tennis ball can basically be regarded as a monochrome object. If the color region that contains only the tennis ball is segmented from the source image, we obtain an image containing only the tennis ball (in practice a binary mask, described in detail later), and the interference of objects of other colors is excluded.

2.2.1 HSV color space in OpenCV

In reality, different parts of a tennis ball are lit differently, so the ball is not monochrome in BGR color space and is difficult to segment there. Therefore, this method works in HSV color space, which also uses three values to represent a color, namely:

Hue: measured as an angle from 0° to 360°, in which 0° is red, 120° is green and 240° is blue. Note that for 8-bit images OpenCV halves this value, so the range becomes 0 to 180.

Saturation: indicates how close the color is to a pure spectral color. A color can be seen as a spectral color mixed with white. The larger the proportion of the spectral color, the higher the saturation. In OpenCV, the value range is 0 to 255. The larger the value, the more saturated the color; the smaller the value, the whiter it becomes.

Value (lightness): indicates the brightness of the color, usually ranging from 0% (black) to 100% (white). In OpenCV, the value ranges from 0 to 255.

After the image is converted to HSV color space, the hue of the tennis ball is concentrated in a very narrow interval, because the bright and dark parts of the ball differ only in saturation and value. As long as we keep this hue range and adjust the saturation and value ranges, we can extract the tennis ball from the image.
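The scaling of the three channels can be checked without OpenCV. The sketch below uses Python's standard colorsys module and rescales the result to the 8-bit OpenCV convention (hue halved, saturation and value scaled to 0 to 255). The helper name `bgr_to_opencv_hsv` and the two sample colors are assumptions, and the rounding may differ slightly from what cv2.cvtColor() produces.

```python
import colorsys


def bgr_to_opencv_hsv(b, g, r):
    """Approximate the 8-bit OpenCV HSV values for one BGR pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 180) % 180, round(s * 255), round(v * 255)


pure_green = bgr_to_opencv_hsv(0, 255, 0)   # 120 degrees becomes 60
lit_side = bgr_to_opencv_hsv(50, 230, 200)  # hypothetical bright ball pixel
shaded_side = bgr_to_opencv_hsv(25, 115, 100)  # same color at half brightness

print(pure_green)
print(lit_side, shaded_side)  # same hue, different value
```

The lit and shaded pixels differ strongly in BGR but share one hue, which is why the hue range can stay narrow while the other two ranges are tuned.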

2.2.2 Algorithm flow

First, we will briefly introduce the algorithm process, and the code will be put in 2.2.3.

(1) Initialize a VideoCapture object and use the read() method to read a frame from the camera;

(2) Use the cv2.cvtColor() method to convert the image from BGR color space to HSV color space;

(3) Apply a Gaussian blur with a (3,3) kernel and segment the tennis ball with the cv2.inRange() method (cv2.inRange() selects the pixels that lie between the given lower and upper thresholds; the selected part is white and everything else is black);

(4) Use the cv2.morphologyEx() method to perform a closing operation, which reduces the holes in the mask caused by interference (closing dilates first and then erodes, which fills small holes);

(5) Use the cv2.findContours() method to obtain all contours in the mask produced by step (4);

(6) Use the cv2.boundingRect() method to obtain the coordinates, width and height of the bounding rectangle of each contour;

(7) Use the cv2.contourArea() method to calculate the area enclosed by each contour and compare it with the detection thresholds. If the area is above the thresholds, the contour is considered a tennis ball; otherwise it is discarded. Mark the detected tennis ball on the source image.
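Steps (3) and (4) can be sketched in plain NumPy to show what the mask looks like before and after closing. This is an illustration under simplifying assumptions, not the OpenCV implementation: the helpers `in_range` and `close3` are hypothetical, and closing uses a 3x3 square neighborhood instead of the 5x5 elliptical kernel used later in the article.

```python
import numpy as np

LOWER = np.array([20, 50, 140])   # HSV thresholds used in this article
UPPER = np.array([40, 200, 255])


def in_range(hsv, lower, upper):
    """White (255) where all three channels lie within the thresholds."""
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


def _windows(mask, pad_value):
    """Return the nine shifted copies that make up each 3x3 neighborhood."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def close3(mask):
    """Closing: dilate (neighborhood maximum), then erode (neighborhood minimum)."""
    dilated = _windows(mask, 0).max(axis=0)
    return _windows(dilated, 255).min(axis=0)


# Synthetic 9x9 HSV image: a 5x5 ball-colored block with a white "logo" pixel.
hsv = np.zeros((9, 9, 3), dtype=np.uint8)
hsv[2:7, 2:7] = (30, 120, 200)
hsv[4, 4] = (0, 0, 255)

mask = in_range(hsv, LOWER, UPPER)
closed = close3(mask)
print(int((mask == 255).sum()), int((closed == 255).sum()))  # 24 25
```

The hole left by the white pixel is filled by closing, while the outline of the block stays the same size.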

2.2.3 Code application example

To keep the code well organized, I divided it into two files, main.py and function.py. main.py shows the overall process of this method, and function.py defines the functions used by the main program.

main.py

    import cv2

    import function as tennis

    cap = cv2.VideoCapture(0)

    while True:
        ret, src = cap.read()
        if not ret:  # stop when no frame could be read
            break
        contours = tennis.FindBallContours(src)
        src = tennis.DrawRectangle(src, contours)
        cv2.imshow('src', src)
        if cv2.waitKey(1) & 0xFF == ord('e'):  # press 'e' to exit
            break

    cap.release()
    cv2.destroyAllWindows()

function.py

    import cv2
    import numpy as np

    # global variables
    #########################################################################################
    tennisLowDist = np.array([20, 50, 140])  # lower HSV threshold, the best value found in testing
    tennisHighDist = np.array([40, 200, 255])  # upper HSV threshold
    kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))  # elliptical kernel
    #########################################################################################

    # function definitions
    #########################################################################################
    def FindBallContours(src):
        img = cv2.cvtColor(src, cv2.COLOR_BGR2HSV)  # convert to HSV color space
        img = cv2.GaussianBlur(img, (3, 3), 0)  # denoise
        mask = cv2.inRange(img, tennisLowDist, tennisHighDist)  # mask containing only the tennis ball
        mask = cv2.morphologyEx(mask, cv2.MORPH_CLOSE, kernel)  # closing fills small holes in the mask
        # mask = cv2.morphologyEx(mask, cv2.MORPH_OPEN, kernel)
        contours = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]  # [-2] works in OpenCV 3 and 4
        return contours

    def DrawRectangle(src, contours):
        for c in contours:
            x, y, w, h = cv2.boundingRect(c)  # bounding rectangle of each contour
            if y != 0:  # contour only makes sense when y != 0
                radius = max(w, h)  # side length of the square to draw
                area = cv2.contourArea(c)  # area of each contour
                if area > w*h*0.75*0.5 and 0.7 < w/h < 1.5 and area > 100:  # thresholds on area and w/h
                    cv2.rectangle(src, (x, y), (x + radius, y + radius), (0, 0, 255), 2)  # mark the ball
        return src
    #########################################################################################

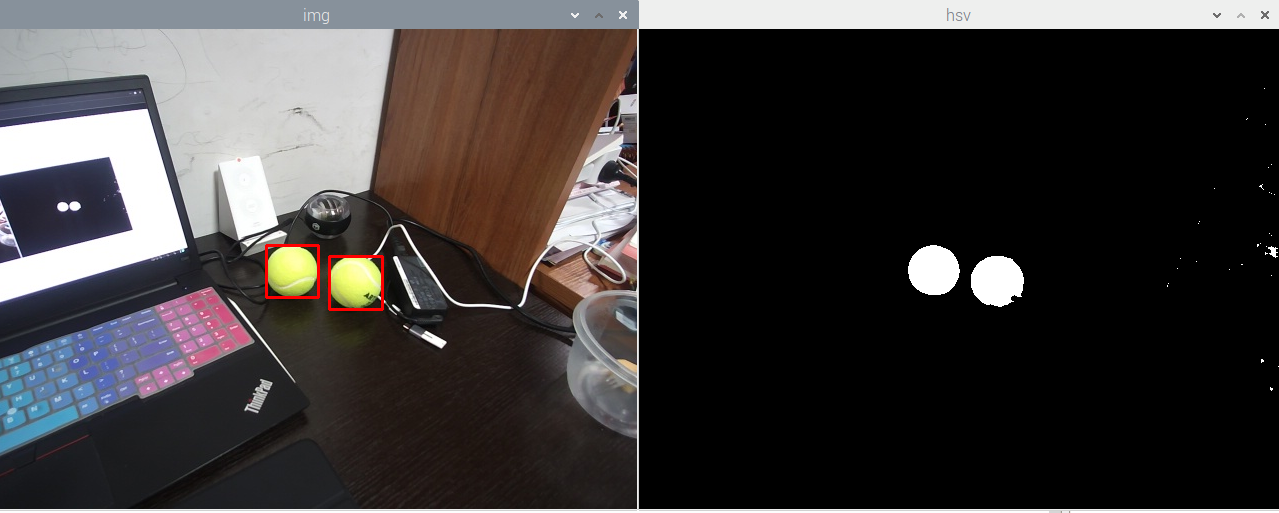

The effect of this code is shown in the figure:

On the left is the effect after the tennis ball is marked in the source image, and on the right is the mask after the tennis ball is extracted in the HSV color space.

The recognition effect is published in station B: https://www.bilibili.com/video/BV1BS4y1C7LG/

Points to note in this code:

(1) The line containing area > w*h*0.75*0.5 decides whether a contour is a tennis ball by comparing the area of the color region with the area of its bounding rectangle. It also puts a lower limit on the area so that noise is not detected as a tennis ball. The probability of false identification can be tuned by changing the conditions in this line until the performance meets your requirements.

(2) The closing operation is optional. For a tennis ball with a logo on it, closing is very effective. For a plain single-color ball, including other scenarios this program may be extended to, closing is not necessary.
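The filter condition can be isolated as a small predicate to see which shapes pass. This is a sketch using the thresholds from the article; the function name `looks_like_ball` and the sample shapes are assumptions. A perfect circle fills pi/4 (about 0.785) of its bounding square, so it clears the 0.75 * 0.5 = 0.375 fill threshold with a wide margin for partial occlusion.

```python
import math


def looks_like_ball(area, w, h, fill_ratio=0.75 * 0.5, min_area=100):
    """Same test as in DrawRectangle: fill ratio, aspect ratio, minimum area."""
    if h == 0:
        return False
    return area > w * h * fill_ratio and 0.7 < w / h < 1.5 and area > min_area


circle_area = math.pi * 20 ** 2                       # ball of radius 20 px in a 40x40 box
print(looks_like_ball(circle_area, 40, 40))           # True: round and well filled
print(looks_like_ball(1000, 100, 10))                 # False: far too elongated
print(looks_like_ball(28, 6, 6))                      # False: too small, likely noise
print(looks_like_ball(400, 40, 40))                   # False: box is mostly empty
```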

Compared with the Hough transform method, this method is more stable. In addition, with the closing operation the tennis ball can still be detected when it is partly occluded. However, this method is still not perfect, because it is limited by the quality of the camera. With a poor camera, a large part of the tennis ball may be washed out to white, so it can no longer be separated in HSV color space and detection fails. Moreover, the thresholds used for segmentation have to be tuned through repeated debugging. For my camera, the current thresholds are H (20, 40), S (50, 200), V (140, 255).
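The washed-out failure case can be illustrated with the thresholds quoted above. The sketch below again uses colorsys as a stand-in for the OpenCV conversion; the helper names and the two sample pixels are hypothetical and only meant to show why an overexposed pixel falls outside the saturation range.

```python
import colorsys

LOW = (20, 50, 140)    # H, S, V lower thresholds from the article
HIGH = (40, 200, 255)  # H, S, V upper thresholds from the article


def opencv_hsv(b, g, r):
    """Approximate 8-bit OpenCV HSV values for one BGR pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def passes_threshold(hsv):
    """True when every channel lies inside [LOW, HIGH]."""
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, LOW, HIGH))


normal_pixel = opencv_hsv(80, 220, 190)        # well exposed yellow-green
overexposed_pixel = opencv_hsv(225, 255, 250)  # same hue, almost white

print(normal_pixel, passes_threshold(normal_pixel))
print(overexposed_pixel, passes_threshold(overexposed_pixel))
```

The overexposed pixel keeps a similar hue, but its saturation drops below the lower limit of 50, so it is excluded from the mask.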