1, SIFT introduction

SIFT (scale-invariant feature transform) is a local feature descriptor used in the field of image processing. It is scale-invariant and can detect key points in an image.

1 Features of the SIFT algorithm

(1) Stability and invariance: it adapts to changes in rotation, scale, and brightness, and is to some extent robust to viewpoint change, affine transformation, and noise.

(2) Distinctiveness: features can be matched quickly and accurately against a massive feature database.

(3) Quantity: even a single object can produce a large number of feature vectors.

(4) Speed: feature vector matching is fast.

(5) Extensibility: it can be combined with other forms of feature vectors.

2 Essence of the SIFT algorithm

Find key points across different scale spaces and compute the orientation of each key point.

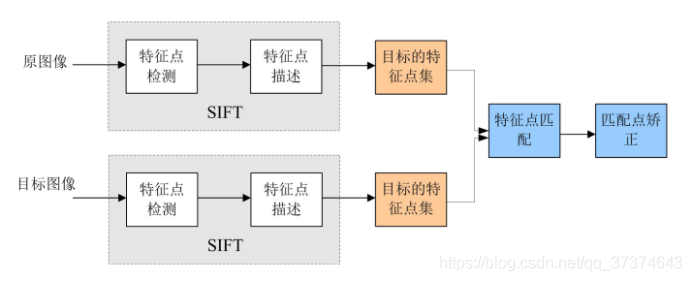

3 The three main stages of SIFT feature matching

(1) Extracting key points: key points are highly distinctive points that do not disappear under changes in illumination, scale, rotation, and similar factors, such as corner points, edge points, bright spots in dark regions, and dark spots in bright regions. This step searches image positions across all scale spaces and uses the difference-of-Gaussians (DoG) function to identify potential points of interest that are invariant to scale and rotation.

(2) Locating key points and determining the feature orientation: at each candidate position, a finely fitted model is used to determine the position and scale. Key points are selected according to their stability. Then, one or more orientations are assigned to each key point position based on the local gradient direction of the image. All subsequent operations on the image data are performed relative to the orientation, scale, and position of the key points, which provides invariance to these transformations.

(3) Matching: by comparing the feature vectors of the key points pairwise, a number of matching feature point pairs are found, which establishes the correspondence between the scenes.

4 Scale space

(1) Concept

Scale space is a concept and method in the image domain that tries to simulate how the human eye observes objects. For example, when looking at a tree, what matters is whether we want to observe the leaves or the whole tree. To observe the whole tree (equivalent to observing at a large scale), the fine details of the image should be removed. To observe a leaf (observing at a small scale), the local details should be examined.

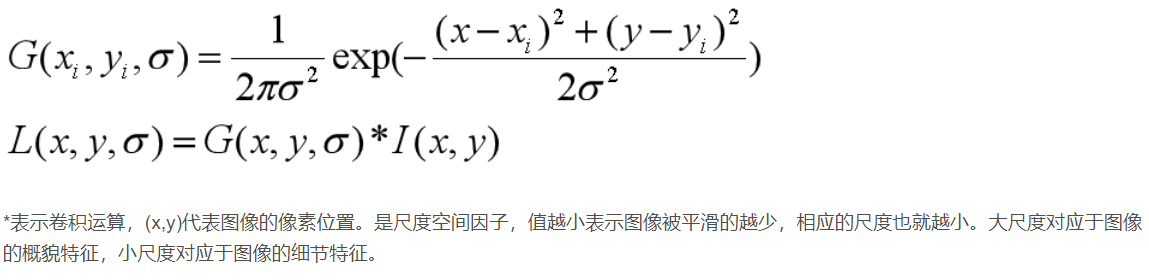

When constructing the scale space, the SIFT algorithm filters the image with a Gaussian kernel. The original image retains the most detail. As Gaussian filtering is applied, detail is progressively removed, which simulates how features appear at larger scales.

There are two main reasons for filtering with a Gaussian kernel:

a The Gaussian kernel is the only scale-invariant kernel function, that is, the only linear kernel that can generate a scale space.

b The DoG kernel approximates the LoG (Laplacian of Gaussian) function, which makes feature extraction simpler. In addition, David Lowe, the author of the SIFT paper, proposed upsampling the original image by a factor of two before filtering, which retains more information for subsequent feature extraction and matching. In practice, the scale-space images are generated by convolving the current image with Gaussian kernels of different scale parameters σ.

(2) Representation

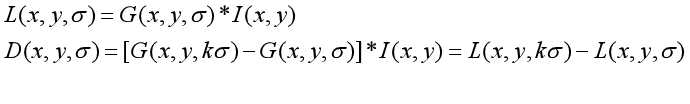

L(x, y, σ) is defined as the convolution of the original image I(x, y) with a variable-scale two-dimensional Gaussian function G(x, y, σ).

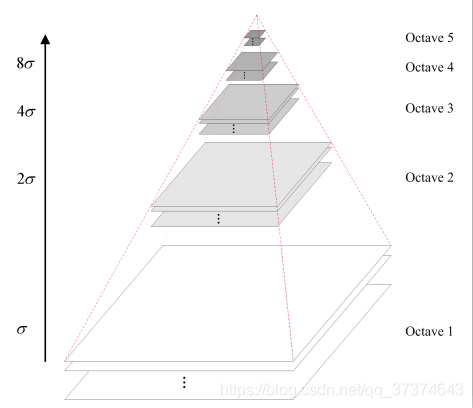

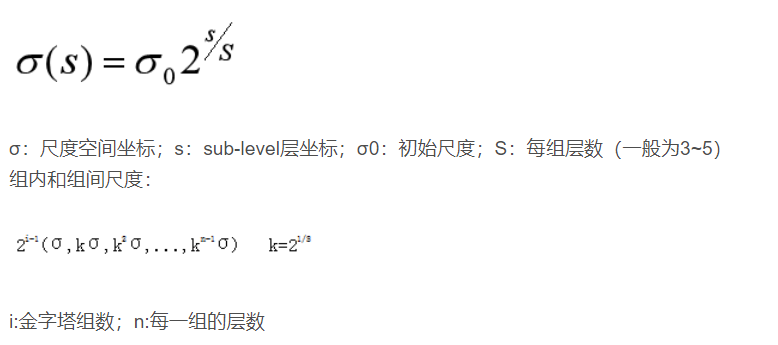
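The convolution that defines L(x, y, σ) can be sketched in a few lines of base MATLAB. This is only an illustration under stated assumptions: the test image is synthetic, the value σ = 1.6 and the truncation of the kernel at about 3σ are choices made for this example, and the variable names are not taken from the source code of this article.

```matlab
% Sketch: L(x, y, sigma) = G(x, y, sigma) * I(x, y), using base MATLAB only.
I = zeros(64, 64);              % synthetic grayscale image (assumption)
I(24:40, 24:40) = 1;            % a bright square on a dark background

sigma  = 1.6;                   % scale parameter (illustrative value)
radius = ceil(3 * sigma);       % truncate the kernel at about 3 sigma
[x, y] = meshgrid(-radius:radius, -radius:radius);

% Two-dimensional Gaussian G(x, y, sigma)
G = exp(-(x.^2 + y.^2) / (2 * sigma^2)) / (2 * pi * sigma^2);
G = G / sum(G(:));              % renormalize so the truncated kernel sums to 1

L = conv2(I, G, 'same');        % scale-space image at scale sigma
```

A larger σ gives a wider kernel and a blurrier L, which corresponds to observing the image at a larger scale.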

5 Construction of the Gaussian pyramid

(1) Concept

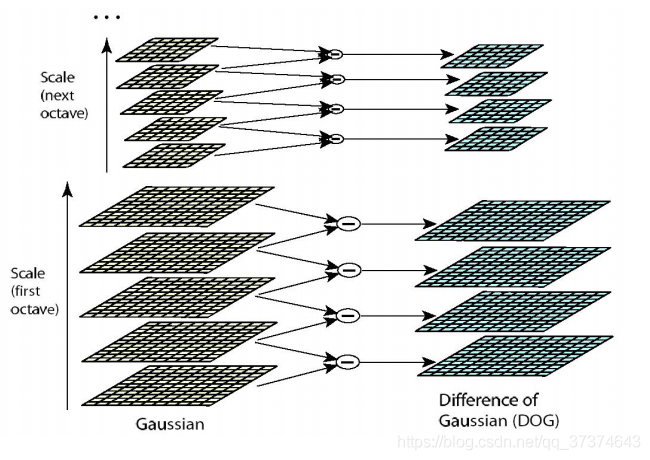

In implementation, the scale space is represented by a Gaussian pyramid. The construction of the Gaussian pyramid is divided into two steps:

a Gaussian smoothing of the image;

b downsampling the image.

An image pyramid is built by repeatedly downsampling the original image, producing a series of images of decreasing size arranged from large to small, bottom to top. The original image is the first layer of the pyramid, each downsampling step yields one new layer (one image per layer), and the pyramid has n layers in total. To make the scale vary continuously, the Gaussian pyramid adds Gaussian filtering on top of simple downsampling. As shown in the figure above, each layer of the image pyramid is blurred with several different Gaussian parameters, so each layer becomes a group of images. Octave denotes the number of image groups generated from an image, and Interval denotes the number of image layers within a group. During downsampling, the initial (bottom) image of a group is obtained by taking every other pixel of the third-to-last image of the previous group, which is the image whose scale is twice that of the group's first image.
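The two steps above (smoothing, then downsampling) can be sketched as follows. The parameter values are assumptions for illustration only: a base scale sigma0 = 1.6, S = 3 intervals with S + 3 images per group, and a scale factor k = 2^(1/S) between neighboring layers. The input image is a random placeholder, and the sketch ignores any blur already present in the input.

```matlab
% Sketch of a Gaussian pyramid: numOctaves groups of S + 3 blurred images each.
I          = rand(64, 64);      % placeholder grayscale image (assumption)
sigma0     = 1.6;               % assumed base scale
S          = 3;                 % assumed number of intervals per group
numOctaves = 3;
k          = 2^(1 / S);         % scale ratio between neighboring layers

pyr  = cell(numOctaves, S + 3);
base = I;
for o = 1:numOctaves
    for s = 1:S + 3
        target = sigma0 * k^(s - 1);     % blur of this layer on the group's own grid
        if s == 1 && o > 1
            pyr{o, s} = base;            % downsampled image already carries blur sigma0
            continue;
        end
        if s == 1
            src = base;                  % first image: blur the raw input by sigma0
            inc = target;
        else
            src = pyr{o, s - 1};         % blur the previous layer incrementally
            inc = sqrt(target^2 - (sigma0 * k^(s - 2))^2);
        end
        r = ceil(3 * inc);
        g = exp(-(-r:r).^2 / (2 * inc^2));
        g = g / sum(g);                  % normalized 1-D Gaussian
        pyr{o, s} = conv2(g(:), g(:).', src, 'same');   % separable 2-D blur
    end
    % Layer S + 1 has blur 2 * sigma0; keep every other pixel for the next group
    base = pyr{o, S + 1}(1:2:end, 1:2:end);
end
```

Layer S + 1 is the third-to-last of the S + 3 images in a group, so after halving the resolution it has blur sigma0 on the new grid and can serve directly as the bottom image of the next group.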

(2) Representation

If there are o groups and s layers in the Gaussian image pyramid, there are

6 Extremum detection in DoG space

(1) DoG function

(2) DoG (difference-of-Gaussians) pyramid

a To apply the DoG operator, a DoG pyramid must be constructed.

The Gaussian difference image shows how pixel values change across the image. (Where there is no change, there is no feature. Features should be points where the change is as large as possible.) The DoG image depicts the outline of the target.

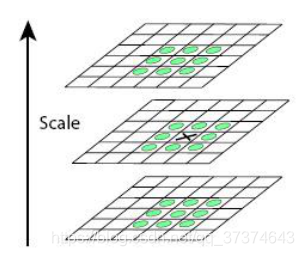
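A DoG layer is the difference of two neighboring Gaussian layers within a group, D(x, y, σ) = L(x, y, kσ) - L(x, y, σ). The sketch below builds one group of Gaussian images and subtracts neighbors. The image is synthetic, and sigma0 = 1.6 and S = 3 are assumed values chosen for illustration.

```matlab
% Sketch: one group of Gaussian images and the corresponding DoG images.
I = zeros(64, 64);
I(24:40, 24:40) = 1;            % synthetic image: bright square (assumption)

sigma0  = 1.6;                  % assumed base scale
S       = 3;                    % assumed intervals per group
k       = 2^(1 / S);
nLayers = S + 3;

gaussStack = zeros(64, 64, nLayers);
for s = 1:nLayers
    sigma = sigma0 * k^(s - 1);
    r = ceil(3 * sigma);
    g = exp(-(-r:r).^2 / (2 * sigma^2));
    g = g / sum(g);
    gaussStack(:, :, s) = conv2(g(:), g(:).', I, 'same');
end

% dogStack(:, :, s) = gaussStack(:, :, s + 1) - gaussStack(:, :, s)
dogStack = diff(gaussStack, 1, 3);
```

S + 3 Gaussian images give S + 2 DoG images. In flat regions the DoG response is zero, while it is nonzero near the boundary of the square, which is why the DoG image traces the outline of the target.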

b Local extremum detection in DoG space

Feature points are the local extreme points of the DoG space. To find them, each pixel is compared with all of its neighbors in both the image domain and the scale domain to see whether it is larger or smaller than all of them. As shown in the figure below, the detection point in the middle is compared with its 8 neighbors at the same scale and the 9 × 2 corresponding points at the adjacent scales above and below, 26 points in total, which ensures that extreme points are detected in both scale space and two-dimensional image space.

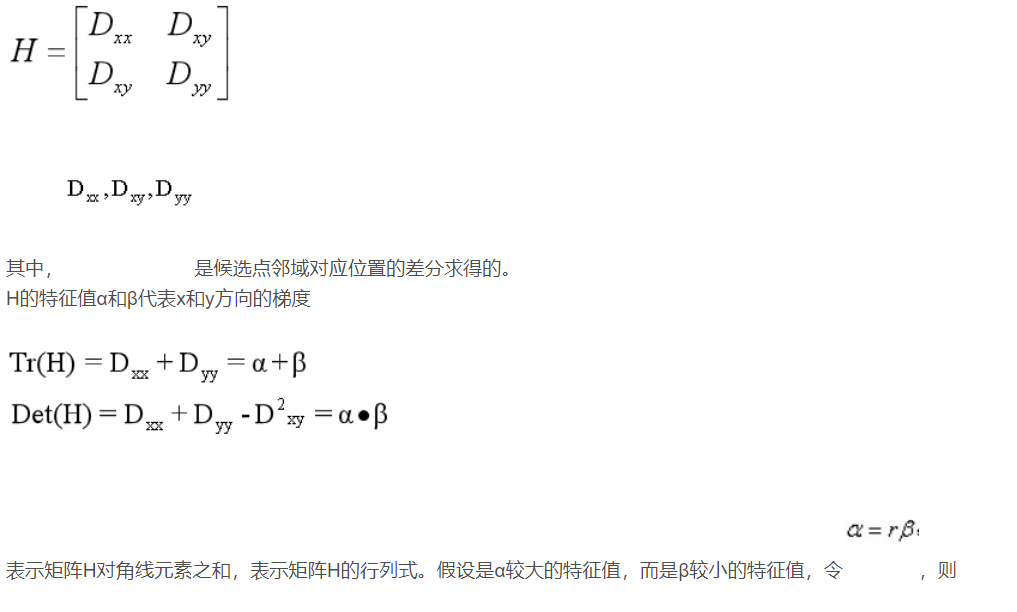

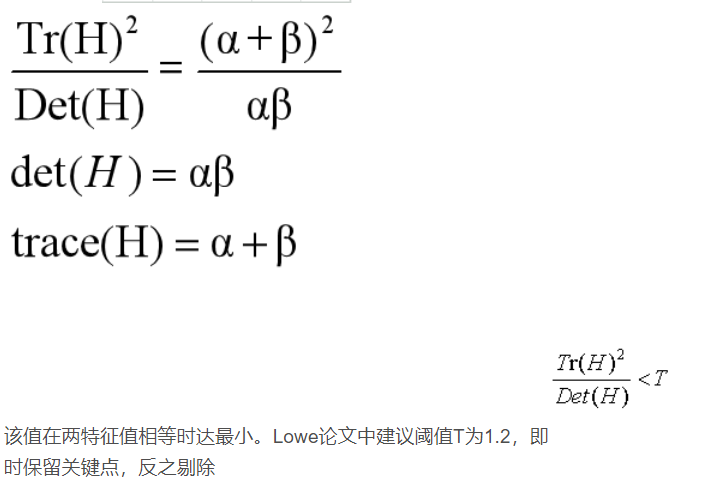
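The 26-neighbor comparison can be sketched as a triple loop over a stack of DoG layers. The stack here is a toy array with one planted maximum and one planted minimum, and the names D and keypoints are chosen for this example only. A practical implementation would also apply a contrast threshold, which is omitted here.

```matlab
% Sketch: find pixels that are strictly larger or smaller than all 26 neighbors.
D = zeros(9, 9, 3);             % toy DoG stack: rows x cols x scales (assumption)
D(5, 5, 2) = 1;                 % planted local maximum
D(3, 7, 2) = -1;                % planted local minimum

[rows, cols, n] = size(D);
keypoints = zeros(0, 3);        % each row: [row, col, scale index]
for s = 2:n - 1
    for r = 2:rows - 1
        for c = 2:cols - 1
            cube   = D(r-1:r+1, c-1:c+1, s-1:s+1);   % 3 x 3 x 3 neighborhood
            v      = cube(14);                       % center element (2, 2, 2)
            others = cube([1:13, 15:27]);            % the 26 neighbors
            if v > max(others) || v < min(others)
                keypoints(end + 1, :) = [r, c, s];
            end
        end
    end
end
```

The top and bottom layers of the stack have no neighbor on one side, so only the layers in between are searched. This is why each group needs extra images beyond the S scales actually examined.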

c Removing edge responses

Across an edge (in the direction of the edge gradient), the principal curvature is relatively large, while along the edge the principal curvature is small. The principal curvatures of the DoG function D(x) at a candidate feature point are proportional to the eigenvalues of its 2 × 2 Hessian matrix H.
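Because only the ratio of the two eigenvalues matters, the test can be done with the trace and determinant of H without computing the eigenvalues. The sketch below assumes the criterion from Lowe's paper, Tr(H)^2 / Det(H) < (r + 1)^2 / r with r = 10. That threshold and the finite-difference formulas are assumptions not stated in this article, and the two test surfaces are synthetic.

```matlab
% Sketch: reject edge-like candidates using the 2 x 2 Hessian of a DoG layer.
[x, y] = meshgrid(1:21, 1:21);
blob  = exp(-((x - 11).^2 + (y - 11).^2) / (2 * 3^2));  % similar curvature in all directions
ridge = exp(-(x - 11).^2 / (2 * 3^2));                  % curvature across the ridge only

rThresh = 10;                   % assumed curvature-ratio threshold (Lowe's value)
layers  = {blob, ridge};
keep    = false(1, 2);
for i = 1:2
    D = layers{i};
    r = 11;  c = 11;            % candidate position (row, column)
    % Second derivatives by finite differences
    Dxx = D(r, c + 1) - 2 * D(r, c) + D(r, c - 1);
    Dyy = D(r + 1, c) - 2 * D(r, c) + D(r - 1, c);
    Dxy = (D(r + 1, c + 1) - D(r + 1, c - 1) - D(r - 1, c + 1) + D(r - 1, c - 1)) / 4;
    trH  = Dxx + Dyy;
    detH = Dxx * Dyy - Dxy^2;
    % Keep the point only if both curvatures have the same sign and similar size
    keep(i) = detH > 0 && trH^2 / detH < (rThresh + 1)^2 / rThresh;
end
```

For the isotropic blob the ratio is 4 and the point is kept. For the ridge one curvature is zero, the determinant is not positive, and the point is rejected as an edge response.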

7 Key point orientation assignment

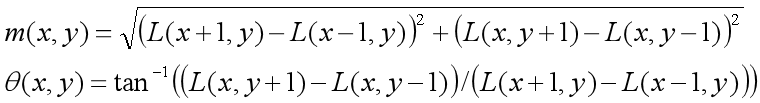

(1) Once the extreme points have been found in scale space, a reference orientation must be assigned to each key point using the local features of the image, so that the descriptor is invariant to image rotation. For each key point detected in the DoG pyramid, collect the gradient and orientation distribution of the pixels within the 3σ neighborhood window of the Gaussian pyramid image in which it lies. The magnitude and orientation of the gradient are as follows:

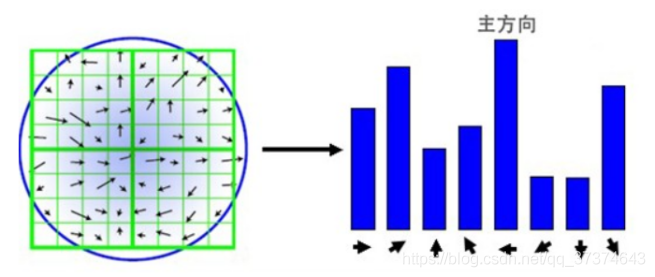
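The gradient magnitude and orientation can be computed from pixel differences of the Gaussian image L. The sketch below uses central differences, L(x+1, y) - L(x-1, y) and L(x, y+1) - L(x, y-1), on a synthetic intensity ramp. The ramp and the variable names are assumptions for illustration, and border pixels are simply left at zero.

```matlab
% Sketch: gradient magnitude and orientation of a Gaussian-smoothed image L.
[x, y] = meshgrid(1:8, 1:8);
L = 2 * x + 0 * y;              % synthetic ramp increasing along x (assumption)

dx = zeros(size(L));
dy = zeros(size(L));
dx(:, 2:end-1) = L(:, 3:end) - L(:, 1:end-2);   % L(x+1, y) - L(x-1, y)
dy(2:end-1, :) = L(3:end, :) - L(1:end-2, :);   % L(x, y+1) - L(x, y-1)

mag   = sqrt(dx.^2 + dy.^2);                    % gradient magnitude m(x, y)
theta = mod(atan2(dy, dx) * 180 / pi, 360);     % orientation in degrees, [0, 360)
```

Using atan2 rather than atan keeps the full 0 to 360 degree range, which the orientation histogram relies on.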

(2) The algorithm uses gradient histogram statistics: taking the key point as the origin, the gradient magnitudes and orientations of the pixels in a surrounding region are accumulated into a histogram to determine the orientation of the key point. The histogram divides the range of 0 to 360 degrees into 36 bins of 10 degrees each. As shown in the figure below, the peak of the orientation histogram represents the dominant gradient direction in the neighborhood of the feature point, and the direction of the maximum is taken as the main orientation of the key point. To make matching more robust, any other direction whose peak is greater than 80% of the main peak is retained as a secondary orientation of the key point.

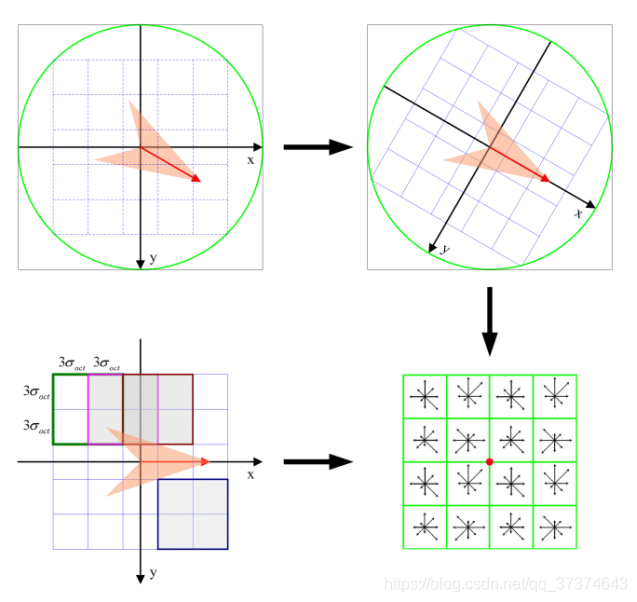
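The histogram voting can be sketched with accumarray. The gradient samples below are synthetic, and the sketch leaves out refinements found in full implementations, such as Gaussian weighting of the votes and interpolation of the peak position. Reporting bin centers as orientations is also a simplification made for this example.

```matlab
% Sketch: 36-bin orientation histogram with main and secondary orientations.
theta = [repmat(45, 1, 50), repmat(200, 1, 45), repmat(310, 1, 10)];  % degrees (synthetic)
mag   = ones(size(theta));                      % gradient magnitudes (synthetic)

nBins  = 36;                                    % 10 degrees per bin
bin    = floor(mod(theta, 360) / 10) + 1;       % bin index 1..36
hist36 = accumarray(bin(:), mag(:), [nBins, 1]);  % magnitude-weighted votes

[peak, mainBin] = max(hist36);
mainDir = (mainBin - 1) * 10 + 5;               % center of the winning bin

% Other bins above 80% of the main peak become secondary orientations
candidates    = find(hist36 > 0.8 * peak);
secondaryDirs = (candidates(candidates ~= mainBin) - 1) * 10 + 5;
```

Here the main orientation falls in the bin centered at 45 degrees, and the bin centered at 205 degrees is kept as a secondary orientation because its 45 votes exceed 80% of the 50-vote peak. The bin at 315 degrees is discarded.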

8 Key point description

Each key point carries three pieces of information: location, scale, and orientation. A descriptor is created for each key point, describing it with a vector that stays stable under changes such as illumination and viewpoint. The descriptor covers not only the key point itself but also the surrounding pixels that contribute to it, and it should be highly distinctive to raise the probability of correctly matching feature points.

Lowe's experimental results show that a 4 × 4 × 8 = 128-dimensional descriptor vector gives the best overall result in terms of invariance and distinctiveness.
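The 4 × 4 × 8 layout can be illustrated with a simplified sketch: a 16 × 16 patch around the key point is split into 4 × 4 cells, and each cell accumulates an 8-bin orientation histogram. Several things here are assumptions or simplifications: the patch is synthetic and taken to be already rotated to the main orientation, Gaussian weighting and interpolation between bins are omitted, and the clamp value 0.2 follows Lowe's paper rather than this article.

```matlab
% Sketch: simplified 128-dimensional descriptor from a 16 x 16 gradient patch.
mag   = ones(16, 16);           % gradient magnitudes (synthetic)
theta = 90 * ones(16, 16);      % gradient orientations in degrees (synthetic)

desc = zeros(4, 4, 8);          % 4 x 4 cells, 8 orientation bins per cell
for r = 1:16
    for c = 1:16
        cellR = ceil(r / 4);                         % cell row, 1..4
        cellC = ceil(c / 4);                         % cell column, 1..4
        b = floor(mod(theta(r, c), 360) / 45) + 1;   % 45-degree bins, 1..8
        desc(cellR, cellC, b) = desc(cellR, cellC, b) + mag(r, c);
    end
end

desc = desc(:);                 % flatten to a 128 x 1 vector
desc = desc / norm(desc);       % normalize to reduce the effect of contrast changes
desc = min(desc, 0.2);          % clamp large entries (assumed value)
desc = desc / norm(desc);       % renormalize to unit length
```

Normalizing the vector is what gives the descriptor its tolerance to illumination changes: a uniform change in contrast scales all gradient magnitudes by the same factor, which the normalization cancels.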

9 Key point matching

(1) Create a set of key point descriptors for the template image (reference image) and for the real-time image (observation image). Target recognition is accomplished by comparing the key point descriptors in the two sets. The similarity of the 128-dimensional key point descriptors is measured by Euclidean distance.

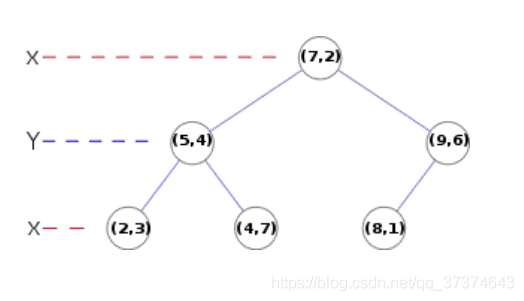

(3) The matching can be done by exhaustive search, but this takes too much time, so a k-d tree is generally used to perform the search. For each key point of the target image, the search finds the nearest feature point in the original image and the second-nearest feature point in the original image.

A k-d tree is a balanced binary tree.
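For comparison with the k-d tree search, the exhaustive method can be sketched directly: compute the Euclidean distance from each target descriptor to every original-image descriptor and take the nearest and second-nearest. The sketch accepts a match only if the nearest distance is clearly smaller than the second-nearest. That acceptance rule and the ratio 0.8 are assumptions based on Lowe's paper, and the three-dimensional toy descriptors stand in for real 128-dimensional ones.

```matlab
% Sketch: exhaustive nearest / second-nearest matching with a distance ratio test.
descA = [1 0 0; 0 1 0; 0 0 1];                            % target descriptors, one per row (toy)
descB = [0.9 0.1 0; 0 0.1 0.9; 0.5 0.5 0.5; 0 1 0.05];    % original-image descriptors (toy)

ratio   = 0.8;                  % assumed acceptance ratio
matches = zeros(0, 2);          % each row: [index in descA, index in descB]
for i = 1:size(descA, 1)
    diffs = descB - repmat(descA(i, :), size(descB, 1), 1);
    d = sqrt(sum(diffs.^2, 2));             % Euclidean distances to all of descB
    [dSorted, idx] = sort(d);               % dSorted(1): nearest, dSorted(2): second-nearest
    if dSorted(1) < ratio * dSorted(2)
        matches(end + 1, :) = [i, idx(1)];
    end
end
```

The cost of this loop grows with the product of the two descriptor counts, which is the reason a k-d tree is preferred for large feature sets.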

10 Summary

SIFT features are stable and invariant, and play a very important role in the fields of image processing and computer vision. The algorithm is also very complex. Because I have only recently started working with SIFT, my understanding of it is still incomplete, and even after consulting many sources this article is not as detailed as it should be. Please bear with me. The following is a rough summary of the SIFT algorithm.

(1) Extreme value detection in DoG scale space.

(2) Delete unstable extreme points.

(3) Determine the main direction of feature points.

(4) The descriptor of feature points is generated for key point matching.

2, Partial source code

function varargout = interface(varargin)

% INTERFACE M-file for interface.fig

% INTERFACE, by itself, creates a new INTERFACE or raises the existing

% singleton*.

%

% H = INTERFACE returns the handle to a new INTERFACE or the handle to

% the existing singleton*.

%

% INTERFACE('CALLBACK',hObject,eventData,handles,...) calls the local

% function named CALLBACK in INTERFACE.M with the given input arguments.

%

% INTERFACE('Property','Value',...) creates a new INTERFACE or raises the

% existing singleton*. Starting from the left, property value pairs are

% applied to the GUI before interface_OpeningFunction gets called. An

% unrecognized property name or invalid value makes property application

% stop. All inputs are passed to interface_OpeningFcn via varargin.

%

% *See GUI Options on GUIDE's Tools menu. Choose "GUI allows only one

% instance to run (singleton)".

%

% See also: GUIDE, GUIDATA, GUIHANDLES

% Edit the above text to modify the response to help interface

% Last Modified by GUIDE v2.5 01-Jun-2007 09:23:14

% Begin initialization code - DO NOT EDIT

gui_Singleton = 1;

gui_State = struct('gui_Name', mfilename, ...

'gui_Singleton', gui_Singleton, ...

'gui_OpeningFcn', @interface_OpeningFcn, ...

'gui_OutputFcn', @interface_OutputFcn, ...

'gui_LayoutFcn', [] , ...

'gui_Callback', []);

if nargin && ischar(varargin{1})

gui_State.gui_Callback = str2func(varargin{1});

end

if nargout

[varargout{1:nargout}] = gui_mainfcn(gui_State, varargin{:});

else

gui_mainfcn(gui_State, varargin{:});

end

% End initialization code - DO NOT EDIT

% --- Executes just before interface is made visible.

function interface_OpeningFcn(hObject, eventdata, handles, varargin)

% This function has no output args, see OutputFcn.

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% varargin command line arguments to interface (see VARARGIN)

% Choose default command line output for interface

handles.output = hObject;

% Update handles structure

guidata(hObject, handles);

% UIWAIT makes interface wait for user response (see UIRESUME)

% uiwait(handles.figure1);

% --- Outputs from this function are returned to the command line.

function varargout = interface_OutputFcn(hObject, eventdata, handles)

% varargout cell array for returning output args (see VARARGOUT);

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% Get default command line output from handles structure

varargout{1} = handles.output;

% --- Executes on button press in pushbutton1.

function pushbutton1_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton1 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

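% Ask the user for an image file; the second argument is the dialog title

[filename,pathname]=uigetfile({'*.jpg;*.bmp','Image files (*.jpg, *.bmp)'},'Open file');

if isequal(filename,0), return; end % the user cancelled the dialog

allfilename=fullfile(pathname,filename);

ima=imread(allfilename);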

axes(handles.axes1);

imshow(ima);title('Input image')

[imshowage,flag]=Require(ima);

I=imread('r1.bmp');

figure

imshow(I);

% NOTE: excerpt only; i, Max, cent, boud, TC, lie, hang and J are defined in code not shown here.

X=cent(Max(i),1);Y=cent(Max(i),2);%White is 1;

MX(i)=round(X);MY(i)=round(Y);

bx=boud(Max(i),1);by=boud(Max(i),2);blen=boud(Max(i),4);bwid=boud(Max(i),3);

bx1=round(bx);by1=round(by);Mblen(i)=round(blen);Mbwid(i)=round(bwid);

MR=min(blen,bwid); % MR is the shorter of the two bounding-box sides

if (MX(i)+round(MR/4)<=lie&&MY(i)+round(MR/6)<=hang&&TC(MY(i)+round(MR/6),MX(i)+round(MR/4))==1)

t2=1;

end

if (MX(i)-round(MR/4)>0&&MY(i)-round(MR/6)>0&&TC(MY(i)-round(MR/6),MX(i)-round(MR/4))==1)

t4=1;

end

if (MY(i)+round(MR/6)<=hang&&MX(i)-round(MR/4)>0&&TC(MY(i)+round(MR/6),MX(i)-round(MR/4))==1)

t7=1;

end

if (MY(i)-round(MR/6)>0&&MX(i)+round(MR/4)<=lie&&TC(MY(i)-round(MR/6),MX(i)+round(MR/4))==1)

t8=1;

end

figure

imshow(J);

imwrite(J,'r11.bmp','bmp');

3, Operation results

4, MATLAB version

MATLAB R2014a