Supplementary notes for the credit card recognition project

1. Template matching

Template matching works much like convolution. The template slides over the original image, starting from the origin (top-left corner). At each position, OpenCV calculates the difference between the template and the image region the template covers. OpenCV provides six methods for calculating this difference. The score for every position is stored in a matrix, and that matrix is returned as the result. If the original image is A×B and the template is a×b, the output matrix is (A−a+1)×(B−b+1).
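To make the sliding-window idea and the output size concrete, here is a brute-force NumPy sketch of the squared-difference score (the idea behind TM_SQDIFF). It only illustrates the definition and is not how OpenCV implements `matchTemplate` internally. `sqdiff_map` is a hypothetical helper name, and the random image is made-up test data.

```python
import numpy as np

def sqdiff_map(image, templ):
    """Brute-force squared difference: slide templ over image without padding."""
    A, B = image.shape                  # image rows x cols
    a, b = templ.shape                  # template rows x cols
    t = templ.astype(np.float64)
    result = np.empty((A - a + 1, B - b + 1), dtype=np.float64)
    for y in range(A - a + 1):
        for x in range(B - b + 1):
            patch = image[y:y + a, x:x + b].astype(np.float64)
            result[y, x] = np.sum((t - patch) ** 2)
    return result

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(8, 10)).astype(np.uint8)
templ = image[3:6, 4:8].copy()          # 3x4 patch cut out at row 3, column 4

res = sqdiff_map(image, templ)
print(res.shape)                        # (6, 7) == (8-3+1, 10-4+1)

row, col = np.unravel_index(np.argmin(res), res.shape)
best = (int(col), int(row))             # (x, y): the exact copy scores 0
print(best)
```

Because the template was cut from the image itself, the smallest score (0) sits exactly where it was taken from.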

- TM_SQDIFF: squared difference. The smaller the value, the better the match (0 is a perfect match)

- TM_CCORR: cross-correlation. The larger the value, the better the match

- TM_CCOEFF: correlation coefficient. The larger the value, the better the match

- TM_SQDIFF_NORMED: normalized squared difference. The closer the value is to 0, the better the match

- TM_CCORR_NORMED: normalized cross-correlation. The closer the value is to 1, the better the match

- TM_CCOEFF_NORMED: normalized correlation coefficient. The closer the value is to 1, the better the match

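The normalized methods are generally recommended: their scores fall in a fixed range and are less sensitive to the overall brightness and contrast of the image, so results are fairer to compare.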
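One way to see why normalization helps is to score a template against a brighter, higher-contrast copy of itself. The NumPy sketch below evaluates a single template/patch pair; the helper names are hypothetical, and `ccoeff_normed` follows the mean-subtracted, normalized correlation that TM_CCOEFF_NORMED is defined by. It assumes the patch is not perfectly flat (otherwise the denominator is 0).

```python
import numpy as np

def sqdiff(t, p):
    """Plain squared difference (the TM_SQDIFF score at one position)."""
    return float(np.sum((t - p) ** 2))

def ccoeff_normed(t, p):
    """Normalized correlation coefficient (TM_CCOEFF_NORMED at one position)."""
    t0 = t - t.mean()
    p0 = p - p.mean()
    return float(np.sum(t0 * p0) / np.sqrt(np.sum(t0 ** 2) * np.sum(p0 ** 2)))

rng = np.random.default_rng(1)
templ = rng.uniform(0, 100, size=(5, 5))
brighter = 1.5 * templ + 40             # same pattern, more contrast and brightness
unrelated = rng.uniform(0, 100, size=(5, 5))

print(sqdiff(templ, brighter))          # large, although the pattern is identical
print(ccoeff_normed(templ, brighter))   # 1.0 up to rounding
print(ccoeff_normed(templ, unrelated))  # far below 1
```

The raw squared difference punishes the brightness change, while the normalized coefficient still reports a perfect match.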

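- matchTemplate(image, templ, method[, result[, mask]]) performs template matching:
  - image: the image to search in (8-bit or 32-bit floating point)
  - templ: the template image; it must not be larger than image and must have the same data type
  - method: the comparison method, one of the six above
  - result: the matrix of comparison scores produced by the matching
  - mask: an optional mask for the template, the same size as templ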

- minMaxLoc(src[, mask]) finds the global minimum and maximum values and their locations:
  - It returns four values: the minimum value, the maximum value, the location of the minimum and the location of the maximum. Locations are (x, y) tuples, i.e. (column, row).
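A common pitfall is the coordinate order: NumPy indexes arrays as (row, column), while `minMaxLoc` reports locations as (x, y), i.e. (column, row). The hypothetical helper below mimics that return order with plain NumPy on a made-up score matrix, so the difference is easy to see.

```python
import numpy as np

def min_max_loc(res):
    """Hypothetical NumPy stand-in that mirrors cv2.minMaxLoc's return order.
    Locations are returned as (x, y) = (column, row), like OpenCV."""
    min_r, min_c = np.unravel_index(np.argmin(res), res.shape)
    max_r, max_c = np.unravel_index(np.argmax(res), res.shape)
    return (float(res[min_r, min_c]), float(res[max_r, max_c]),
            (int(min_c), int(min_r)), (int(max_c), int(max_r)))

res = np.array([[0.2, 0.9, 0.4],
                [0.1, 0.5, 0.3]], dtype=np.float32)
min_val, max_val, min_loc, max_loc = min_max_loc(res)
print(min_loc, max_loc)   # (0, 1) (1, 0): x is the column, y is the row
```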

```python
import cv2
import numpy as np

img = cv2.imread('lena.jpg', 0)          # 0 = load as grayscale
template = cv2.imread('face.jpg', 0)

res = cv2.matchTemplate(img, template, cv2.TM_SQDIFF)
print(res.shape)                         # (A-a+1, B-b+1)

min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)
print(min_loc)                           # for TM_SQDIFF the best match is the minimum
```

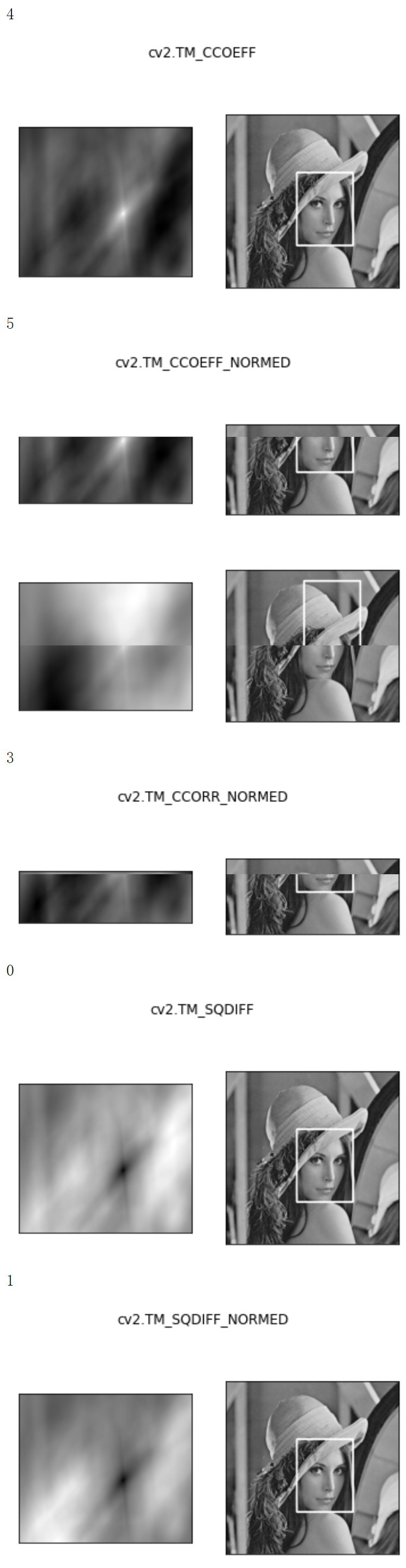

Comparing the results of the six matching methods:

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

img = cv2.imread('lena.jpg', 0)
template = cv2.imread('face.jpg', 0)
h, w = template.shape[:2]                # template height and width

methods = ['cv2.TM_CCOEFF', 'cv2.TM_CCOEFF_NORMED', 'cv2.TM_CCORR',
           'cv2.TM_CCORR_NORMED', 'cv2.TM_SQDIFF', 'cv2.TM_SQDIFF_NORMED']

for meth in methods:
    img2 = img.copy()
    # Look up the actual method constant from its name
    method = getattr(cv2, meth.split('.')[-1])
    print(method)
    res = cv2.matchTemplate(img, template, method)
    min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)
    # For TM_SQDIFF and TM_SQDIFF_NORMED the best match is the minimum
    if method in [cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED]:
        top_left = min_loc
    else:
        top_left = max_loc
    bottom_right = (top_left[0] + w, top_left[1] + h)
    # Draw a rectangle around the best match
    cv2.rectangle(img2, top_left, bottom_right, 255, 2)
    plt.subplot(121), plt.imshow(res, cmap='gray')
    plt.xticks([]), plt.yticks([])       # Hide axes
    plt.subplot(122), plt.imshow(img2, cmap='gray')
    plt.xticks([]), plt.yticks([])
    plt.suptitle(meth)
    plt.show()
```

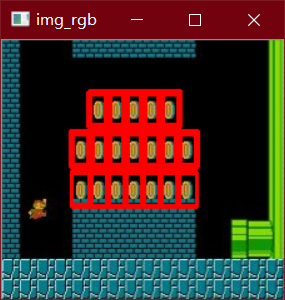

Match multiple objects

```python
import cv2
import numpy as np

img_rgb = cv2.imread('mario.jpg')        # OpenCV loads color images in BGR order
img_gray = cv2.cvtColor(img_rgb, cv2.COLOR_BGR2GRAY)
template = cv2.imread('mario_coin.jpg', 0)
h, w = template.shape[:2]

res = cv2.matchTemplate(img_gray, template, cv2.TM_CCOEFF_NORMED)
threshold = 0.8
# Keep every position whose matching score is at least 0.8 (80%)
loc = np.where(res >= threshold)
# np.where returns (rows, cols); [::-1] turns that into (x, y) order,
# and * unpacks the two arrays into zip, which pairs them into points
for pt in zip(*loc[::-1]):
    bottom_right = (pt[0] + w, pt[1] + h)
    cv2.rectangle(img_rgb, pt, bottom_right, (0, 0, 255), 2)

cv2.imshow('img_rgb', img_rgb)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

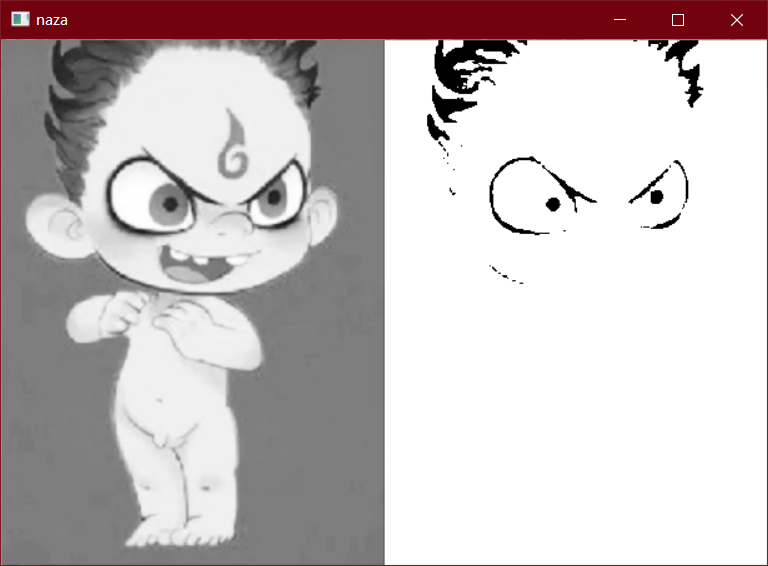

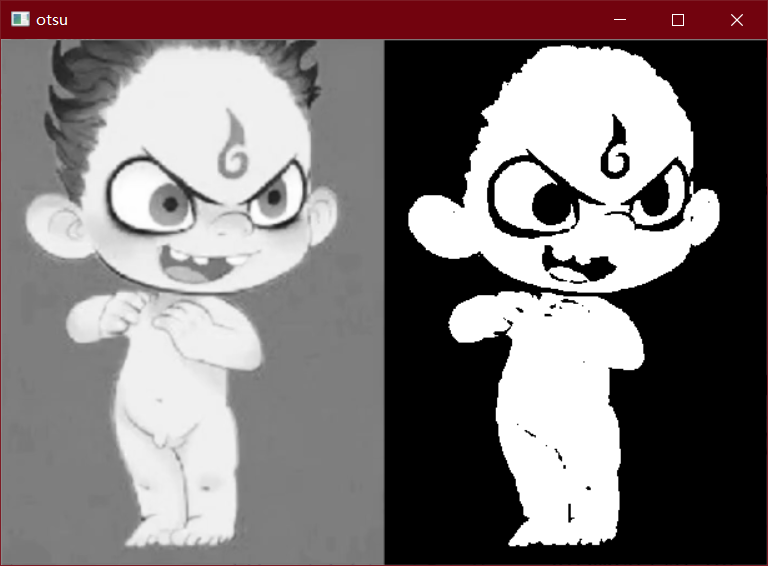
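The `zip(*loc[::-1])` idiom in the multi-object code is easy to misread, so here it is on a made-up 2×3 score matrix using NumPy only. `np.where` returns a tuple (rows, cols); reversing it gives (cols, rows), which is the (x, y) order OpenCV drawing functions expect; `*` passes the two arrays to `zip` as separate arguments.

```python
import numpy as np

res = np.array([[0.1, 0.85, 0.2],
                [0.9, 0.3, 0.81]])
loc = np.where(res >= 0.8)               # (rows, cols) = ([0, 1, 1], [1, 0, 2])
points = [(int(x), int(y)) for x, y in zip(*loc[::-1])]
print(points)                            # [(1, 0), (0, 1), (2, 1)] as (x, y)
```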

2. Otsu threshold

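When a suitable threshold cannot be chosen by hand, Otsu's method computes one automatically.

Otsu's method works best on images whose grayscale histogram is bimodal, i.e. has two distinct peaks (for example foreground and background). It picks the threshold that best separates the two peaks by maximizing the variance between the two resulting pixel classes.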
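The criterion can be written out directly. This NumPy sketch computes the between-class variance for every candidate threshold on a synthetic bimodal image and keeps the best one. It illustrates the idea and is not OpenCV's code, so its value may differ slightly from what `cv2.threshold(..., cv2.THRESH_OTSU)` returns; `otsu_threshold` is a hypothetical helper.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the t that maximizes the between-class variance.
    Pixels <= t form class 0, pixels > t form class 1."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()                 # normalized histogram
    omega = np.cumsum(prob)                  # weight of class 0 for each t
    mu = np.cumsum(prob * np.arange(256))    # cumulative (unnormalized) mean of class 0
    mu_total = mu[-1]                        # mean gray level of the whole image
    denom = omega * (1.0 - omega)
    sigma_b2 = np.zeros(256)
    valid = denom > 1e-12                    # skip t where one class is empty
    sigma_b2[valid] = (mu_total * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b2))

rng = np.random.default_rng(0)
dark = rng.normal(60, 10, 5000)              # background peak
bright = rng.normal(190, 10, 5000)           # foreground peak
gray = np.clip(np.concatenate([dark, bright]), 0, 255).astype(np.uint8).reshape(100, 100)

t = otsu_threshold(gray)
print(t)                                     # falls in the gap between the two peaks
binary = np.where(gray > t, 255, 0).astype(np.uint8)   # same rule as THRESH_BINARY
```

The expression `(mu_total * omega - mu) ** 2 / (omega * (1 - omega))` is the usual rearrangement of ω0·ω1·(μ0 − μ1)², which avoids dividing by each class weight separately.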

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

naza = cv2.imread('naza.png')
naza_gray = cv2.cvtColor(naza, cv2.COLOR_BGR2GRAY)

# Plot the grayscale histogram to check whether it is bimodal
_ = plt.hist(naza_gray.ravel(), bins=256, range=[0, 256])
plt.show()

# Ordinary thresholding with a hand-picked threshold of 80
ret, dst = cv2.threshold(naza_gray, 80, 255, cv2.THRESH_BINARY)
cv2.imshow('naza', np.hstack((naza_gray, dst)))

# Otsu thresholding: the threshold argument (0) is ignored,
# and the computed threshold is returned in ret
ret, dst = cv2.threshold(naza_gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
print(ret)
cv2.imshow('otsu', np.hstack((naza_gray, dst)))

cv2.waitKey(0)
cv2.destroyAllWindows()
```

3. Credit card digit recognition

Basic idea:

The general idea is to extract each digit from the credit card and match it against the 10 digit templates (0 to 9):

- First, process the template image to obtain the template of each digit and its corresponding digit ID

- Then, preprocess the credit card image with a series of operations to extract the regions that contain the digits

- Finally, take out each digit and match it against the 10 digit templates
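A minimal sketch of the bookkeeping behind the first and last steps above, assuming the digits have already been cut out and resized to a common size (in OpenCV that would typically involve `cv2.findContours`, `cv2.boundingRect` and `cv2.resize`, which are not shown). The helpers `assign_ids` and `recognize` are hypothetical names, and the random arrays stand in for real digit images. The score is the TM_CCOEFF_NORMED formula at a single position, which is what `cv2.matchTemplate` reduces to when the ROI and the template have the same size.

```python
import numpy as np

def ccoeff_normed(a, b):
    """TM_CCOEFF_NORMED score for two equal-size images (a single position)."""
    a = a.astype(np.float64) - a.mean()
    b = b.astype(np.float64) - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

def assign_ids(boxes):
    """Hypothetical: sort template boxes (x, y, w, h) left to right, index = digit ID.
    Assumes the template image shows the digits 0-9 in order."""
    return {digit: box for digit, box in enumerate(sorted(boxes, key=lambda b: b[0]))}

def recognize(roi, digit_templates):
    """Hypothetical: return the digit whose template scores highest against roi.
    Assumes roi and all templates already have the same shape."""
    scores = {d: ccoeff_normed(roi, t) for d, t in digit_templates.items()}
    return max(scores, key=scores.get)

# Stand-in data: 10 random "digit templates" of an arbitrary 20x14 size
rng = np.random.default_rng(2)
digit_templates = {d: rng.integers(0, 256, size=(20, 14)).astype(np.uint8) for d in range(10)}

# A noisy copy of template 7 plays the role of a digit cut from the card
noise = rng.integers(-20, 21, size=(20, 14))
noisy_seven = np.clip(digit_templates[7].astype(np.int64) + noise, 0, 255).astype(np.uint8)

print(recognize(noisy_seven, digit_templates))   # 7
```

Sorting by the x coordinate is what makes the template IDs line up with the digits 0 to 9, assuming they appear left to right in the template image.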