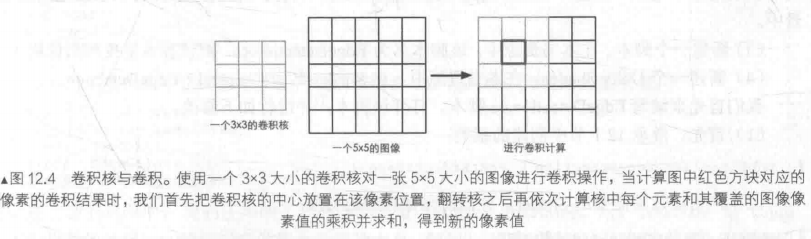

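The principle is to convolve the image with an edge detection operator. In image processing, convolution means applying a convolution kernel to every pixel of an image: the kernel is centered on the pixel, each neighboring pixel value is multiplied by the corresponding kernel weight, and the products are summed to give the new value.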
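To make the idea concrete, here is a minimal Python sketch (not Unity code) of applying a 3×3 kernel at a single pixel. The names convolve_at, box and img, and the choice to clamp coordinates at the image border, are illustrative assumptions. Like the shader later in this article, it does not flip the kernel, so strictly speaking it computes a cross-correlation, which is the usual meaning of "convolution" in this context.

```python
def convolve_at(image, kernel, x, y):
    """Weighted sum of the 3x3 neighborhood centered on pixel (x, y).

    image is a list of rows of grayscale values; kernel is a 3x3 list.
    Out-of-range neighbors are clamped to the nearest border pixel
    (an assumption made for this sketch).
    """
    height = len(image)
    width = len(image[0])
    total = 0.0
    for ky in range(3):
        for kx in range(3):
            # Neighbor coordinates, clamped to the image bounds
            sx = min(max(x + kx - 1, 0), width - 1)
            sy = min(max(y + ky - 1, 0), height - 1)
            # Multiply the neighbor by its kernel weight and accumulate
            total += image[sy][sx] * kernel[ky][kx]
    return total


# A 3x3 box-blur kernel: all nine neighbors weighted equally
box = [[1 / 9] * 3 for _ in range(3)]

img = [
    [0, 0, 9],
    [0, 0, 9],
    [0, 0, 9],
]

print(convolve_at(img, box, 1, 1))  # mean of the whole 3x3 image, about 3
```

Swapping box for a different 3×3 kernel, such as the Sobel kernels, changes what the weighted sum measures without changing the procedure.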

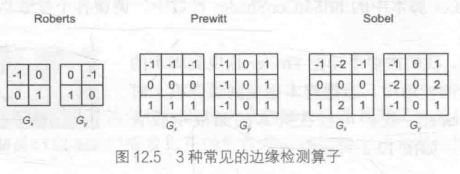

Common edge detection operators include Roberts, Prewitt, and Sobel.

Each of these operators contains convolution kernels in two directions, used to detect edge information in the horizontal and vertical directions respectively. During edge detection, we convolve each pixel with both kernels to obtain the gradient values Gx and Gy in the two directions, and the overall gradient G is then calculated as:

$$G = \sqrt{G_x^2 + G_y^2}$$
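As a quick check of the formula, the following Python sketch computes Gx, Gy and G for a 3×3 grayscale patch. It uses the Sobel kernels, with the same weights as the Gx and Gy arrays in the shader. The names SOBEL_X, SOBEL_Y, sobel_gradient and vertical_edge are hypothetical, chosen for illustration.

```python
import math

# Sobel kernels, flattened row by row (same layout as Gx/Gy in the shader)
SOBEL_X = [-1, 0, 1,
           -2, 0, 2,
           -1, 0, 1]
SOBEL_Y = [-1, -2, -1,
            0,  0,  0,
            1,  2,  1]


def sobel_gradient(patch):
    """Return (gx, gy, g) for a 3x3 grayscale patch given as 9 values."""
    gx = sum(p * w for p, w in zip(patch, SOBEL_X))
    gy = sum(p * w for p, w in zip(patch, SOBEL_Y))
    g = math.sqrt(gx * gx + gy * gy)  # G = sqrt(Gx^2 + Gy^2)
    return gx, gy, g


# A vertical edge: dark on the left, bright on the right
vertical_edge = [0, 0, 1,
                 0, 0, 1,
                 0, 0, 1]
print(sobel_gradient(vertical_edge))  # (4, 0, 4.0)
```

Only the horizontal kernel responds here, because the brightness changes from left to right but not from top to bottom.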

Because this calculation involves a square root, for performance reasons we sometimes use absolute values instead:

$$G = |G_x| + |G_y|$$
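The two formulas can be compared with a small Python sketch (the function names are illustrative). Since (|Gx| + |Gy|)² = Gx² + Gy² + 2|Gx·Gy|, the approximation is never smaller than the exact magnitude. It equals the exact value when one component is zero and is at most √2 times larger, which happens when |Gx| = |Gy|.

```python
import math


def magnitude_exact(gx, gy):
    """G = sqrt(Gx^2 + Gy^2)"""
    return math.sqrt(gx * gx + gy * gy)


def magnitude_approx(gx, gy):
    """G ~= |Gx| + |Gy|: no square root, only absolute values and an add"""
    return abs(gx) + abs(gy)


for gx, gy in [(4, 0), (3, 4), (-2, 2)]:
    exact = magnitude_exact(gx, gy)
    approx = magnitude_approx(gx, gy)
    print(f"Gx={gx:>2} Gy={gy:>2} exact={exact:.3f} approx={approx} "
          f"ratio={approx / exact:.3f}")
```

For judging "more or less likely to be an edge", this bounded overestimate is usually acceptable.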

Once the gradient G is obtained, we can determine which pixels lie on edges: the greater the gradient value, the more likely the pixel is an edge point.
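The shader in this article keeps the gradient as a continuous value. A common way to turn it into a yes/no decision is thresholding. The Python sketch below makes some assumptions: EDGE_THRESHOLD is a hypothetical value, only interior pixels are processed (so the mask is two pixels smaller in each dimension), and |Gx| + |Gy| serves as the gradient.

```python
# Hypothetical threshold: gradients above it are treated as edges
EDGE_THRESHOLD = 1.0

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def gradient_at(image, x, y):
    """|Gx| + |Gy| at an interior pixel (x, y) of a grayscale image."""
    gx = gy = 0.0
    for ky in range(3):
        for kx in range(3):
            p = image[y + ky - 1][x + kx - 1]
            gx += p * SOBEL_X[ky][kx]
            gy += p * SOBEL_Y[ky][kx]
    return abs(gx) + abs(gy)


def edge_mask(image, threshold=EDGE_THRESHOLD):
    """1 where an interior pixel's gradient exceeds the threshold, else 0."""
    h, w = len(image), len(image[0])
    return [[1 if gradient_at(image, x, y) > threshold else 0
             for x in range(1, w - 1)]
            for y in range(1, h - 1)]


img = [[0, 0, 0, 1, 1],
       [0, 0, 0, 1, 1],
       [0, 0, 0, 1, 1],
       [0, 0, 0, 1, 1]]
for row in edge_mask(img):
    print(row)
```

The two mask columns set to 1 are the pixels whose 3×3 neighborhood straddles the dark-to-bright boundary.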

Post-processing script:

```csharp
using UnityEngine;
using System.Collections;

// Inherit from PostEffectsBase, the post-processing base class that
// provides CheckShaderAndCreateMaterial()
public class EdgeDetection : PostEffectsBase {

    // Shader assigned in the Inspector, and the material created from it
    public Shader edgeDetectShader;
    private Material edgeDetectMaterial = null;

    public Material material {
        get {
            edgeDetectMaterial = CheckShaderAndCreateMaterial(edgeDetectShader, edgeDetectMaterial);
            return edgeDetectMaterial;
        }
    }

    // Blend factor: at 0 the edges are overlaid on the original rendered image,
    // at 1 only the edges are shown (on backgroundColor)
    [Range(0.0f, 1.0f)]
    public float edgesOnly = 0.0f;

    public Color edgeColor = Color.black;        // stroke color
    public Color backgroundColor = Color.white;  // background color when only edges are shown

    // Called by Unity after the camera has finished rendering
    void OnRenderImage(RenderTexture src, RenderTexture dest) {
        if (material != null) {
            // Pass the script parameters to the shader
            material.SetFloat("_EdgeOnly", edgesOnly);
            material.SetColor("_EdgeColor", edgeColor);
            material.SetColor("_BackgroundColor", backgroundColor);
            Graphics.Blit(src, dest, material);
        } else {
            // No valid material: copy the image through unchanged
            Graphics.Blit(src, dest);
        }
    }
}
```

Shader code is as follows:

```hlsl
Shader "Unity Shaders Book/Chapter 12/Edge Detection" {
    Properties {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _EdgeOnly ("Edge Only", Float) = 1.0
        _EdgeColor ("Edge Color", Color) = (0, 0, 0, 1)
        _BackgroundColor ("Background Color", Color) = (1, 1, 1, 1)
    }
    SubShader {
        Pass {
            // Usual render state for a full-screen post-processing pass
            ZTest Always Cull Off ZWrite Off

            CGPROGRAM

            #include "UnityCG.cginc"

            #pragma vertex vert
            #pragma fragment fragSobel

            sampler2D _MainTex;
            uniform half4 _MainTex_TexelSize;  // (1/width, 1/height, width, height) of _MainTex
            fixed _EdgeOnly;
            fixed4 _EdgeColor;
            fixed4 _BackgroundColor;

            struct v2f {
                float4 pos : SV_POSITION;
                half2 uv[9] : TEXCOORD0;  // 3x3 neighborhood sampled by the Sobel operator
            };

            v2f vert(appdata_img v) {
                v2f o;
                o.pos = UnityObjectToClipPos(v.vertex);

                half2 uv = v.texcoord;

                // Texture coordinates of the 3x3 neighborhood, row by row
                o.uv[0] = uv + _MainTex_TexelSize.xy * half2(-1, -1);
                o.uv[1] = uv + _MainTex_TexelSize.xy * half2(0, -1);
                o.uv[2] = uv + _MainTex_TexelSize.xy * half2(1, -1);
                o.uv[3] = uv + _MainTex_TexelSize.xy * half2(-1, 0);
                o.uv[4] = uv + _MainTex_TexelSize.xy * half2(0, 0);
                o.uv[5] = uv + _MainTex_TexelSize.xy * half2(1, 0);
                o.uv[6] = uv + _MainTex_TexelSize.xy * half2(-1, 1);
                o.uv[7] = uv + _MainTex_TexelSize.xy * half2(0, 1);
                o.uv[8] = uv + _MainTex_TexelSize.xy * half2(1, 1);

                return o;
            }

            // Luminance of an RGB color
            fixed luminance(fixed4 color) {
                return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b;
            }

            // Sobel operator
            half Sobel(v2f i) {
                const half Gx[9] = {-1, 0, 1,
                                    -2, 0, 2,
                                    -1, 0, 1};
                const half Gy[9] = {-1, -2, -1,
                                     0,  0,  0,
                                     1,  2,  1};

                half texColor;
                half edgeX = 0;
                half edgeY = 0;
                for (int it = 0; it < 9; it++) {
                    texColor = luminance(tex2D(_MainTex, i.uv[it]));
                    edgeX += texColor * Gx[it];
                    edgeY += texColor * Gy[it];
                }

                // Smaller values mean stronger edges
                half edge = 1 - abs(edgeX) - abs(edgeY);
                return edge;
            }

            // Fragment shader
            fixed4 fragSobel(v2f i) : SV_Target {
                half edge = Sobel(i);

                fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, i.uv[4]), edge);
                fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge);
                return lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly);
            }

            ENDCG
        }
    }
    FallBack Off
}
```

In the code above, we also declare a new variable, _MainTex_TexelSize. xxx_TexelSize is a variable provided by Unity that holds the texel size (the size of one texture element) of the texture xxx. For example, for a 512×512 texture, the value is about 0.001953 (i.e. 1/512). Because convolution needs to sample the texture at neighboring positions, we use _MainTex_TexelSize to compute the texture coordinates of each neighboring texel.
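The following Python sketch mirrors that calculation. texel_size returns what Unity stores in the x and y components of _MainTex_TexelSize; the full vector is (1/width, 1/height, width, height). neighbor_uvs reproduces the nine offsets used in the vertex shader. Both function names are illustrative.

```python
def texel_size(width, height):
    """(1/width, 1/height): the .xy part of xxx_TexelSize."""
    return 1.0 / width, 1.0 / height


# Offsets in the same order as o.uv[0] .. o.uv[8] in the vertex shader
OFFSETS = [(-1, -1), (0, -1), (1, -1),
           (-1,  0), (0,  0), (1,  0),
           (-1,  1), (0,  1), (1,  1)]


def neighbor_uvs(u, v, width, height):
    """Texture coordinates of the 3x3 neighborhood around (u, v)."""
    tx, ty = texel_size(width, height)
    return [(u + dx * tx, v + dy * ty) for dx, dy in OFFSETS]


print(texel_size(512, 512))  # (0.001953125, 0.001953125)
print(neighbor_uvs(0.5, 0.5, 512, 512)[0])  # one texel left of and below the center
```

For a non-square texture such as 1920×1080 the two components differ. That is why the shader multiplies by the full .xy vector rather than a single scalar.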

Vertex shader:

In the v2f structure we declare an array of 9 texture coordinates, corresponding to the 3×3 neighborhood sampled by the Sobel operator. Computing these sampling coordinates in the vertex shader rather than in the fragment shader reduces the amount of computation and improves performance. Because values passed from the vertex shader to the fragment shader are interpolated linearly, moving the computation does not change the resulting texture coordinates.
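We can check numerically why moving the offsets into the vertex shader is safe. The values reaching the fragment shader are weighted averages of the vertex values, with weights that sum to 1, so adding a constant offset before or after interpolation gives the same result. Here is a minimal Python sketch with two vertices; lerp, TEXEL, OFFSET, u0 and u1 are illustrative names.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b (weights 1 - t and t sum to 1)."""
    return a + (b - a) * t


TEXEL = 1.0 / 512    # texel size of a hypothetical 512-pixel-wide texture
OFFSET = -1 * TEXEL  # e.g. the x offset of uv[0], one texel to the left

u0, u1 = 0.0, 1.0    # uv.x at two vertices of a full-screen quad

for t in [0.0, 0.25, 0.5, 0.9]:
    # What the shader does: offset each vertex's uv, then interpolate
    per_vertex = lerp(u0 + OFFSET, u1 + OFFSET, t)
    # The alternative: interpolate first, then offset in the fragment shader
    per_fragment = lerp(u0, u1, t) + OFFSET
    print(t, per_vertex, per_fragment)  # equal up to floating-point rounding
```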

Fragment shader:

First, we call the Sobel function to compute the gradient value edge for the current pixel. Using this value, we compute two colors: the edge drawn over the original image (withEdgeColor) and the edge drawn over a solid background color (onlyEdgeColor). We then interpolate between the two using _EdgeOnly to obtain the final pixel value. The Sobel function uses the Sobel operator to detect edges in the original image.
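The blending in fragSobel can be reproduced on the CPU with a small Python sketch. Here lerp mimics the component-wise Cg/HLSL lerp and colors are RGBA tuples. The sketch assumes edge already lies in [0, 1]; the shader itself does not clamp it. The names frag_sobel, BLACK, WHITE and RED are illustrative.

```python
def lerp(a, b, t):
    """Component-wise linear interpolation, like lerp in Cg/HLSL."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def frag_sobel(edge, tex_color, edge_color, background_color, edge_only):
    # edge near 0 -> edge pixel, edge near 1 -> flat region
    with_edge_color = lerp(edge_color, tex_color, edge)
    only_edge_color = lerp(edge_color, background_color, edge)
    return lerp(with_edge_color, only_edge_color, edge_only)


BLACK = (0.0, 0.0, 0.0, 1.0)
WHITE = (1.0, 1.0, 1.0, 1.0)
RED = (1.0, 0.0, 0.0, 1.0)  # stands in for the original image color

print(frag_sobel(0.0, RED, BLACK, WHITE, 0.0))  # edge pixel: edge color
print(frag_sobel(1.0, RED, BLACK, WHITE, 0.0))  # flat pixel, overlay mode: original color
print(frag_sobel(1.0, RED, BLACK, WHITE, 1.0))  # flat pixel, edges-only mode: background
```

Intermediate values of edgesOnly in the script blend smoothly between the two display modes.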

We first define the convolution kernels Gx and Gy for the horizontal and vertical directions. We then sample the 9 pixels in turn, compute their luminance, multiply each luminance by the corresponding weights in Gx and Gy, and accumulate the products into the respective gradient values. Finally, we subtract the absolute values of both gradient values from 1 to obtain edge. The smaller the edge value, the more likely the pixel is an edge point. This completes the edge detection process.
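For experimenting with inputs outside Unity, here is a Python port of the Sobel function. It follows the shader line by line, takes colors as RGBA tuples, and lists the samples in the same order as uv[0] to uv[8]. It is a CPU-side sketch, not a replacement for the shader; the sample names flat and edge are illustrative.

```python
def luminance(color):
    """Same weights as the shader's luminance() helper (RGB only)."""
    r, g, b = color[0], color[1], color[2]
    return 0.2125 * r + 0.7154 * g + 0.0721 * b


GX = [-1, 0, 1,
      -2, 0, 2,
      -1, 0, 1]
GY = [-1, -2, -1,
       0,  0,  0,
       1,  2,  1]


def sobel(samples):
    """samples: 9 RGBA colors in the order of uv[0] .. uv[8]."""
    edge_x = 0.0
    edge_y = 0.0
    for it in range(9):
        tex_color = luminance(samples[it])
        edge_x += tex_color * GX[it]
        edge_y += tex_color * GY[it]
    # Smaller result means a stronger edge; it is not clamped to [0, 1]
    return 1 - abs(edge_x) - abs(edge_y)


gray = (0.5, 0.5, 0.5, 1.0)
black = (0.0, 0.0, 0.0, 1.0)
white = (1.0, 1.0, 1.0, 1.0)

flat = [gray] * 9
edge = [black, gray, white] * 3  # left column black, right column white

print(sobel(flat))  # about 1.0: no edge
print(sobel(edge))  # about -3.0: strong vertical edge
```

A uniform neighborhood gives a value close to 1, so fragSobel keeps the original or background color. A black-to-white step drives the value well below 0, so the edge color dominates.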