Preface

Harbeth is a small collection of utilities and extensions built on Apple's Metal framework. It aims to make your Swift GPU code more concise and to let you prototype pipelines faster. This article introduces the design of GPU-based filters, covering graphics processing and filter creation 👒👒👒

Function list

🟣 At present, the most important features of the Metal module can be summarized as follows:

- Support operator and functional operations
- Support rapid filter design
- Support fast extension of output sources
- Support camera capture effects
- Support matrix convolution
- The filters are roughly divided into the following modules:
  - Blend: image blending
  - Blur: blur effects
  - ColorProcess: basic pixel color processing
  - Effect: effect processing
  - Lookup: lookup table filters
  - Matrix: matrix convolution filters
  - Shape: image shape and size operations
  - VisualEffect: dynamic visual effects
- In total, there are 100+ filters for you to use.

- Inject filters with zero intrusion into existing code

Original code:

```swift
ImageView.image = originImage
```

Injected filter code:

```swift
let filter = C7ColorMatrix4x4(matrix: Matrix4x4.sepia)
ImageView.image = try? originImage.make(filter: filter)
```

- Capture and generate pictures with the camera

```swift
// Inject an edge detection filter
var filter = C7EdgeGlow()
filter.lineColor = UIColor.blue

// Create the camera collector
let collector = C7FilterCollector(callback: {
    self.ImageView.image = $0
})
collector.captureSession.sessionPreset = AVCaptureSession.Preset.hd1280x720
collector.filter = filter
ImageView.layer.addSublayer(collector)
```
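To make the C7ColorMatrix4x4 example above more concrete, here is a CPU-side sketch of what a 4x4 color matrix filter is assumed to do conceptually: multiply each RGBA pixel by the matrix. `Pixel`, `apply`, and both matrices are hypothetical names and illustrative values. They are not Harbeth's API, and they are not the contents of `Matrix4x4.sepia`.

```swift
/// A plain RGBA pixel with components in 0...1 (hypothetical type for this sketch).
struct Pixel {
    var r: Float
    var g: Float
    var b: Float
    var a: Float
}

/// Multiplies an RGBA pixel by a row-major 4x4 matrix (hypothetical helper).
func apply(_ matrix: [[Float]], to pixel: Pixel) -> Pixel {
    let input = [pixel.r, pixel.g, pixel.b, pixel.a]
    // Each output channel is the dot product of one matrix row with the input pixel.
    let output = matrix.map { row in
        zip(row, input).reduce(Float(0)) { $0 + $1.0 * $1.1 }
    }
    return Pixel(r: output[0], g: output[1], b: output[2], a: output[3])
}

// The identity matrix leaves every pixel unchanged.
let identity: [[Float]] = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]

// Illustrative values: Rec. 601 luma weights in every color row give a grayscale result.
let grayscale: [[Float]] = [
    [0.299, 0.587, 0.114, 0],
    [0.299, 0.587, 0.114, 0],
    [0.299, 0.587, 0.114, 0],
    [0, 0, 0, 1],
]

let pixel = Pixel(r: 0.2, g: 0.4, b: 0.6, a: 1.0)
let unchanged = apply(identity, to: pixel)
let gray = apply(grayscale, to: pixel)
```

On the GPU the same multiplication would run once per pixel in a shader, which is why swapping the matrix is enough to get a different color effect.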

Main part

- Core module
  - C7FilterProtocol: every filter must conform to this protocol.
    - modifier: encoder type and the corresponding function name.
    - factors: sets and modifies the parameter factors, which need to be converted to Float.
    - otherInputTextures: multiple input sources, an array of MTLTexture.
    - outputSize: changes the size of the output image.
- Output module
  - C7FilterOutput: output content protocol, which all outputs must implement.
    - make: generates data according to the filter processing.
    - makeGroup: combines multiple filters. Note that the order in which filters are added may affect the resulting image.
  - C7FilterImage: image input source based on C7FilterOutput. The following modes only support encoders based on parallel computing.
  - C7FilterTexture: texture input source based on C7FilterOutput. The input texture is converted into a filter-processed texture.

Design filter

- Let's walk through how to design your first filter

- Implement the protocol C7FilterProtocol

```swift
public protocol C7FilterProtocol {
    /// Encoder type and corresponding function name
    ///
    /// Computing requires the corresponding `kernel` function name
    /// Rendering requires a `vertex` shader function name and a `fragment` shader function name
    var modifier: Modifier { get }

    /// Make buffer
    /// Parameter factors need to be converted to `Float` when set or modified.
    var factors: [Float] { get }

    /// Multiple input source extension
    /// Array containing `MTLTexture`
    var otherInputTextures: C7InputTextures { get }

    /// Change the size of the output image
    func outputSize(input size: C7Size) -> C7Size
}
```

- Write a kernel shader based on parallel computing.

- Configure the parameter factors to pass. Only the Float type is supported.

- Configure any additional required textures.

For example, design a "soul out of body" filter:

```swift
public struct C7SoulOut: C7FilterProtocol {

    /// The adjusted soul, from 0.0 to 1.0, with a default of 0.5
    public var soul: Float = 0.5
    public var maxScale: Float = 1.5
    public var maxAlpha: Float = 0.5

    public var modifier: Modifier {
        return .compute(kernel: "C7SoulOut")
    }

    public var factors: [Float] {
        return [soul, maxScale, maxAlpha]
    }

    public init() { }
}
```

- This filter requires three parameters:
  - soul: the adjusted soul, from 0.0 to 1.0. The default is 0.5.
  - maxScale: maximum soul scale
  - maxAlpha: maximum soul transparency

- Write the kernel function based on parallel computing

```metal
#include <metal_stdlib>
using namespace metal;

kernel void C7SoulOut(texture2d<half, access::write> outputTexture [[texture(0)]],
                      texture2d<half, access::sample> inputTexture [[texture(1)]],
                      constant float *soulPointer [[buffer(0)]],
                      constant float *maxScalePointer [[buffer(1)]],
                      constant float *maxAlphaPointer [[buffer(2)]],
                      uint2 grid [[thread_position_in_grid]]) {
    constexpr sampler quadSampler(mag_filter::linear, min_filter::linear);
    // Original color at this pixel
    const half4 inColor = inputTexture.read(grid);
    // Normalized coordinates of this pixel
    const float x = float(grid.x) / outputTexture.get_width();
    const float y = float(grid.y) / outputTexture.get_height();

    const half soul = half(*soulPointer);
    const half maxScale = half(*maxScalePointer);
    const half maxAlpha = half(*maxAlphaPointer);

    // The soul layer fades out and grows as `soul` increases
    const half alpha = maxAlpha * (1.0h - soul);
    const half scale = 1.0h + (maxScale - 1.0h) * soul;

    // Sample the enlarged soul layer, scaled around the image center
    const half soulX = 0.5h + (x - 0.5h) / scale;
    const half soulY = 0.5h + (y - 0.5h) / scale;
    const half4 soulMask = inputTexture.sample(quadSampler, float2(soulX, soulY));

    // Blend the original color with the soul layer
    const half4 outColor = inColor * (1.0h - alpha) + soulMask * alpha;

    outputTexture.write(outColor, grid);
}
```
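To check the kernel's arithmetic without a GPU, the per-pixel math can be mirrored on the CPU. The sketch below copies the alpha, scale, and coordinate formulas from the kernel above. `SoulOutParameters` and `soulCoordinate` are hypothetical names used only for this illustration and are not part of Harbeth.

```swift
/// CPU mirror of the per-pixel arithmetic in the C7SoulOut kernel (hypothetical helper type).
struct SoulOutParameters {
    var soul: Float = 0.5
    var maxScale: Float = 1.5
    var maxAlpha: Float = 0.5

    /// Blend weight of the enlarged "soul" layer. It fades out as `soul` grows.
    var alpha: Float {
        return maxAlpha * (1 - soul)
    }

    /// Scale of the soul layer. It grows from 1 to `maxScale` as `soul` goes from 0 to 1.
    var scale: Float {
        return 1 + (maxScale - 1) * soul
    }

    /// Maps a normalized output coordinate to the position where the soul layer is sampled.
    func soulCoordinate(x: Float, y: Float) -> (x: Float, y: Float) {
        // Scale around the image center (0.5, 0.5), exactly as the kernel does.
        return (x: 0.5 + (x - 0.5) / scale, y: 0.5 + (y - 0.5) / scale)
    }
}

let parameters = SoulOutParameters()
let rightEdge = parameters.soulCoordinate(x: 1.0, y: 0.5)
```

With the default values, `alpha` is 0.25 and `scale` is 1.25, so the soul layer is a 1.25x enlargement around the center blended in at 25%. The right edge of the output samples the input at x = 0.9.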

- It is simple to use. Because the design is based on a parallel computing pipeline, the image can be generated directly:

```swift
var filter = C7SoulOut()
filter.soul = 0.5
filter.maxScale = 2.0
/// Display directly in ImageView
ImageView.image = try? originImage.makeImage(filter: filter)
```

- The dynamic effect shown above is just as simple: add a timer and change the soul value over time.
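One possible way to drive that animation is to derive the soul value from elapsed time. The function below is a minimal sketch: `soulValue(at:period:)` is a hypothetical helper, and the one-second period is an assumed default rather than anything specified by the library.

```swift
import Foundation

/// Maps elapsed time to a soul value in 0..<1 that ramps up and then restarts
/// (hypothetical helper, not part of Harbeth).
func soulValue(at elapsed: TimeInterval, period: TimeInterval = 1.0) -> Float {
    // Position inside the current cycle, normalized to 0..<1.
    let phase = elapsed.truncatingRemainder(dividingBy: period) / period
    return Float(phase)
}

// In an app this could be driven by a timer. `filter`, `start`, `imageView`,
// and `originImage` are assumed to exist in the surrounding code:
//
// Timer.scheduledTimer(withTimeInterval: 1.0 / 30.0, repeats: true) { _ in
//     filter.soul = soulValue(at: Date().timeIntervalSince(start))
//     imageView.image = try? originImage.makeImage(filter: filter)
// }
```

Keeping the time-to-value mapping in a pure function makes it easy to try other curves, such as an ease-out, without touching the timer code.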

Advanced Usage

- Operator chain processing

```swift
/// 1. Convert to BGRA
let filter1 = C7Color2(with: .color2BGRA)

/// 2. Adjust granularity
var filter2 = C7Granularity()
filter2.grain = 0.8

/// 3. Adjust white balance
var filter3 = C7WhiteBalance()
filter3.temperature = 5555

/// 4. Adjust highlights and shadows
var filter4 = C7HighlightShadow()
filter4.shadows = 0.4
filter4.highlights = 0.5

/// 5. Combined operation
let AT = C7FilterTexture.init(texture: originImage.mt.toTexture()!)
let result = AT ->> filter1 ->> filter2 ->> filter3 ->> filter4

/// 6. Get results
filterImageView.image = result.outputImage()
```

- Batch operation processing

```swift
/// 1. Convert to RBGA
let filter1 = C7Color2(with: .color2RBGA)

/// 2. Adjust granularity
var filter2 = C7Granularity()
filter2.grain = 0.8

/// 3. Configure Soul effect
var filter3 = C7SoulOut()
filter3.soul = 0.7

/// 4. Combined operation
let group: [C7FilterProtocol] = [filter1, filter2, filter3]

/// 5. Get results
filterImageView.image = try? originImage.makeGroup(filters: group)
```

Both approaches handle multi-filter pipelines. Which one to use is a matter of preference. ✌️
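The two styles are equivalent in spirit: a left-associative chaining operator and a fold over an array of filters apply the same steps in the same order. The self-contained sketch below illustrates this with numbers instead of textures. `SketchFilter`, `FilterChainPrecedence`, and this `->>` definition are hypothetical stand-ins and are not Harbeth's actual implementation.

```swift
/// Hypothetical stand-in for a filter: a transform applied to a value.
struct SketchFilter {
    let apply: (Double) -> Double
}

// Left associativity makes `a ->> f ->> g` evaluate as `(a ->> f) ->> g`.
precedencegroup FilterChainPrecedence {
    associativity: left
}
infix operator ->> : FilterChainPrecedence

/// Applies `filter` to `input` and returns the result so that calls can be chained.
func ->> (input: Double, filter: SketchFilter) -> Double {
    return filter.apply(input)
}

let doubling = SketchFilter(apply: { $0 * 2 })
let addOne = SketchFilter(apply: { $0 + 1 })

// Operator chain style.
let chained = 3.0 ->> doubling ->> addOne

// Batch style: fold the same filters over the input, in array order.
let grouped = [doubling, addOne].reduce(3.0) { $1.apply($0) }

// Swapping the order changes the result, just as it can with image filters.
let reversed = 3.0 ->> addOne ->> doubling
```

Here `chained` and `grouped` both evaluate to 7, while `reversed` evaluates to 8, which is the same order dependence noted for makeGroup.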

CocoaPods

- To import the Metal module, add the following to your Podfile:

```ruby
pod 'Harbeth'
```

- To import the OpenCV image module, add the following to your Podfile:

```ruby
pod 'OpencvQueen'
```

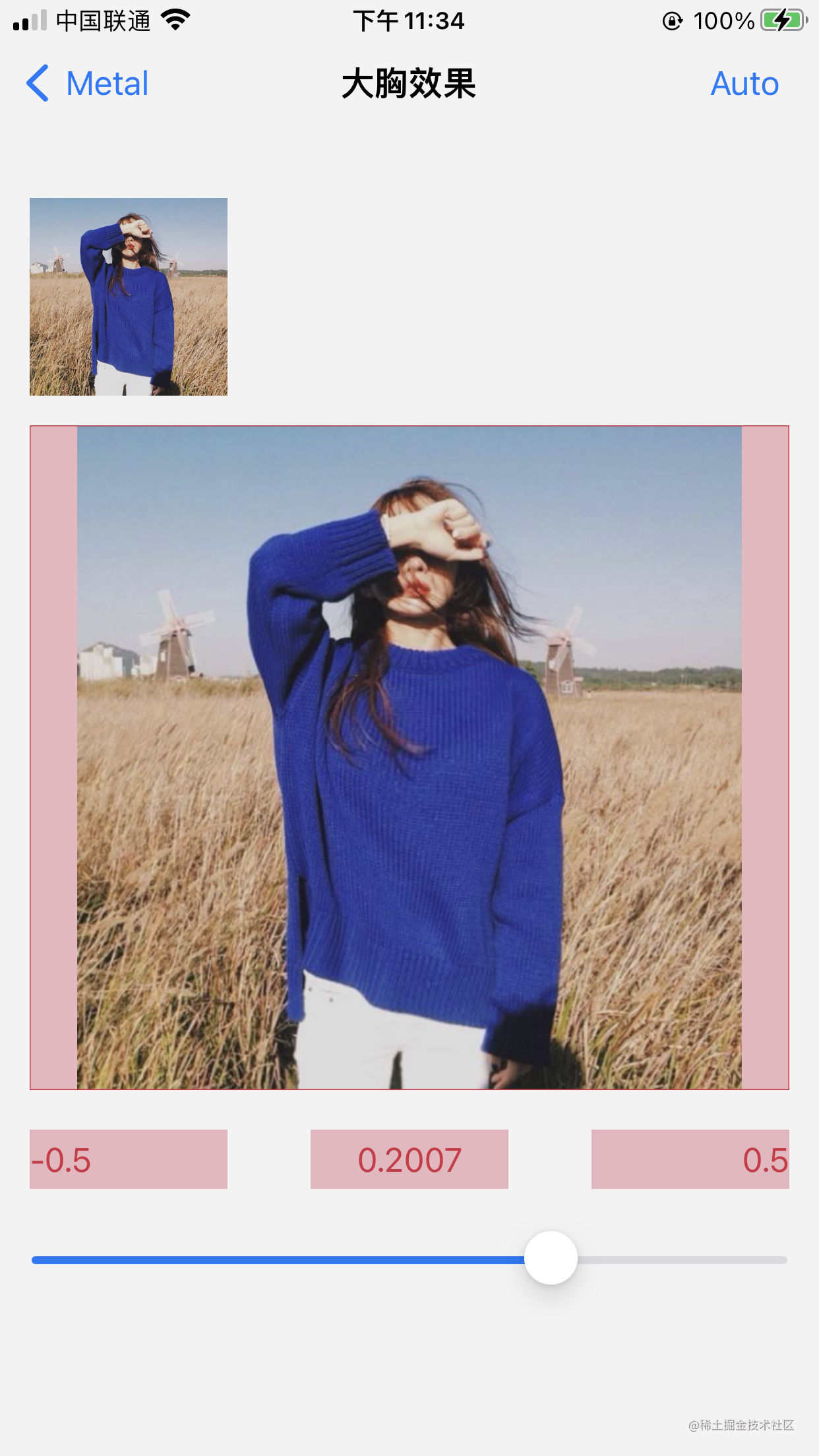

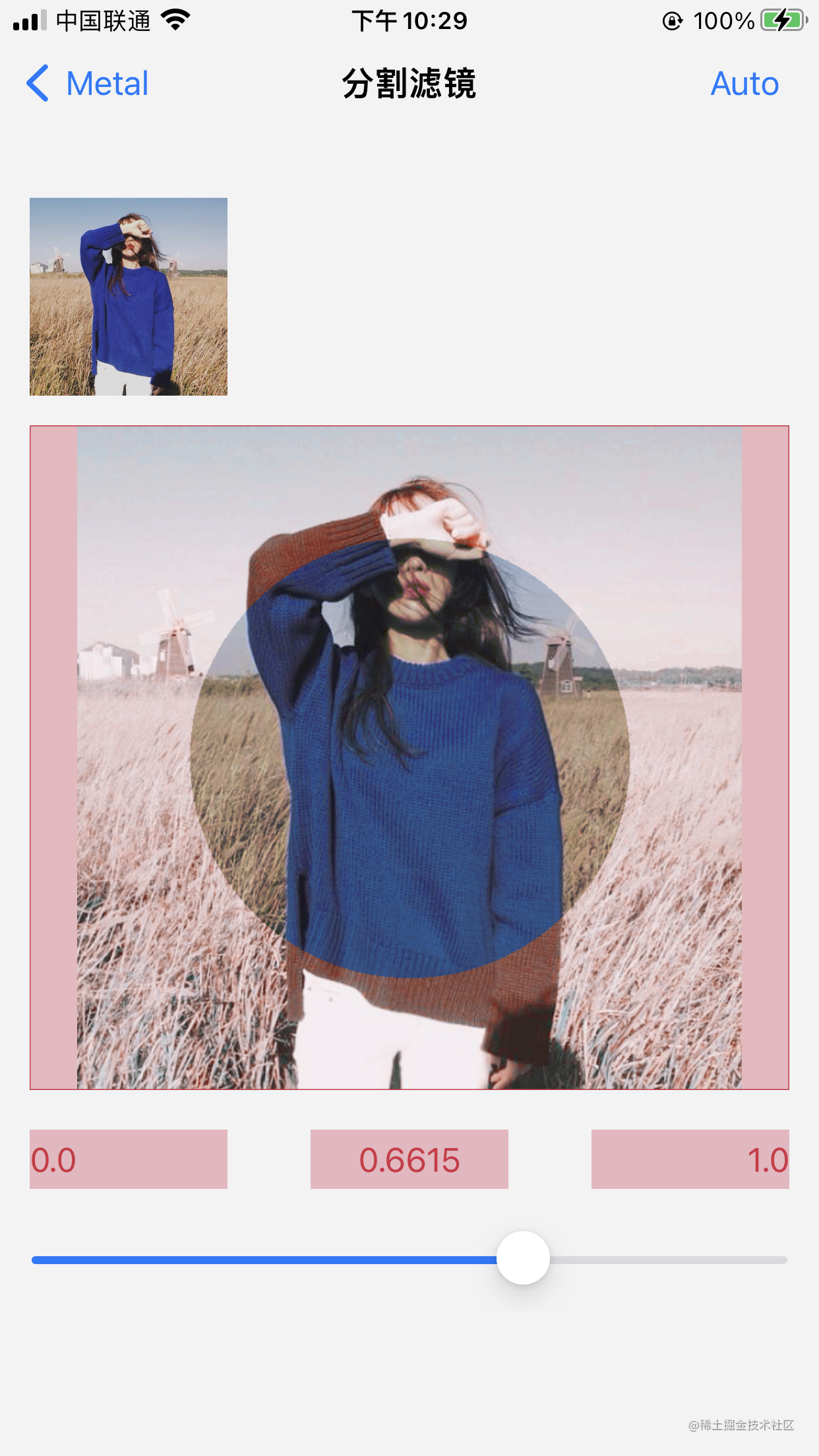

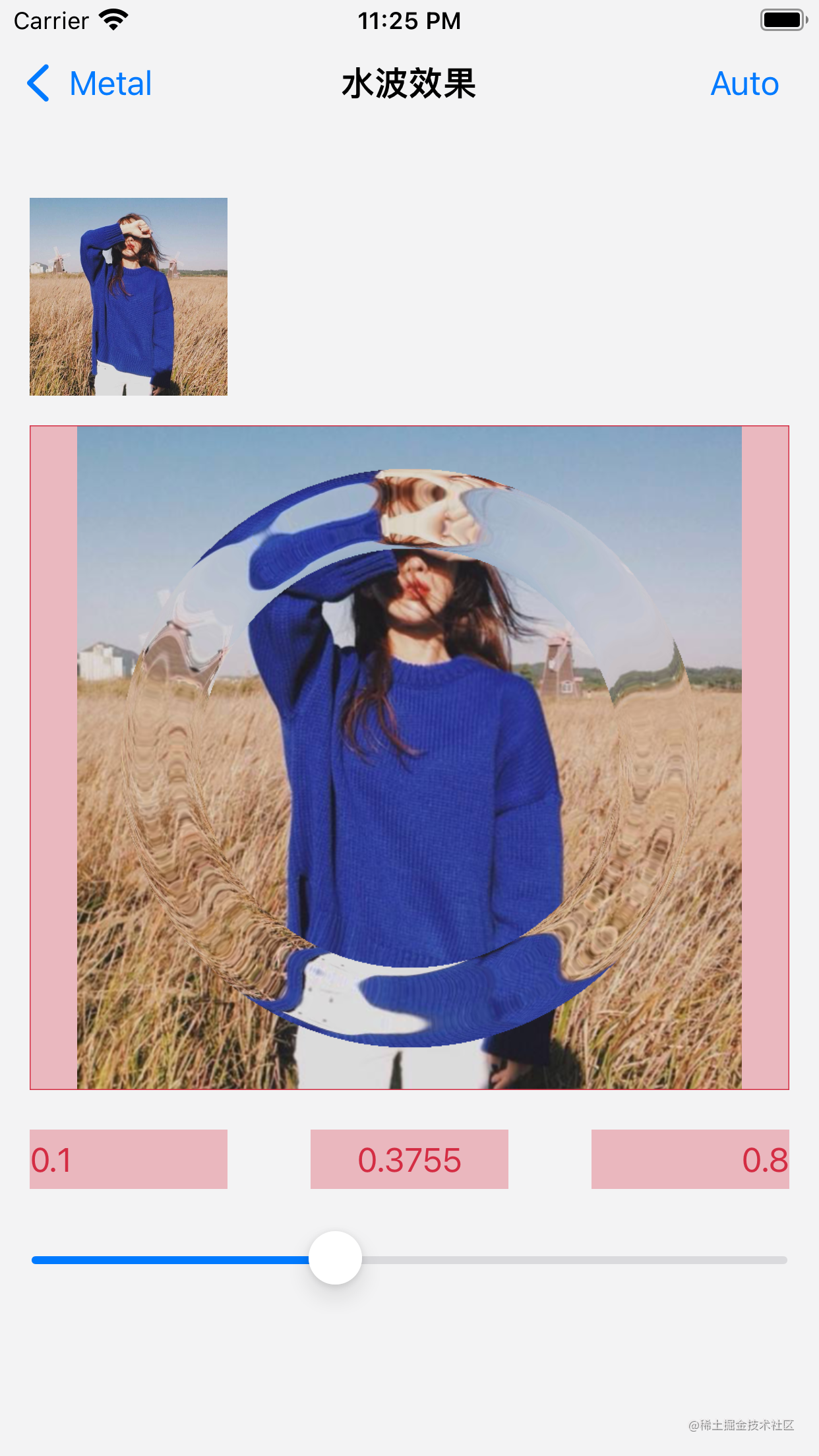

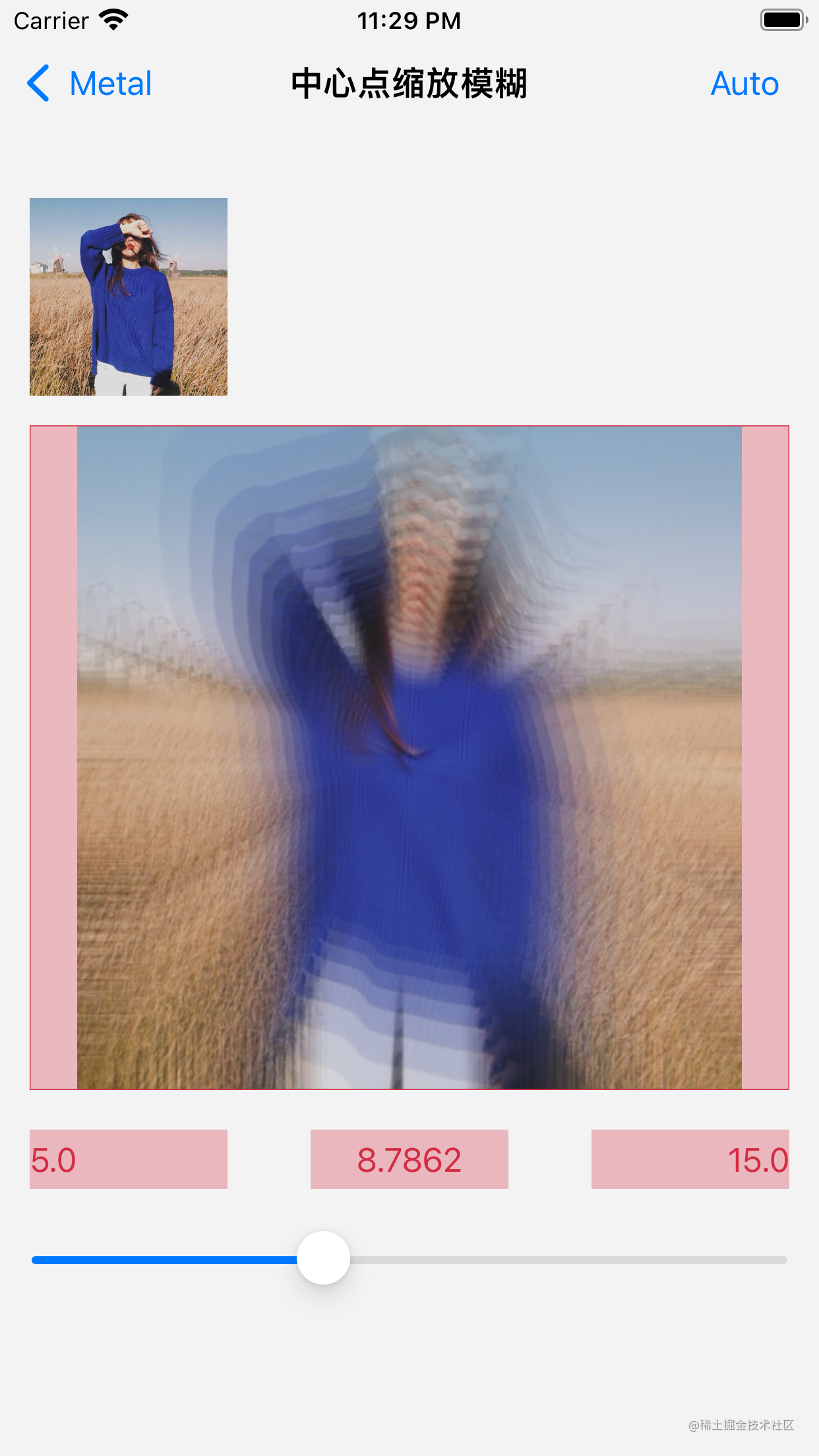

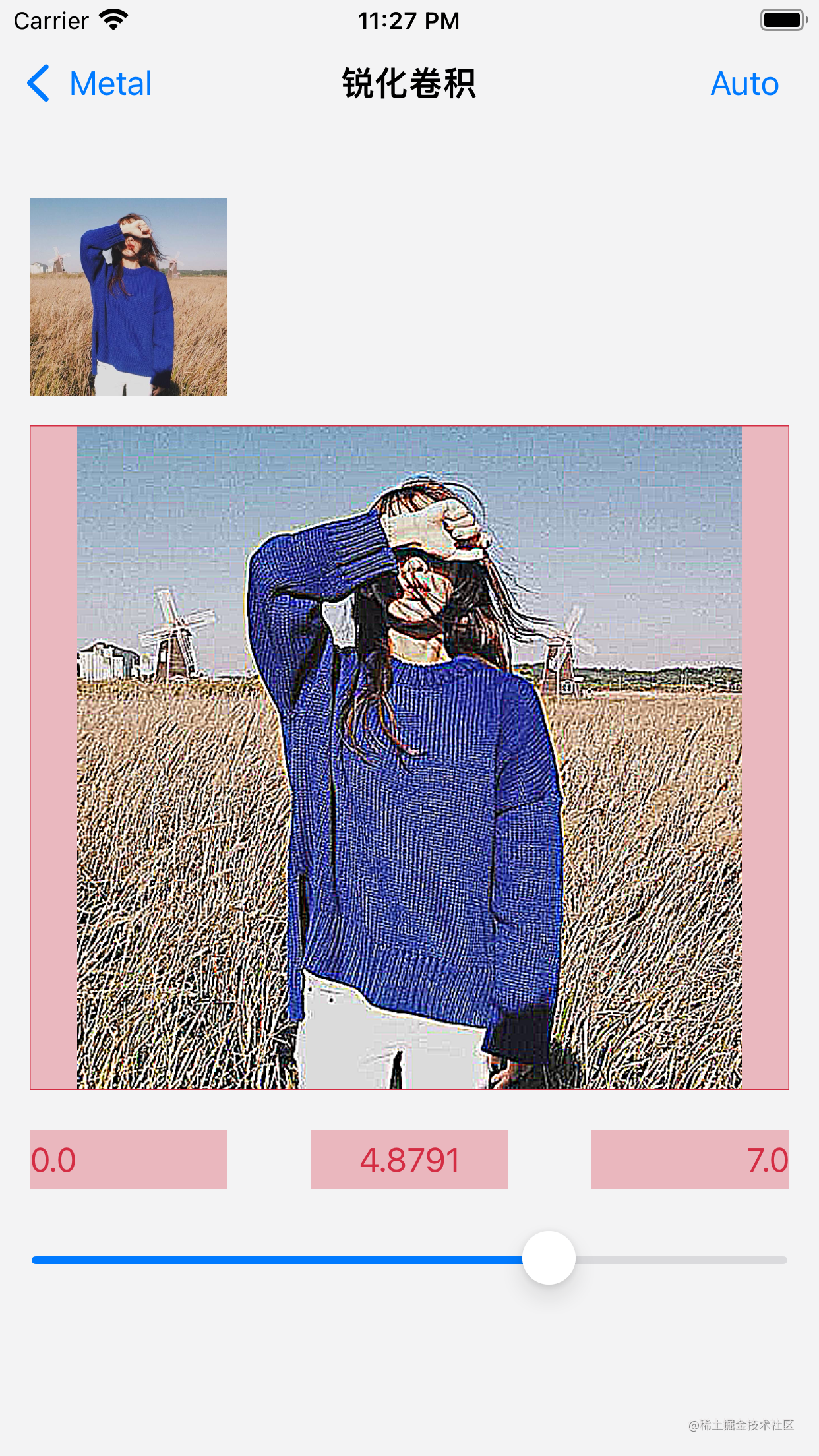

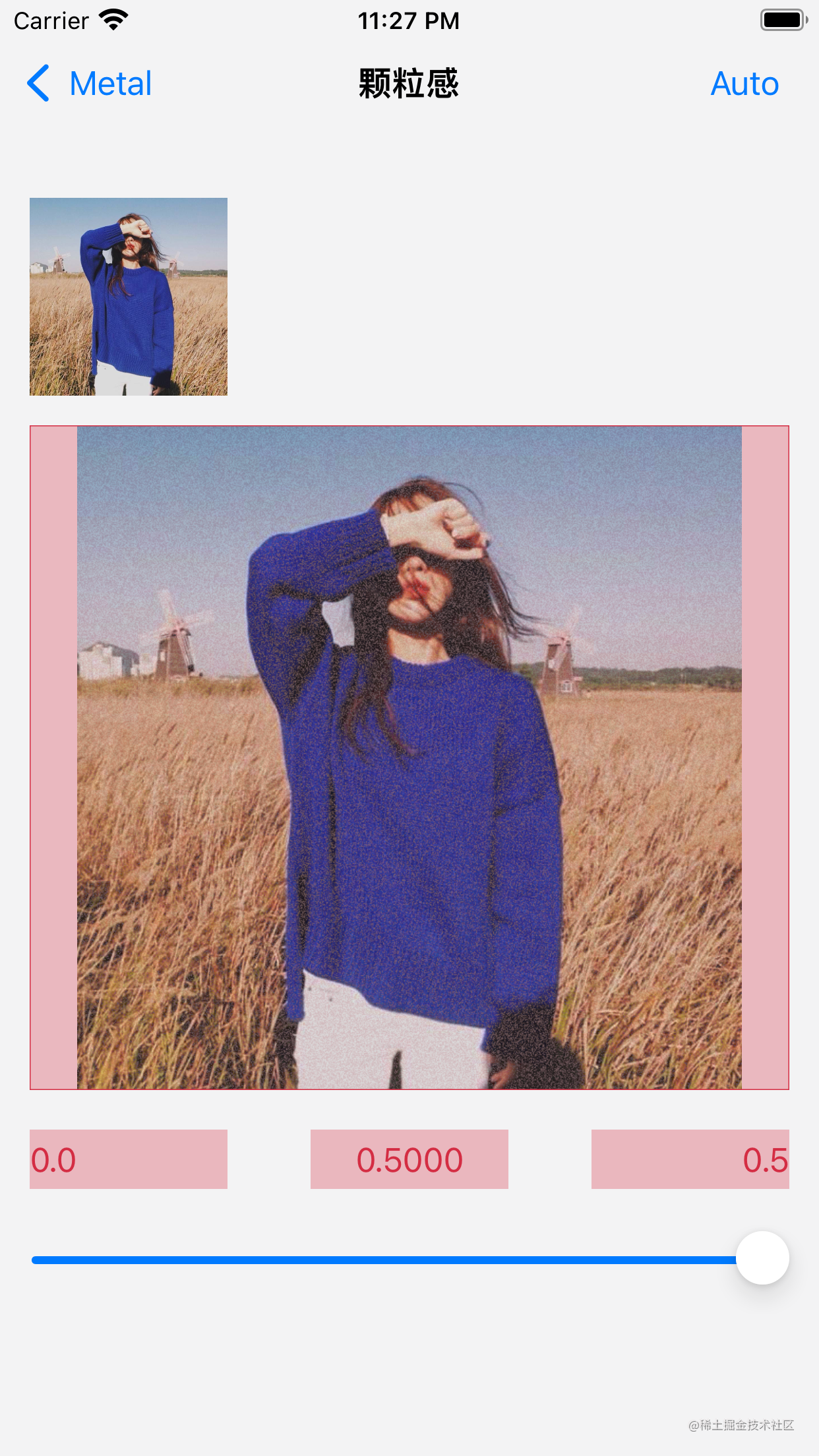

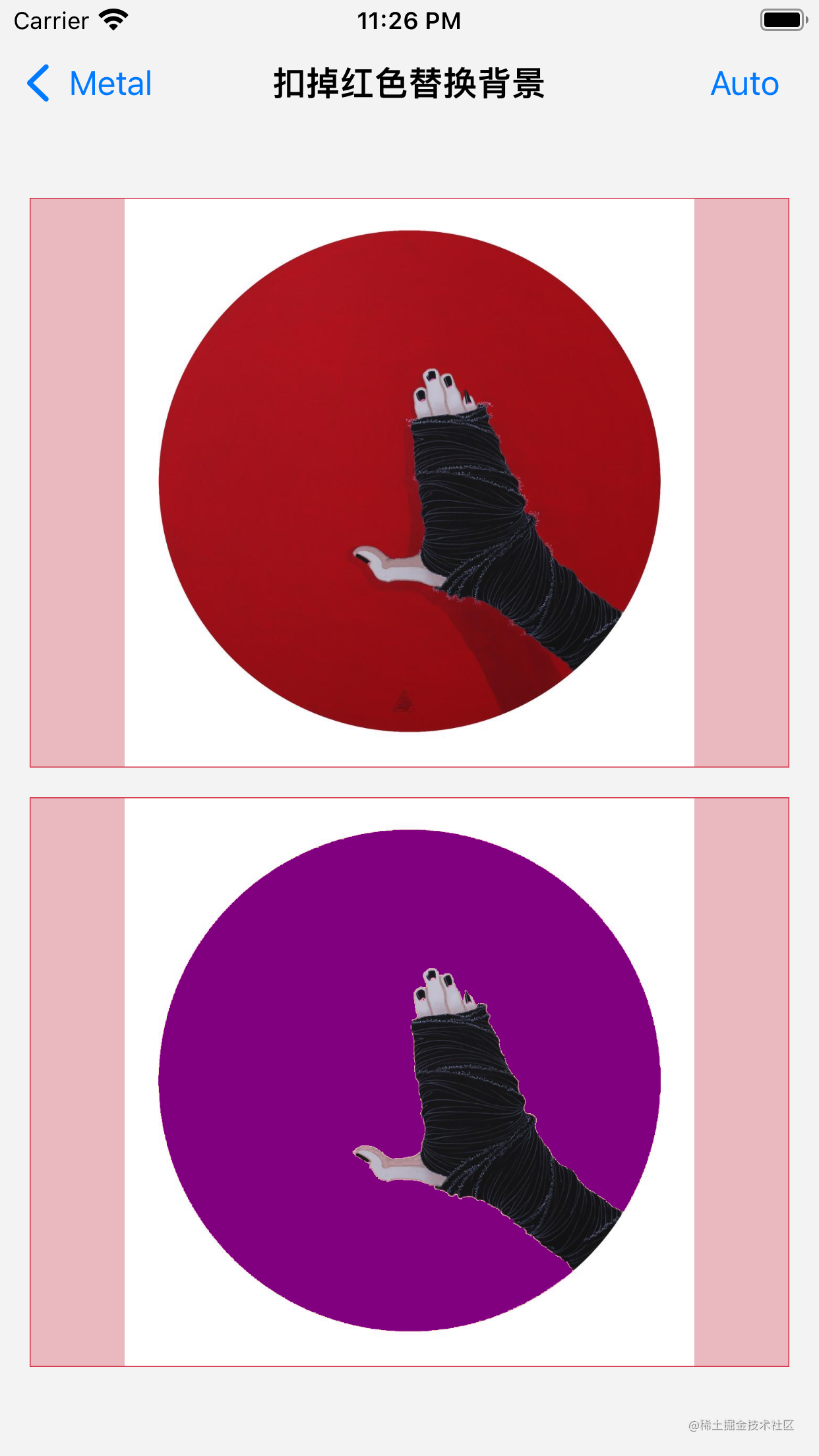

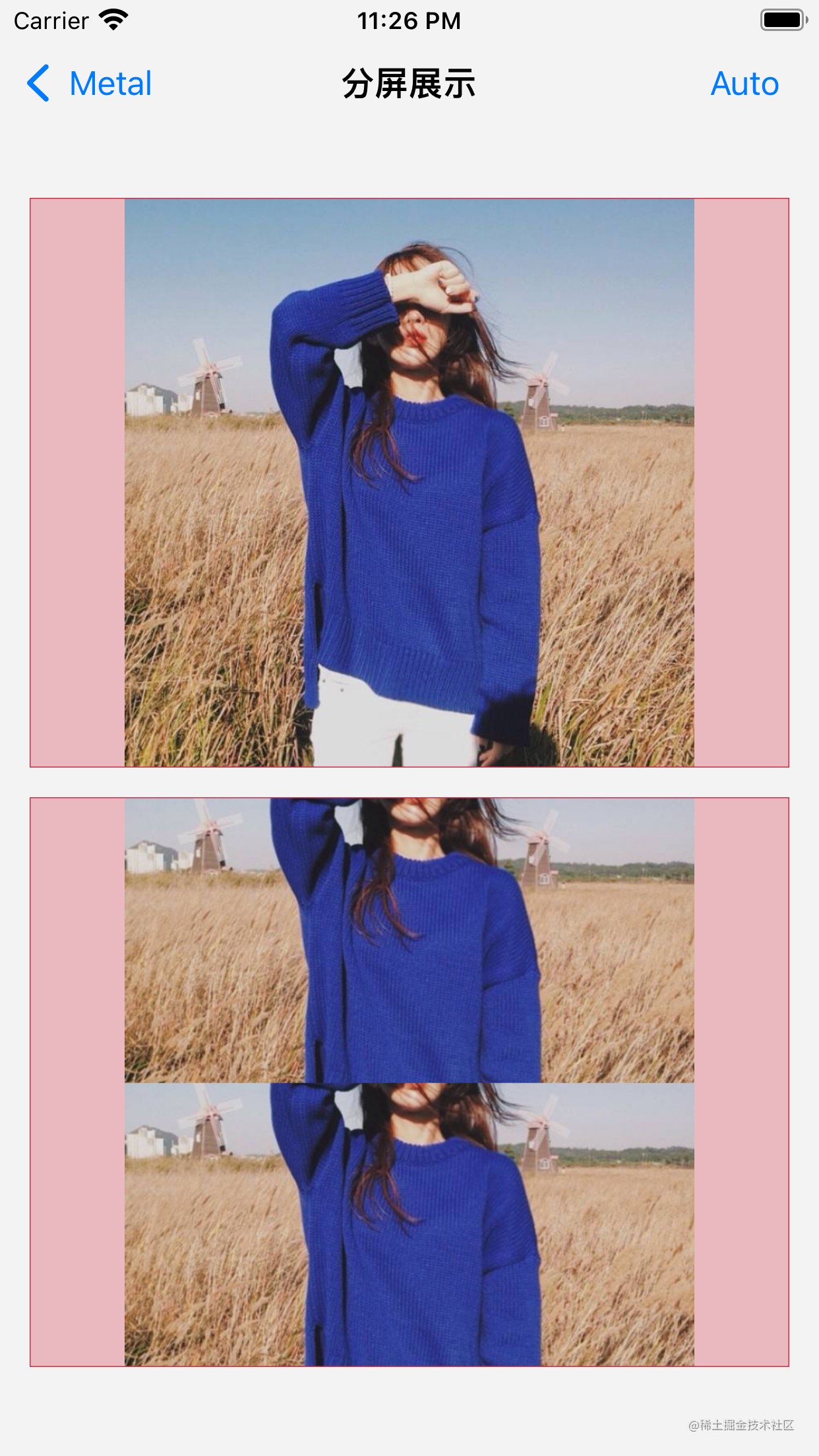

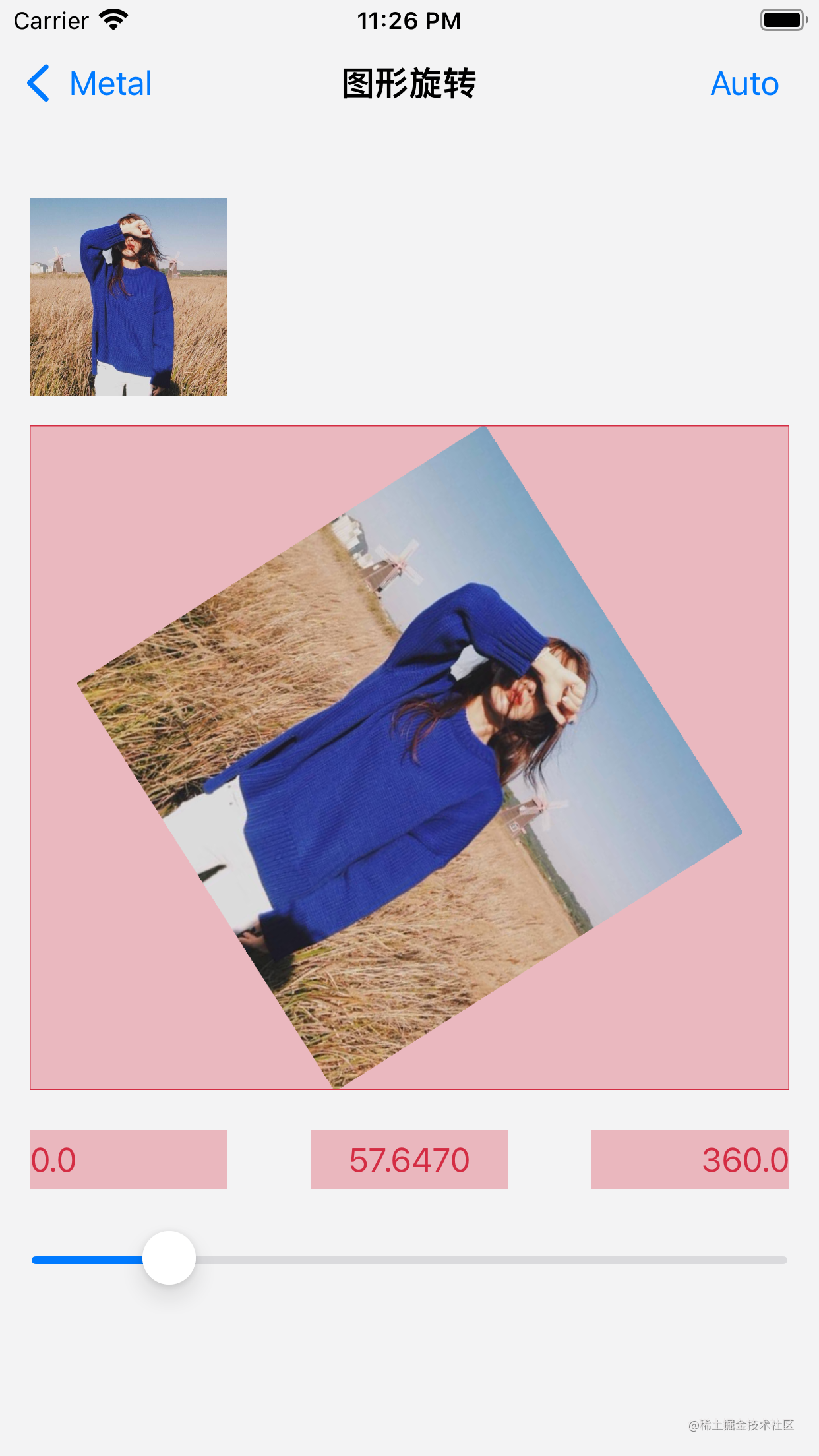

Design sketch

- Renderings of the first batch of filters


Finally

- That's all for the introduction to the design of the filter framework.

- More filters will be added over time. Give the project a star if you like it 🌟

- Filter Demo address: it currently contains 100+ filters. Most of the filter algorithms are designed with reference to GPUImage.

- Also attached is a library for speeding up development: KJCategoriesDemo address 🎷 If you like it, please give it a star 🌟

✌️.